
Deploying and configuring Dapr in your environment

- 1: Observability

- 1.1: Tracing

- 1.1.1: Distributed tracing overview

- 1.1.2: W3C trace context overview

- 1.1.3: Configure Dapr to send distributed tracing data

- 1.1.4: Open Telemetry Collector

- 1.1.4.1: Using OpenTelemetry Collector to collect traces

- 1.1.4.2: Using OpenTelemetry Collector to collect traces to send to App Insights

- 1.1.4.3: Using OpenTelemetry Collector to collect traces to send to Jaeger

- 1.1.5: How-To: Set-up New Relic for distributed tracing

- 1.1.6: How-To: Set up Zipkin for distributed tracing

- 1.1.7: How-To: Set up Datadog for distributed tracing

- 1.2: Metrics

- 1.2.1: Configure metrics

- 1.2.2: How-To: Observe metrics with Prometheus

- 1.2.3: How-To: Observe metrics with Grafana

- 1.2.4: How-To: Set-up New Relic to collect and analyze metrics

- 1.2.5: How-To: Set up Azure Monitor to search logs and collect metrics

- 1.3: Logging

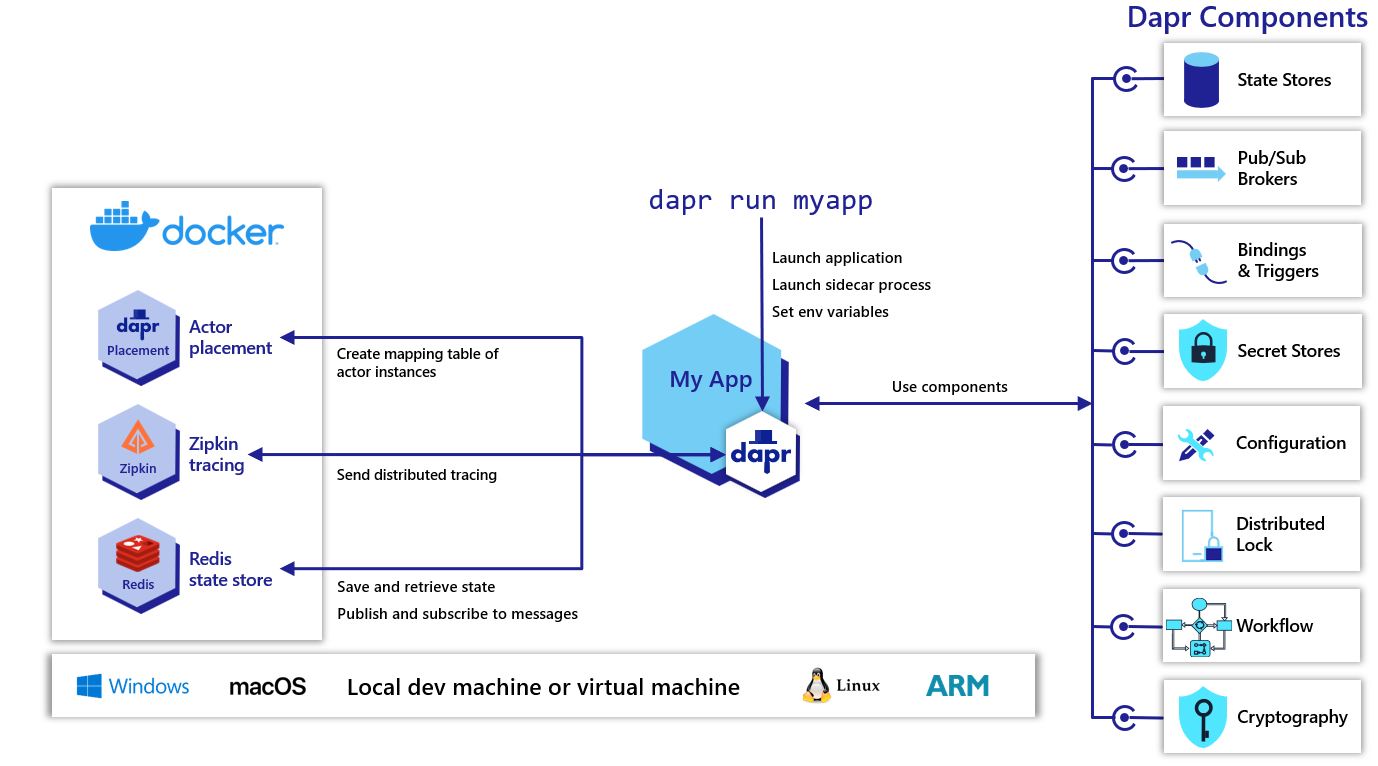

- 2: Hosting options for Dapr

- 2.1: Run Dapr in self-hosted mode

- 2.1.1: Overview of Dapr in self-hosted mode

- 2.1.2: How-To: Run Dapr in self-hosted mode with Docker

- 2.1.3: How-To: Run Dapr in self-hosted mode with Podman

- 2.1.4: How-To: Run Dapr in an offline or airgap environment

- 2.1.5: How-To: Run Dapr in self-hosted mode without Docker

- 2.1.6: How-to: Persist Scheduler Jobs

- 2.1.7: Steps to upgrade Dapr in a self-hosted environment

- 2.1.8: Uninstall Dapr in a self-hosted environment

- 2.2: Deploy and run Dapr in Kubernetes mode

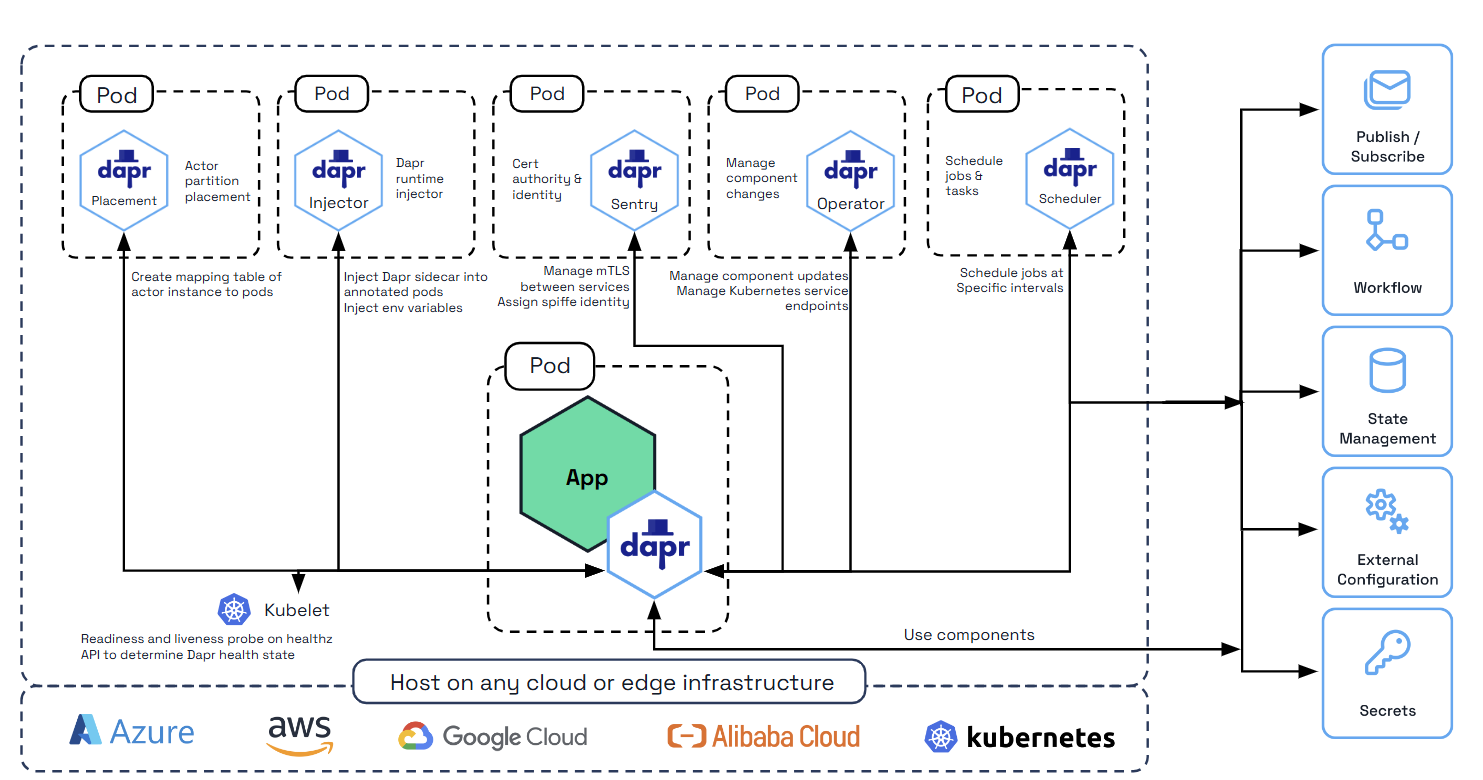

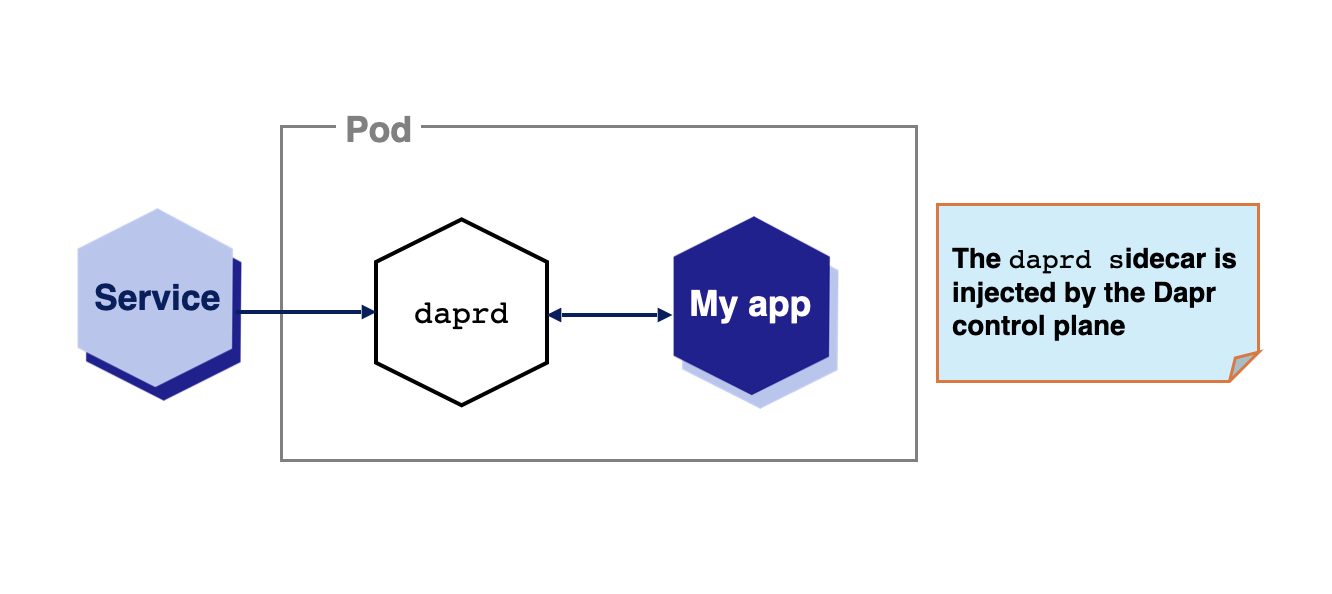

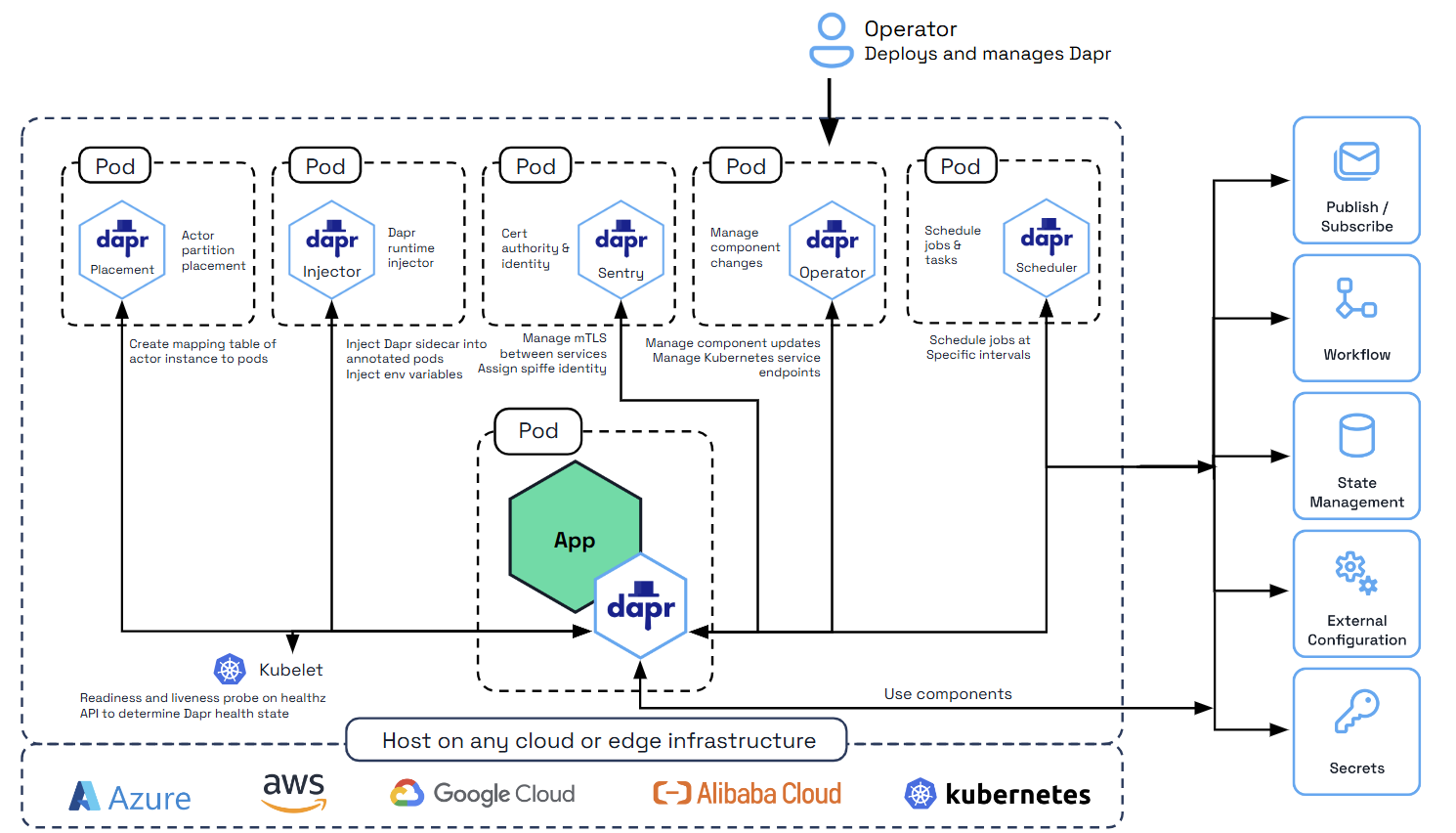

- 2.2.1: Overview of Dapr on Kubernetes

- 2.2.2: Kubernetes cluster setup

- 2.2.2.1: Set up a Minikube cluster

- 2.2.2.2: Set up a KiND cluster

- 2.2.2.3: Set up an Azure Kubernetes Service (AKS) cluster

- 2.2.2.4: Set up a Google Kubernetes Engine (GKE) cluster

- 2.2.2.5: Set up an Elastic Kubernetes Service (EKS) cluster

- 2.2.3: Deploy Dapr on a Kubernetes cluster

- 2.2.4: Upgrade Dapr on a Kubernetes cluster

- 2.2.5: Production guidelines on Kubernetes

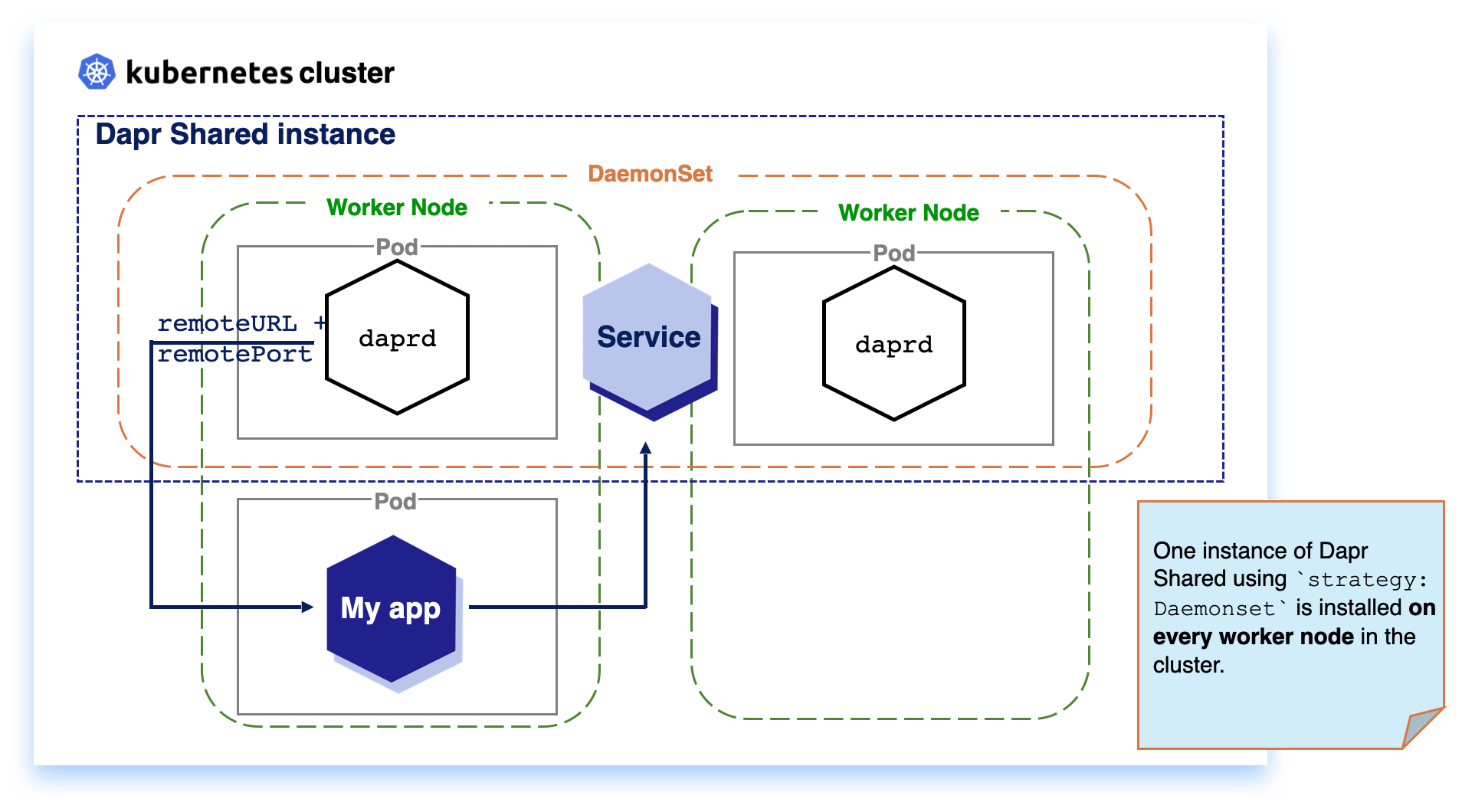

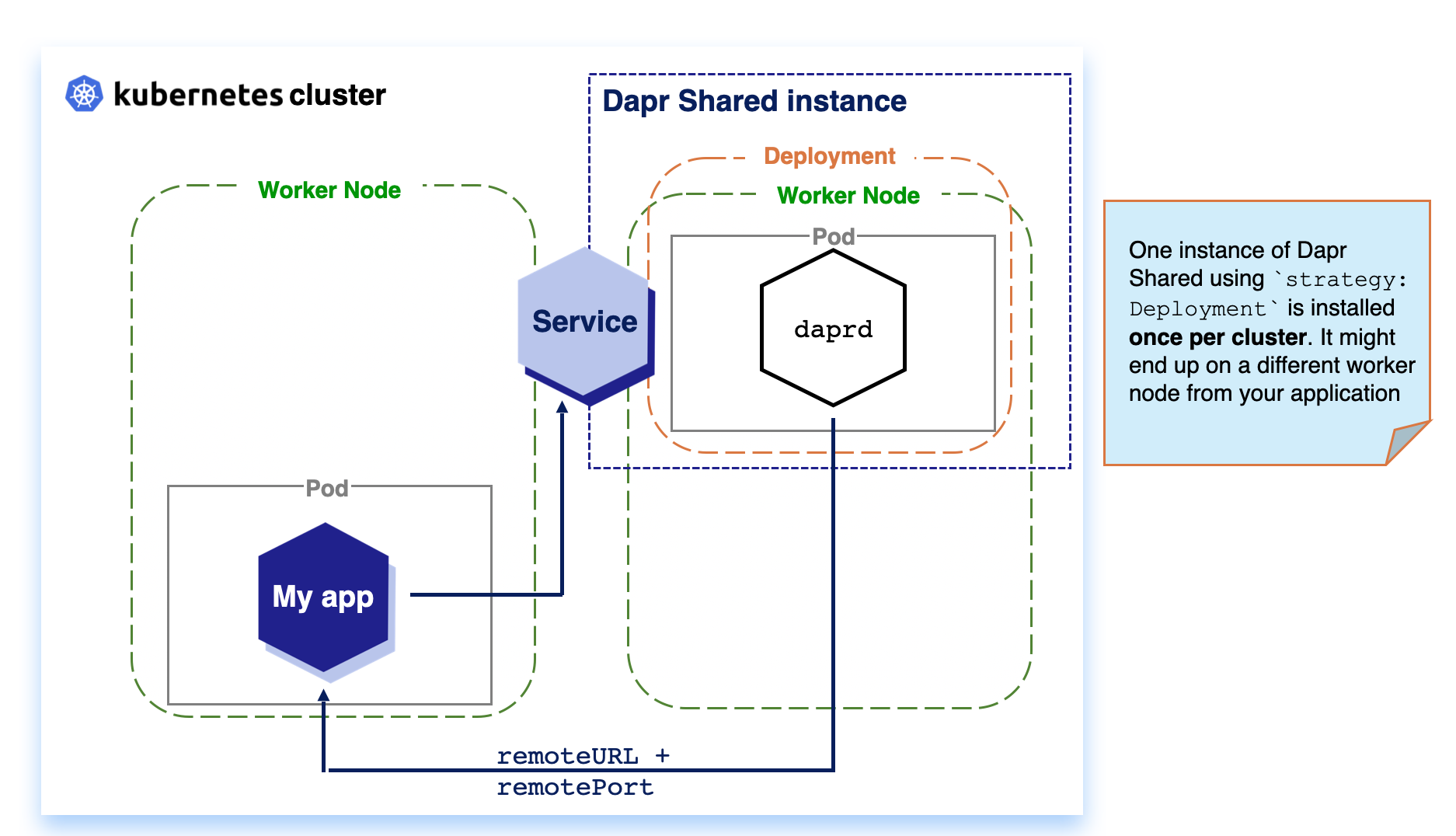

- 2.2.6: Deploy Dapr per-node or per-cluster with Dapr Shared

- 2.2.7: How-to: Persist Scheduler Jobs

- 2.2.8: Deploy to hybrid Linux/Windows Kubernetes clusters

- 2.2.9: Running Dapr with a Kubernetes Job

- 2.2.10: How-to: Mount Pod volumes to the Dapr sidecar

- 2.3: Run Dapr in a serverless offering

- 2.3.1: Azure Container Apps

- 3: Manage Dapr configuration

- 3.1: Dapr configuration

- 3.2: How-To: Control concurrency and rate limit applications

- 3.3: How-To: Limit the secrets that can be read from secret stores

- 3.4: How-To: Apply access control list configuration for service invocation

- 3.5: How-To: Selectively enable Dapr APIs on the Dapr sidecar

- 3.6: How-To: Configure Dapr to use gRPC

- 3.7: How-To: Handle large HTTP header size

- 3.8: How-To: Handle large http body requests

- 3.9: How-To: Install certificates in the Dapr sidecar

- 3.10: How-To: Enable preview features

- 3.11: How-To: Configure Environment Variables from Secrets for Dapr sidecar

- 4: Managing components in Dapr

- 4.1: Certification lifecycle

- 4.2: Updating components

- 4.3: How-To: Scope components to one or more applications

- 4.4: How-To: Reference secrets in components

- 4.5: State stores components

- 4.6: Pub/Sub brokers

- 4.7: Secret store components

- 4.8: Bindings components

- 4.9: How-To: Register a pluggable component

- 4.10: Configure middleware components

- 5: Securing Dapr deployments

- 5.1: Setup & configure mTLS certificates

- 5.2: Configure endpoint authorization with OAuth

- 5.3: Enable API token authentication in Dapr

- 5.4: Authenticate requests from Dapr using token authentication

- 6: Error recovery using resiliency policies

- 6.1: Overview

- 6.2: Resiliency policies

- 6.2.1: Timeout resiliency policies

- 6.2.2: Retry and back-off resiliency policies

- 6.2.2.1: Retry resiliency policies

- 6.2.2.2: Override default retry resiliency policies

- 6.2.3: Circuit breaker resiliency policies

- 6.2.4: Default resiliency policies

- 6.3: Targets

- 6.4: Health checks

- 6.4.1: App health checks

- 6.4.2: Sidecar health

- 7: Support and versioning

- 7.1: Versioning policy

- 7.2: Supported runtime and SDK releases

- 7.3: Breaking changes and deprecations

- 7.4: Reporting security issues

- 7.5: Preview features

- 7.6: Alpha and Beta APIs

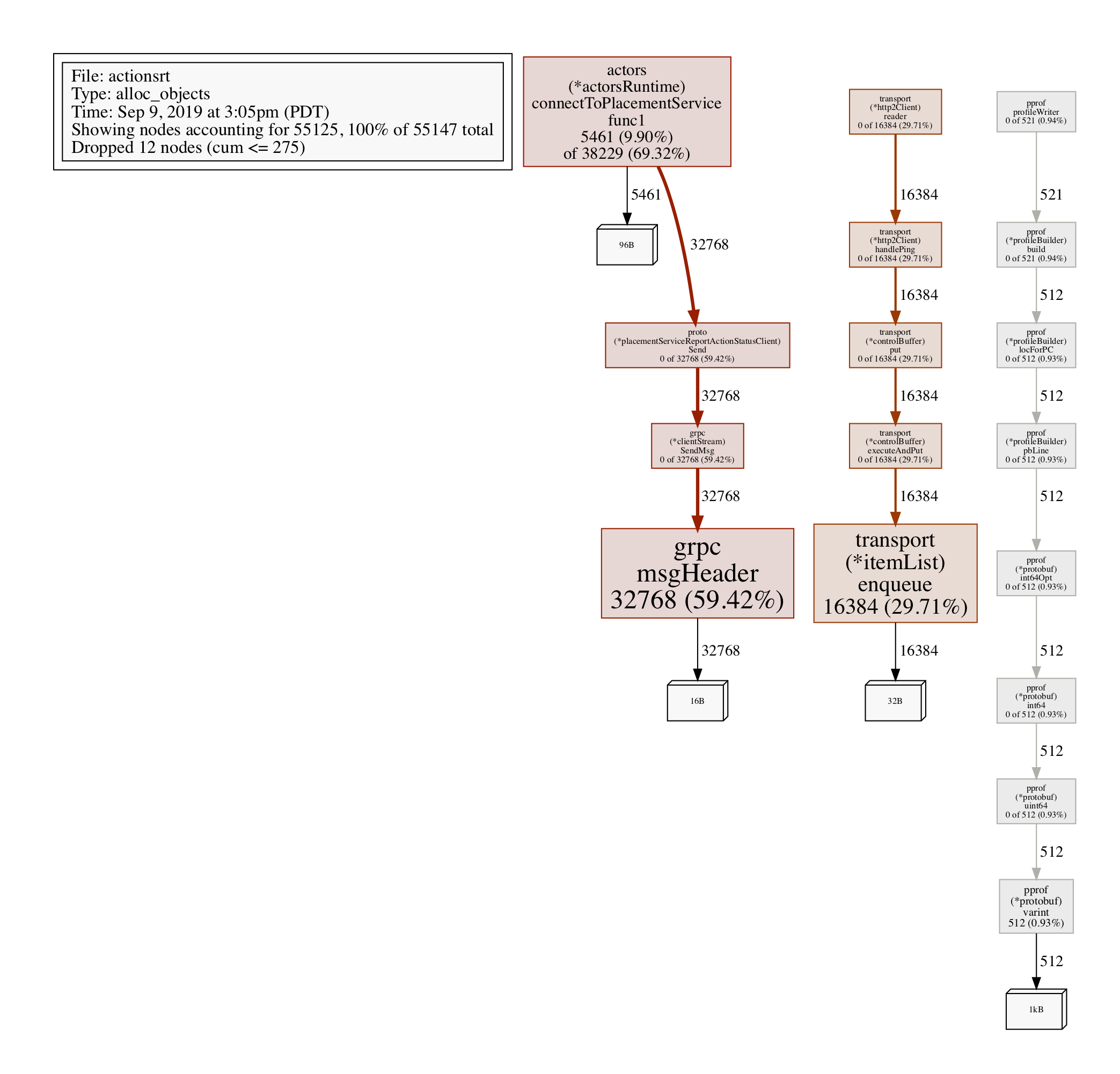

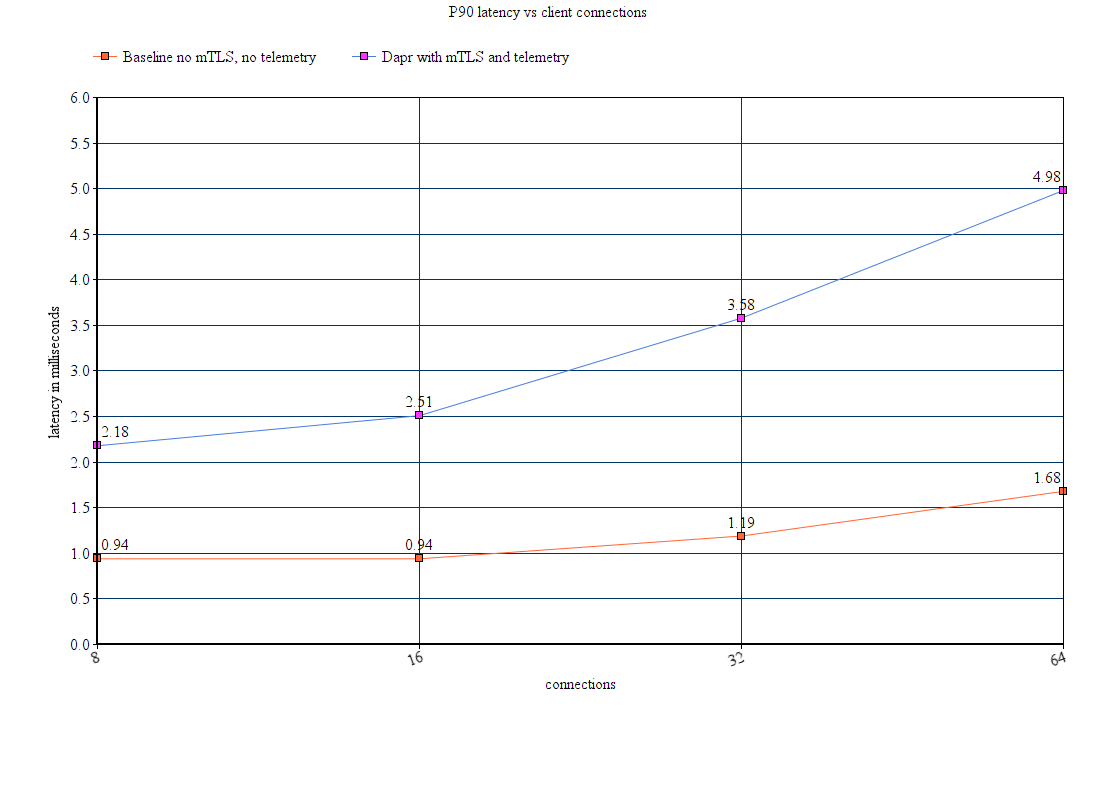

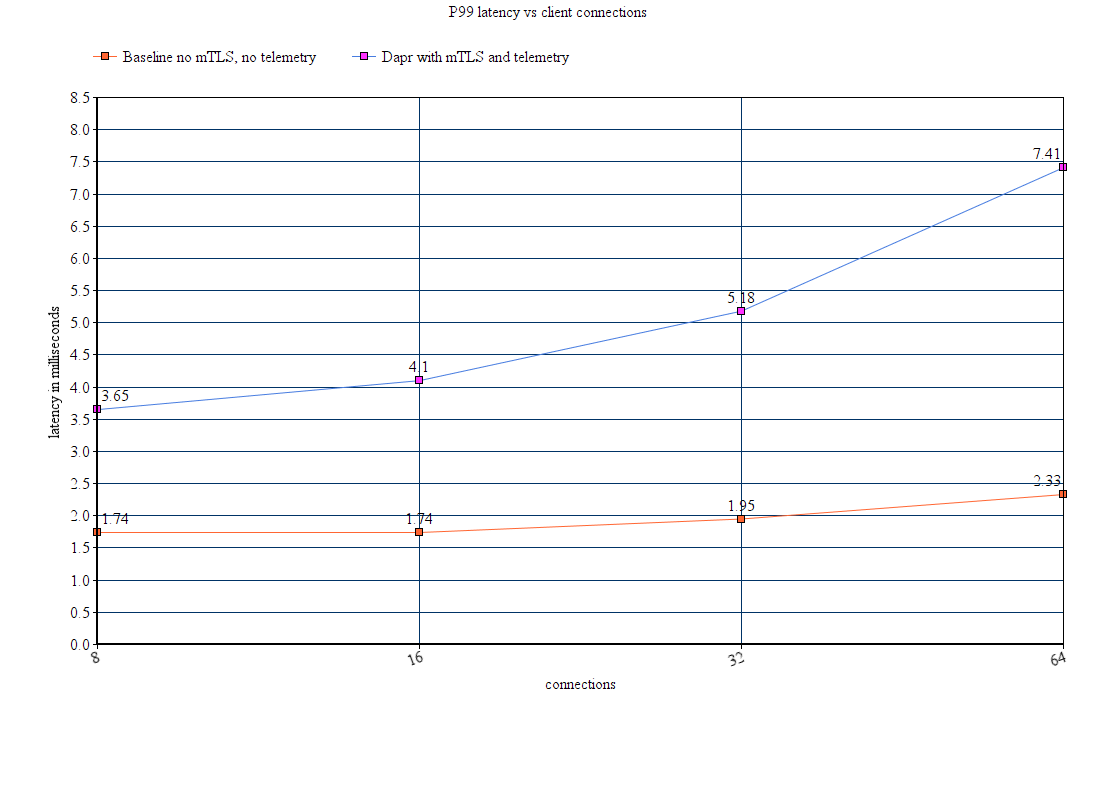

- 8: Performance and scalability statistics of Dapr

- 9: Debugging and Troubleshooting

1 - Observability

The following overview video and demo demonstrate how observability in Dapr works.

More about Dapr Observability

Learn more about how to use Dapr Observability:

- Explore observability via any of the supporting Dapr SDKs.

- Review the Observability API reference documentation.

- Read the general overview of the observability concept in Dapr.

1.1 - Tracing

1.1.1 - Distributed tracing overview

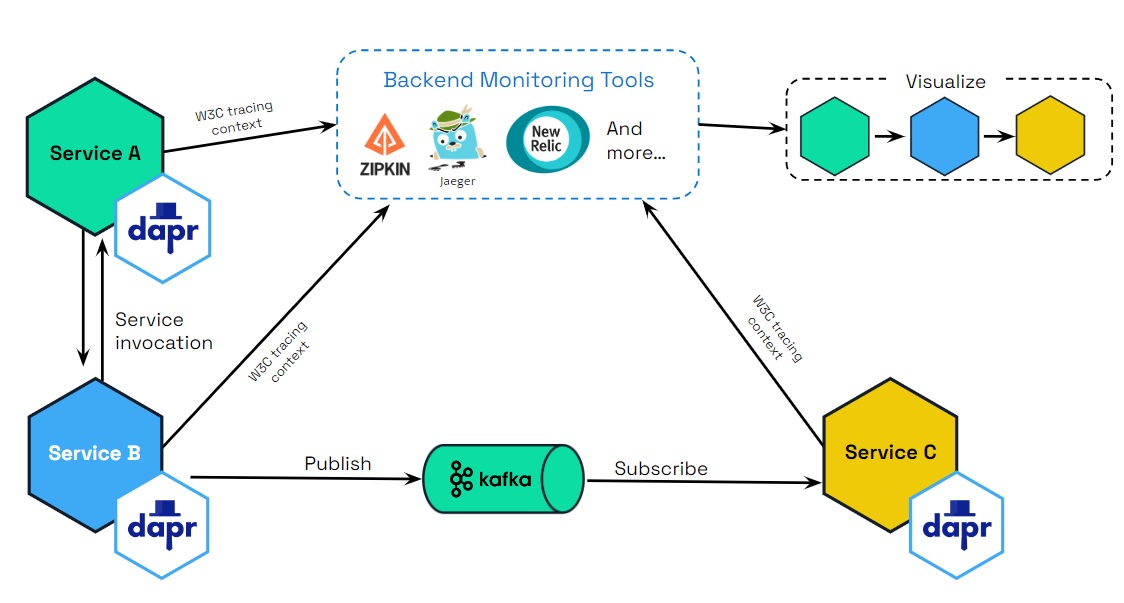

Dapr uses the OpenTelemetry (OTEL) and Zipkin protocols for distributed traces. OTEL is the industry standard and is the recommended trace protocol to use.

Most observability tools support OTEL.

The following diagram demonstrates how Dapr (using OTEL and Zipkin protocols) integrates with multiple observability tools.

Scenarios

Tracing is used with the service invocation and pub/sub APIs. You can flow trace context between services that use these APIs. There are two scenarios for how tracing is used:

- Dapr generates the trace context and you propagate the trace context to another service.

- You generate the trace context and Dapr propagates the trace context to a service.

Scenario 1: Dapr generates trace context headers

Propagating sequential service calls

Dapr takes care of creating the trace headers. However, when there are more than two services, you’re responsible for propagating the trace headers between them. Let’s go through the scenarios with examples:

Single service invocation call

For example, service A -> service B.

Dapr generates the trace headers in service A, which are then propagated from service A to service B. No further propagation is needed.

Multiple sequential service invocation calls

For example, service A -> service B -> propagate trace headers to -> service C and so on to further Dapr-enabled services.

Dapr generates the trace headers at the beginning of the request in service A, which are then propagated to service B. You are now responsible for taking the headers and propagating them to service C, since this is specific to your application.

In other words, if your app calls Dapr and wants to continue an existing trace (span), it must always pass the trace headers to Dapr (from service B to service C, in this example). Dapr always propagates trace spans to an application.
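As a rough illustration of the service B step, the sketch below copies the W3C trace headers from an inbound request onto the outbound call to service C, using only the Go standard library. The helper name `forwardTraceHeaders`, the inbound URL, and the app ID `serviceC` are hypothetical; the sidecar address follows the invoke URL pattern used elsewhere in this document.

```go
package main

import (
	"fmt"
	"net/http"
)

// traceHeaders lists the W3C trace context headers to carry forward.
var traceHeaders = []string{"traceparent", "tracestate"}

// forwardTraceHeaders copies any trace context headers present on the
// inbound headers onto the outbound headers. Missing headers are skipped.
func forwardTraceHeaders(in, out http.Header) {
	for _, name := range traceHeaders {
		if v := in.Get(name); v != "" {
			out.Set(name, v)
		}
	}
}

func main() {
	// Simulated inbound request received by service B (hypothetical URL).
	in, err := http.NewRequest(http.MethodPost, "http://localhost:8080/hello", nil)
	if err != nil {
		panic(err)
	}
	in.Header.Set("traceparent", "00-0af7651916cd43dd8448eb211c80319c-b7ad6b7169203331-01")

	// Outbound call from service B to service C through the local sidecar.
	out, err := http.NewRequest(http.MethodPost,
		"http://localhost:3500/v1.0/invoke/serviceC/method/hello", nil)
	if err != nil {
		panic(err)
	}
	forwardTraceHeaders(in.Header, out.Header)

	fmt.Println("outbound traceparent:", out.Header.Get("traceparent"))
}
```

The same idea applies to gRPC, where the values travel as metadata instead of HTTP headers.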

Note

No helper methods are exposed in Dapr SDKs to propagate and retrieve trace context. You need to use HTTP/gRPC clients to propagate and retrieve trace headers through HTTP headers and gRPC metadata.

Request is from external endpoint

For example, from a gateway service to a Dapr-enabled service A.

An external gateway ingress calls Dapr, which generates the trace headers and calls service A. Service A then calls service B and further Dapr-enabled services.

You must propagate the headers from service A to service B. For example: Ingress -> service A -> propagate trace headers -> service B. This is similar to the multiple sequential service invocation case above.

Pub/sub messages

Dapr generates the trace headers in the published message topic. For rawPayload messages, it is possible to specify the traceparent header to propagate the tracing information. These trace headers are propagated to any services listening on that topic.

Propagating multiple different service calls

In the following scenarios, Dapr does some of the work for you, with you then creating or propagating trace headers.

Multiple service calls to different services from single service

When you are calling multiple services from a single service, you need to propagate the trace headers. For example:

service A -> service B

[ .. some code logic ..]

service A -> service C

[ .. some code logic ..]

service A -> service D

[ .. some code logic ..]

In this case:

- When service A first calls service B, Dapr generates the trace headers in service A.

- The trace headers in service A are propagated to service B.

- These trace headers are returned in the response from service B as part of response headers.

- You then need to propagate the returned trace context to the next services, like service C and service D, as Dapr does not know you want to reuse the same header.
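One way to picture this flow is a small holder that remembers the trace headers returned by the first call and applies them to later calls. This is a minimal sketch using only the Go standard library; the `traceCarrier` type and the simulated headers are hypothetical, not part of any Dapr SDK.

```go
package main

import (
	"fmt"
	"net/http"
)

// traceCarrier remembers the trace context returned by the first call so
// that later calls from the same service can reuse it.
type traceCarrier struct {
	traceparent string
	tracestate  string
}

// Capture stores the trace headers found in a response, if any.
func (c *traceCarrier) Capture(resp http.Header) {
	if v := resp.Get("traceparent"); v != "" {
		c.traceparent = v
		c.tracestate = resp.Get("tracestate")
	}
}

// Apply sets the remembered trace headers on an outgoing request.
func (c *traceCarrier) Apply(req http.Header) {
	if c.traceparent == "" {
		return
	}
	req.Set("traceparent", c.traceparent)
	if c.tracestate != "" {
		req.Set("tracestate", c.tracestate)
	}
}

func main() {
	// Simulated response headers from the call service A -> service B.
	respB := http.Header{}
	respB.Set("traceparent", "00-0af7651916cd43dd8448eb211c80319c-b7ad6b7169203331-01")

	var carrier traceCarrier
	carrier.Capture(respB)

	// Reuse the same trace context for the calls to service C and service D.
	for _, target := range []string{"serviceC", "serviceD"} {
		req := http.Header{}
		carrier.Apply(req)
		fmt.Println(target, req.Get("traceparent"))
	}
}
```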

Scenario 2: You generate your own trace context headers from non-Daprized applications

Generating your own trace context headers is more unusual and typically not required when calling Dapr.

However, there are scenarios where you could specifically choose to add W3C trace headers into a service call. For example, you have an existing application that does not use Dapr. In this case, Dapr still propagates the trace context headers for you.

If you decide to generate trace headers yourself, there are three ways this can be done:

Standard OpenTelemetry SDK

You can use the industry standard OpenTelemetry SDKs to generate trace headers and pass these trace headers to a Dapr-enabled service. This is the preferred method.

Vendor SDK

You can use a vendor SDK that provides a way to generate W3C trace headers and pass them to a Dapr-enabled service.

W3C trace context

You can handcraft a trace context following the W3C trace context specification and pass it to a Dapr-enabled service.

Read the trace context overview for more background and examples on W3C trace context and headers.
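For the handcrafted option, a minimal sketch of building a version 00 traceparent value with the Go standard library might look like the following. The function name `newTraceparent` is hypothetical; the layout (version, 16-byte trace ID, 8-byte parent ID, flags, all lowercase hex) follows the W3C trace context specification. In practice an OpenTelemetry SDK does this for you.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"fmt"
)

// newTraceparent builds a version-00 traceparent value of the form
// 00-<32 hex trace-id>-<16 hex parent-id>-<2 hex flags>.
// Random IDs are used; an all-zero ID would be invalid per the spec,
// but is vanishingly unlikely with a cryptographic random source.
func newTraceparent(sampled bool) (string, error) {
	ids := make([]byte, 24) // 16 bytes trace ID + 8 bytes parent ID
	if _, err := rand.Read(ids); err != nil {
		return "", err
	}
	flags := "00"
	if sampled {
		flags = "01"
	}
	return fmt.Sprintf("00-%s-%s-%s",
		hex.EncodeToString(ids[:16]),
		hex.EncodeToString(ids[16:]),
		flags), nil
}

func main() {
	tp, err := newTraceparent(true)
	if err != nil {
		panic(err)
	}
	// Send this value as the traceparent header on the call to Dapr.
	fmt.Println("traceparent:", tp)
}
```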

Baggage Support

Dapr supports two distinct mechanisms for propagating W3C Baggage alongside trace context:

Context Baggage (OpenTelemetry)

- Follows OpenTelemetry conventions with decoded values

- Used when working with OpenTelemetry context propagation

- Values are stored and transmitted in their original, unencoded form

- Recommended for OpenTelemetry integrations and when working with application context

Header/Metadata Baggage

- You must URL encode special characters (for example, %20 for spaces, %2F for slashes) when setting header/metadata baggage

- Values remain percent-encoded in transport as required by the W3C Baggage spec

- Values stay encoded when inspecting raw headers/metadata

- Only OpenTelemetry APIs will decode the values

- Example: Use serverNode=DF%2028 (not serverNode=DF 28) when setting header baggage

For security purposes, context baggage and header baggage are strictly separated and never merged between domains. This ensures that baggage values maintain their intended format and security properties.
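To make the encoding rule for header/metadata baggage concrete, here is a minimal sketch that percent-encodes values before joining them into a baggage header value. It uses `url.PathEscape` from the Go standard library as an approximation of the W3C Baggage encoding (it turns spaces into %20 and slashes into %2F); the `member` type and `encodeBaggage` function are hypothetical helpers, not Dapr or OpenTelemetry APIs.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// member is one baggage key-value pair; a slice keeps the order stable.
type member struct{ key, value string }

// encodeBaggage percent-encodes each value and joins the members with
// commas, producing a string suitable for a "baggage" header.
func encodeBaggage(members []member) string {
	parts := make([]string, 0, len(members))
	for _, m := range members {
		parts = append(parts, m.key+"="+url.PathEscape(m.value))
	}
	return strings.Join(parts, ",")
}

func main() {
	header := encodeBaggage([]member{
		{"userId", "cassie"},
		{"serverNode", "DF 28"},
	})
	fmt.Println("baggage:", header) // baggage: userId=cassie,serverNode=DF%2028
}
```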

Using Baggage with Dapr

You can propagate baggage using either mechanism, depending on your use case.

- In your application code: Set the baggage in the context before making a Dapr API call

- When calling Dapr: Pass the context to any Dapr API call

- Inside Dapr: The Dapr runtime automatically picks up the baggage

- Propagation: Dapr automatically propagates the baggage to downstream services, maintaining the appropriate encoding for each mechanism

Here are examples of both mechanisms:

1. Using Context Baggage (OpenTelemetry)

When using OpenTelemetry SDK:

import otelbaggage "go.opentelemetry.io/otel/baggage"

// Parse decodes percent-encoded input, so the values held in the context are unencoded ("DF 28")

bag, err := otelbaggage.Parse("userId=cassie,serverNode=DF%2028")

...

// ctx is your existing request context

ctx = otelbaggage.ContextWithBaggage(ctx, bag)

// Pass this context to any Dapr API call

client.InvokeMethodWithContent(ctx, "serviceB", ...)

2. Using Header/Metadata Baggage

When using gRPC metadata:

import "google.golang.org/grpc/metadata"

// Set URL-encoded baggage in context

ctx = metadata.AppendToOutgoingContext(ctx,

"baggage", "userId=cassie,serverNode=DF%2028",

)

// Pass this context to any Dapr API call

client.InvokeMethodWithContent(ctx, "serviceB", ...)

3. Receiving Baggage in Target Service

In your target service, you can access the propagated baggage:

// Using OpenTelemetry (values are automatically decoded)

import "go.opentelemetry.io/otel/baggage"

bag := baggage.FromContext(ctx)

userID := bag.Member("userId").Value() // "cassie"

// Using raw gRPC metadata (values remain percent-encoded)

import "google.golang.org/grpc/metadata"

md, _ := metadata.FromIncomingContext(ctx)

if values := md.Get("baggage"); len(values) > 0 {

// values[0] contains the percent-encoded string you set: "userId=cassie,serverNode=DF%2028"

// Remember: You must URL encode special characters when setting baggage

// To decode the values, use OpenTelemetry APIs:

bag, err := baggage.Parse(values[0])

...

userID := bag.Member("userId").Value() // "cassie"

}

HTTP Example (URL-encoded):

curl -X POST http://localhost:3500/v1.0/invoke/serviceB/method/hello \

-H "Content-Type: application/json" \

-H "baggage: userId=cassie,serverNode=DF%2028" \

-d '{"message": "Hello service B"}'

gRPC Example (URL-encoded):

ctx = grpcMetadata.AppendToOutgoingContext(ctx,

"baggage", "userId=cassie,serverNode=DF%2028",

)

Common Use Cases

Baggage is useful for:

- Propagating user IDs or correlation IDs across services

- Passing tenant or environment information

- Maintaining consistent context across service boundaries

- Debugging and troubleshooting distributed transactions

Best Practices

Choose the Right Mechanism

- Use Context Baggage when working with OpenTelemetry

- Use Header Baggage when working directly with HTTP/gRPC

Security Considerations

- Be mindful that baggage is propagated across service boundaries

- Don’t include sensitive information in baggage

- Remember that context and header baggage remain separate


1.1.2 - W3C trace context overview

Dapr uses the Open Telemetry protocol, which in turn uses the W3C trace context for distributed tracing for both service invocation and pub/sub messaging. Dapr generates and propagates the trace context information, which can be sent to observability tools for visualization and querying.

Background

Distributed tracing is a methodology implemented by tracing tools to follow, analyze, and debug a transaction across multiple software components.

Typically, a distributed trace traverses more than one service, which requires it to be uniquely identifiable. Trace context propagation passes along this unique identification.

In the past, trace context propagation was implemented individually by each tracing vendor. In multi-vendor environments, this caused interoperability problems, such as:

- Traces collected by different tracing vendors can’t be correlated, as there is no shared unique identifier.

- Traces crossing boundaries between different tracing vendors can’t be propagated, as there is no forwarded, uniformly agreed set of identification.

- Vendor-specific metadata might be dropped by intermediaries.

- Cloud platform vendors, intermediaries, and service providers cannot guarantee to support trace context propagation, as there is no standard to follow.

Previously, most applications were monitored by a single tracing vendor and stayed within the boundaries of a single platform provider, so these problems didn’t have a significant impact.

Today, an increasing number of applications are distributed and leverage multiple middleware services and cloud platforms. This transformation of modern applications requires a distributed tracing context propagation standard.

The W3C trace context specification defines a universally agreed-upon format for the exchange of trace context propagation data (referred to as trace context). Trace context solves the above problems by providing:

- A unique identifier for individual traces and requests, allowing trace data of multiple providers to be linked together.

- An agreed-upon mechanism to forward vendor-specific trace data and avoid broken traces when multiple tracing tools participate in a single transaction.

- An industry standard that intermediaries, platforms, and hardware providers can support.

This unified approach for propagating trace data improves visibility into the behavior of distributed applications, facilitating problem and performance analysis.

W3C trace context and headers format

W3C trace context

Dapr uses the standard W3C trace context headers.

- For HTTP requests, Dapr uses traceparent header.

- For gRPC requests, Dapr uses grpc-trace-bin header.

When a request arrives without a trace ID, Dapr creates a new one. Otherwise, it passes the trace ID along the call chain.

W3C trace headers

These are the specific trace context headers that are generated and propagated by Dapr for HTTP and gRPC.

Copy these headers when propagating a trace context header from an HTTP response to an HTTP request:

Traceparent header

The traceparent header represents the incoming request in a tracing system in a common format, understood by all vendors:

traceparent: 00-0af7651916cd43dd8448eb211c80319c-b7ad6b7169203331-01

Learn more about the traceparent field details.
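To show how the value above breaks down, the following sketch splits a version 00 traceparent into its four dash-separated fields (version, trace ID, parent ID, and flags) and checks the sampled bit. The `traceparent` type and `parseTraceparent` function are hypothetical names, and the validation is deliberately minimal.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// traceparent holds the four dash-separated fields of the header value.
type traceparent struct {
	Version  string // 2 hex characters, "00" today
	TraceID  string // 32 hex characters
	ParentID string // 16 hex characters
	Flags    string // 2 hex characters
}

// parseTraceparent splits a version-00 traceparent value and checks only
// the number and length of its fields.
func parseTraceparent(value string) (traceparent, error) {
	parts := strings.Split(value, "-")
	if len(parts) != 4 {
		return traceparent{}, fmt.Errorf("expected 4 fields, got %d", len(parts))
	}
	if len(parts[0]) != 2 || len(parts[1]) != 32 || len(parts[2]) != 16 || len(parts[3]) != 2 {
		return traceparent{}, fmt.Errorf("unexpected field length in %q", value)
	}
	return traceparent{Version: parts[0], TraceID: parts[1], ParentID: parts[2], Flags: parts[3]}, nil
}

// Sampled reports whether the least significant flag bit is set.
func (t traceparent) Sampled() bool {
	flags, err := strconv.ParseUint(t.Flags, 16, 8)
	return err == nil && flags&1 == 1
}

func main() {
	tp, err := parseTraceparent("00-0af7651916cd43dd8448eb211c80319c-b7ad6b7169203331-01")
	if err != nil {
		panic(err)
	}
	fmt.Println("trace ID: ", tp.TraceID)
	fmt.Println("parent ID:", tp.ParentID)
	fmt.Println("sampled:  ", tp.Sampled())
}
```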

Tracestate header

The tracestate header includes the parent in a potentially vendor-specific format:

tracestate: congo=t61rcWkgMzE

Learn more about the tracestate field details.

Baggage Support

Dapr supports W3C Baggage for propagating key-value pairs alongside trace context through two distinct mechanisms:

Context Baggage (OpenTelemetry)

- Follows OpenTelemetry conventions with decoded values

- Used when propagating baggage through application context

- Values are stored in their original, unencoded form

- Example of how it would be printed with OpenTelemetry APIs:

baggage: userId=cassie,serverNode=DF 28,isVIP=true

HTTP Header Baggage

- You must URL encode special characters (for example, %20 for spaces, %2F for slashes) when setting header baggage

- Values remain percent-encoded in HTTP headers as required by the W3C Baggage spec

- Values stay encoded when inspecting raw headers in Dapr

- Only OpenTelemetry APIs like otelbaggage.Parse() will decode the values

- Example (note the URL-encoded space %20):

curl -X POST http://localhost:3500/v1.0/invoke/serviceB/method/hello \

-H "Content-Type: application/json" \

-H "baggage: userId=cassie,serverNode=DF%2028,isVIP=true" \

-d '{"message": "Hello service B"}'

For security purposes, context baggage and header baggage are strictly separated and never merged between domains. This ensures that baggage values maintain their intended format and security properties in each domain.

Multiple baggage headers are supported and will be combined according to the W3C specification. Dapr automatically propagates baggage across service calls while maintaining the appropriate encoding for each domain.

In the gRPC API calls, trace context is passed through grpc-trace-bin header.

Baggage Support

Dapr supports W3C Baggage for propagating key-value pairs alongside trace context through two distinct mechanisms:

Context Baggage (OpenTelemetry)

- Follows OpenTelemetry conventions with decoded values

- Used when propagating baggage through gRPC context

- Values are stored in their original, unencoded form

- Example of how it would be printed with OpenTelemetry APIs:

baggage: userId=cassie,serverNode=DF 28,isVIP=true

gRPC Metadata Baggage

- You must URL encode special characters (for example, %20 for spaces, %2F for slashes) when setting metadata baggage

- Values remain percent-encoded in gRPC metadata

- Example (note the URL-encoded space %20):

baggage: userId=cassie,serverNode=DF%2028,isVIP=true

For security purposes, context baggage and metadata baggage are strictly separated and never merged between domains. This ensures that baggage values maintain their intended format and security properties in each domain.

Multiple baggage metadata entries are supported and will be combined according to the W3C specification. Dapr automatically propagates baggage across service calls while maintaining the appropriate encoding for each domain.


1.1.3 - Configure Dapr to send distributed tracing data

Note

It is recommended to run Dapr with tracing enabled for any production scenario. You can configure Dapr to send tracing and telemetry data to many observability tools based on your environment, whether it is running in the cloud or on-premises.

Configuration

The tracing section under the Configuration spec contains the following properties:

spec:
  tracing:
    samplingRate: "1"
    otel:
      endpointAddress: "myendpoint.cluster.local:4317"
    zipkin:
      endpointAddress: "https://..."

The following table lists the properties for tracing:

| Property | Type | Description |
|---|---|---|
| samplingRate | string | Set the sampling rate for tracing; this also enables or disables tracing. |
| stdout | bool | Set to true to write more verbose information to the traces. |
| otel.endpointAddress | string | Set the Open Telemetry (OTEL) target hostname and optionally port. If this is used, you do not need to specify the zipkin section. |
| otel.isSecure | bool | Whether the connection to the endpoint address is encrypted. |
| otel.protocol | string | Set to http or grpc protocol. |
| zipkin.endpointAddress | string | Set the Zipkin server URL. If this is used, you do not need to specify the otel section. |

To enable tracing, use a configuration file (in self-hosted mode) or a Kubernetes configuration object (in Kubernetes mode). For example, the following configuration object changes the sample rate to 1 (every span is sampled) and sends traces using the OTEL protocol to the OTEL server at localhost:4317:

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: tracing
spec:
  tracing:
    samplingRate: "1"
    otel:
      endpointAddress: "localhost:4317"
      isSecure: false
      protocol: grpc

Sampling rate

Dapr uses probabilistic sampling. The sample rate defines the probability a tracing span will be sampled and can have a value between 0 and 1 (inclusive). The default sample rate is 0.0001 (i.e. 1 in 10,000 spans is sampled).

Changing samplingRate to 0 disables tracing altogether.
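To build intuition for what a probabilistic sampling rate means, the sketch below keeps a span when a uniform random draw falls below the rate. This is only an illustration of the idea, not Dapr's internal sampler; the `shouldSample` function and the fixed random seed are assumptions made for the example.

```go
package main

import (
	"fmt"
	"math/rand"
)

// shouldSample reports whether a span is kept, given a sampling rate in
// [0, 1] and a uniform random draw in [0, 1).
func shouldSample(rate, draw float64) bool {
	return draw < rate
}

func main() {
	const spans = 1000000
	rng := rand.New(rand.NewSource(1)) // fixed seed so runs are repeatable

	// Rate 0 keeps nothing, rate 1 keeps everything, and the default
	// 0.0001 keeps roughly 1 in 10,000 spans.
	for _, rate := range []float64{0, 0.0001, 1} {
		kept := 0
		for i := 0; i < spans; i++ {
			if shouldSample(rate, rng.Float64()) {
				kept++
			}
		}
		fmt.Printf("samplingRate=%v kept %d of %d spans\n", rate, kept, spans)
	}
}
```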

Environment variables

The OpenTelemetry (otel) endpoint can also be configured via environment variables. The presence of the OTEL_EXPORTER_OTLP_ENDPOINT environment variable turns on tracing for the sidecar.

| Environment Variable | Description |
|---|---|
| OTEL_EXPORTER_OTLP_ENDPOINT | Sets the Open Telemetry (OTEL) server hostname and optionally port, turns on tracing |
| OTEL_EXPORTER_OTLP_INSECURE | Sets the connection to the endpoint as unencrypted (true/false) |
| OTEL_EXPORTER_OTLP_PROTOCOL | Transport protocol (grpc, http/protobuf, http/json) |

Next steps

Learn how to set up tracing with one of the following tools:

1.1.4 - Open Telemetry Collector

1.1.4.1 - Using OpenTelemetry Collector to collect traces

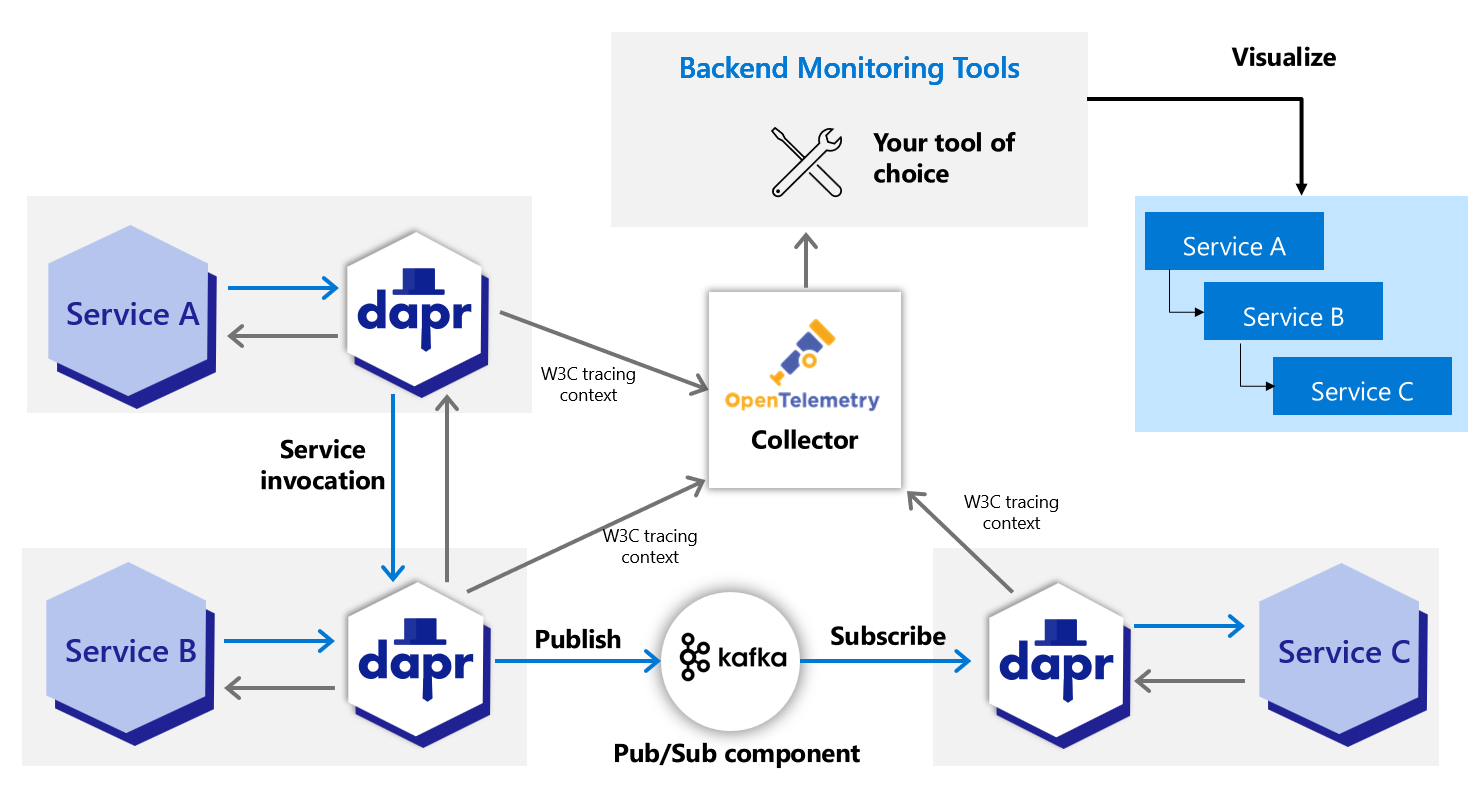

Dapr directly writes traces using the OpenTelemetry protocol (OTLP) as the recommended method. For observability tools that support OTLP directly, it is recommended to use the OpenTelemetry Collector, as it allows your application to quickly offload data and includes features such as retries, batching, and encryption. For more information, read the Open Telemetry Collector documentation.

Dapr can also write traces using the Zipkin protocol. Prior to supporting OTLP, the Zipkin protocol was used with the OpenTelemetry Collector to send traces to observability tools such as AWS X-Ray, Google Cloud Operations Suite, and Azure Monitor. Both protocol approaches are valid; however, the OpenTelemetry protocol is the recommended choice.

Prerequisites

- Install Dapr on Kubernetes

- Verify your trace backends are already set up to receive traces

- Review your OTEL Collector exporter’s required parameters:

Set up OTEL Collector to push to your trace backend

1. Check out the open-telemetry-collector-generic.yaml.

2. Replace the <your-exporter-here> section with the correct settings for your trace exporter.

   - Refer to the OTEL Collector links in the prerequisites section to determine the correct settings.

3. Apply the configuration with:

kubectl apply -f open-telemetry-collector-generic.yaml

Set up Dapr to send traces to OTEL Collector

Set up a Dapr configuration file to turn on tracing and deploy a tracing exporter component that uses the OpenTelemetry Collector.

1. Use this collector-config.yaml file to create your own configuration.

2. Apply the configuration with:

kubectl apply -f collector-config.yaml

Deploy your app with tracing

Apply the appconfig configuration by adding a dapr.io/config annotation to the container that you want to participate in the distributed tracing, as shown in the following example:

apiVersion: apps/v1
kind: Deployment
metadata:
  ...
spec:
  ...
  template:
    metadata:
      ...
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "MyApp"
        dapr.io/app-port: "8080"
        dapr.io/config: "appconfig"

Note

If you are using one of the Dapr tutorials, such as distributed calculator, the appconfig configuration is already configured, so no additional settings are needed.

You can register multiple tracing exporters at the same time, and the tracing logs are forwarded to all registered exporters.

That’s it! There’s no need to include any SDKs or instrument your application code. Dapr automatically handles the distributed tracing for you.

View traces

Deploy and run some applications. Wait for the traces to propagate to your tracing backend and view them there.

Related links

- Try out the observability quickstart

- Learn how to set tracing configuration options

1.1.4.2 - Using OpenTelemetry Collector to collect traces to send to App Insights

Dapr integrates with OpenTelemetry (OTEL) Collector using the OpenTelemetry protocol (OTLP). This guide walks through an example using Dapr to push traces to Azure Application Insights, using the OpenTelemetry Collector.

Prerequisites

- Install Dapr on Kubernetes

- Create an Application Insights resource and make note of your Application Insights connection string.

Set up OTEL Collector to push to your App Insights instance

To push traces to your Application Insights instance, install the OpenTelemetry Collector on your Kubernetes cluster.

1. Download and inspect the open-telemetry-collector-appinsights.yaml file.

2. Replace the <CONNECTION_STRING> placeholder with your App Insights connection string.

3. Deploy the OpenTelemetry Collector into the same namespace where your Dapr-enabled applications are running:

kubectl apply -f open-telemetry-collector-appinsights.yaml

Set up Dapr to send traces to the OpenTelemetry Collector

Create a Dapr configuration file to enable tracing and send traces to the OpenTelemetry Collector via OTLP.

1. Download and inspect the collector-config-otel.yaml. Update the namespace and otel.endpointAddress values to align with the namespace where your Dapr-enabled applications and OpenTelemetry Collector are deployed.

2. Apply the configuration with:

kubectl apply -f collector-config-otel.yaml

Deploy your app with tracing

Apply the tracing configuration by adding a dapr.io/config annotation to the Dapr applications that you want to include in distributed tracing, as shown in the following example:

apiVersion: apps/v1
kind: Deployment
metadata:
  ...
spec:
  ...
  template:
    metadata:
      ...
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "MyApp"
        dapr.io/app-port: "8080"
        dapr.io/config: "tracing"

Note

If you are using one of the Dapr tutorials, such as distributed calculator, you will need to update the appconfig configuration to tracing.

You can register multiple tracing exporters at the same time, and the tracing logs are forwarded to all registered exporters.

That’s it! There’s no need to include any SDKs or instrument your application code. Dapr automatically handles the distributed tracing for you.

View traces

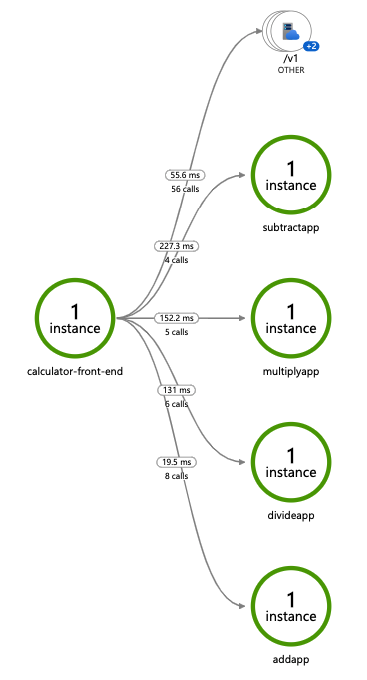

Deploy and run some applications. After a few minutes, you should see tracing logs appearing in your App Insights resource. You can also use the Application Map to examine the topology of your services, as shown below:

Note

Only operations going through Dapr API exposed by Dapr sidecar (for example, service invocation or event publishing) are displayed in Application Map topology.

Related links

- Try out the observability quickstart

- Learn how to set tracing configuration options

1.1.4.3 - Using OpenTelemetry Collector to collect traces to send to Jaeger

While Dapr supports writing traces using OpenTelemetry (OTLP) and Zipkin protocols, Zipkin support for Jaeger has been deprecated in favor of OTLP. Although Jaeger supports OTLP directly, the recommended approach for production is to use the OpenTelemetry Collector to collect traces from Dapr and send them to Jaeger, allowing your application to quickly offload data and take advantage of features like retries, batching, and encryption. For more information, read the Open Telemetry Collector documentation.

Configure Jaeger in self-hosted mode

Local setup

The simplest way to start Jaeger is to run the pre-built, all-in-one Jaeger image published to DockerHub and expose the OTLP port:

docker run -d --name jaeger \

-p 4317:4317 \

-p 16686:16686 \

jaegertracing/all-in-one:1.49

Next, create the following config.yaml file locally:

Note: Because you are using the Open Telemetry protocol to talk to Jaeger, you need to fill out the otel section of the tracing configuration and set the endpointAddress to the address of the Jaeger container.

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: tracing
  namespace: default
spec:
  tracing:
    samplingRate: "1"
    stdout: true
    otel:
      endpointAddress: "localhost:4317"
      isSecure: false
      protocol: grpc

To launch the application referring to the new YAML configuration file, use the --config option. For example:

dapr run --app-id myapp --app-port 3000 node app.js --config config.yaml

View traces

To view traces in your browser, go to http://localhost:16686 to see the Jaeger UI.

Configure Jaeger on Kubernetes with the OpenTelemetry Collector

The following steps show you how to configure Dapr to send distributed tracing data to the OpenTelemetry Collector which, in turn, sends the traces to Jaeger.

Prerequisites

- Install Dapr on Kubernetes

- Set up Jaeger using the Jaeger Kubernetes Operator

Set up OpenTelemetry Collector to push to Jaeger

To push traces to your Jaeger instance, install the OpenTelemetry Collector on your Kubernetes cluster.

1. Download and inspect the open-telemetry-collector-jaeger.yaml file.

2. In the data section of the otel-collector-conf ConfigMap, update the otlp/jaeger.endpoint value to reflect the endpoint of your Jaeger collector Kubernetes service object.

3. Deploy the OpenTelemetry Collector into the same namespace where your Dapr-enabled applications are running:

kubectl apply -f open-telemetry-collector-jaeger.yaml

Set up Dapr to send traces to OpenTelemetry Collector

Create a Dapr configuration file to enable tracing and export the sidecar traces to the OpenTelemetry Collector.

1. Use the collector-config-otel.yaml file to create your own Dapr configuration.

2. Update the namespace and otel.endpointAddress values to align with the namespace where your Dapr-enabled applications and OpenTelemetry Collector are deployed.

3. Apply the configuration with:

kubectl apply -f collector-config-otel.yaml

Deploy your app with tracing enabled

Apply the tracing Dapr configuration by adding a dapr.io/config annotation to the application deployment that you want to enable distributed tracing for, as shown in the following example:

apiVersion: apps/v1
kind: Deployment
metadata:
  ...
spec:
  ...
  template:
    metadata:
      ...
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "MyApp"
        dapr.io/app-port: "8080"
        dapr.io/config: "tracing"

You can register multiple tracing exporters at the same time, and the tracing logs are forwarded to all registered exporters.

That’s it! There’s no need to include the OpenTelemetry SDK or instrument your application code. Dapr automatically handles the distributed tracing for you.

View traces

To view Dapr sidecar traces, port-forward the Jaeger Service and open the UI:

kubectl port-forward svc/jaeger-query 16686 -n observability

In your browser, go to http://localhost:16686 and you will see the Jaeger UI.

1.1.5 - How-To: Set-up New Relic for distributed tracing

Prerequisites

- Perpetually free New Relic account, 100 GB/month of free data ingest, 1 free full access user, unlimited free basic users

Configure Dapr tracing

Dapr natively captures metrics and traces that can be sent directly to New Relic. The easiest way to export these is by configuring Dapr to send the traces to New Relic’s Trace API using the Zipkin trace format.

In order for the integration to send data to New Relic Telemetry Data Platform, you need a New Relic Insights Insert API key.

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: appconfig
  namespace: default
spec:
  tracing:
    samplingRate: "1"
    zipkin:
      endpointAddress: "https://trace-api.newrelic.com/trace/v1?Api-Key=<NR-INSIGHTS-INSERT-API-KEY>&Data-Format=zipkin&Data-Format-Version=2"
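The endpointAddress value above carries the API key and the format parameters in its query string. As a minimal sketch, the following Python builds that value and URL-encodes the key, which may help if a key contains reserved characters. The helper name new_relic_zipkin_endpoint and the key EXAMPLE_KEY are hypothetical and not part of Dapr or New Relic tooling.

```python
from urllib.parse import urlencode

# Base URL of the New Relic Trace API, as used in the configuration above.
TRACE_API_URL = "https://trace-api.newrelic.com/trace/v1"


def new_relic_zipkin_endpoint(api_key):
    """Hypothetical helper: build the zipkin.endpointAddress value for Dapr."""
    query = urlencode({
        "Api-Key": api_key,          # New Relic Insights Insert API key
        "Data-Format": "zipkin",     # traces are sent in Zipkin format
        "Data-Format-Version": "2",
    })
    return TRACE_API_URL + "?" + query


# EXAMPLE_KEY is a placeholder, not a real key.
print(new_relic_zipkin_endpoint("EXAMPLE_KEY"))
```

The printed string can be pasted into the endpointAddress field of the Configuration.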

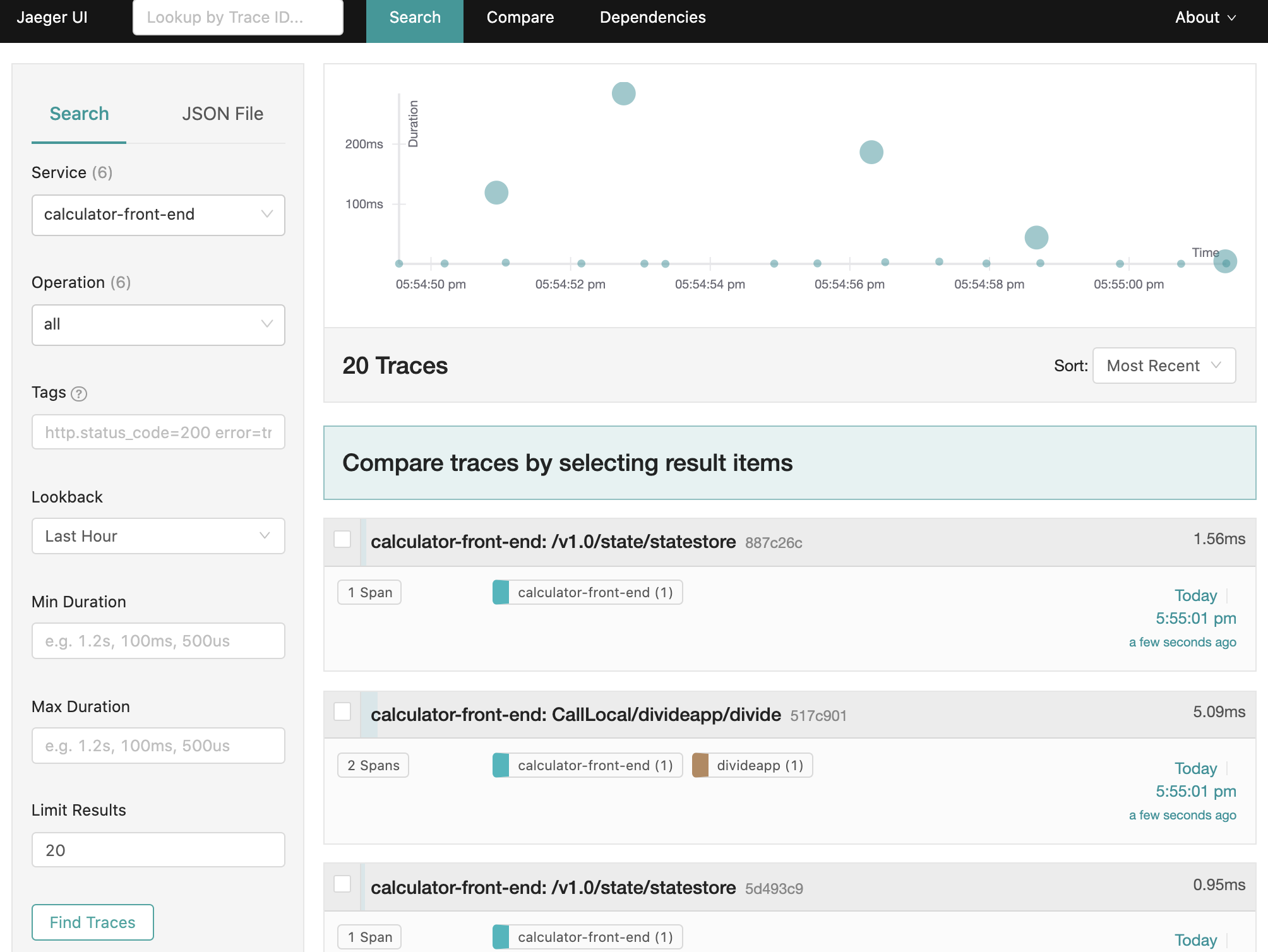

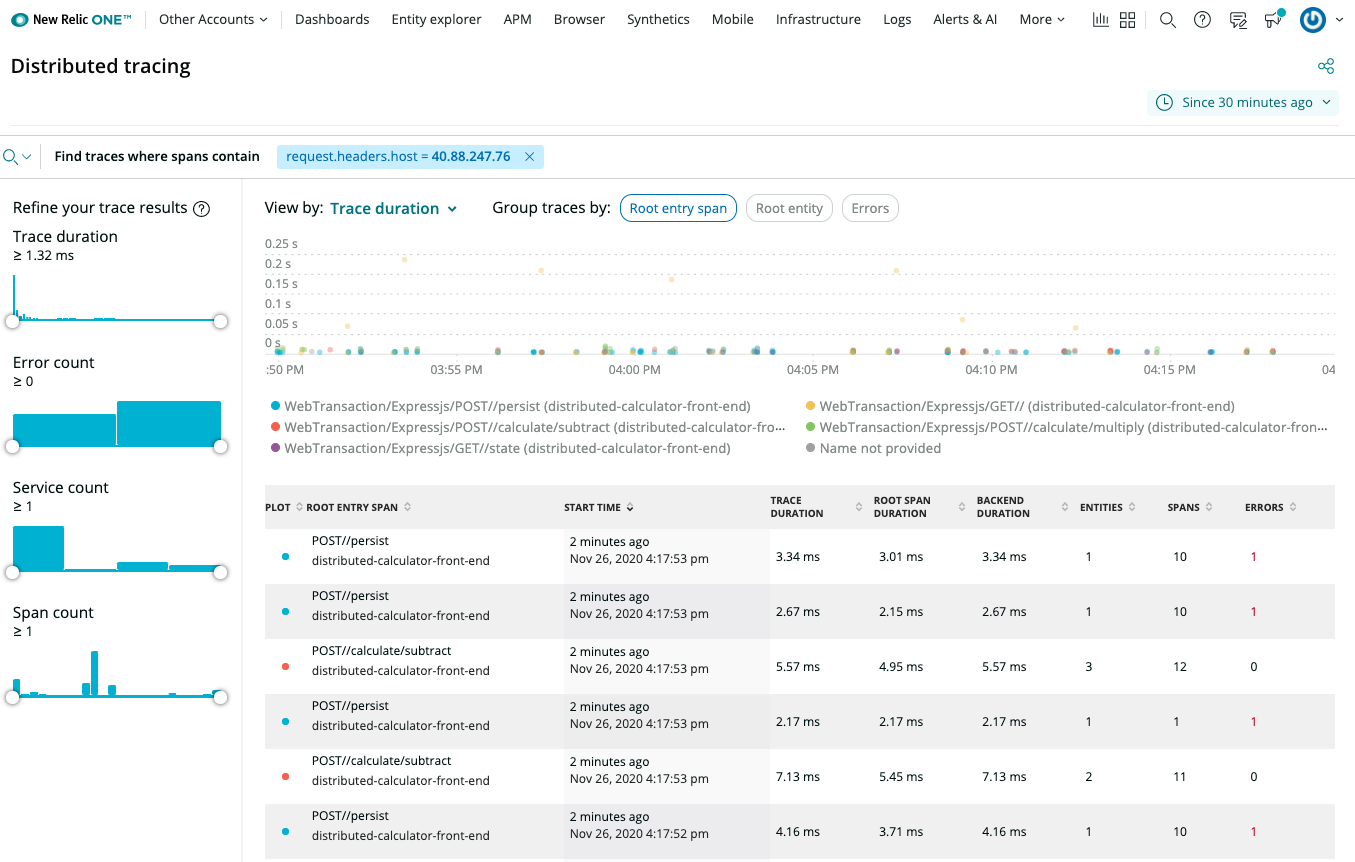

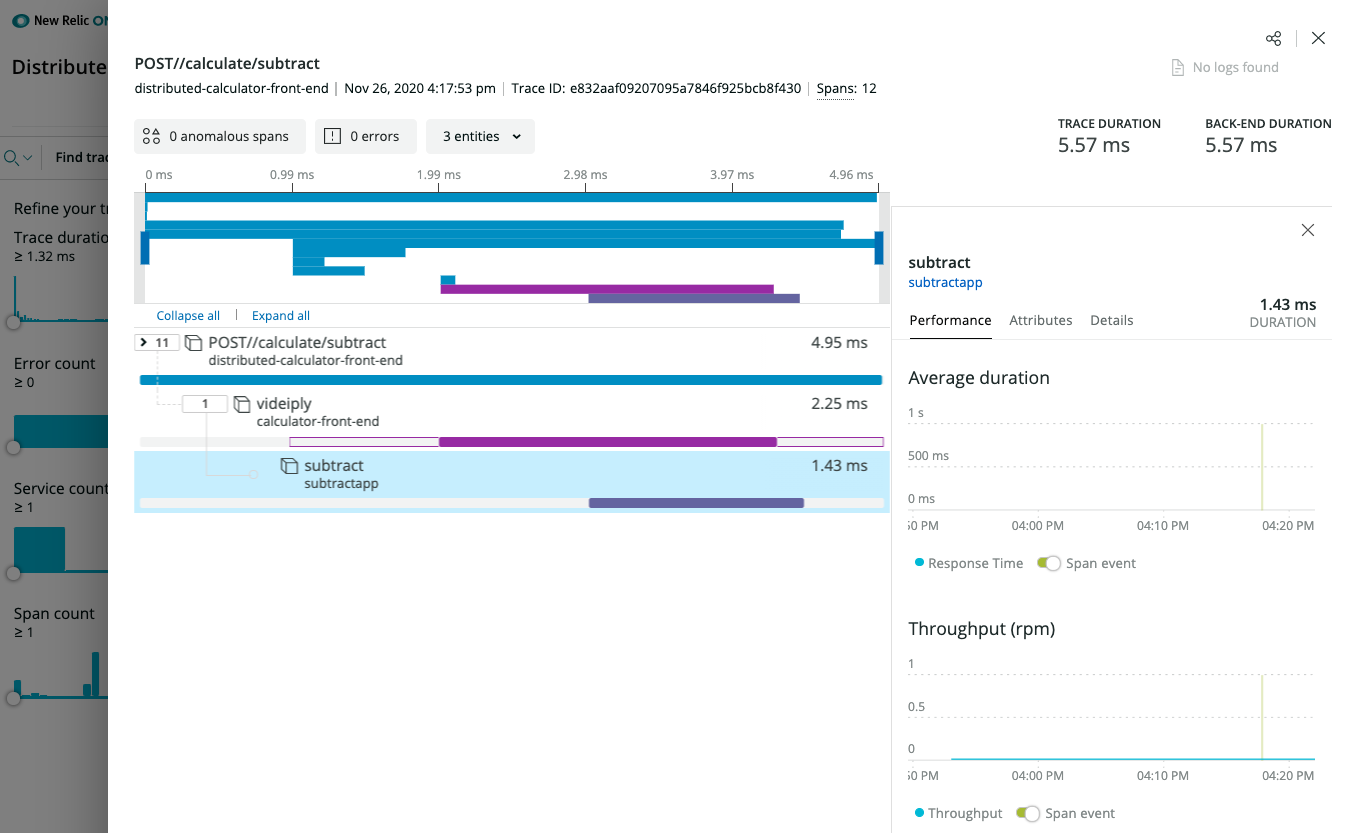

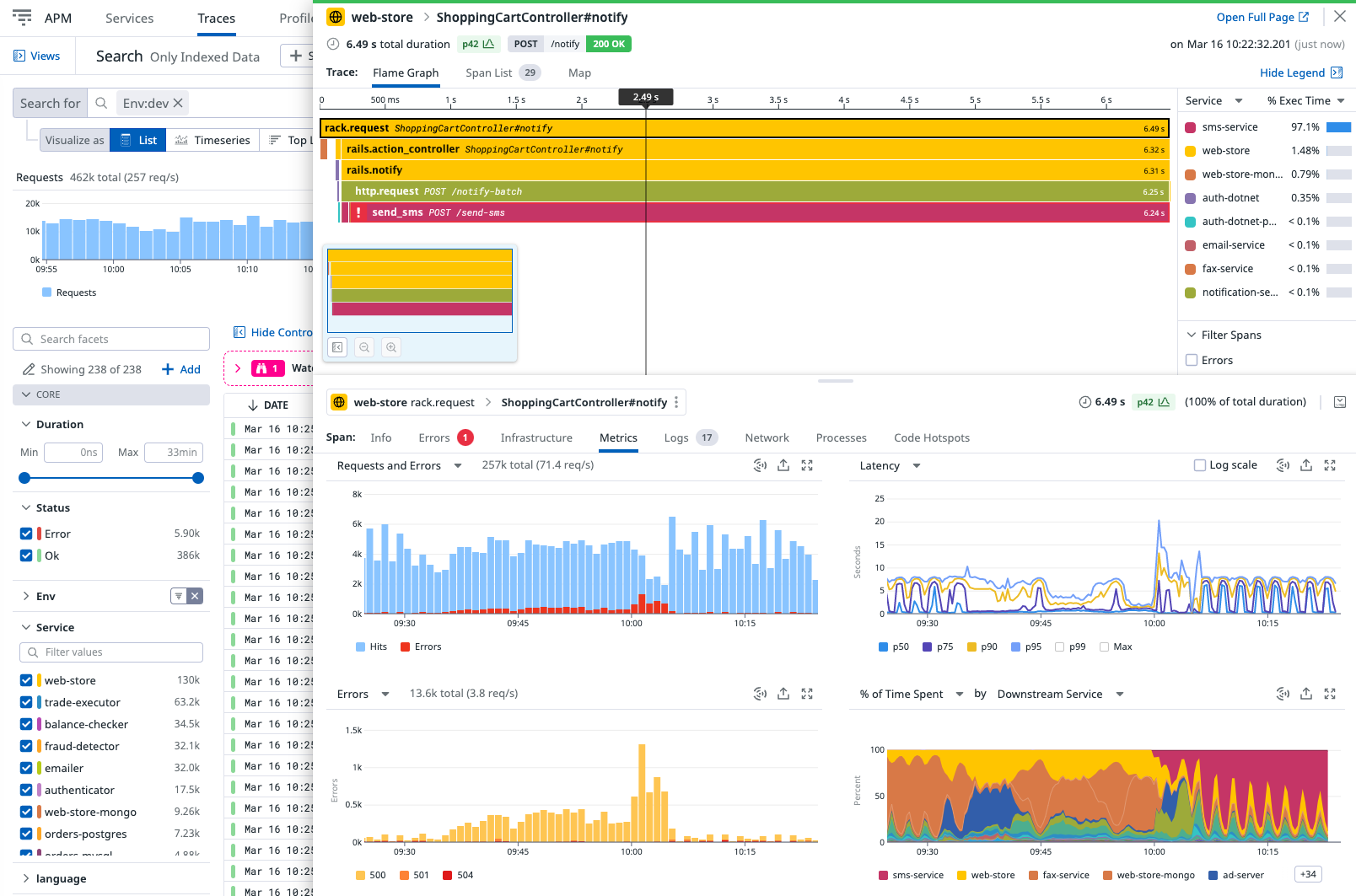

Viewing Traces

New Relic Distributed Tracing overview

New Relic Distributed Tracing details

(optional) New Relic Instrumentation

In order for the integrations to send data to New Relic Telemetry Data Platform, you either need a New Relic license key or New Relic Insights Insert API key.

OpenTelemetry instrumentation

Leverage the different language specific OpenTelemetry implementations, for example New Relic Telemetry SDK and OpenTelemetry support for .NET. In this case, use the OpenTelemetry Trace Exporter. See example here.

New Relic Language agent

Similarly to the OpenTelemetry instrumentation, you can also leverage a New Relic language agent. As an example, the New Relic agent instrumentation for .NET Core is part of the Dockerfile. See example here.

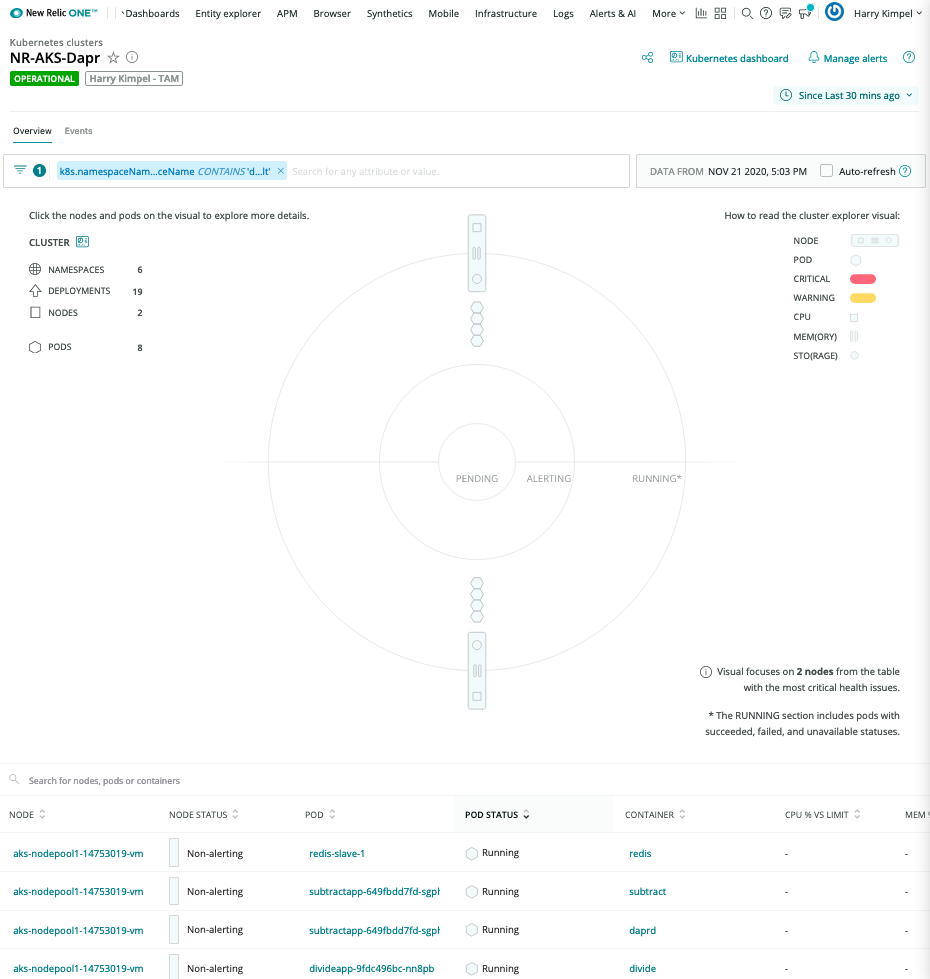

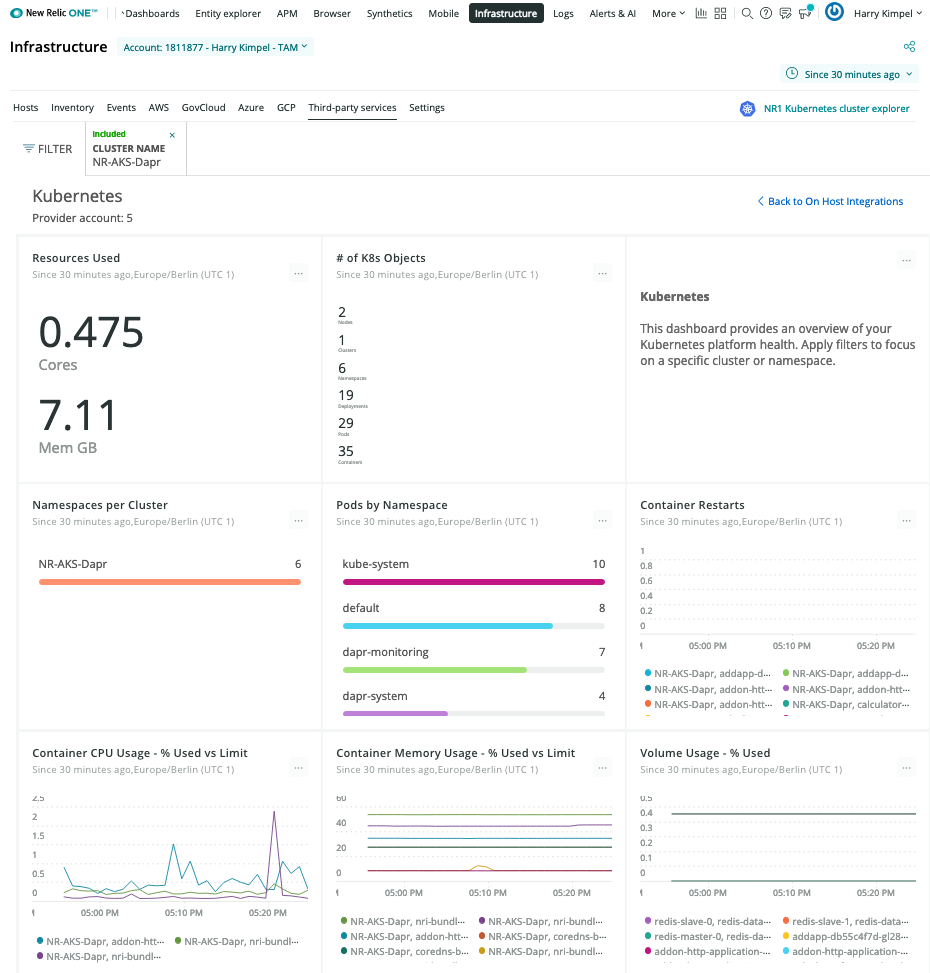

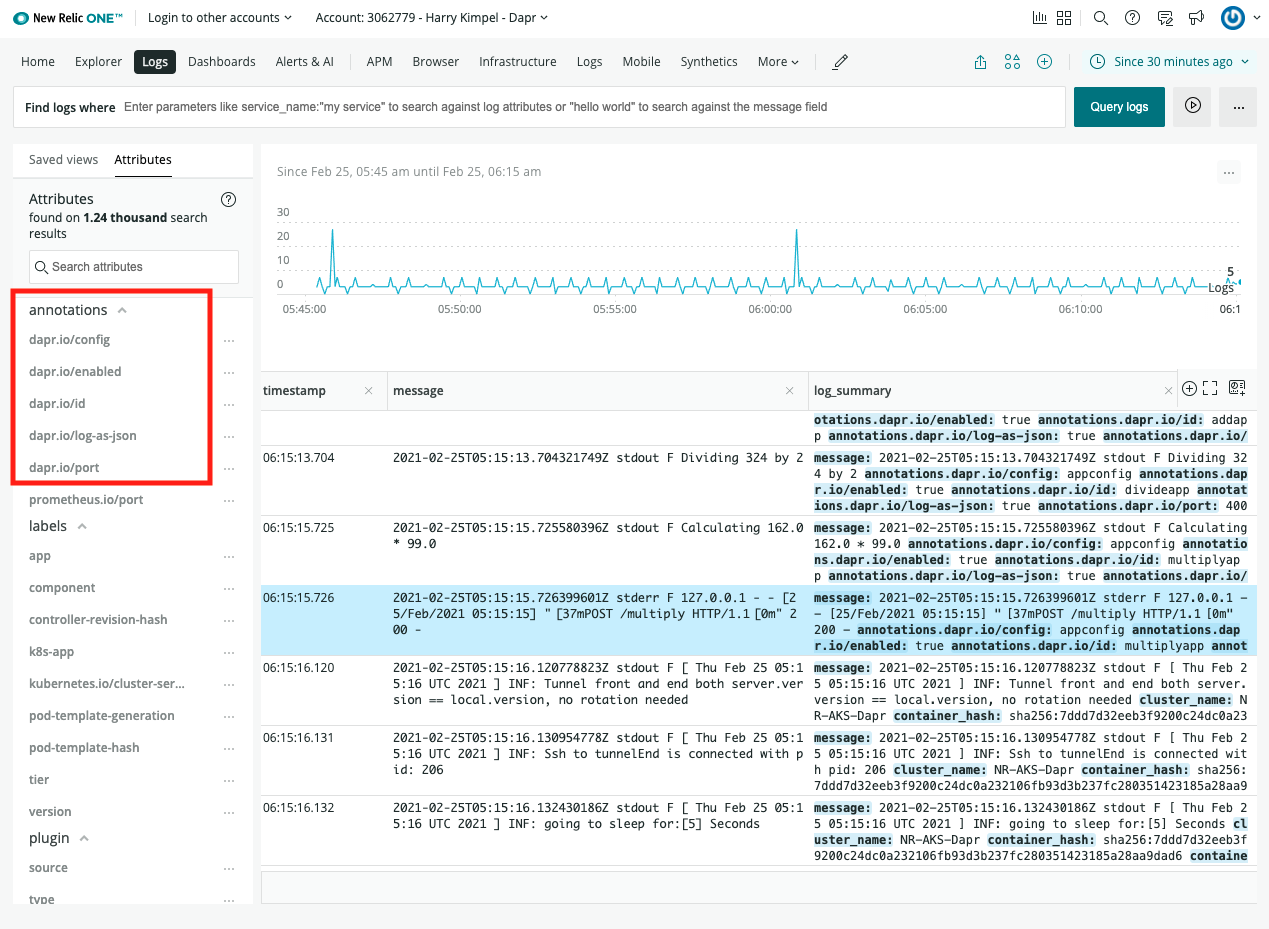

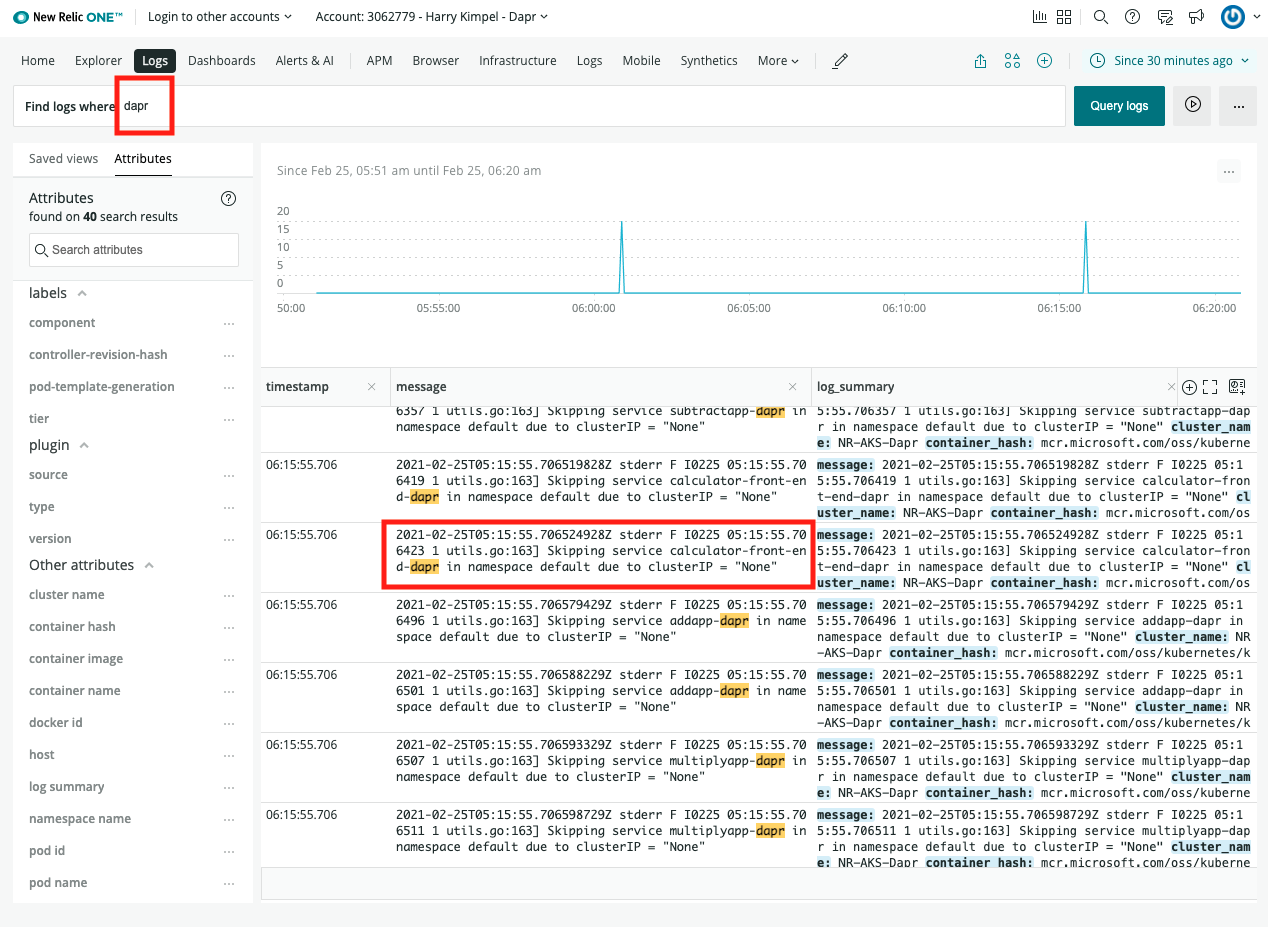

(optional) Enable New Relic Kubernetes integration

In case Dapr and your applications run in the context of a Kubernetes environment, you can enable additional metrics and logs.

The easiest way to install the New Relic Kubernetes integration is to use the automated installer to generate a manifest. It bundles not just the integration DaemonSets, but also other New Relic Kubernetes configurations, like Kubernetes events, Prometheus OpenMetrics, and New Relic log monitoring.

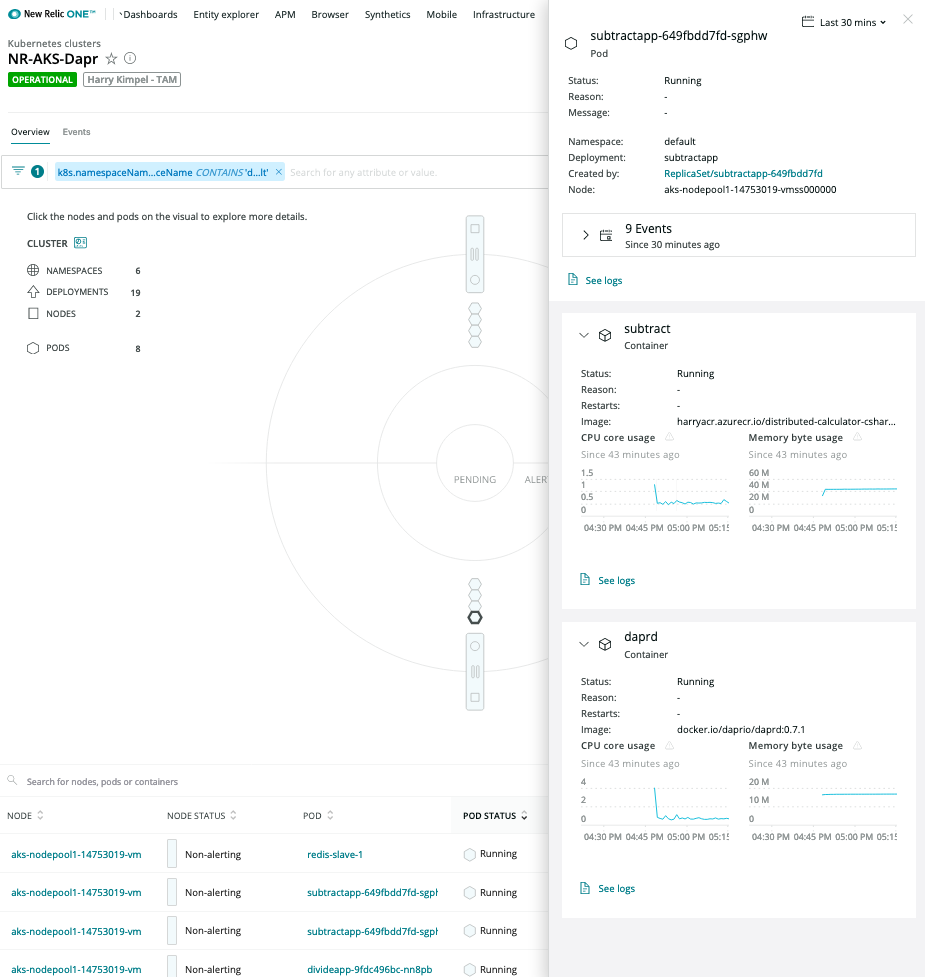

New Relic Kubernetes Cluster Explorer

The New Relic Kubernetes Cluster Explorer provides a visualization of all the data and deployments collected by the Kubernetes integration.

It is a good starting point to observe all your data and dig deeper into any performance issues or incidents happening inside of the application or microservices.

Automated correlation is part of the visualization capabilities of New Relic.

Pod-level details

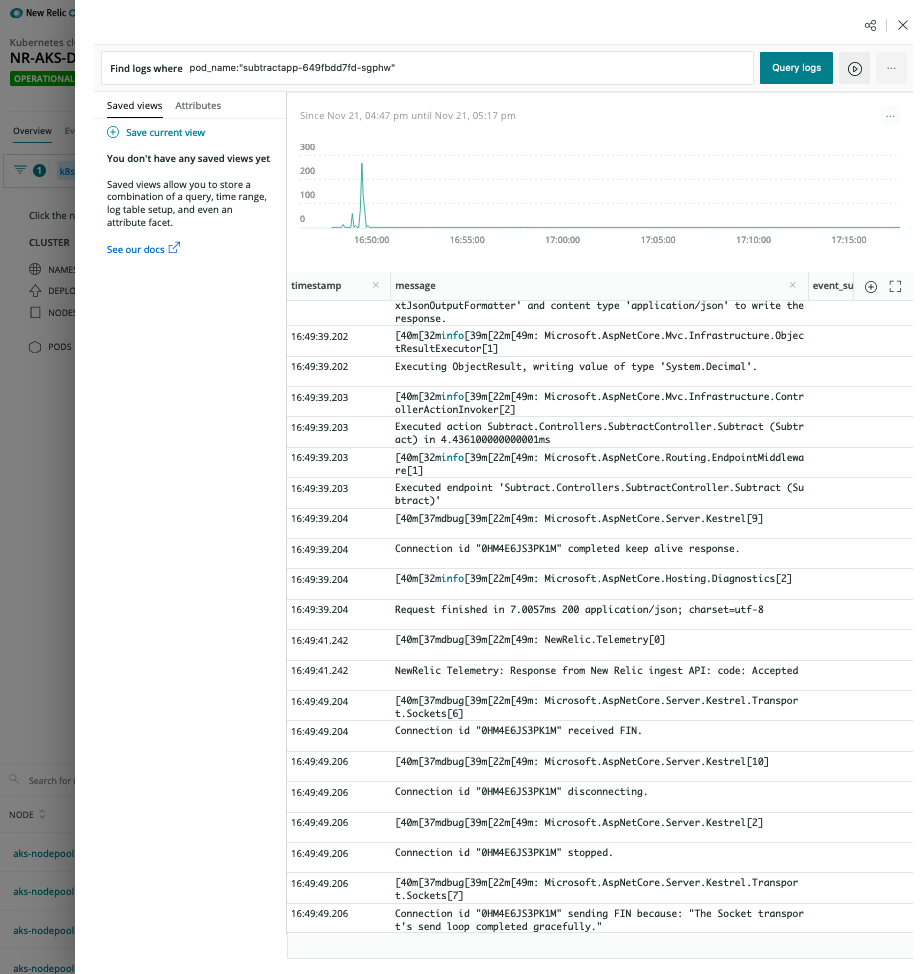

Logs in Context

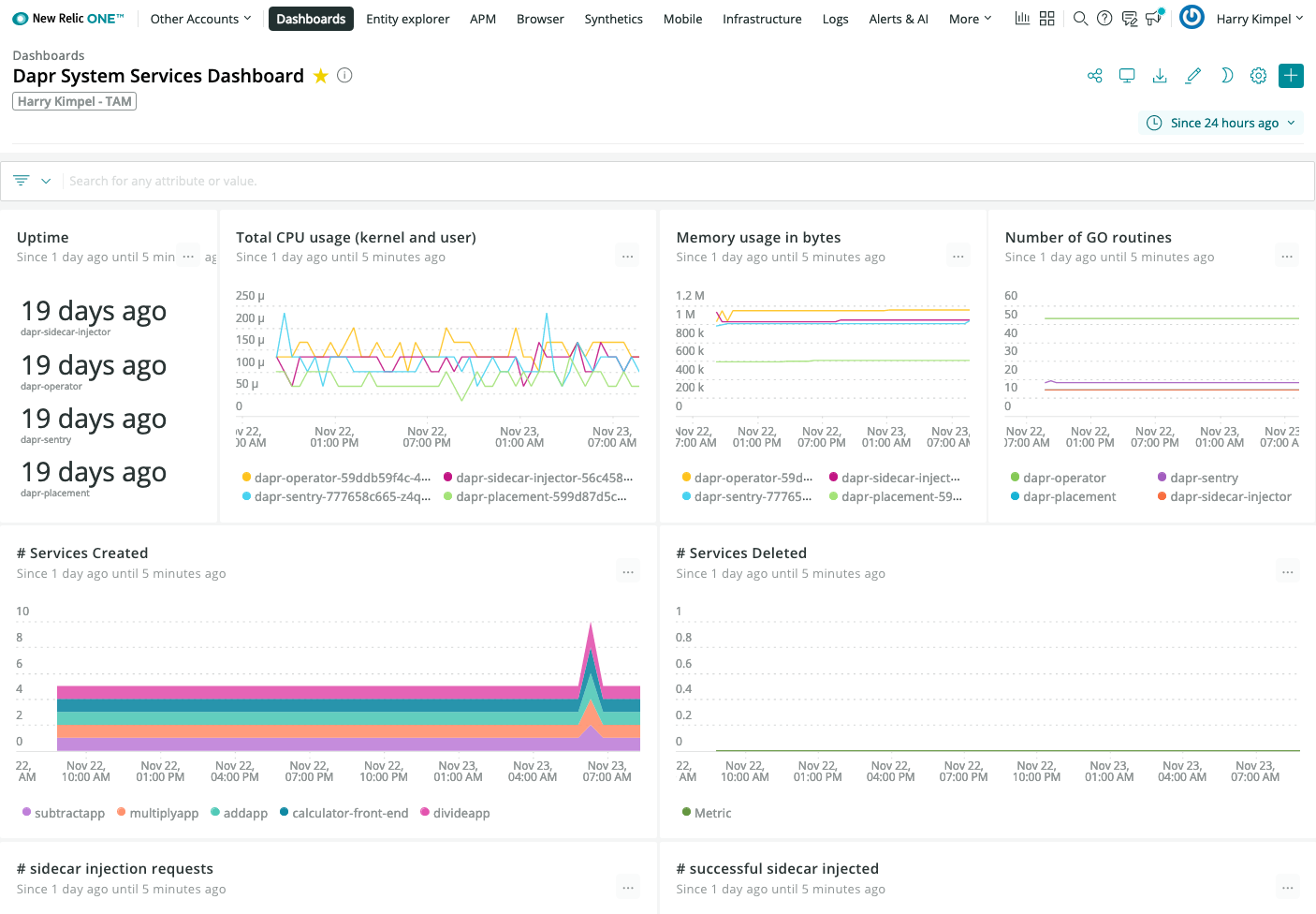

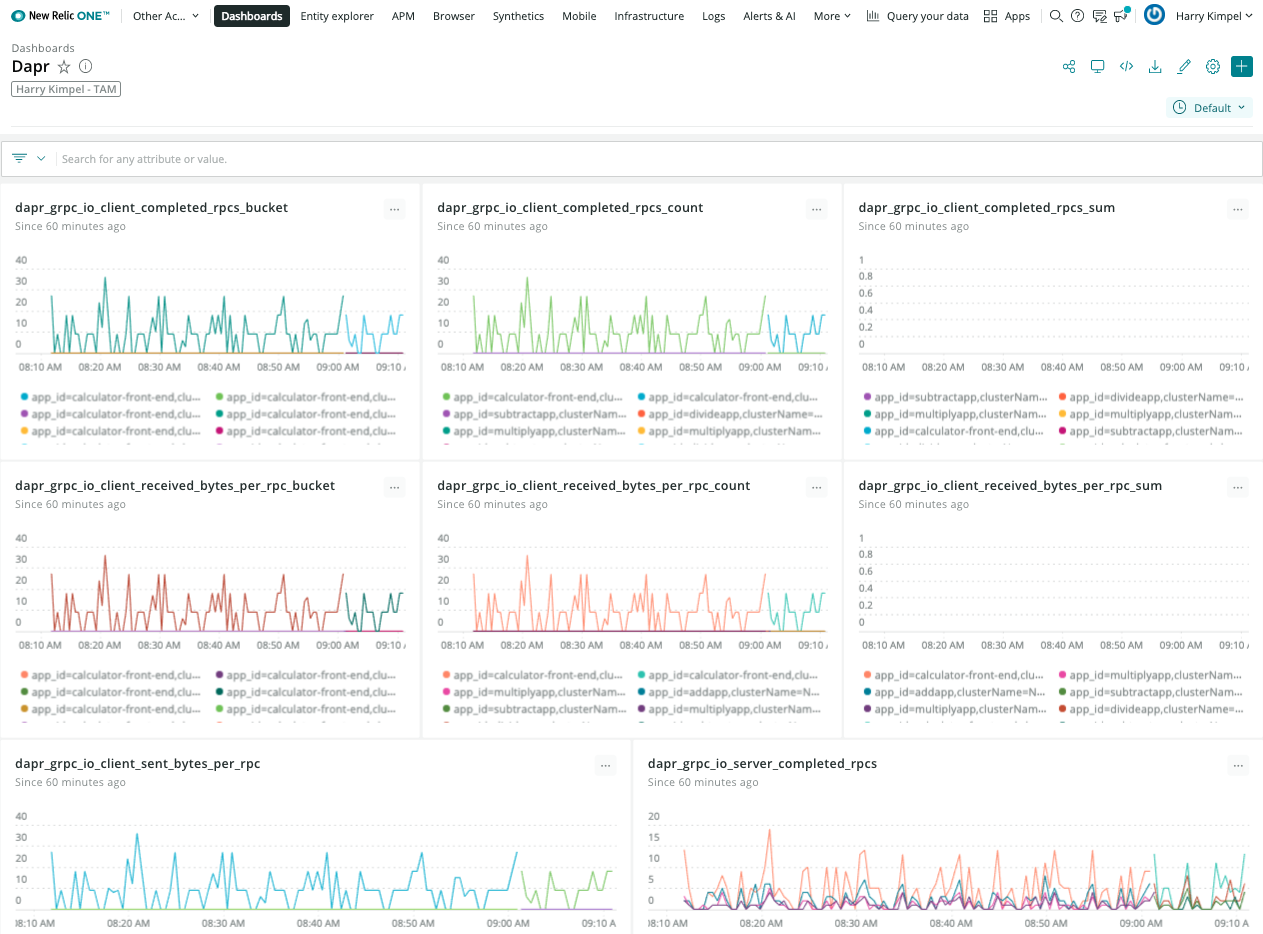

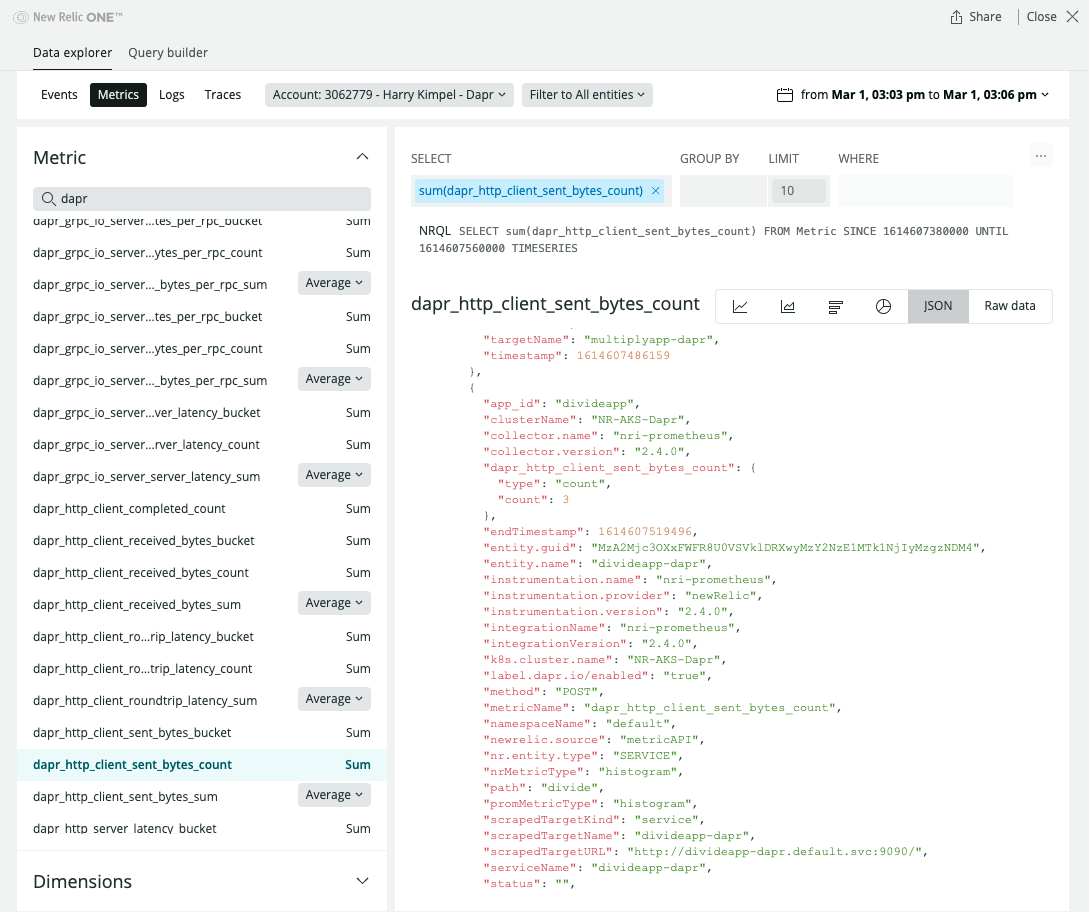

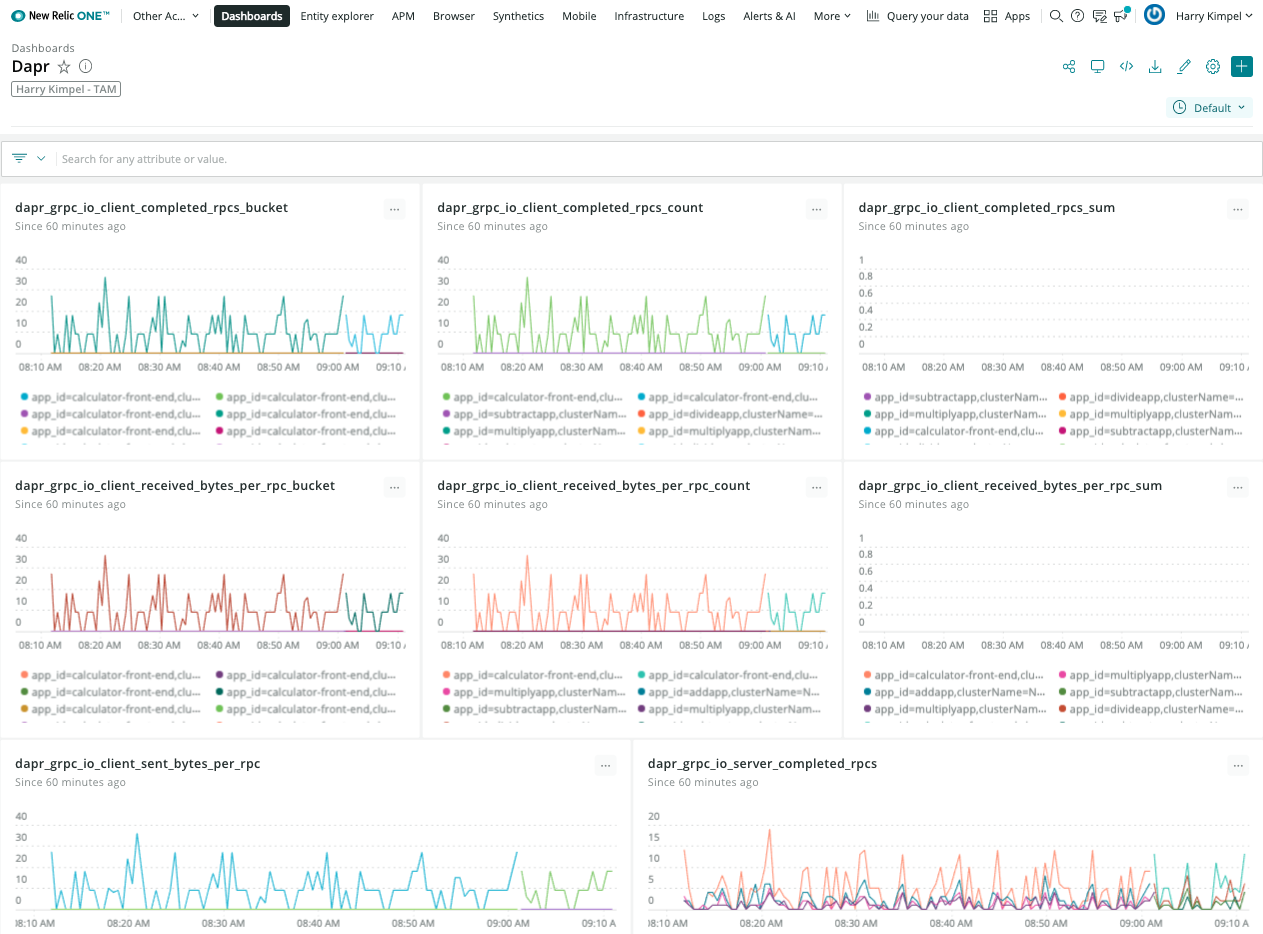

New Relic Dashboards

Kubernetes Overview

Dapr System Services

Dapr Metrics

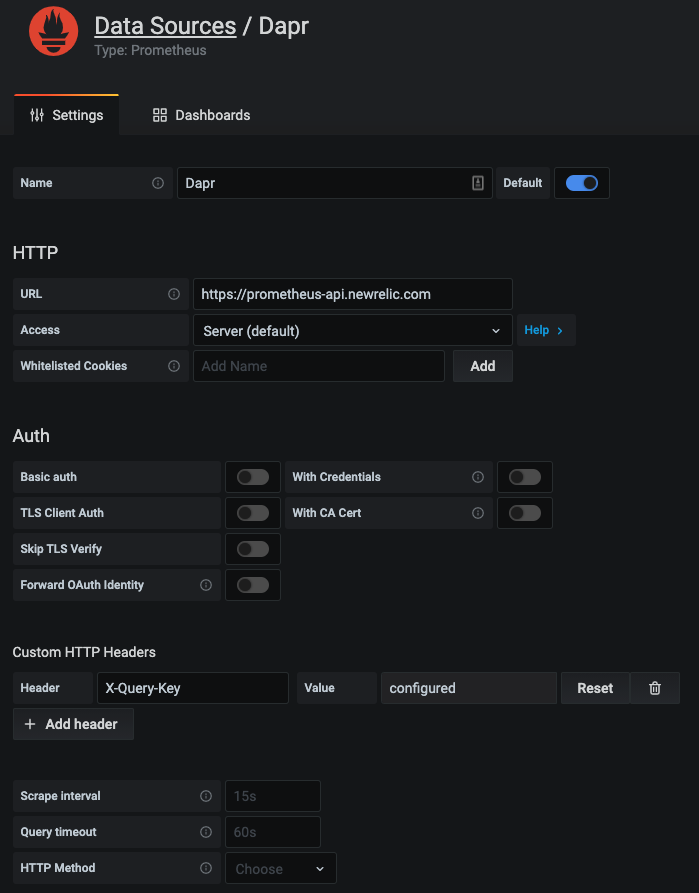

New Relic Grafana integration

New Relic teamed up with Grafana Labs so you can use the Telemetry Data Platform as a data source for Prometheus metrics and see them in your existing dashboards, seamlessly tapping into the reliability, scale, and security provided by New Relic.

Grafana dashboard templates to monitor Dapr system services and sidecars can easily be used without any changes. New Relic provides a native endpoint for Prometheus metrics into Grafana. A datasource can easily be set-up:

And the exact same dashboard templates from Dapr can be imported to visualize Dapr system services and sidecars.

New Relic Alerts

All the data collected from Dapr, Kubernetes, or any services that run on top of them can be used to set up alerts and notifications in the channel of your choice. See Alerts and Applied Intelligence.

1.1.6 - How-To: Set up Zipkin for distributed tracing

Configure self hosted mode

For self hosted mode, on running dapr init:

- The following YAML file is created by default in $HOME/.dapr/config.yaml (on Linux/Mac) or %USERPROFILE%\.dapr\config.yaml (on Windows) and it is referenced by default on dapr run calls unless otherwise overridden:

- config.yaml

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: daprConfig
  namespace: default
spec:
  tracing:
    samplingRate: "1"
    zipkin:
      endpointAddress: "http://localhost:9411/api/v2/spans"

- The openzipkin/zipkin Docker container is launched on running dapr init, or it can be launched with the following command.

Launch Zipkin using Docker:

docker run -d -p 9411:9411 openzipkin/zipkin

- The applications launched with dapr run by default reference the config file in $HOME/.dapr/config.yaml or %USERPROFILE%\.dapr\config.yaml and can be overridden with the Dapr CLI using the --config param:

dapr run --app-id mynode --app-port 3000 node app.js

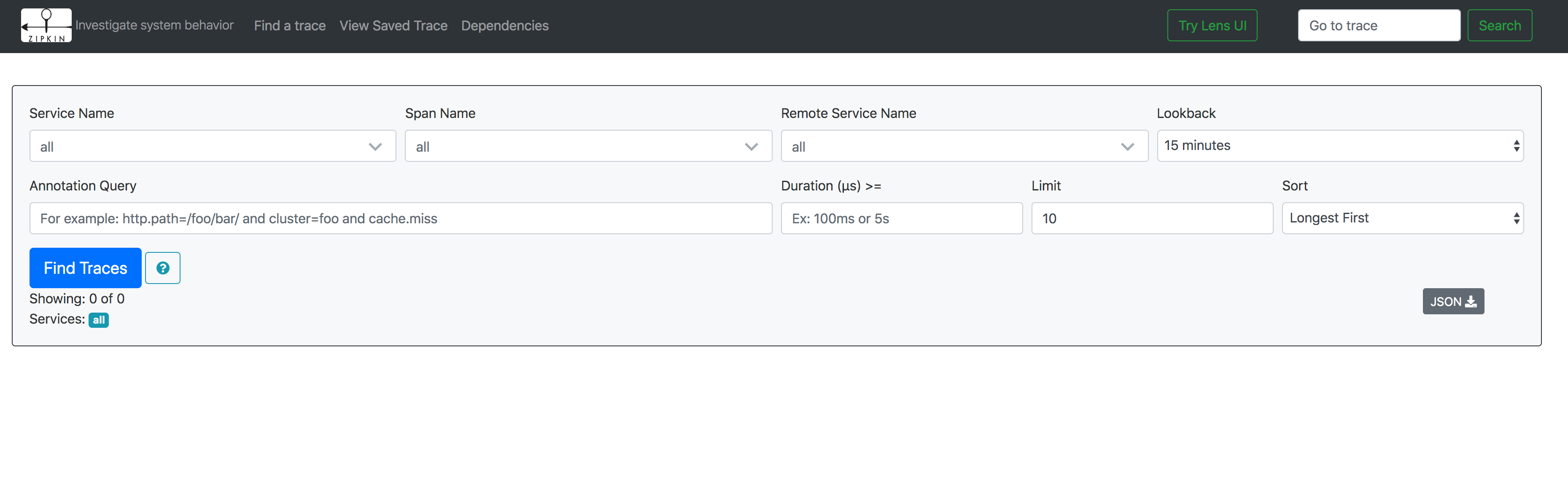

Viewing Traces

To view traces, in your browser go to http://localhost:9411 and you will see the Zipkin UI.

Configure Kubernetes

The following steps show you how to configure Dapr to send distributed tracing data to Zipkin running as a container in your Kubernetes cluster, and how to view them.

Setup

First, deploy Zipkin:

kubectl create deployment zipkin --image openzipkin/zipkin

Create a Kubernetes service for the Zipkin pod:

kubectl expose deployment zipkin --type ClusterIP --port 9411

Next, create the following YAML file locally:

- tracing.yaml configuration

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: tracing
  namespace: default
spec:
  tracing:
    samplingRate: "1"
    zipkin:
      endpointAddress: "http://zipkin.default.svc.cluster.local:9411/api/v2/spans"

Now, deploy the Dapr configuration file:

kubectl apply -f tracing.yaml

In order to enable this configuration for your Dapr sidecar, add the following annotation to your pod spec template:

annotations:
  dapr.io/config: "tracing"

That’s it! Your sidecar is now configured to send traces to Zipkin.

Viewing Tracing Data

To view traces, connect to the Zipkin service and open the UI:

kubectl port-forward svc/zipkin 9411:9411

In your browser, go to http://localhost:9411 and you will see the Zipkin UI.

1.1.7 - How-To: Set up Datadog for distributed tracing

Dapr captures metrics and traces that can be sent directly to Datadog through the OpenTelemetry Collector Datadog exporter.

Configure Dapr tracing with the OpenTelemetry Collector and Datadog

Using the OpenTelemetry Collector Datadog exporter, you can configure Dapr to create traces for each application in your Kubernetes cluster and collect them in Datadog.

Before you begin, set up the OpenTelemetry Collector.

1. Add your Datadog API key to the ./deploy/open-telemetry-collector-generic-datadog.yaml file in the datadog exporter configuration section:

data:
  otel-collector-config:
    ...
    exporters:
      ...
      datadog:
        api:
          key: <YOUR_API_KEY>

2. Apply the opentelemetry-collector configuration by running the following command.

kubectl apply -f ./deploy/open-telemetry-collector-generic-datadog.yaml

3. Set up a Dapr configuration file that will turn on tracing and deploy a tracing exporter component that uses the OpenTelemetry Collector.

kubectl apply -f ./deploy/collector-config.yaml

4. Apply the appconfig configuration by adding a dapr.io/config annotation to the container that you want to participate in the distributed tracing.

annotations:
  dapr.io/config: "appconfig"

5. Create and configure the application. Once running, telemetry data is sent to Datadog and visible in Datadog APM.

1.2 - Metrics

1.2.1 - Configure metrics

By default, each Dapr system process emits Go runtime/process metrics and has its own Dapr metrics.

Prometheus endpoint

The Dapr sidecar exposes a Prometheus-compatible metrics endpoint that you can scrape to gain a greater understanding of how Dapr is behaving.

Configuring metrics using the CLI

The metrics application endpoint is enabled by default. You can disable it by passing the command line argument --enable-metrics=false.

The default metrics port is 9090. You can override this by passing the command line argument --metrics-port to daprd.

Configuring metrics in Kubernetes

You can also enable/disable the metrics for a specific application by setting the dapr.io/enable-metrics: "false" annotation on your application deployment. With the metrics exporter disabled, daprd does not open the metrics listening port.

The following Kubernetes deployment example shows how metrics are explicitly enabled with the port specified as “9090”.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nodeapp
  labels:
    app: node
spec:
  replicas: 1
  selector:
    matchLabels:
      app: node
  template:
    metadata:
      labels:
        app: node
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "nodeapp"
        dapr.io/app-port: "3000"
        dapr.io/enable-metrics: "true"
        dapr.io/metrics-port: "9090"
    spec:
      containers:
      - name: node
        image: dapriosamples/hello-k8s-node:latest
        ports:
        - containerPort: 3000
        imagePullPolicy: Always

Configuring metrics using application configuration

You can also enable metrics via application configuration. To disable the metrics collection in the Dapr sidecars by default, set spec.metrics.enabled to false.

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: tracing
  namespace: default
spec:
  metrics:
    enabled: false

Configuring metrics for error codes

You can enable additional metrics for Dapr API error codes by setting spec.metrics.recordErrorCodes to true. Dapr APIs which communicate back to their caller may return standardized error codes. A new metric called error_code_total is recorded, which allows monitoring of error codes triggered by application, code, and category. See the errorcodes package for specific codes and categories.

Example configuration:

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: tracing
  namespace: default
spec:
  metrics:
    enabled: true
    recordErrorCodes: true

Example metric:

{
  "app_id": "publisher-app",
  "category": "state",
  "dapr_io_enabled": "true",
  "error_code": "ERR_STATE_STORE_NOT_CONFIGURED",
  "instance": "10.244.1.64:9090",
  "job": "kubernetes-service-endpoints",
  "namespace": "my-app",
  "node": "my-node",
  "service": "publisher-app-dapr"
}

Optimizing HTTP metrics reporting with path matching

When invoking Dapr using HTTP, metrics are created for each requested method by default. This can result in a high number of metrics, known as high cardinality, which can impact memory usage and CPU.

Path matching allows you to manage and control the cardinality of HTTP metrics in Dapr. This is an aggregation of metrics, so rather than having a metric for each event, you can reduce the number of metrics events and report an overall number. Learn more about how to set the cardinality in configuration.

This configuration is opt-in and is enabled via the Dapr configuration spec.metrics.http.pathMatching. When defined, it enables path matching, which standardizes the specified paths in the reported metrics. This reduces the number of unique metrics paths, making metrics more manageable and reducing resource consumption in a controlled way.

When spec.metrics.http.pathMatching is combined with the increasedCardinality flag set to false, non-matched paths are transformed into a catch-all bucket to control and limit cardinality, preventing unbounded path growth. Conversely, when increasedCardinality is true (the default), non-matched paths are passed through as they normally would be, allowing for potentially higher cardinality but preserving the original path data.

Examples of Path Matching in HTTP Metrics

The following examples demonstrate how to use the Path Matching API in Dapr for managing HTTP metrics. In each example, the metrics are collected from 5 HTTP requests to the /orders endpoint with different order IDs. By adjusting cardinality and utilizing path matching, you can fine-tune metric granularity to balance detail and resource efficiency.

These examples illustrate the cardinality of the metrics, highlighting that high cardinality configurations result in many entries, which correspond to higher memory usage for handling metrics. For simplicity, the following examples focus on a single metric: dapr_http_server_request_count.

Low cardinality with path matching (Recommendation)

Configuration:

http:
  increasedCardinality: false
  pathMatching:
    - /orders/{orderID}

Metrics generated:

# matched paths

dapr_http_server_request_count{app_id="order-service",method="GET",path="/orders/{orderID}",status="200"} 5

# unmatched paths

dapr_http_server_request_count{app_id="order-service",method="GET",path="",status="200"} 1

With low cardinality and path matching configured, you get the best of both worlds by grouping the metrics for the important endpoints without compromising the cardinality. This approach helps avoid high memory usage and potential security issues.

Low cardinality without path matching

Configuration:

http:
  increasedCardinality: false

Metrics generated:

dapr_http_server_request_count{app_id="order-service",method="GET",path="",status="200"} 5

In low cardinality mode, the path, which is the main source of unbounded cardinality, is dropped. This results in metrics that primarily indicate the number of requests made to the service for a given HTTP method, but without any information about the paths invoked.

High cardinality with path matching

Configuration:

http:
  increasedCardinality: true
  pathMatching:
    - /orders/{orderID}

Metrics generated:

dapr_http_server_request_count{app_id="order-service",method="GET",path="/orders/{orderID}",status="200"} 5

This example results from the same HTTP requests as the example above, but with path matching configured for the path /orders/{orderID}. By using path matching, you achieve reduced cardinality by grouping the metrics based on the matched path.

High Cardinality without path matching

Configuration:

http:
  increasedCardinality: true

Metrics generated:

dapr_http_server_request_count{app_id="order-service",method="GET",path="/orders/1",status="200"} 1

dapr_http_server_request_count{app_id="order-service",method="GET",path="/orders/2",status="200"} 1

dapr_http_server_request_count{app_id="order-service",method="GET",path="/orders/3",status="200"} 1

dapr_http_server_request_count{app_id="order-service",method="GET",path="/orders/4",status="200"} 1

dapr_http_server_request_count{app_id="order-service",method="GET",path="/orders/5",status="200"} 1

For each request, a new metric is created with the request path. This process continues for every request made to a new order ID, resulting in unbounded cardinality since the IDs are ever-growing.
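The four configurations above can be compared with a small simulation. This is a minimal sketch of the label behavior described in this section, not Dapr's implementation: the function names are hypothetical, and it assumes that each {name} placeholder matches exactly one path segment and that unmatched paths in low cardinality mode are reported with an empty path label, as in the sample output above.

```python
import re
from collections import Counter


def template_to_regex(template):
    """Compile '/orders/{orderID}' so that each {name} matches one path segment."""
    literal_parts = re.split(r"\{[^/{}]+\}", template)
    pattern = "[^/]+".join(re.escape(part) for part in literal_parts)
    return re.compile("^" + pattern + "$")


def metric_path(path, templates, increased_cardinality):
    """Return the value the path label would take for one request (sketch)."""
    for template in templates:
        if template_to_regex(template).match(path):
            return template  # matched paths collapse into the template
    # Unmatched paths: kept as-is in high cardinality, emptied in low cardinality.
    return path if increased_cardinality else ""


def count_requests(paths, templates, increased_cardinality):
    """Count requests per resulting path label."""
    return Counter(metric_path(p, templates, increased_cardinality) for p in paths)


# Five requests to /orders/1 ... /orders/5, as in the examples above.
order_requests = ["/orders/%d" % i for i in range(1, 6)]

low_with_matching = count_requests(order_requests, ["/orders/{orderID}"], False)
low_without_matching = count_requests(order_requests, [], False)
high_without_matching = count_requests(order_requests, [], True)

print(len(low_with_matching), len(low_without_matching), len(high_without_matching))
```

The three printed numbers are the number of distinct series in each mode: one with path matching, one with the path dropped, and five when every order ID becomes its own label value.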

HTTP metrics exclude verbs

The excludeVerbs option allows you to exclude specific HTTP verbs from being reported in the metrics. This can be useful in high-performance applications where memory savings are critical.

Examples of excluding HTTP verbs in metrics

The following examples demonstrate how to exclude HTTP verbs in Dapr for managing HTTP metrics.

Default - Include HTTP verbs

Configuration:

http:
  excludeVerbs: false

Metrics generated:

dapr_http_server_request_count{app_id="order-service",method="GET",path="/orders",status="200"} 1

dapr_http_server_request_count{app_id="order-service",method="POST",path="/orders",status="200"} 1

In this example, the HTTP method is included in the metrics, resulting in a separate metric for each HTTP method used on the /orders endpoint.

Exclude HTTP verbs

Configuration:

http:
  excludeVerbs: true

Metrics generated:

dapr_http_server_request_count{app_id="order-service",method="",path="/orders",status="200"} 2

In this example, the HTTP method is excluded from the metrics, resulting in a single metric for all requests to the /orders endpoint.
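The effect of excludeVerbs on the number of series can be sketched as a simple aggregation. This is an illustration only, assuming the method label is replaced with an empty string as in the sample output above; count_by_labels is a hypothetical helper, not a Dapr API.

```python
from collections import Counter


def count_by_labels(requests, exclude_verbs):
    """Count requests per (method, path, status) label set (sketch)."""
    counts = Counter()
    for method, path, status in requests:
        # With excludeVerbs enabled, every request shares an empty method label.
        label_method = "" if exclude_verbs else method
        counts[(label_method, path, status)] += 1
    return counts


# One GET and one POST to /orders, as in the examples above.
sample_requests = [("GET", "/orders", "200"), ("POST", "/orders", "200")]

with_verbs = count_by_labels(sample_requests, exclude_verbs=False)
without_verbs = count_by_labels(sample_requests, exclude_verbs=True)

print(len(with_verbs), len(without_verbs))
```

With verbs included there are two series with a count of 1 each; with verbs excluded there is a single series with a count of 2.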

Configuring custom latency histogram buckets

Dapr uses cumulative histogram metrics to group latency values into buckets, where each bucket contains:

- A count of the number of requests with that latency

- All the requests with lower latency

Using the default latency bucket configurations

By default, Dapr groups request latency metrics into the following buckets:

1, 2, 3, 4, 5, 6, 8, 10, 13, 16, 20, 25, 30, 40, 50, 65, 80, 100, 130, 160, 200, 250, 300, 400, 500, 650, 800, 1000, 2000, 5000, 10000, 20000, 50000, 100000

Grouping latency values in a cumulative fashion allows buckets to be used or dropped as needed for increased or decreased granularity of data. For example, if a request takes 3ms, it’s counted in the 3ms bucket, the 4ms bucket, the 5ms bucket, and so on. Similarly, if a request takes 10ms, it’s counted in the 10ms bucket, the 13ms bucket, the 16ms bucket, and so on. After these two requests have completed, the 3ms bucket has a count of 1 and the 10ms bucket has a count of 2, since both the 3ms and 10ms requests are included here.

This shows up as follows:

| 1 | 2 | 3 | 4 | 5 | 6 | 8 | 10 | 13 | 16 | 20 | 25 | 30 | 40 | 50 | 65 | 80 | 100 | 130 | 160 | ….. | 100000 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | ….. | 2 |
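The table above can be reproduced with a short calculation. This is a minimal sketch of cumulative bucket counting, assuming a request is counted in every bucket whose boundary is greater than or equal to its latency; cumulative_counts is a hypothetical helper, not a Dapr function.

```python
# Default latency bucket boundaries in milliseconds, as listed above.
DEFAULT_BUCKETS_MS = [
    1, 2, 3, 4, 5, 6, 8, 10, 13, 16, 20, 25, 30, 40, 50, 65, 80, 100,
    130, 160, 200, 250, 300, 400, 500, 650, 800, 1000, 2000, 5000,
    10000, 20000, 50000, 100000,
]


def cumulative_counts(latencies_ms, buckets):
    """For each bucket boundary, count the requests at or below it."""
    return [sum(1 for value in latencies_ms if value <= bound) for bound in buckets]


# The two requests from the example above: 3ms and 10ms.
counts = dict(zip(DEFAULT_BUCKETS_MS, cumulative_counts([3, 10], DEFAULT_BUCKETS_MS)))

for bound in (2, 3, 8, 10, 100000):
    print(bound, counts[bound])
```

The counts never decrease from left to right, which is why any boundary can be dropped without making the remaining buckets incorrect.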

The default number of buckets works well for most use cases, but can be adjusted as needed. Each request creates 34 different metrics, leaving this value to grow considerably for a large number of applications. More accurate latency percentiles can be achieved by increasing the number of buckets. However, a higher number of buckets increases the amount of memory used to store the metrics, potentially negatively impacting your monitoring system.

It is recommended to keep the number of latency buckets set to the default value, unless you are seeing unwanted memory pressure in your monitoring system. Configuring the number of buckets allows you to choose applications where:

- You want to see more detail with a higher number of buckets

- Broader values are sufficient by reducing the buckets

Take note of the default latency values your applications are producing before configuring the number of buckets.

Customizing latency buckets to your scenario

Tailor the latency buckets to your needs, by modifying the spec.metrics.latencyDistributionBuckets field in the Dapr configuration spec for your application(s).

For example, if you aren’t interested in extremely low latency values (1-10ms), you can group them in a single 10ms bucket. Similarly, you can group the high values in a single bucket (1000-5000ms), while keeping more detail in the middle range of values that you are most interested in.

The following Configuration spec example replaces the default 34 buckets with 11 buckets, giving a higher level of granularity in the middle range of values:

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: custom-metrics
spec:
  metrics:
    enabled: true
    latencyDistributionBuckets: [10, 25, 40, 50, 70, 100, 150, 200, 500, 1000, 5000]
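To see how the custom boundaries group values, the sketch below finds the smallest bucket that a given latency falls into. It is illustrative only: first_bucket is a hypothetical helper, and returning None for values above the largest boundary is an assumption made for this example rather than documented Dapr behavior.

```python
import bisect

# Custom boundaries from the Configuration above, in milliseconds.
CUSTOM_BUCKETS_MS = [10, 25, 40, 50, 70, 100, 150, 200, 500, 1000, 5000]


def first_bucket(latency_ms, buckets):
    """Return the smallest boundary >= latency_ms, or None if it exceeds all of them."""
    index = bisect.bisect_left(buckets, latency_ms)
    return buckets[index] if index < len(buckets) else None


# Low values share the 10ms bucket; mid-range values keep finer resolution.
for latency in (3, 10, 60, 6000):
    print(latency, first_bucket(latency, CUSTOM_BUCKETS_MS))
```

Every request between 1ms and 10ms lands in the same first bucket, while values between 50ms and 200ms are still split across four boundaries.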

Transform metrics with regular expressions

You can set regular expressions for every metric exposed by the Dapr sidecar to “transform” their values. See a list of all Dapr metrics.

The name of the rule must match the name of the metric that is transformed. The following example shows how to apply a regular expression for the label method in the metric dapr_runtime_service_invocation_req_sent_total:

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: daprConfig
spec:
  metrics:
    enabled: true
    http:
      increasedCardinality: true
    rules:
      - name: dapr_runtime_service_invocation_req_sent_total
        labels:
        - name: method
          regex:
            "orders/": "orders/.+"

When this configuration is applied, a recorded metric with the method label of orders/a746dhsk293972nz is replaced with orders/.
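The replacement described above can be sketched in a few lines. This is an illustration under stated assumptions, not Dapr's implementation: transform_label is a hypothetical helper, the mapping mirrors the regex entry in the YAML (replacement value on the left, pattern on the right), and matching against the whole label value is an assumption.

```python
import re

# Mirrors the YAML rule: replacement value -> regular expression.
METHOD_RULES = {"orders/": "orders/.+"}


def transform_label(value, rules):
    """Return the replacement for the first rule whose pattern matches the value."""
    for replacement, pattern in rules.items():
        if re.fullmatch(pattern, value):  # assumption: the whole value must match
            return replacement
    return value  # values that match no rule are left unchanged


print(transform_label("orders/a746dhsk293972nz", METHOD_RULES))
print(transform_label("healthz", METHOD_RULES))
```

All order-specific values collapse into one label value, while unrelated values pass through untouched.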

Using regular expressions to reduce metrics cardinality is considered legacy. We encourage all users to set spec.metrics.http.increasedCardinality to false instead, which is simpler to configure and offers better performance.

1.2.2 - How-To: Observe metrics with Prometheus

Setup Prometheus Locally

To run Prometheus on your local machine, you can either install and run it as a process or run it as a Docker container.

Install

Note

You don’t need to install Prometheus if you plan to run it as a Docker container. Please refer to the Container instructions.

To install Prometheus, follow the steps outlined here for your OS.

Configure

Now that you’ve installed Prometheus, you need to create a configuration.

Below is an example Prometheus configuration. Save it to a file, for example /tmp/prometheus.yml or C:\Temp\prometheus.yml

global:
  scrape_interval: 15s # By default, scrape targets every 15 seconds.

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's the Dapr metrics endpoint.
scrape_configs:
  - job_name: 'dapr'

    # Override the global default and scrape targets from this job every 5 seconds.
    scrape_interval: 5s

    static_configs:
      - targets: ['localhost:9090'] # Replace with Dapr metrics port if not default

Run as Process

Run Prometheus with your configuration to start it collecting metrics from the specified targets.

./prometheus --config.file=/tmp/prometheus.yml --web.listen-address=:8080

We change the port so it doesn’t conflict with Dapr’s own metrics endpoint.

If you are not currently running a Dapr application, the target will show as offline. In order to start collecting metrics you must start Dapr with the metrics port matching the one provided as the target in the configuration.

Once Prometheus is running, you’ll be able to visit its dashboard by visiting http://localhost:8080.

Run as Container

To run Prometheus as a Docker container on your local machine, first ensure you have Docker installed and running.

Then you can run Prometheus as a Docker container using:

docker run \

--net=host \

-v /tmp/prometheus.yml:/etc/prometheus/prometheus.yml \

prom/prometheus --config.file=/etc/prometheus/prometheus.yml --web.listen-address=:8080

--net=host ensures that the Prometheus instance will be able to connect to any Dapr instances running on the host machine. If you plan to run your Dapr apps in containers as well, you’ll need to run them on a shared Docker network and update the configuration with the correct target address.

Once Prometheus is running, you’ll be able to visit its dashboard by visiting http://localhost:8080.

Setup Prometheus on Kubernetes

Prerequisites

Install Prometheus

- First, create a namespace that can be used to deploy the Grafana and Prometheus monitoring tools

kubectl create namespace dapr-monitoring

- Install Prometheus

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

helm install dapr-prom prometheus-community/prometheus -n dapr-monitoring

If you are a Minikube user or want to disable persistent volume for development purposes, you can disable it by using the following command.

helm install dapr-prom prometheus-community/prometheus -n dapr-monitoring \
  --set alertmanager.persistence.enabled=false --set pushgateway.persistentVolume.enabled=false --set server.persistentVolume.enabled=false

For automatic discovery of Dapr targets (Service Discovery), use:

helm install dapr-prom prometheus-community/prometheus -f values.yaml -n dapr-monitoring --create-namespace

values.yaml File

alertmanager:
  persistence:
    enabled: false
pushgateway:
  persistentVolume:
    enabled: false
server:
  persistentVolume:
    enabled: false

# Adds additional scrape configurations to prometheus.yml
# Uses service discovery to find Dapr and Dapr sidecar targets
extraScrapeConfigs: |-
  - job_name: dapr-sidecars
    kubernetes_sd_configs:
      - role: pod
    relabel_configs:
      - action: keep
        regex: "true"
        source_labels:
          - __meta_kubernetes_pod_annotation_dapr_io_enabled
      - action: keep
        regex: "true"
        source_labels:
          - __meta_kubernetes_pod_annotation_dapr_io_enable_metrics
      - action: replace
        replacement: ${1}
        source_labels:
          - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        replacement: ${1}
        source_labels:
          - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        regex: (.*);daprd
        replacement: ${1}-dapr
        source_labels:
          - __meta_kubernetes_pod_annotation_dapr_io_app_id
          - __meta_kubernetes_pod_container_name
        target_label: service
      - action: replace
        replacement: ${1}:9090
        source_labels:
          - __meta_kubernetes_pod_ip
        target_label: __address__

  - job_name: dapr
    kubernetes_sd_configs:
      - role: pod
    relabel_configs:
      - action: keep
        regex: dapr
        source_labels:
          - __meta_kubernetes_pod_label_app_kubernetes_io_name
      - action: keep
        regex: dapr
        source_labels:
          - __meta_kubernetes_pod_label_app_kubernetes_io_part_of
      - action: replace
        replacement: ${1}
        source_labels:
          - __meta_kubernetes_pod_label_app
        target_label: app
      - action: replace
        replacement: ${1}
        source_labels:
          - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        replacement: ${1}
        source_labels:
          - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        replacement: ${1}:9090
        source_labels:
          - __meta_kubernetes_pod_ip
        target_label: __address__

- Validation

Ensure Prometheus is running in your cluster.

kubectl get pods -n dapr-monitoring

Expected output:

NAME READY STATUS RESTARTS AGE

dapr-prom-kube-state-metrics-9849d6cc6-t94p8 1/1 Running 0 4m58s

dapr-prom-prometheus-alertmanager-749cc46f6-9b5t8 2/2 Running 0 4m58s

dapr-prom-prometheus-node-exporter-5jh8p 1/1 Running 0 4m58s

dapr-prom-prometheus-node-exporter-88gbg 1/1 Running 0 4m58s

dapr-prom-prometheus-node-exporter-bjp9f 1/1 Running 0 4m58s

dapr-prom-prometheus-pushgateway-688665d597-h4xx2 1/1 Running 0 4m58s

dapr-prom-prometheus-server-694fd8d7c-q5d59 2/2 Running 0 4m58s

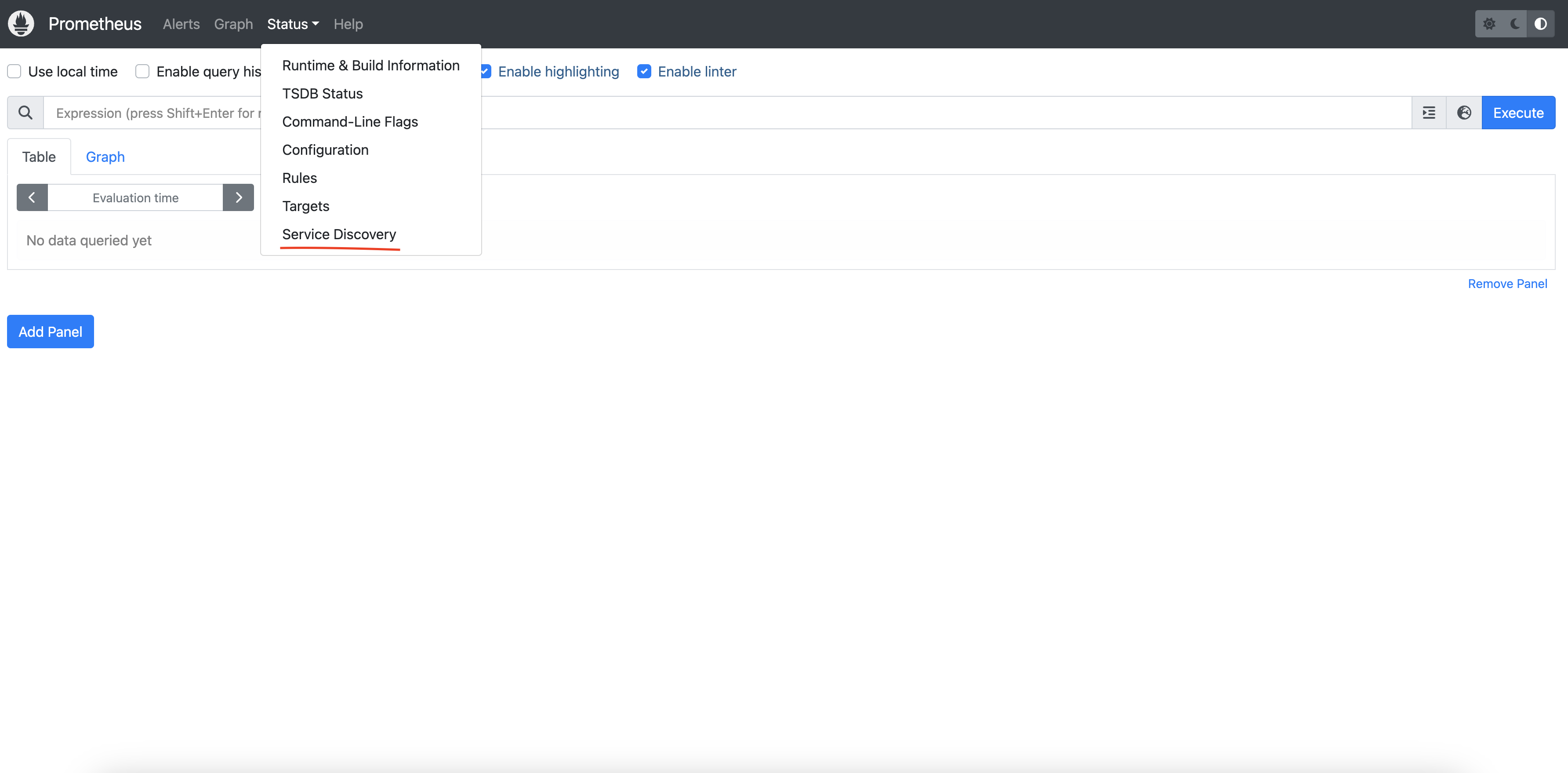

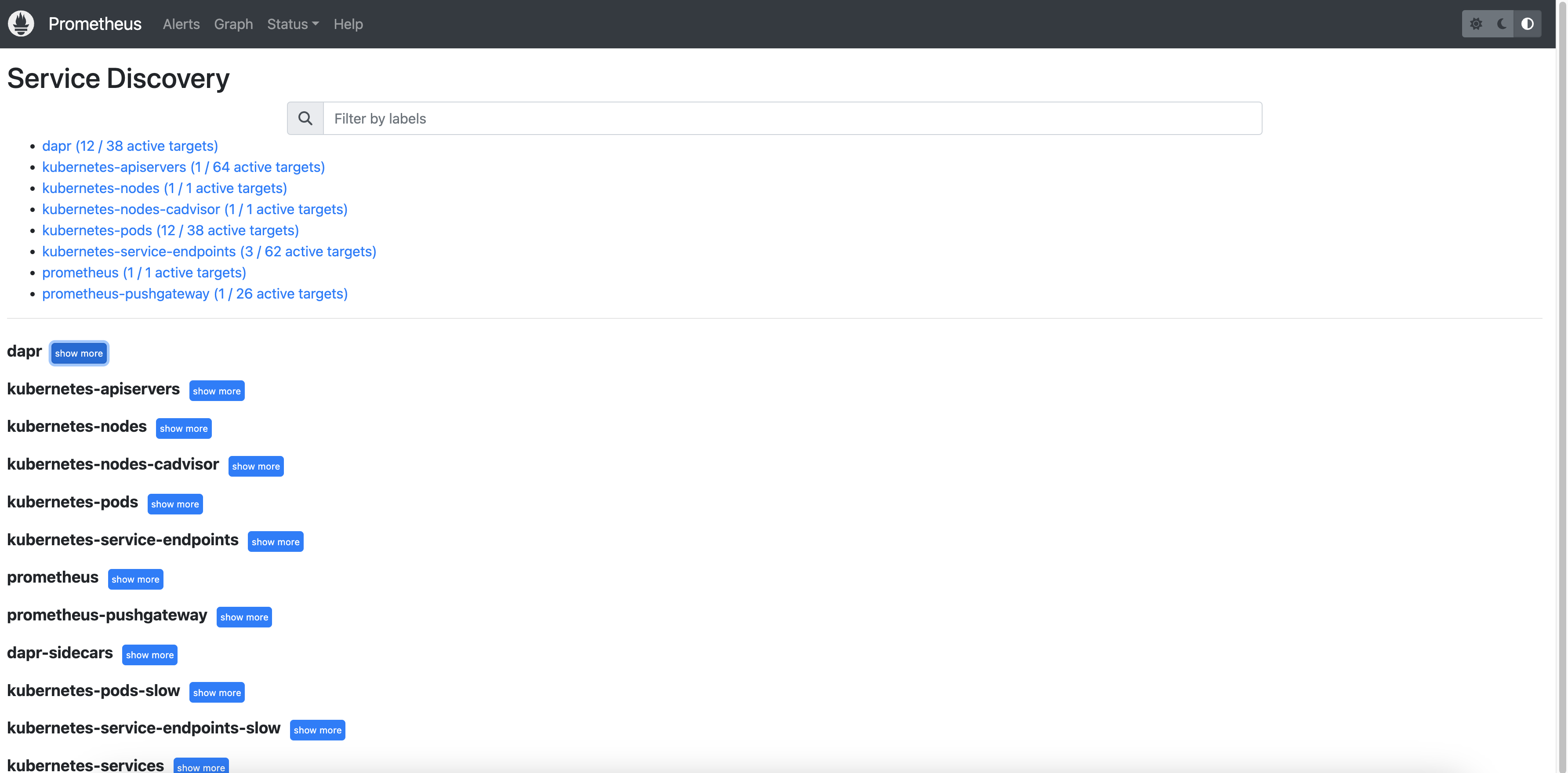

Access the Prometheus Dashboard

To view the Prometheus dashboard and check service discovery:

kubectl port-forward svc/dapr-prom-prometheus-server 9090:80 -n dapr-monitoring

Open a browser and visit http://localhost:9090. Navigate to Status > Service Discovery to verify that the Dapr targets are discovered correctly.

You can see the job_name and its discovered targets.

1.2.3 - How-To: Observe metrics with Grafana

Available dashboards

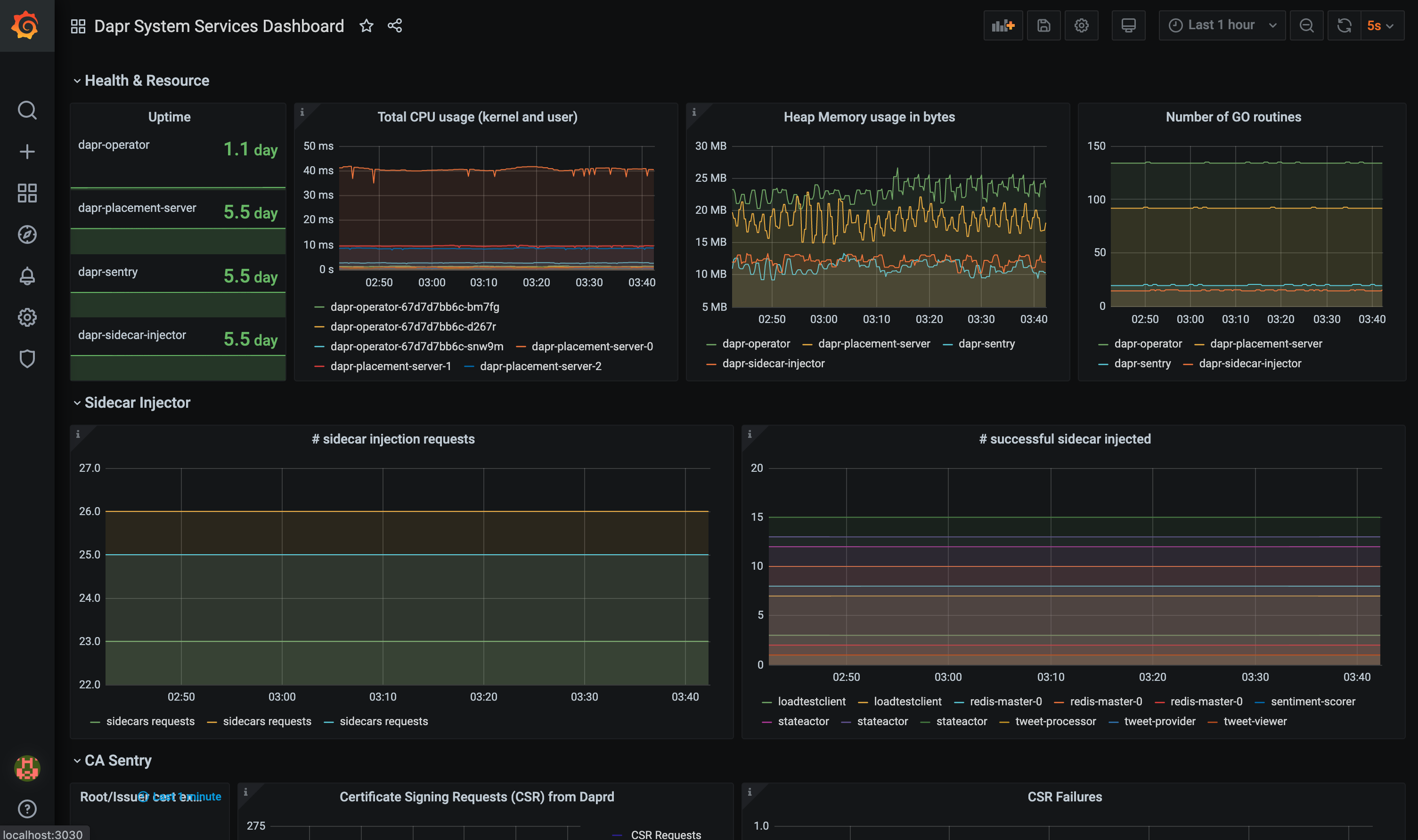

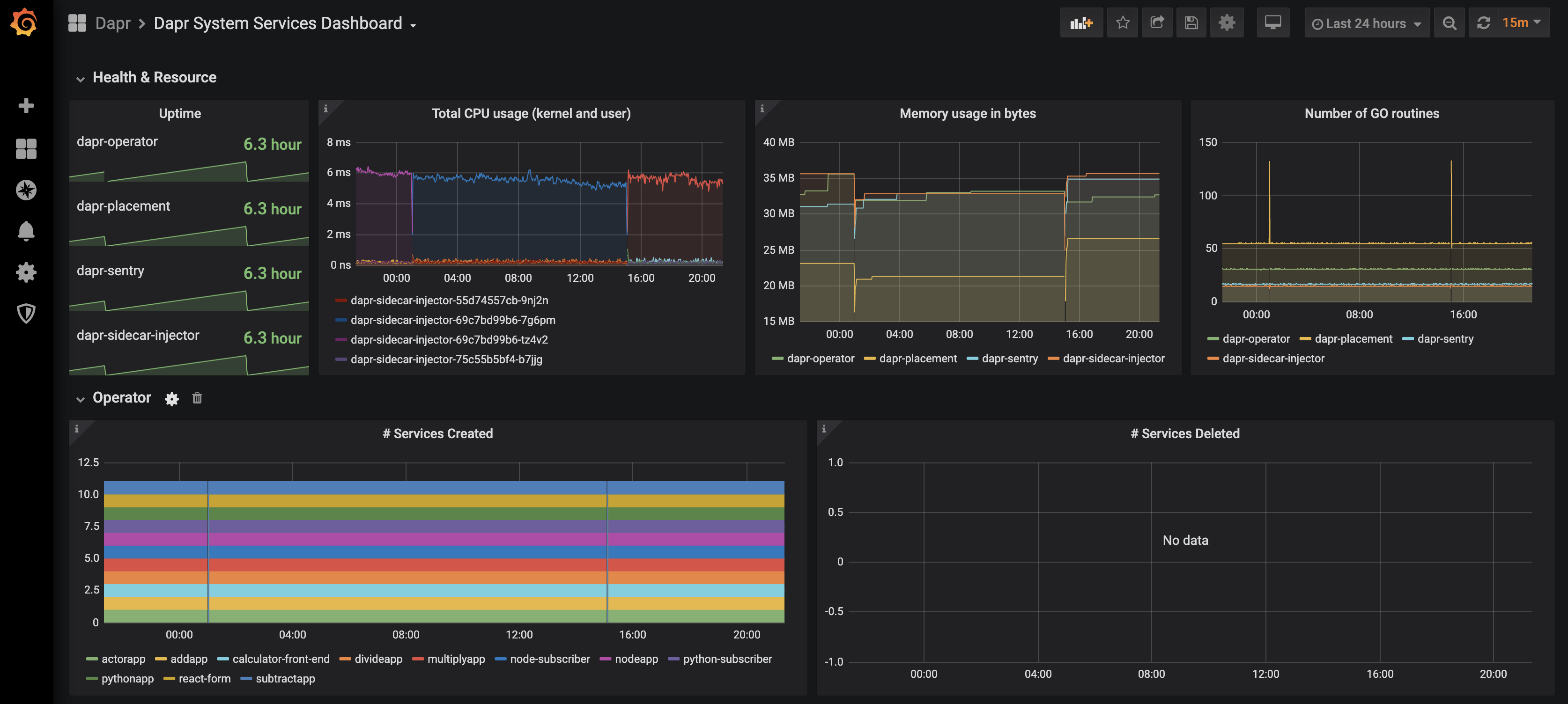

The grafana-system-services-dashboard.json template shows Dapr system component status, dapr-operator, dapr-sidecar-injector, dapr-sentry, and dapr-placement:

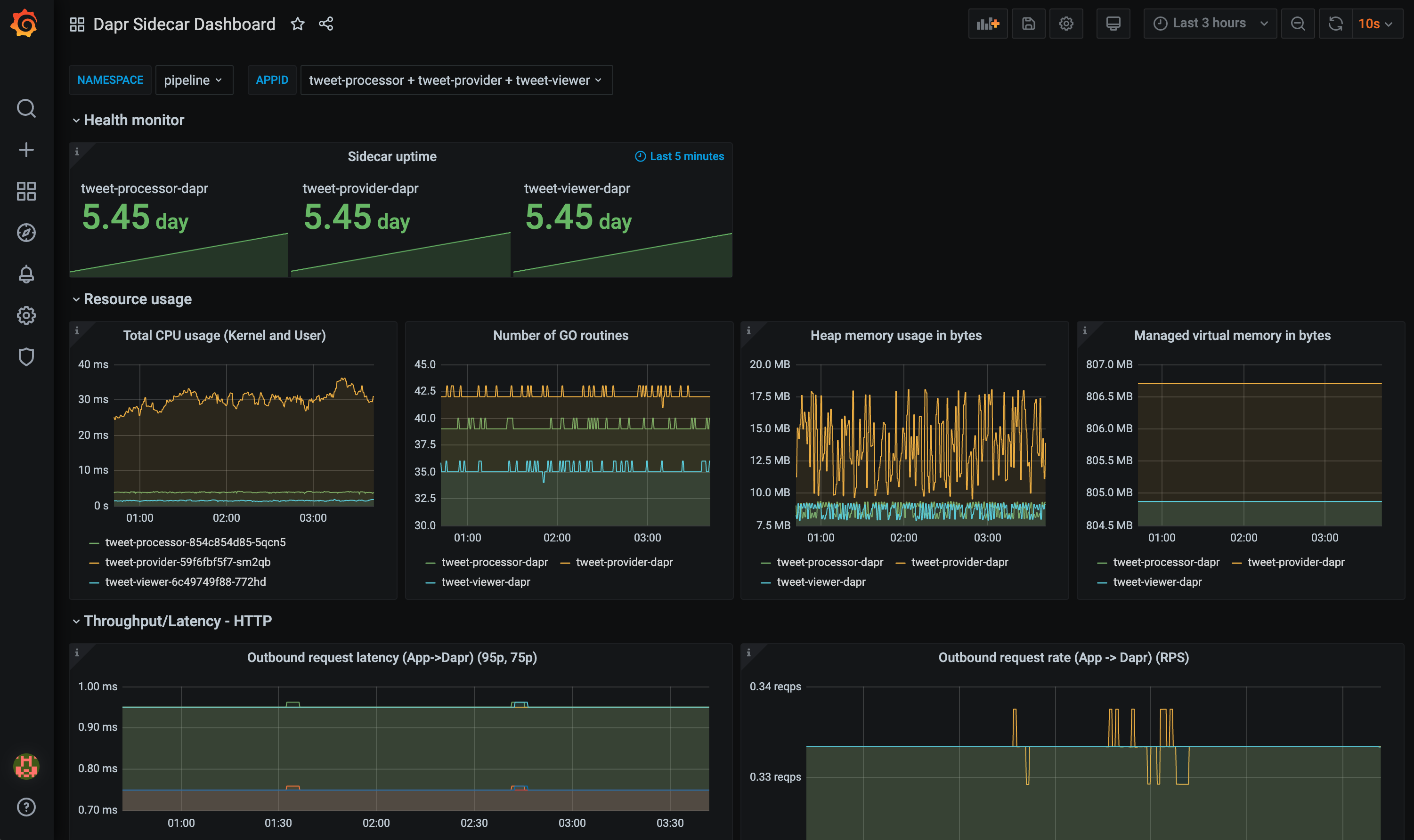

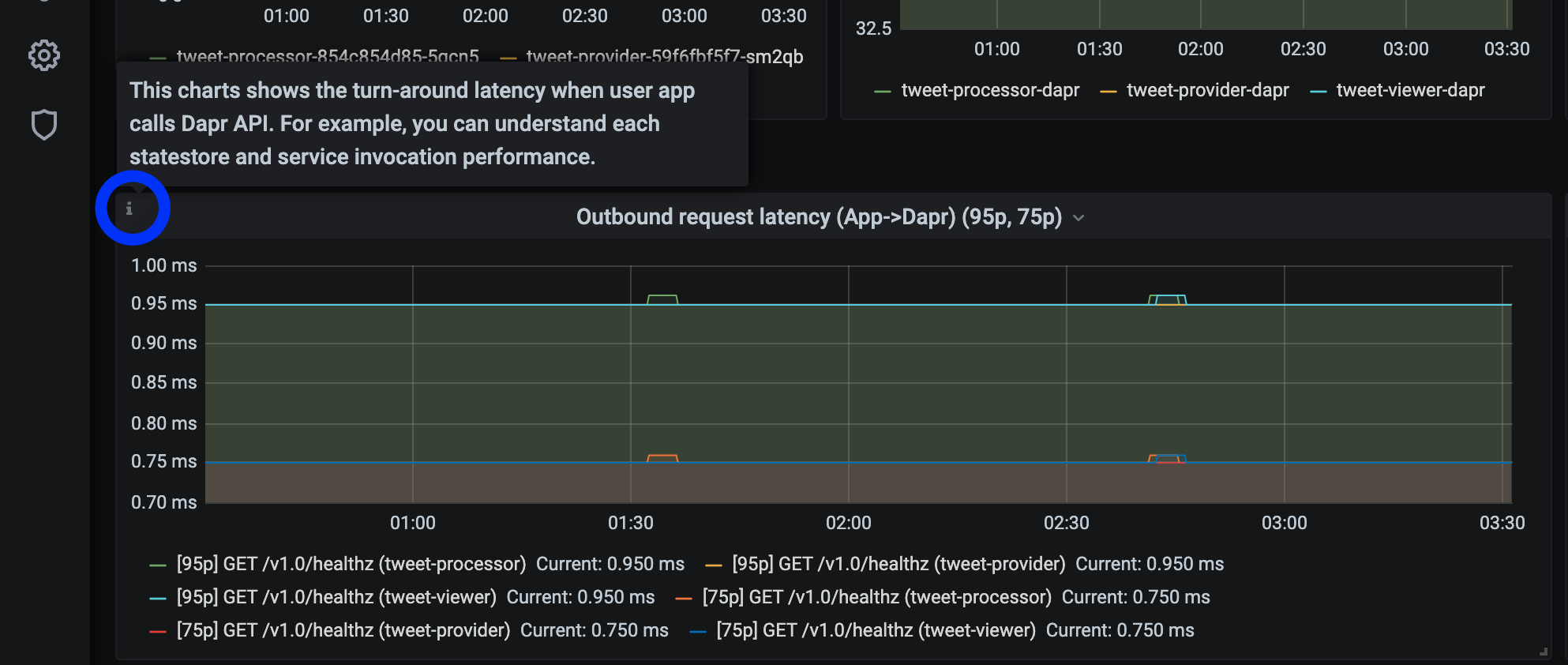

The grafana-sidecar-dashboard.json template shows Dapr sidecar status, including sidecar health/resources, throughput/latency of HTTP and gRPC, Actor, mTLS, etc.:

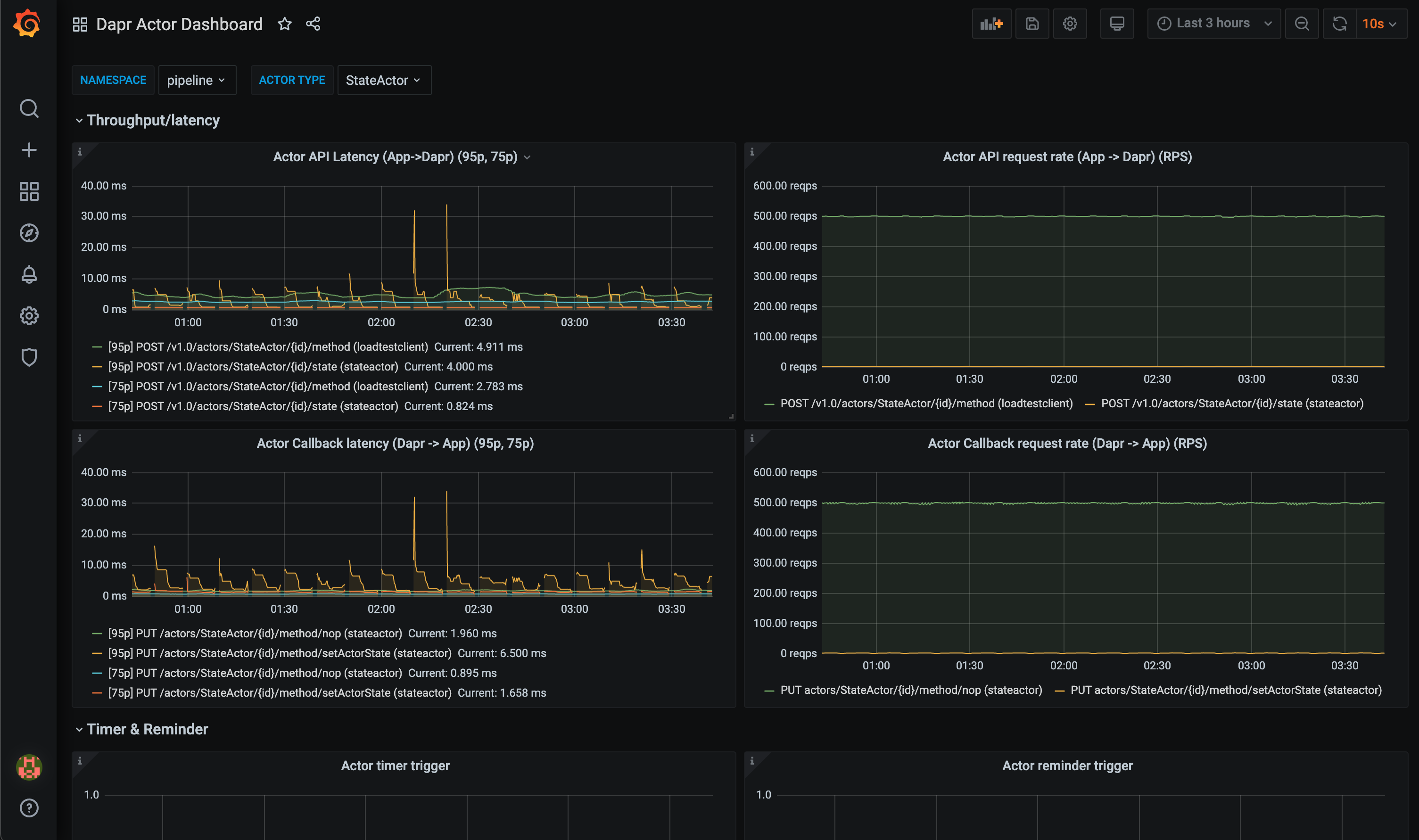

The grafana-actor-dashboard.json template shows Dapr sidecar status, actor invocation throughput/latency, timer/reminder triggers, and turn-based concurrency:

Pre-requisites

Setup on Kubernetes

Install Grafana

1. Add the Grafana Helm repo:

helm repo add grafana https://grafana.github.io/helm-charts
helm repo update

2. Install the chart:

helm install grafana grafana/grafana -n dapr-monitoring

Note

If you are a Minikube user or want to disable persistent volume for development purposes, you can disable it by using the following command instead:

helm install grafana grafana/grafana -n dapr-monitoring --set persistence.enabled=false

3. Retrieve the admin password for Grafana login:

kubectl get secret --namespace dapr-monitoring grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

You will get a password similar to cj3m0OfBNx8SLzUlTx91dEECgzRlYJb60D2evof1%. Remove the % character from the password to get cj3m0OfBNx8SLzUlTx91dEECgzRlYJb60D2evof1 as the admin password.

4. Validate that Grafana is running in your cluster:

kubectl get pods -n dapr-monitoring

NAME READY STATUS RESTARTS AGE
dapr-prom-kube-state-metrics-9849d6cc6-t94p8 1/1 Running 0 4m58s
dapr-prom-prometheus-alertmanager-749cc46f6-9b5t8 2/2 Running 0 4m58s
dapr-prom-prometheus-node-exporter-5jh8p 1/1 Running 0 4m58s
dapr-prom-prometheus-node-exporter-88gbg 1/1 Running 0 4m58s
dapr-prom-prometheus-node-exporter-bjp9f 1/1 Running 0 4m58s
dapr-prom-prometheus-pushgateway-688665d597-h4xx2 1/1 Running 0 4m58s
dapr-prom-prometheus-server-694fd8d7c-q5d59 2/2 Running 0 4m58s
grafana-c49889cff-x56vj 1/1 Running 0 5m10s
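The password retrieval step above pipes the secret through base64 --decode because values in a Kubernetes Secret's data field are base64-encoded. As a minimal sketch, the same decoding can be done in Python; decode_secret_value is a hypothetical helper and the encoded value below is made up for illustration.

```python
import base64


def decode_secret_value(encoded):
    """Decode one base64-encoded value taken from a Kubernetes Secret's data field."""
    return base64.b64decode(encoded).decode("utf-8")


# Hypothetical value for illustration; a real one comes from the kubectl
# jsonpath query shown above (without the base64 --decode step).
encoded_password = base64.b64encode(b"example-password").decode("ascii")

print(decode_secret_value(encoded_password))
```

This can be convenient in scripts that already read the secret as JSON and need the plain-text password.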

Configure Prometheus as data source

First you need to connect Prometheus as a data source to Grafana.

1. Port-forward to svc/grafana:

kubectl port-forward svc/grafana 8080:80 -n dapr-monitoring

Forwarding from 127.0.0.1:8080 -> 3000
Forwarding from [::1]:8080 -> 3000
Handling connection for 8080
Handling connection for 8080

2. Open a browser to http://localhost:8080

3. Log in to Grafana

- Username = admin

- Password = Password from above

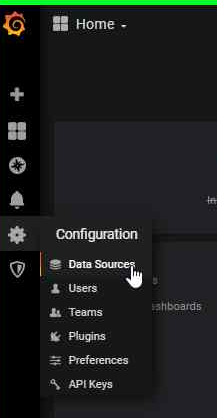

Select Configuration and Data Sources

Add Prometheus as a data source.

Get your Prometheus HTTP URL

The Prometheus HTTP URL follows the format

http://<prometheus service endpoint>.<namespace>Start by getting the Prometheus server endpoint by running the following command:

kubectl get svc -n dapr-monitoring NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE dapr-prom-kube-state-metrics ClusterIP 10.0.174.177 <none> 8080/TCP 7d9h dapr-prom-prometheus-alertmanager ClusterIP 10.0.255.199 <none> 80/TCP 7d9h dapr-prom-prometheus-node-exporter ClusterIP None <none> 9100/TCP 7d9h dapr-prom-prometheus-pushgateway ClusterIP 10.0.190.59 <none> 9091/TCP 7d9h dapr-prom-prometheus-server ClusterIP 10.0.172.191 <none> 80/TCP 7d9h elasticsearch-master ClusterIP 10.0.36.146 <none> 9200/TCP,9300/TCP 7d10h elasticsearch-master-headless ClusterIP None <none> 9200/TCP,9300/TCP 7d10h grafana ClusterIP 10.0.15.229 <none> 80/TCP 5d5h kibana-kibana ClusterIP 10.0.188.224 <none> 5601/TCP 7d10hIn this guide the server name is

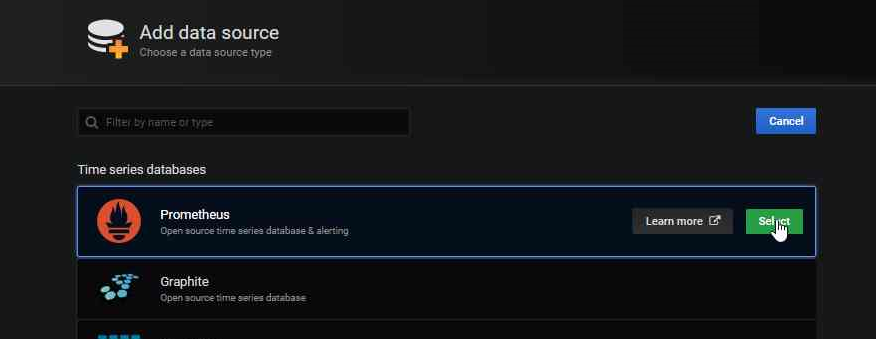

dapr-prom-prometheus-serverand the namespace isdapr-monitoring, so the HTTP URL will behttp://dapr-prom-prometheus-server.dapr-monitoring.Fill in the following settings:

- Name:

Dapr - HTTP URL:

http://dapr-prom-prometheus-server.dapr-monitoring - Default: On

- Skip TLS Verify: On

- Necessary in order to save and test the configuration

- Name:

Click

Save & Testbutton to verify that the connection succeeded.

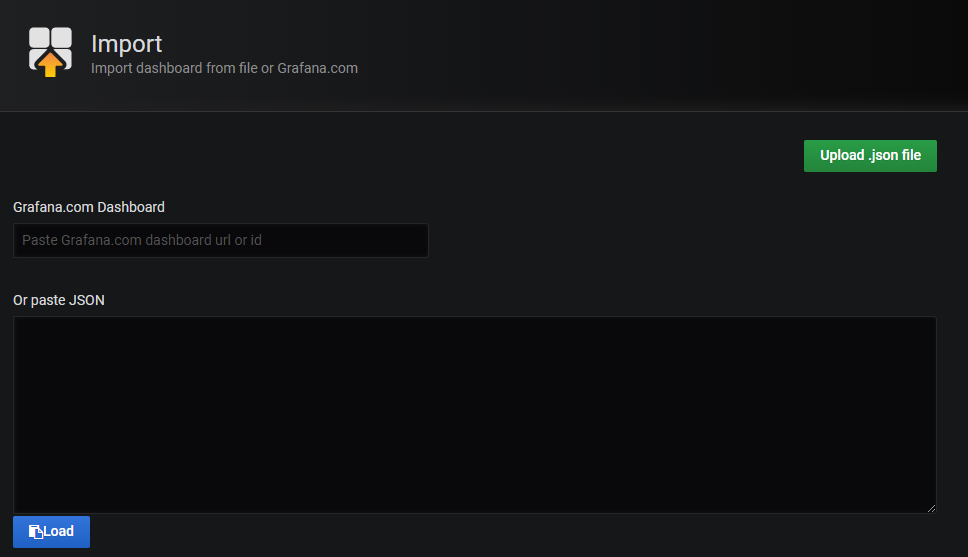

Import dashboards in Grafana

In the upper left corner of the Grafana home screen, click the “+” option, then “Import”.

You can now import Grafana dashboard templates from release assets for your Dapr version:

Find the dashboard that you imported and enjoy

Tip

Hover your mouse over the i in the corner to see the description of each chart:

References

- Dapr Observability

- Prometheus Installation

- Prometheus on Kubernetes

- Prometheus Query Language

- Supported Dapr metrics

1.2.4 - How-To: Set-up New Relic to collect and analyze metrics

Prerequisites

- Perpetually free New Relic account, 100 GB/month of free data ingest, 1 free full access user, unlimited free basic users

Background

New Relic offers a Prometheus OpenMetrics Integration.

This document explains how to install it in your cluster using a Helm chart (recommended).

Installation

Install Helm following the official instructions.

Add the New Relic official Helm chart repository following these instructions.

Run the following command to install the New Relic Prometheus OpenMetrics integration via Helm, replacing the placeholder value YOUR_LICENSE_KEY with your New Relic license key:

helm install nri-prometheus newrelic/nri-prometheus --set licenseKey=YOUR_LICENSE_KEY

View Metrics

Related Links/References

1.2.5 - How-To: Set up Azure Monitor to search logs and collect metrics

Prerequisites

Enable Prometheus metric scrape using config map

Make sure that Azure Monitor Agents (AMA) are running.

$ kubectl get pods -n kube-system
NAME                          READY   STATUS    RESTARTS   AGE
...
ama-logs-48kpv                2/2     Running   0          2d13h
ama-logs-mx24c                2/2     Running   0          2d13h
ama-logs-rs-f9bbb9898-vbt6k   1/1     Running   0          30h
ama-logs-sm2mz                2/2     Running   0          2d13h
ama-logs-z7p4c                2/2     Running   0          2d13h
...

Apply config map to enable Prometheus metrics endpoint scrape.

You can use azm-config-map.yaml to enable Prometheus metrics endpoint scrape.

If you installed Dapr to a different namespace, you need to change the monitor_kubernetes_pods_namespaces array values. For example:

...
prometheus-data-collection-settings: |-
  [prometheus_data_collection_settings.cluster]
    interval = "1m"
    monitor_kubernetes_pods = true
    monitor_kubernetes_pods_namespaces = ["dapr-system", "default"]
  [prometheus_data_collection_settings.node]
    interval = "1m"
...

Apply config map:

kubectl apply -f ./azm-config-map.yaml

Install Dapr with JSON formatted logs

Install Dapr with JSON-formatted logs enabled.

helm install dapr dapr/dapr --namespace dapr-system --set global.logAsJson=true

Enable JSON-formatted logs in the Dapr sidecar and add Prometheus annotations.

Note: The Azure Monitor Agents (AMA) send the metrics only if the Prometheus annotations are set.

Add dapr.io/log-as-json: "true" annotation to your deployment yaml.

Example:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: pythonapp
  namespace: default
  labels:
    app: python
spec:
  replicas: 1
  selector:
    matchLabels:
      app: python
  template:
    metadata:
      labels:
        app: python
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "pythonapp"
        dapr.io/log-as-json: "true"
        prometheus.io/scrape: "true"
        prometheus.io/port: "9090"
        prometheus.io/path: "/"
...
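Because the agents skip pods that lack the Prometheus annotations, it can help to check a manifest before applying it. The following is a minimal sketch, assuming the manifest has already been loaded into a plain Python dictionary; the function name `missing_annotations` and the `REQUIRED_ANNOTATIONS` set are hypothetical choices based on the example above, not part of Dapr or Azure Monitor.

```python
# Hypothetical set of annotations to check, taken from the example deployment.
REQUIRED_ANNOTATIONS = {
    "dapr.io/enabled": "true",
    "dapr.io/log-as-json": "true",
    "prometheus.io/scrape": "true",
}


def missing_annotations(deployment: dict) -> list:
    """Return the required annotation keys that are absent or have another value."""
    template_metadata = (
        deployment.get("spec", {}).get("template", {}).get("metadata", {})
    )
    annotations = template_metadata.get("annotations") or {}
    return sorted(
        key
        for key, expected in REQUIRED_ANNOTATIONS.items()
        if annotations.get(key) != expected
    )


# The pod template from the example deployment, as a dictionary.
deployment = {
    "spec": {
        "template": {
            "metadata": {
                "annotations": {
                    "dapr.io/enabled": "true",
                    "dapr.io/app-id": "pythonapp",
                    "dapr.io/log-as-json": "true",
                    "prometheus.io/scrape": "true",
                    "prometheus.io/port": "9090",
                    "prometheus.io/path": "/",
                }
            }
        }
    }
}

print(missing_annotations(deployment))
```

An empty list means every annotation in the hypothetical required set is present with the expected value.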

Search metrics and logs with Azure Monitor

Go to Azure Monitor in the Azure portal.

Search Dapr Logs.

Here is an example query that parses JSON-formatted logs and queries logs from Dapr system processes.

ContainerLog
| extend parsed=parse_json(LogEntry)
| project Time=todatetime(parsed['time']), app_id=parsed['app_id'], scope=parsed['scope'], level=parsed['level'], msg=parsed['msg'], type=parsed['type'], ver=parsed['ver'], instance=parsed['instance']
| where level != ""
| sort by Time
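To make the steps of the log query concrete, here is a rough Python sketch of the same pipeline: parse each entry as JSON, project the schema fields, drop rows without a level, and sort by time. The function name `search_dapr_logs` is hypothetical, and the handling of entries that are not JSON or have no parseable time is an assumption of this sketch, not a statement about how Azure Monitor evaluates the query.

```python
import json
from datetime import datetime, timezone

FIELDS = ("app_id", "scope", "level", "msg", "type", "ver", "instance")


def _parse_time(value):
    """Parse an ISO 8601 timestamp, returning None when it cannot be parsed."""
    if not isinstance(value, str):
        return None
    try:
        ts = datetime.fromisoformat(value.replace("Z", "+00:00"))
    except ValueError:
        return None
    # Treat timestamps without an offset as UTC so all rows are comparable.
    return ts if ts.tzinfo else ts.replace(tzinfo=timezone.utc)


def search_dapr_logs(log_entries):
    rows = []
    for entry in log_entries:
        try:
            parsed = json.loads(entry)            # extend parsed=parse_json(LogEntry)
        except json.JSONDecodeError:
            continue                              # assumption: skip non-JSON lines
        if not isinstance(parsed, dict):
            continue
        ts = _parse_time(parsed.get("time"))
        if ts is None or parsed.get("level", "") == "":   # where level != ""
            continue
        row = {"Time": ts}                        # project ...
        row.update({name: parsed.get(name) for name in FIELDS})
        rows.append(row)
    # Newest first, which is the default direction of the query's sort.
    rows.sort(key=lambda r: r["Time"], reverse=True)
    return rows


entries = [
    '{"instance":"dapr-pod-xxxx","level":"info","msg":"starting Dapr Runtime","scope":"dapr.runtime","time":"2022-11-01T17:09:45.788005Z","type":"log","ver":"1.9.0"}',
    "a plain-text line that is not JSON",
    '{"instance":"dapr-pod-xxxx","level":"info","msg":"log level set to: info","scope":"dapr.runtime","time":"2022-11-01T17:09:45.788075Z","type":"log","ver":"1.9.0"}',
]
for row in search_dapr_logs(entries):
    print(row["Time"].isoformat(), row["level"], row["msg"])
```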

Search Metrics.

This query retrieves the process_resident_memory_bytes Prometheus metric for Dapr system processes and renders a timechart.

InsightsMetrics
| where Namespace == "prometheus" and Name == "process_resident_memory_bytes"
| extend tags=parse_json(Tags)
| project TimeGenerated, Name, Val, app=tostring(tags['app'])
| summarize memInBytes=percentile(Val, 99) by bin(TimeGenerated, 1m), app
| where app startswith "dapr-"
| render timechart

References

1.3 - Logging

1.3.1 - Logs

Dapr writes structured logs to stdout, in either plain-text or JSON format. By default, all Dapr processes (the runtime, or sidecar, and all control plane services) write logs to the console (stdout) in plain text. To enable JSON-formatted logging, add the --log-as-json command flag when running Dapr processes.

Note

If you want to use a search engine such as Elastic Search or Azure Monitor to search the logs, it is strongly recommended to use JSON-formatted logs, which the log collector and search engine can parse using the built-in JSON parser.

Log schema

Dapr produces logs based on the following schema:

| Field | Description | Example |
|---|---|---|
| time | ISO8601 Timestamp | 2011-10-05T14:48:00.000Z |
| level | Log Level (info/warn/debug/error) | info |
| type | Log Type | log |
| msg | Log Message | hello dapr! |
| scope | Logging Scope | dapr.runtime |
| instance | Container Name | dapr-pod-xxxxx |
| app_id | Dapr App ID | dapr-app |
| ver | Dapr Runtime Version | 1.9.0 |

API logging may add other structured fields, as described in the documentation for API logging.
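The schema above maps naturally onto a small record type. The following is a minimal sketch, assuming JSON-formatted output; the `DaprLogRecord` class and `parse_dapr_log_line` function are hypothetical names for illustration, and `app_id` is treated as optional because not every line carries it. Fields outside the schema, such as those added by API logging, are ignored here.

```python
import json
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class DaprLogRecord:
    """One log line, with attributes named after the schema fields."""
    time: str
    level: str
    type: str
    msg: str
    scope: str
    instance: str
    ver: str
    app_id: Optional[str] = None


def parse_dapr_log_line(line: str) -> DaprLogRecord:
    """Parse one JSON-formatted Dapr log line, ignoring unknown fields."""
    data = json.loads(line)
    known = {f.name: data[f.name] for f in fields(DaprLogRecord) if f.name in data}
    return DaprLogRecord(**known)


sample = (
    '{"instance":"dapr-pod-xxxx","level":"info",'
    '"msg":"log level set to: info","scope":"dapr.runtime",'
    '"time":"2022-11-01T17:09:45.788075Z","type":"log","ver":"1.9.0"}'
)
record = parse_dapr_log_line(sample)
print(record.level, record.scope, record.msg)
```

A line that is missing one of the required schema fields raises a `TypeError` in this sketch, which makes malformed input easy to spot.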

Plain text and JSON formatted logs

- Plain-text log examples

time="2022-11-01T17:08:48.303776-07:00" level=info msg="starting Dapr Runtime -- version 1.9.0 -- commit v1.9.0-g5dfcf2e" instance=dapr-pod-xxxx scope=dapr.runtime type=log ver=1.9.0

time="2022-11-01T17:08:48.303913-07:00" level=info msg="log level set to: info" instance=dapr-pod-xxxx scope=dapr.runtime type=log ver=1.9.0

- JSON-formatted log examples

{"instance":"dapr-pod-xxxx","level":"info","msg":"starting Dapr Runtime -- version 1.9.0 -- commit v1.9.0-g5dfcf2e","scope":"dapr.runtime","time":"2022-11-01T17:09:45.788005Z","type":"log","ver":"1.9.0"}

{"instance":"dapr-pod-xxxx","level":"info","msg":"log level set to: info","scope":"dapr.runtime","time":"2022-11-01T17:09:45.788075Z","type":"log","ver":"1.9.0"}

Log formats

Dapr supports printing either plain-text, the default, or JSON-formatted logs.

To use JSON-formatted logs, you need to add additional configuration options when you install Dapr and when you deploy your apps. JSON-formatted logs are recommended because most log collectors and search engines can parse JSON more easily with built-in parsers.

Enabling JSON logging with the Dapr CLI

When using the Dapr CLI to run an application, pass the --log-as-json option to enable JSON-formatted logs, for example:

dapr run \
  --app-id orderprocessing \
  --resources-path ./components/ \
  --log-as-json \
  -- python3 OrderProcessingService.py

Enabling JSON logging in Kubernetes

The following steps describe how to configure JSON-formatted logs for Kubernetes.

Dapr control plane

All services in the Dapr control plane (such as operator, sentry, etc) support a --log-as-json option to enable JSON-formatted logging.

If you’re deploying Dapr to Kubernetes using a Helm chart, you can enable JSON-formatted logs for Dapr system services by passing the --set global.logAsJson=true option; for example:

helm upgrade --install dapr \
  dapr/dapr \
  --namespace dapr-system \
  --set global.logAsJson=true

Enable JSON-formatted logs for Dapr sidecars

You can enable JSON-formatted logs in Dapr sidecars by adding the dapr.io/log-as-json: "true" annotation to the deployment, for example:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: pythonapp
  labels:
    app: python
spec:
  selector:
    matchLabels:
      app: python
  template:
    metadata:
      labels:
        app: python
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "pythonapp"
        # This enables JSON-formatted logging
        dapr.io/log-as-json: "true"
...

API Logging

API logging enables you to see the API calls your application makes to the Dapr sidecar, to debug issues or monitor the behavior of your application. You can combine Dapr API logging with Dapr log events.

See configure and view Dapr Logs and configure and view Dapr API Logs for more information.

Log collectors

If you run Dapr in a Kubernetes cluster, Fluentd is a popular container log collector. You can use Fluentd with a JSON parser plugin to parse Dapr JSON-formatted logs. This how-to shows how to configure Fluentd in your cluster.

If you are using Azure Kubernetes Service, you can use the built-in agent to collect logs with Azure Monitor without needing to install Fluentd.

Search engines

If you use Fluentd, we recommend using Elastic Search and Kibana. This how-to shows how to set up Elastic Search and Kibana in your Kubernetes cluster.

If you are using Azure Kubernetes Service, you can use Azure Monitor for containers without installing any additional monitoring tools. Also read How to enable Azure Monitor for containers.

References

1.3.2 - How-To: Set up Fluentd, Elastic search and Kibana in Kubernetes

Prerequisites

Install Elastic search and Kibana

Create a Kubernetes namespace for monitoring tools

kubectl create namespace dapr-monitoring

Add the helm repo for Elastic Search

helm repo add elastic https://helm.elastic.co
helm repo update

Install Elastic Search using Helm

By default, the chart creates 3 replicas which must be on different nodes. If your cluster has fewer than 3 nodes, specify a smaller number of replicas. For example, this sets the number of replicas to 1:

helm install elasticsearch elastic/elasticsearch --version 7.17.3 -n dapr-monitoring --set replicas=1

Otherwise:

helm install elasticsearch elastic/elasticsearch --version 7.17.3 -n dapr-monitoring

If you are using minikube or simply want to disable persistent volumes for development purposes, you can do so by using the following command:

helm install elasticsearch elastic/elasticsearch --version 7.17.3 -n dapr-monitoring --set persistence.enabled=false,replicas=1

Install Kibana

helm install kibana elastic/kibana --version 7.17.3 -n dapr-monitoring

Ensure that Elastic Search and Kibana are running in your Kubernetes cluster

$ kubectl get pods -n dapr-monitoring
NAME                            READY   STATUS    RESTARTS   AGE
elasticsearch-master-0          1/1     Running   0          6m58s
kibana-kibana-95bc54b89-zqdrk   1/1     Running   0          4m21s

Install Fluentd

Install config map and Fluentd as a daemonset

Download these config files:

Note: If you already have Fluentd running in your cluster, please enable the nested JSON parser so that it can parse JSON-formatted logs from Dapr.

Apply the configurations to your cluster:

kubectl apply -f ./fluentd-config-map.yaml
kubectl apply -f ./fluentd-dapr-with-rbac.yaml

Ensure that Fluentd is running as a daemonset. The number of Fluentd instances should be the same as the number of cluster nodes. In the example below, there is only one node in the cluster:

$ kubectl get pods -n kube-system -w
NAME                       READY   STATUS    RESTARTS   AGE
coredns-6955765f44-cxjxk   1/1     Running   0          4m41s
coredns-6955765f44-jlskv   1/1     Running   0          4m41s
etcd-m01                   1/1     Running   0          4m48s
fluentd-sdrld              1/1     Running   0          14s
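The check that the number of Fluentd instances matches the number of nodes can be scripted. This is a minimal sketch, assuming you have captured the text output of kubectl get pods in a string; the function name `count_running_pods` is hypothetical, and the `fluentd-` name prefix is taken from the sample output above.

```python
def count_running_pods(kubectl_output: str, name_prefix: str) -> int:
    """Count pods in Running status whose name starts with name_prefix."""
    count = 0
    # Skip the header row (NAME READY STATUS RESTARTS AGE).
    for line in kubectl_output.strip().splitlines()[1:]:
        columns = line.split()
        if len(columns) < 3:
            continue
        name, status = columns[0], columns[2]
        if name.startswith(name_prefix) and status == "Running":
            count += 1
    return count


SAMPLE_OUTPUT = """\
NAME                       READY   STATUS    RESTARTS   AGE
coredns-6955765f44-cxjxk   1/1     Running   0          4m41s
coredns-6955765f44-jlskv   1/1     Running   0          4m41s
etcd-m01                   1/1     Running   0          4m48s
fluentd-sdrld              1/1     Running   0          14s
"""

node_count = 1  # the example cluster has a single node
fluentd_pods = count_running_pods(SAMPLE_OUTPUT, "fluentd-")
print("fluentd pods:", fluentd_pods, "nodes:", node_count)
```

If the two numbers differ, the daemonset has not yet scheduled a Fluentd pod on every node.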

Install Dapr with JSON formatted logs

Install Dapr with JSON-formatted logs enabled

helm repo add dapr https://dapr.github.io/helm-charts/
helm repo update
helm install dapr dapr/dapr --namespace dapr-system --set global.logAsJson=true

Enable JSON-formatted logs in the Dapr sidecar

Add the dapr.io/log-as-json: "true" annotation to your deployment yaml. For example:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: pythonapp
  namespace: default
  labels:
    app: python
spec:
  replicas: 1
  selector:
    matchLabels:
      app: python
  template:
    metadata:
      labels:
        app: python
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "pythonapp"
        dapr.io/log-as-json: "true"
...

Search logs

Note: Elastic Search takes some time to index the logs that Fluentd sends.

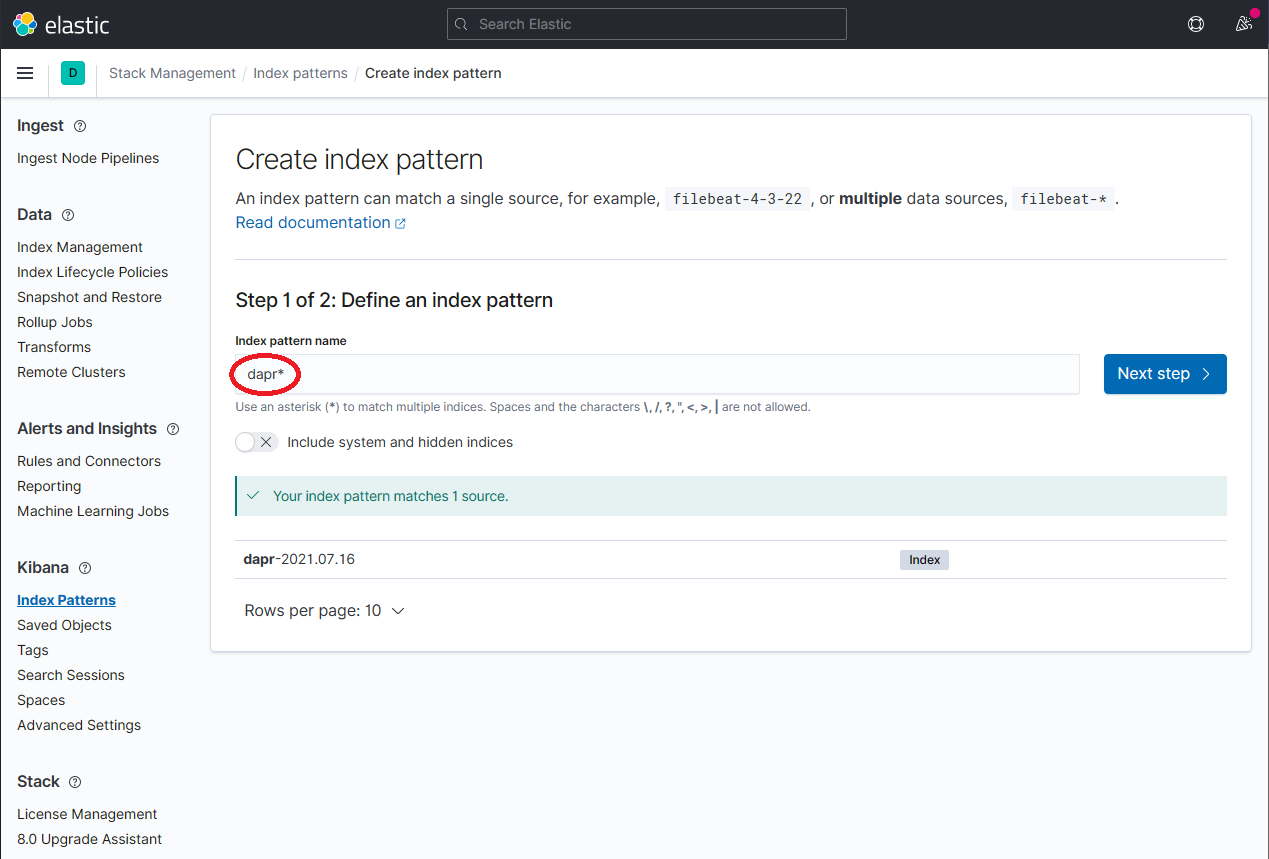

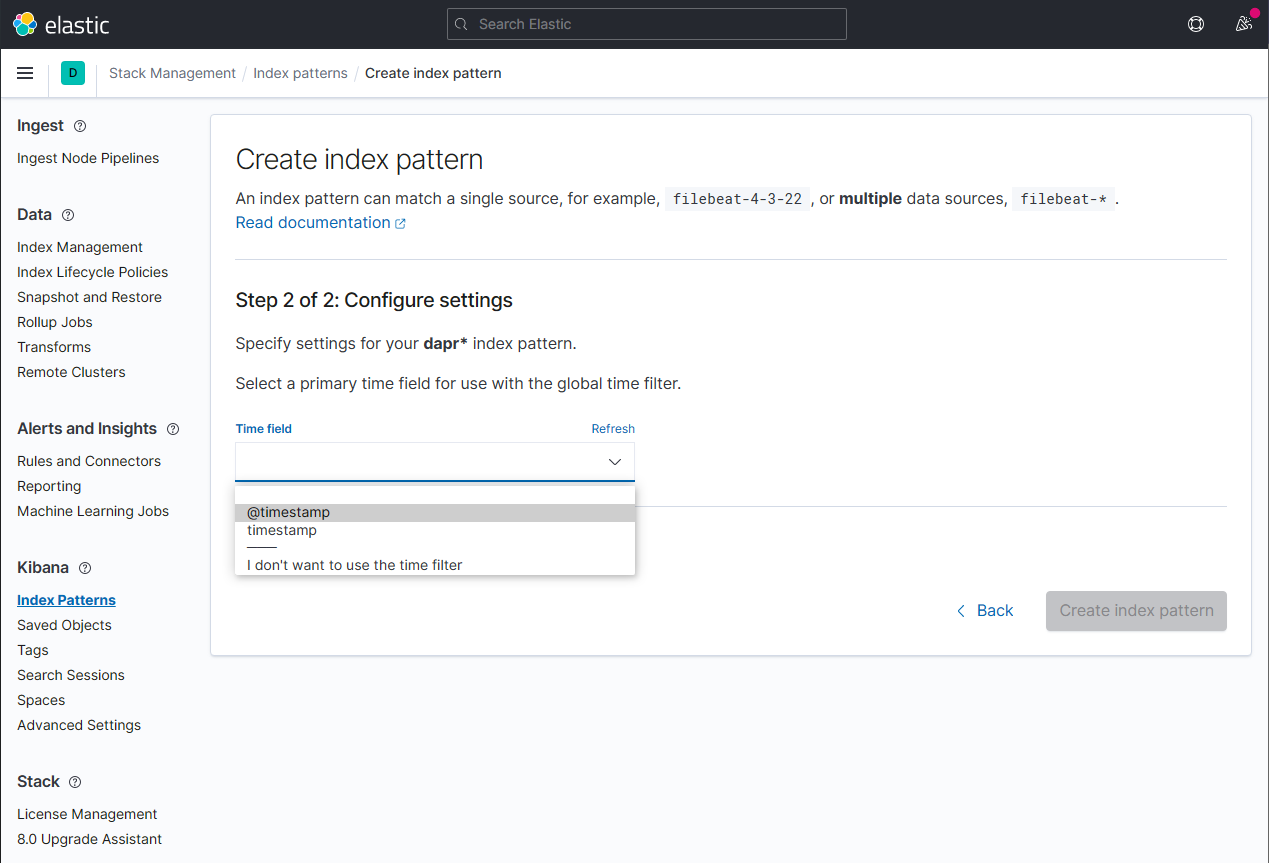

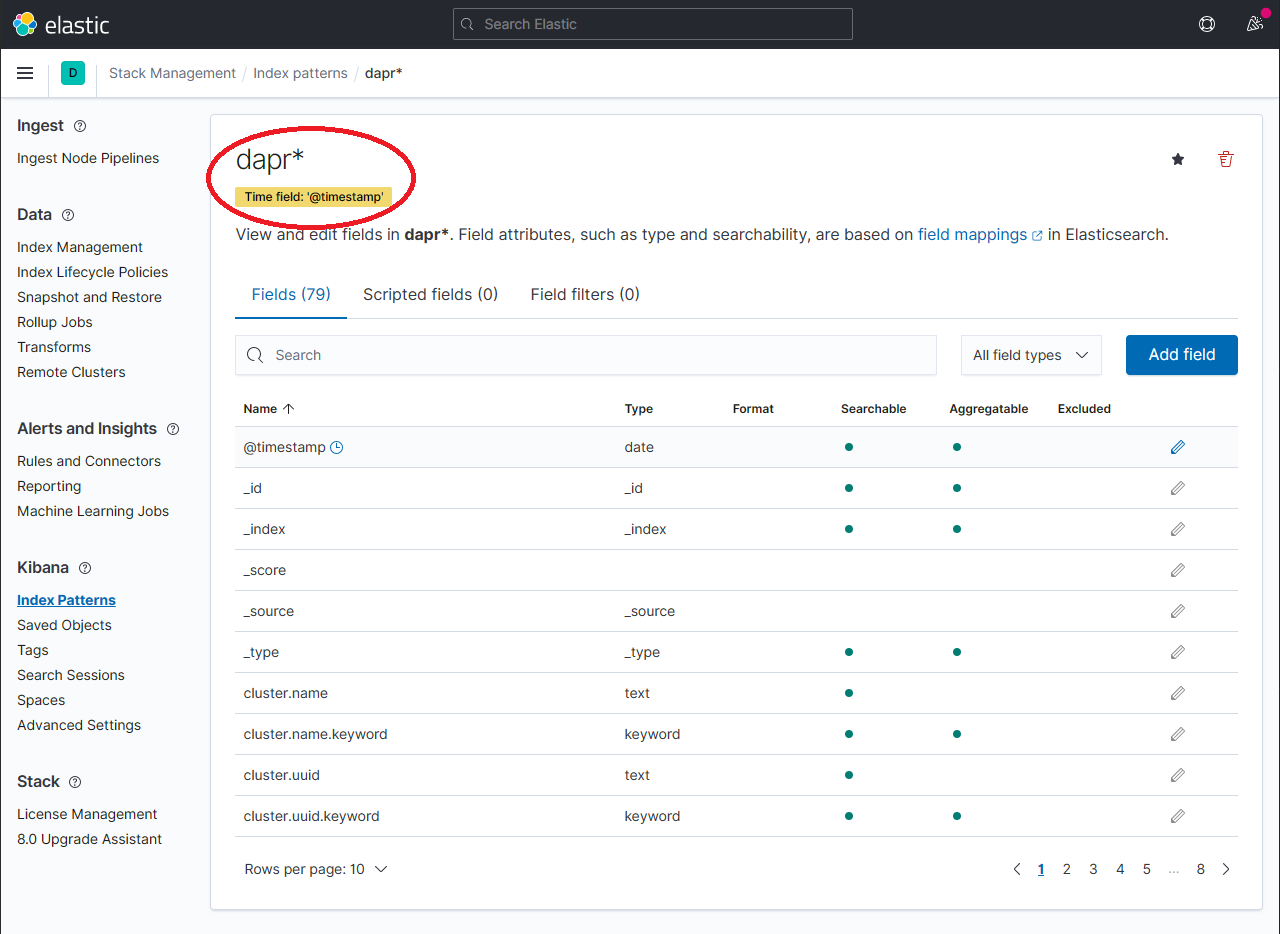

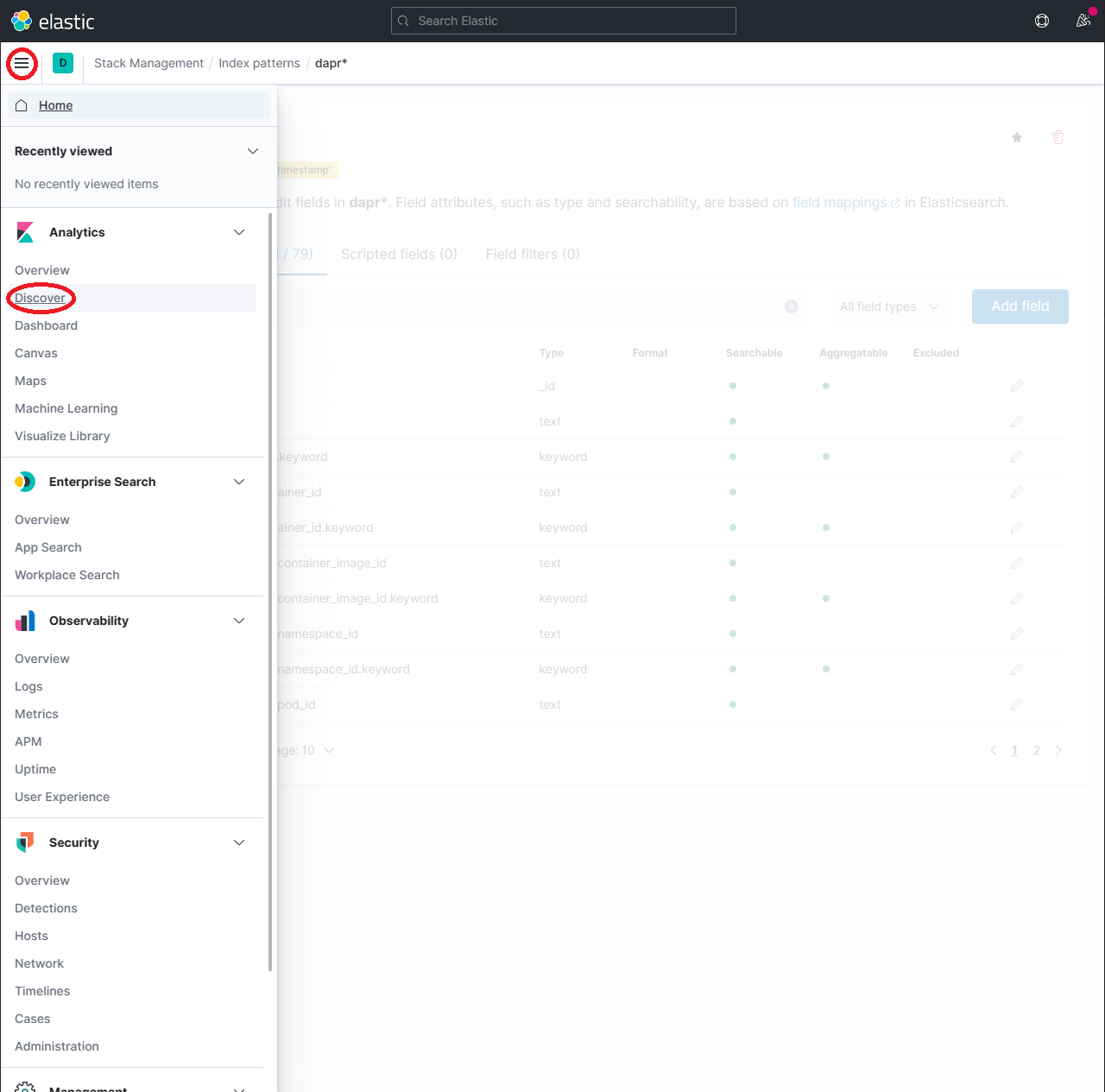

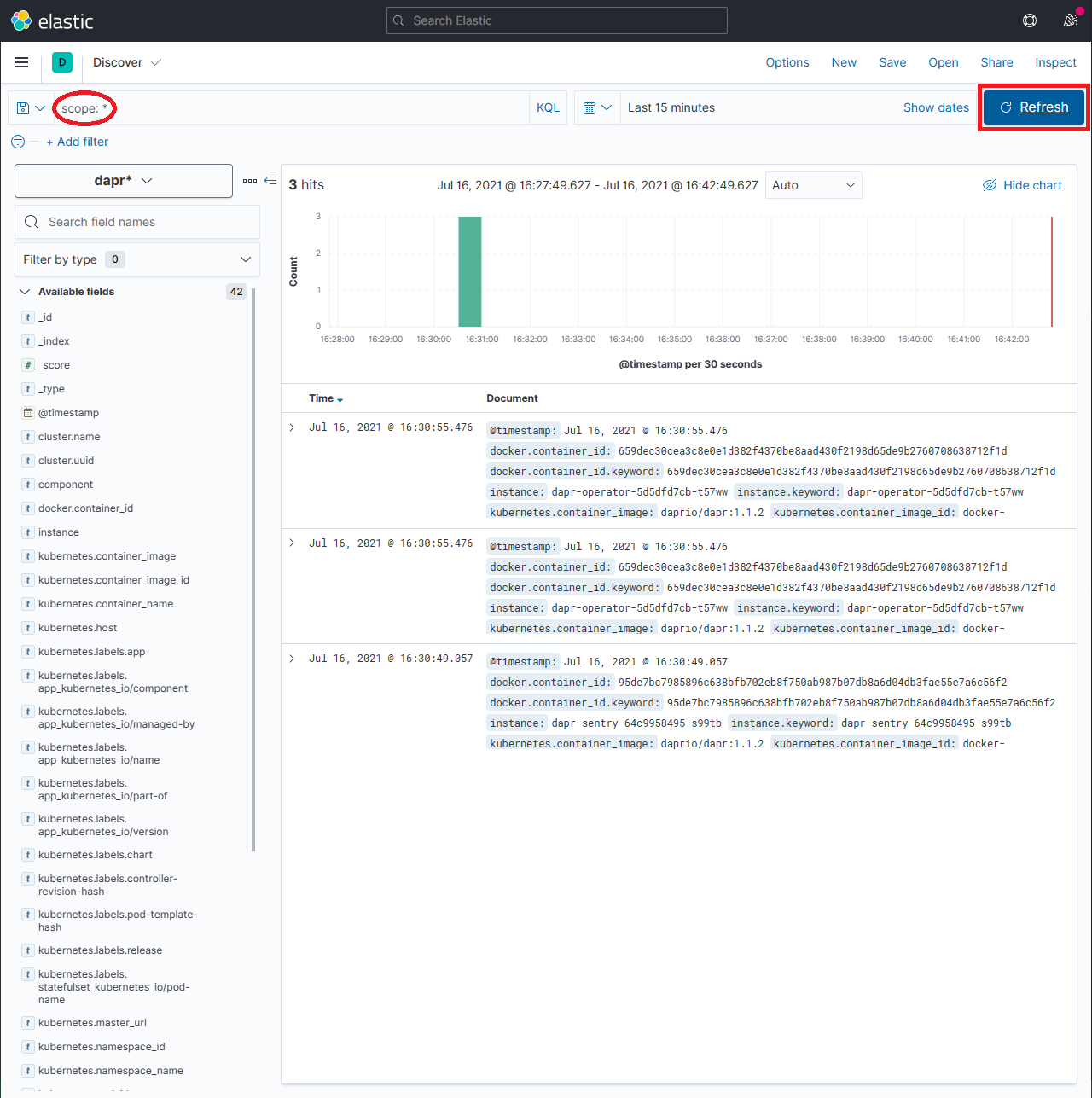

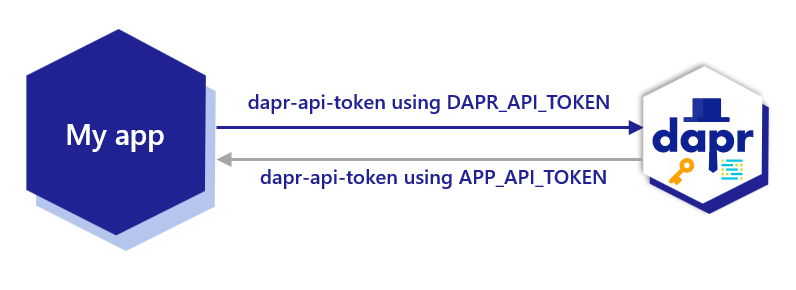

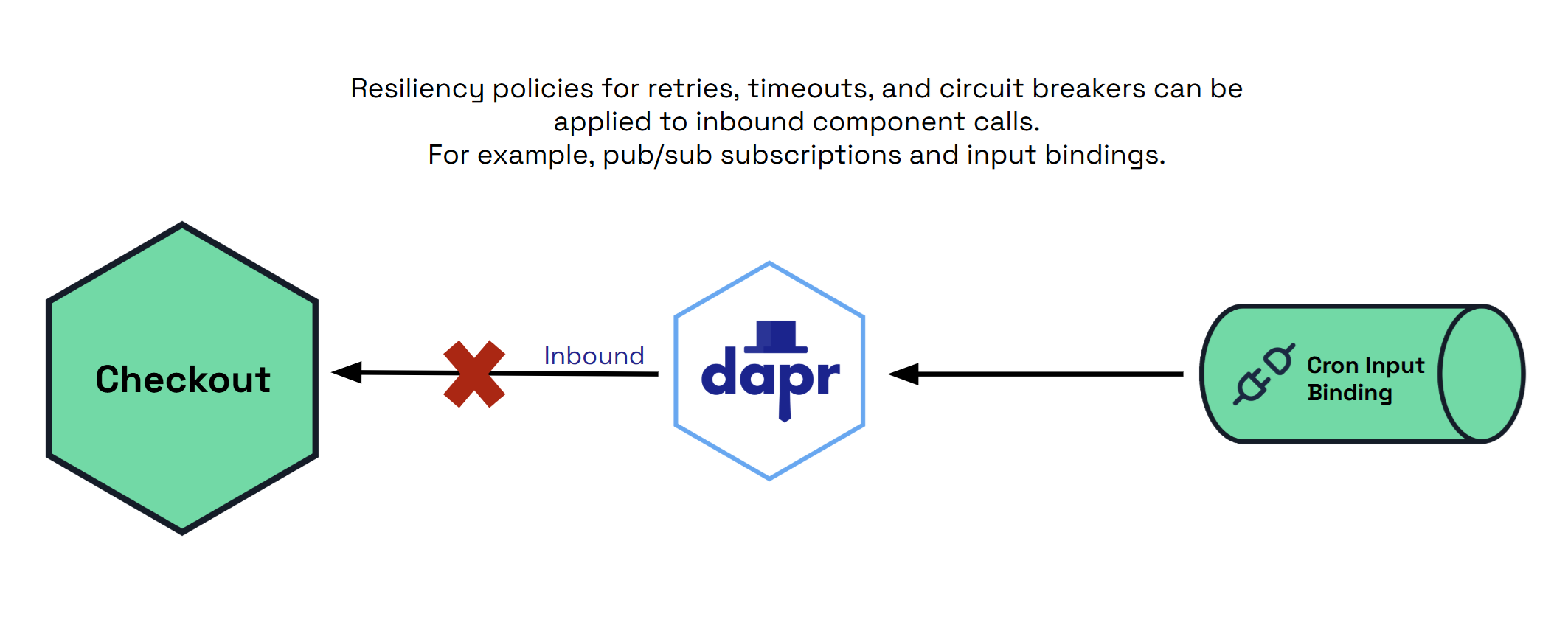

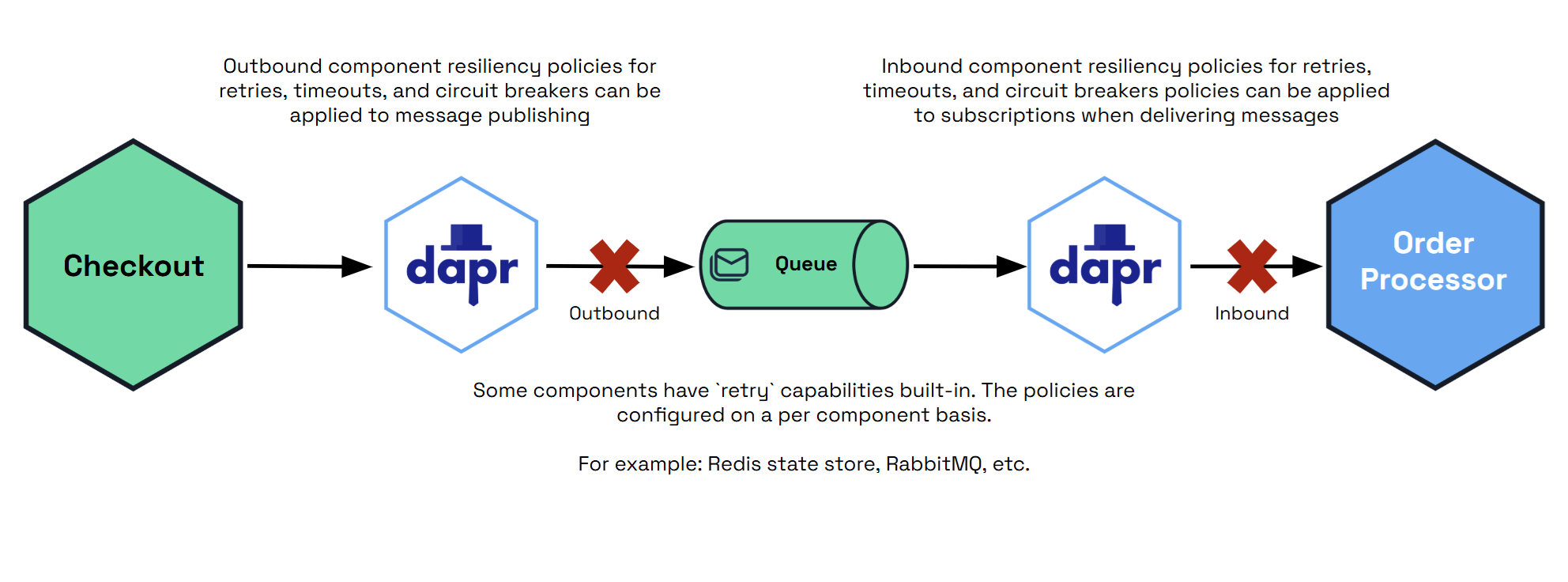

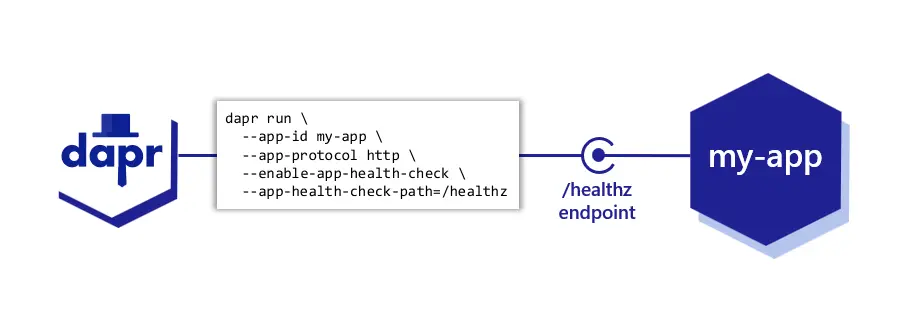

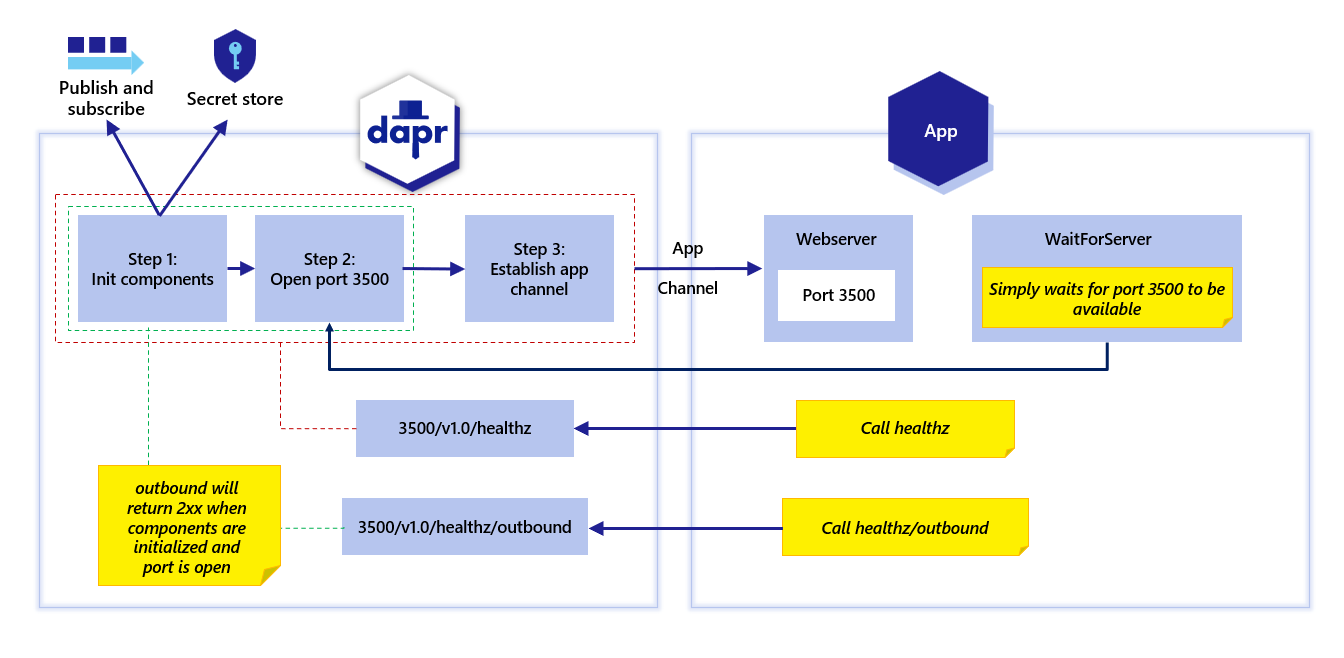

Port-forward from localhost to