
Local development

- 1: IDE support

- 1.1: Visual Studio Code integration with Dapr

- 1.1.1: Dapr Visual Studio Code extension overview

- 1.1.2: How-To: Debug Dapr applications with Visual Studio Code

- 1.1.3: Developing Dapr applications with Dev Containers

- 1.2: IntelliJ

- 2: Multi-App Run

- 3: How to: Use the gRPC interface in your Dapr application

- 4: Serialization in Dapr's SDKs

1 - IDE support

1.1 - Visual Studio Code integration with Dapr

1.1.1 - Dapr Visual Studio Code extension overview

Dapr offers a preview Visual Studio Code extension for local development. It provides a variety of features for managing and debugging your Dapr applications in all supported Dapr languages: .NET, Go, PHP, Python, and Java.

Features

Scaffold Dapr debugging tasks

The Dapr extension helps you debug your applications with Dapr using Visual Studio Code’s built-in debugging capability.

Using the Dapr: Scaffold Dapr Tasks Command Palette operation, you can update your existing tasks.json and launch.json files to launch and configure the Dapr sidecar when you begin debugging.

- Make sure you have a launch configuration set for your app.

- Open the Command Palette with Ctrl+Shift+P.

- Select Dapr: Scaffold Dapr Tasks.

- Run your app and the Dapr sidecar with F5 or via the Run view.

Scaffold Dapr components

When adding Dapr to your application, you may want to have a dedicated components directory, separate from the default components initialized as part of dapr init.

To create a dedicated components folder with the default statestore, pubsub, and zipkin components, use the Dapr: Scaffold Dapr Components Command Palette operation.

- Open your application directory in Visual Studio Code.

- Open the Command Palette with Ctrl+Shift+P.

- Select Dapr: Scaffold Dapr Components.

- Run your application with dapr run --resources-path ./components -- ...

View running Dapr applications

The Applications view shows Dapr applications running locally on your machine.

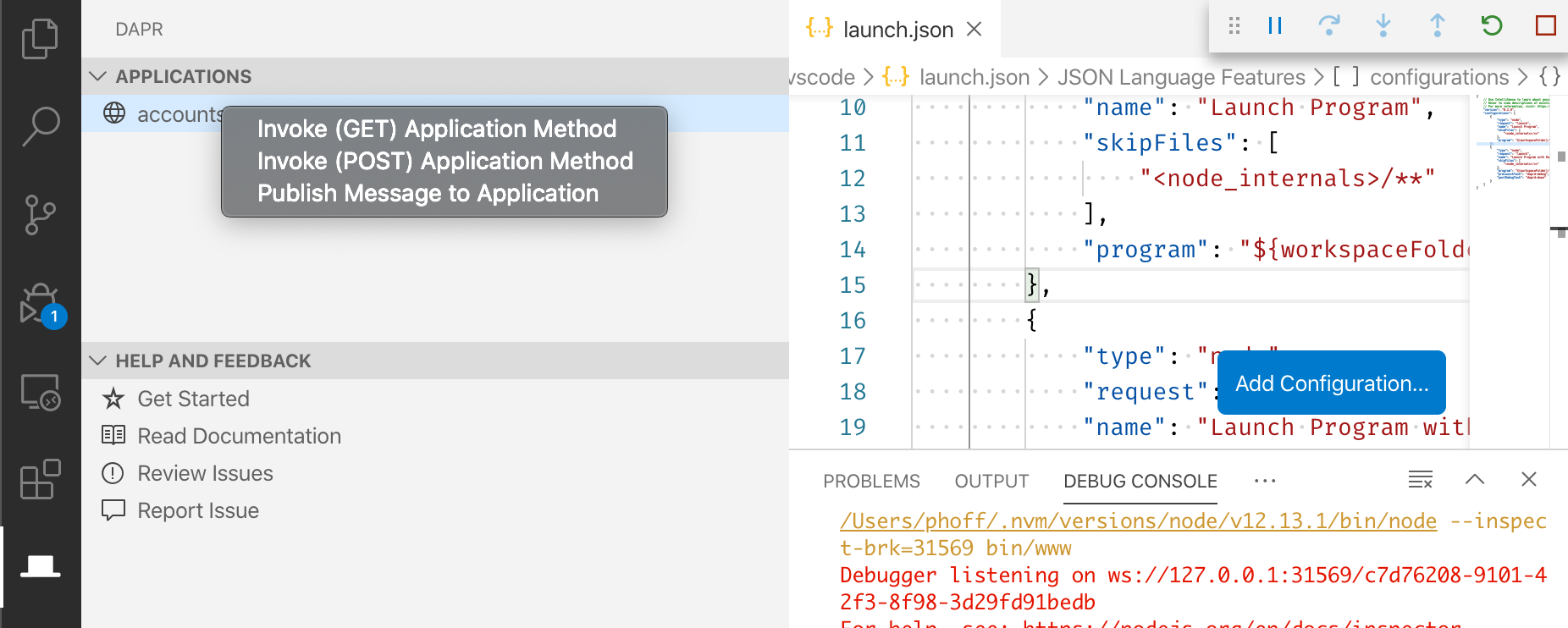

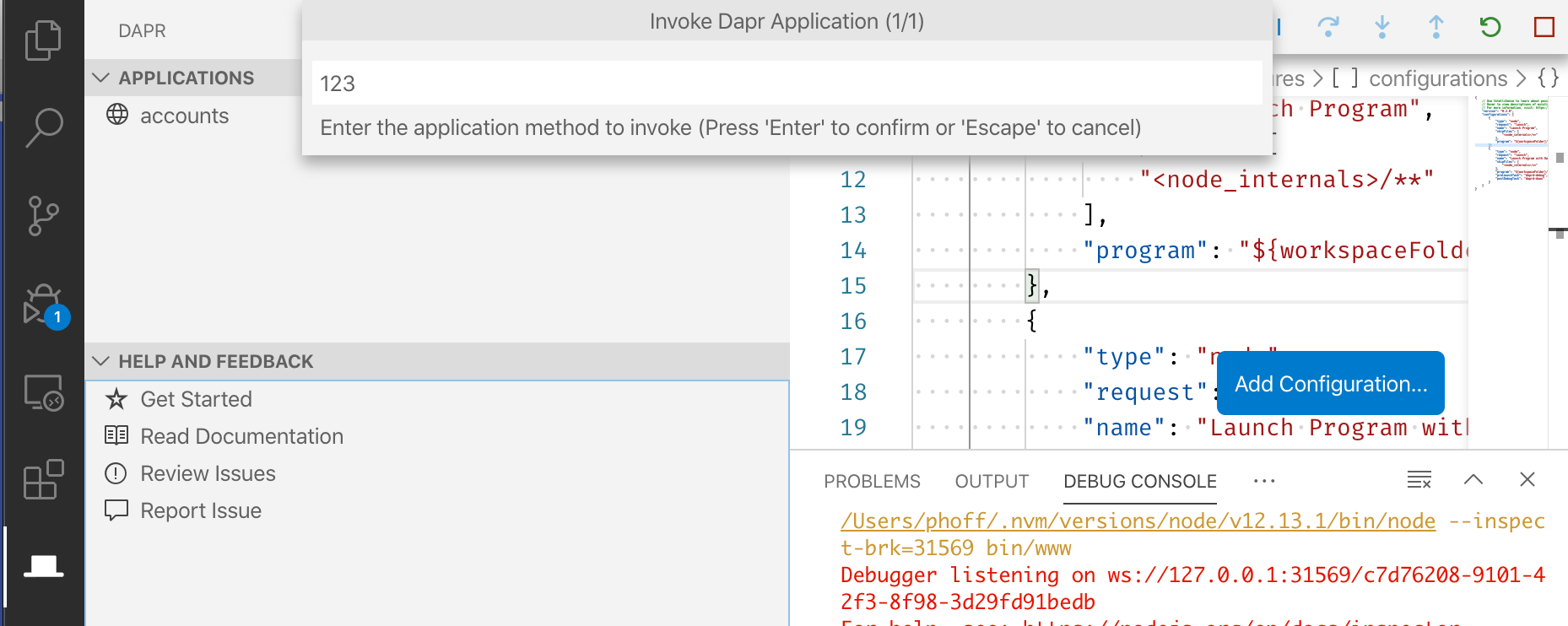

Invoke Dapr applications

Within the Applications view, users can right-click and invoke Dapr apps via GET or POST methods, optionally specifying a payload.

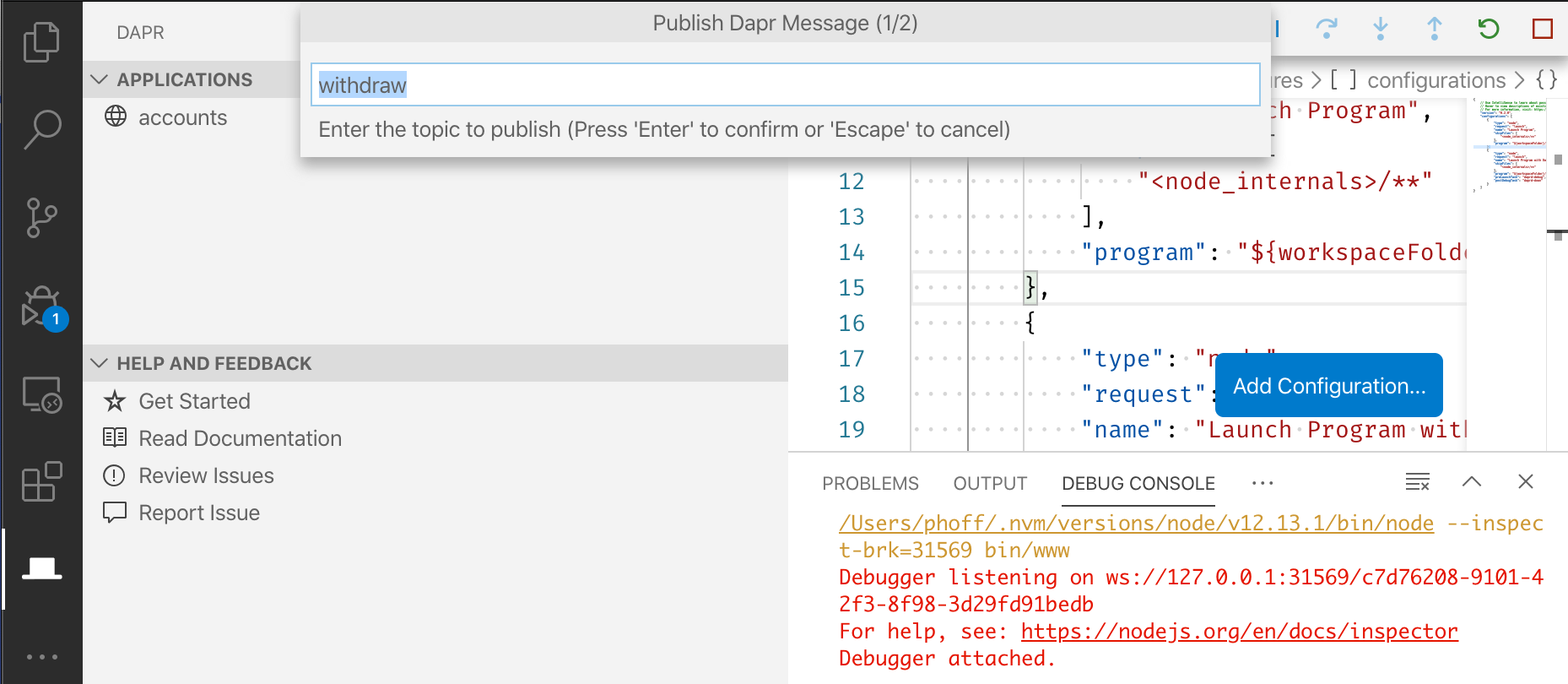
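The Applications view sends this request for you, and this page does not describe how the extension issues it internally. As a rough equivalent, the sketch below uses only the Python standard library to build a request against the Dapr sidecar's HTTP service invocation endpoint (`/v1.0/invoke/<app-id>/method/<method>`). The sidecar port 3500, the app ID `nodeapp`, and the method name `neworder` are illustrative assumptions. Passing a payload switches the call from GET to POST.

```python
import json
import urllib.request
from typing import Optional


def build_invoke_request(app_id: str, method: str, dapr_http_port: int = 3500,
                         payload: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a Dapr HTTP service invocation request.

    Hypothetical helper: with no payload the request is a GET; with a payload
    it is a POST carrying the payload as a JSON body.
    """
    url = f"http://localhost:{dapr_http_port}/v1.0/invoke/{app_id}/method/{method}"
    if payload is None:
        return urllib.request.Request(url, method="GET")
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    # Illustrative app ID, method, and payload.
    req = build_invoke_request("nodeapp", "neworder", payload={"data": {"orderId": "42"}})
    print(req.get_method(), req.full_url)
    # With a sidecar running, the request could be sent with:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.status)
```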

Publish events to Dapr applications

Within the Applications view, users can right-click and publish messages to a running Dapr application, specifying the topic and payload.

Users can also publish messages to all running applications.
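You can sketch a comparable publish against the sidecar's HTTP publish endpoint (`/v1.0/publish/<pubsub-name>/<topic>`). The sketch assumes the `pubsub` component created by `dapr init` and a hypothetical `orders` topic. It illustrates the underlying API only and does not describe how the extension itself publishes.

```python
import json
import urllib.request


def build_publish_request(pubsub_name: str, topic: str, payload: dict,
                          dapr_http_port: int = 3500) -> urllib.request.Request:
    """Build (but do not send) a POST to the Dapr HTTP publish endpoint.

    Hypothetical helper for illustration; the payload is sent as JSON.
    """
    url = f"http://localhost:{dapr_http_port}/v1.0/publish/{pubsub_name}/{topic}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    # "pubsub" is the component created by `dapr init`; "orders" is a hypothetical topic.
    req = build_publish_request("pubsub", "orders", {"orderId": 1})
    print(req.get_method(), req.full_url)
```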

Additional resources

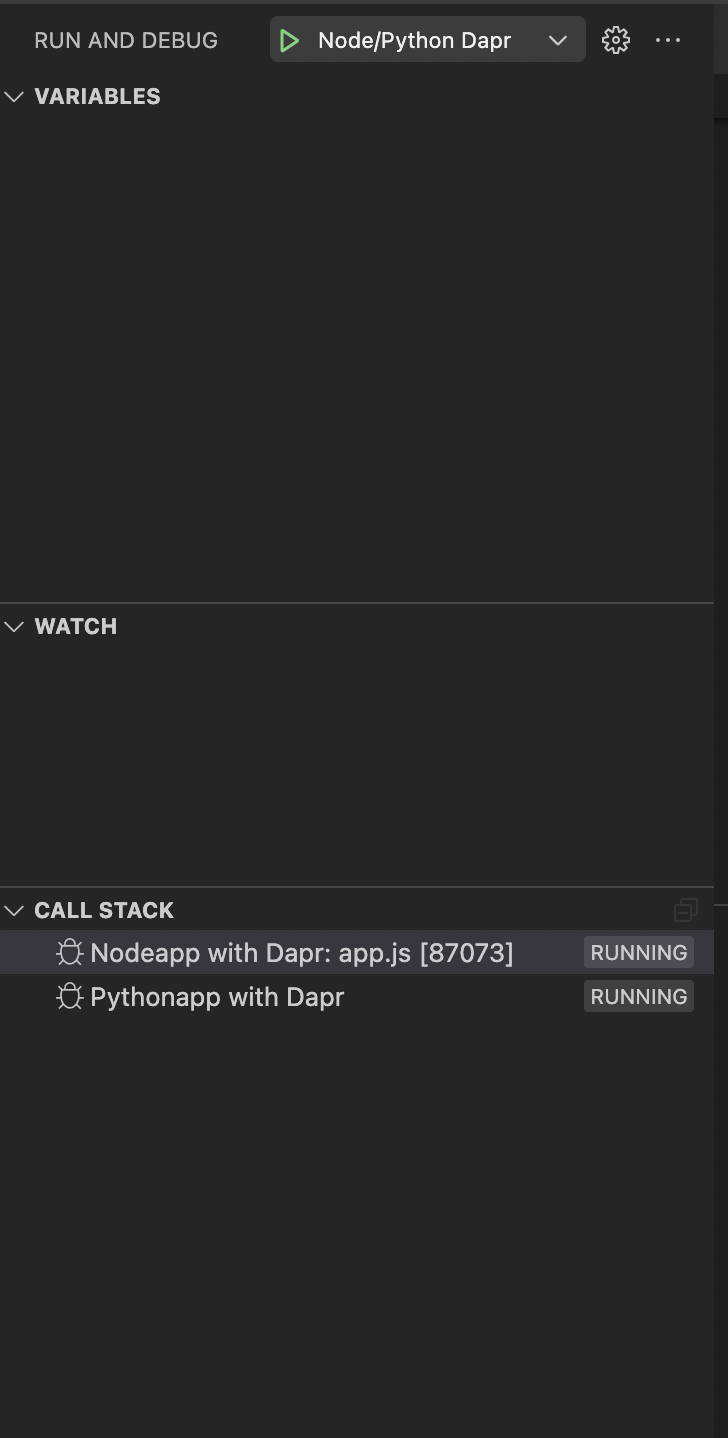

Debugging multiple Dapr applications at the same time

Using the VS Code extension, you can debug multiple Dapr applications at the same time with Multi-target debugging.

Community call demo

Watch this video on how to use the Dapr VS Code extension:

1.1.2 - How-To: Debug Dapr applications with Visual Studio Code

Manual debugging

When developing Dapr applications, you typically use the Dapr CLI to start your daprized service similar to this:

dapr run --app-id nodeapp --app-port 3000 --dapr-http-port 3500 -- node app.js

One approach to attaching the debugger to your service is to first run daprd with the correct arguments from the command line and then launch your code and attach the debugger. While this is a perfectly acceptable solution, it does require a few extra steps and some instruction to developers who might want to clone your repo and hit the “play” button to begin debugging.

If your application is a collection of microservices, each with a Dapr sidecar, it is useful to debug them together in Visual Studio Code. This page uses the hello world quickstart to show how to configure VS Code to debug multiple Dapr applications at once.

Prerequisites

- Install the Dapr extension. You will be using the tasks it offers later on.

- Optionally clone the hello world quickstart

Step 1: Configure launch.json

The file .vscode/launch.json contains launch configurations for a VS Code debug run. This file defines what will launch and how it is configured when the user begins debugging. Configurations are available for each programming language in the Visual Studio Code marketplace.

Scaffold debugging configuration

The Dapr VSCode extension offers built-in scaffolding to generate launch.json and tasks.json for you.

In the case of the hello world quickstart, two applications are launched, each with its own Dapr sidecar. One is written in Node.js, and the other in Python. You'll notice each configuration contains a daprd run preLaunchTask and a daprd stop postDebugTask.

{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "pwa-node",
      "request": "launch",
      "name": "Nodeapp with Dapr",
      "skipFiles": [
        "<node_internals>/**"
      ],
      "program": "${workspaceFolder}/node/app.js",
      "preLaunchTask": "daprd-debug-node",
      "postDebugTask": "daprd-down-node"
    },
    {
      "type": "python",
      "request": "launch",
      "name": "Pythonapp with Dapr",
      "program": "${workspaceFolder}/python/app.py",
      "console": "integratedTerminal",
      "preLaunchTask": "daprd-debug-python",
      "postDebugTask": "daprd-down-python"
    }
  ]
}

If you're using ports other than the default ports baked into the code, set the DAPR_HTTP_PORT and DAPR_GRPC_PORT environment variables in the launch.json debug configuration. These values must match the httpPort and grpcPort of the corresponding daprd task in tasks.json. For example, launch.json:

{
  // Set the non-default HTTP and gRPC ports
  "env": {
    "DAPR_HTTP_PORT": "3502",
    "DAPR_GRPC_PORT": "50002"
  },
}

tasks.json:

{
  // Match with ports set in launch.json
  "httpPort": 3502,
  "grpcPort": 50002
}

Each configuration requires a request, type and name. These parameters help VSCode identify the task configurations in the .vscode/tasks.json files.

- type defines the language used. Depending on the language, it might require an extension found in the marketplace, such as the Python Extension.

- name is a unique name for the configuration. This is used for compound configurations when calling multiple configurations in your project.

- ${workspaceFolder} is a VS Code variable reference. This is the path to the workspace opened in VS Code.

- The preLaunchTask and postDebugTask parameters refer to the program configurations run before and after launching the application. See step 2 on how to configure these.

For more information on VSCode debugging parameters see VS Code launch attributes.
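The ports are configured in two files, so they can drift apart. The following sketch is a hypothetical helper, not part of the Dapr extension, that compares a launch configuration's `env` block with its daprd task. It takes dictionaries you have already parsed, because launch.json and tasks.json may contain comments that Python's `json` module rejects.

```python
from typing import Dict, List


def check_port_match(launch_config: dict, daprd_task: dict) -> List[str]:
    """Report differences between a launch configuration's env and its daprd task.

    Hypothetical helper. launch.json stores env values as strings while
    tasks.json uses numbers, so both sides are compared as strings. A port the
    task leaves unset is skipped; a port the task sets but env omits is reported.
    """
    env: Dict[str, str] = launch_config.get("env", {})
    problems: List[str] = []
    for env_key, task_key in (("DAPR_HTTP_PORT", "httpPort"), ("DAPR_GRPC_PORT", "grpcPort")):
        if task_key not in daprd_task:
            continue
        expected = str(daprd_task[task_key])
        actual = env.get(env_key)
        if actual != expected:
            problems.append(f"{env_key}={actual!r} but {task_key}={expected}")
    return problems


if __name__ == "__main__":
    launch = {"name": "Nodeapp with Dapr",
              "env": {"DAPR_HTTP_PORT": "3502", "DAPR_GRPC_PORT": "50002"}}
    task = {"label": "daprd-debug-node", "type": "daprd", "httpPort": 3502, "grpcPort": 50002}
    print(check_port_match(launch, task) or "ports match")
```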

Step 2: Configure tasks.json

For each task referenced in .vscode/launch.json, a corresponding task definition must exist in .vscode/tasks.json.

For the quickstart, each service needs a task to launch a Dapr sidecar with the daprd type, and a task to stop the sidecar with the daprd-down type. The parameters appId, httpPort, metricsPort, label and type are required. Additional optional parameters are available; see the Daprd parameter table below.

{
  "version": "2.0.0",
  "tasks": [
    {
      "label": "daprd-debug-node",
      "type": "daprd",
      "appId": "nodeapp",
      "appPort": 3000,
      "httpPort": 3500,
      "metricsPort": 9090
    },
    {
      "label": "daprd-down-node",
      "type": "daprd-down",
      "appId": "nodeapp"
    },
    {
      "label": "daprd-debug-python",
      "type": "daprd",
      "appId": "pythonapp",
      "httpPort": 53109,
      "grpcPort": 53317,
      "metricsPort": 9091
    },
    {
      "label": "daprd-down-python",
      "type": "daprd-down",
      "appId": "pythonapp"
    }
  ]
}
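You can check the cross-references between the two files in the same way. This hypothetical sketch verifies two things: every `preLaunchTask` and `postDebugTask` names an existing task label, and each `daprd` task sets the required parameters listed above. Like the port check, it works on dictionaries you have already parsed.

```python
from typing import List

# Required daprd task parameters, as listed in the text above.
REQUIRED_DAPRD_KEYS = ("label", "type", "appId", "httpPort", "metricsPort")


def validate_debug_setup(launch: dict, tasks: dict) -> List[str]:
    """Cross-check parsed launch.json and tasks.json contents (hypothetical helper)."""
    errors: List[str] = []
    by_label = {task.get("label"): task for task in tasks.get("tasks", [])}
    # Every pre/post task referenced by a launch configuration must exist.
    for config in launch.get("configurations", []):
        for key in ("preLaunchTask", "postDebugTask"):
            label = config.get(key)
            if label is not None and label not in by_label:
                errors.append(f"{config.get('name')}: {key} '{label}' not found in tasks.json")
    # Every daprd task must set the required parameters.
    for label, task in by_label.items():
        if task.get("type") == "daprd":
            missing = [key for key in REQUIRED_DAPRD_KEYS if key not in task]
            if missing:
                errors.append(f"task '{label}' is missing {', '.join(missing)}")
    return errors


if __name__ == "__main__":
    launch = {"configurations": [{"name": "Nodeapp with Dapr",
                                  "preLaunchTask": "daprd-debug-node",
                                  "postDebugTask": "daprd-down-node"}]}
    tasks = {"tasks": [{"label": "daprd-debug-node", "type": "daprd", "appId": "nodeapp",
                        "appPort": 3000, "httpPort": 3500, "metricsPort": 9090},
                       {"label": "daprd-down-node", "type": "daprd-down", "appId": "nodeapp"}]}
    print(validate_debug_setup(launch, tasks) or "configuration looks consistent")
```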

Step 3: Configure a compound launch in launch.json

A compound launch configuration can be defined in .vscode/launch.json. It is a set of two or more launch configurations that are launched in parallel. Optionally, a preLaunchTask can be specified and run before the individual debug sessions are started.

For this example the compound configuration is:

{
  "version": "0.2.0",
  "configurations": [...],
  "compounds": [
    {
      "name": "Node/Python Dapr",
      "configurations": ["Nodeapp with Dapr","Pythonapp with Dapr"]
    }
  ]
}
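A typo in a compound's configuration list is easy to miss. The hypothetical sketch below reports compound entries that don't match any configuration `name`, as well as duplicate names. It assumes compound entries are plain strings, as in the example above, although VS Code also accepts object entries in multi-root workspaces.

```python
from collections import Counter
from typing import List


def validate_compounds(launch: dict) -> List[str]:
    """Check that compounds reference existing, uniquely named configurations.

    Hypothetical helper operating on a parsed launch.json dictionary.
    """
    names = [config.get("name") for config in launch.get("configurations", [])]
    errors = [f"duplicate configuration name '{name}'"
              for name, count in Counter(names).items() if count > 1]
    known = set(names)
    for compound in launch.get("compounds", []):
        # Assumes entries are plain strings, as in the example above.
        for ref in compound.get("configurations", []):
            if ref not in known:
                errors.append(f"compound '{compound.get('name')}' references "
                              f"unknown configuration '{ref}'")
    return errors


if __name__ == "__main__":
    launch = {
        "configurations": [{"name": "Nodeapp with Dapr"}, {"name": "Pythonapp with Dapr"}],
        "compounds": [{"name": "Node/Python Dapr",
                       "configurations": ["Nodeapp with Dapr", "Pythonapp with Dapr"]}],
    }
    print(validate_compounds(launch) or "compounds look consistent")
```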

Step 4: Launch your debugging session

You can now run the applications in debug mode by selecting the compound configuration you defined in the previous step in the VS Code debugger.

You are now debugging multiple applications with Dapr!

Daprd parameter table

Below are the supported parameters for VS Code tasks. These parameters are equivalent to daprd arguments as detailed in this reference:

| Parameter | Description | Required | Example |
|---|---|---|---|
| allowedOrigins | Allowed HTTP origins (default "*") | No | "allowedOrigins": "*" |
| appId | The unique ID of the application. Used for service discovery, state encapsulation and the pub/sub consumer ID | Yes | "appId": "divideapp" |
| appMaxConcurrency | Limit the concurrency of your application. A valid value is any number larger than 0; the default, -1, means no limit | No | "appMaxConcurrency": -1 |
| appPort | This parameter tells Dapr which port your application is listening on | Yes | "appPort": 4000 |
| appProtocol | Tells Dapr which protocol your application is using. Valid options are http, grpc, https, grpcs, h2c. Default is http. | No | "appProtocol": "http" |
| args | Sets a list of arguments to pass on to the Dapr app | No | "args": [] |
| componentsPath | Path for components directory. If empty, components will not be loaded. | No | "componentsPath": "./components" |
| config | Tells Dapr which Configuration resource to use | No | "config": "./config" |
| controlPlaneAddress | Address for a Dapr control plane | No | "controlPlaneAddress": "http://localhost:1366/" |
| enableProfiling | Enable profiling | No | "enableProfiling": false |
| enableMtls | Enables automatic mTLS for daprd to daprd communication channels | No | "enableMtls": false |
| grpcPort | gRPC port for the Dapr API to listen on (default "50001") | Yes, if multiple apps | "grpcPort": 50004 |
| httpPort | The HTTP port for the Dapr API | Yes | "httpPort": 3502 |
| internalGrpcPort | gRPC port for the Dapr Internal API to listen on | No | "internalGrpcPort": 50001 |
| logAsJson | Setting this parameter to true outputs logs in JSON format. Default is false | No | "logAsJson": false |
| logLevel | Sets the log level for the Dapr sidecar. Allowed values are debug, info, warn, error. Default is info | No | "logLevel": "debug" |
| metricsPort | Sets the port for the sidecar metrics server. Default is 9090 | Yes, if multiple apps | "metricsPort": 9093 |
| mode | Runtime mode for Dapr (default "standalone") | No | "mode": "standalone" |
| placementHostAddress | Addresses for Dapr Actor Placement servers | No | "placementHostAddress": "http://localhost:1313/" |
| profilePort | The port for the profile server (default "7777") | No | "profilePort": 7777 |
| sentryAddress | Address for the Sentry CA service | No | "sentryAddress": "http://localhost:1345/" |
| type | Tells VS Code it will be a daprd task type | Yes | "type": "daprd" |
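Several parameters are marked "Yes, if multiple apps" because sidecars started side by side must not share ports. The following hypothetical sketch flags ports claimed by more than one daprd task. When a task omits `grpcPort` or `metricsPort`, it fills in the defaults from the table (50001 and 9090). It only inspects the listed keys and does not check whether a port is actually free on your machine.

```python
from collections import defaultdict
from typing import Dict, List

# Defaults from the parameter table, used when a task omits the key.
PORT_DEFAULTS = {"grpcPort": 50001, "metricsPort": 9090}
PORT_KEYS = ("appPort", "httpPort", "grpcPort", "metricsPort", "internalGrpcPort")


def find_port_conflicts(tasks: List[dict]) -> Dict[int, List[str]]:
    """Return {port: [claimants]} for ports claimed more than once (hypothetical helper)."""
    claims: Dict[int, List[str]] = defaultdict(list)
    for task in tasks:
        if task.get("type") != "daprd":
            continue  # daprd-down tasks don't open ports
        for key in PORT_KEYS:
            port = task.get(key, PORT_DEFAULTS.get(key))
            if port is not None:
                claims[int(port)].append(f"{task.get('appId')}.{key}")
    return {port: owners for port, owners in claims.items() if len(owners) > 1}


if __name__ == "__main__":
    tasks = [
        {"type": "daprd", "appId": "nodeapp", "appPort": 3000, "httpPort": 3500},
        {"type": "daprd", "appId": "pythonapp", "httpPort": 3501},
    ]
    # Both tasks fall back to the default gRPC and metrics ports, so both clash.
    print(find_port_conflicts(tasks))
```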

Related Links

1.1.3 - Developing Dapr applications with Dev Containers

The Visual Studio Code Dev Containers extension lets you use a self-contained Docker container as a complete development environment, without installing any additional packages, libraries, or utilities in your local filesystem.

Dapr has pre-built Dev Containers for C# and JavaScript/TypeScript; you can pick the one of your choice for a ready-made environment. Note that these pre-built containers automatically update to the latest Dapr release.

We also publish a Dev Container feature that installs the Dapr CLI inside any Dev Container.

Set up the development environment

Prerequisites

Add the Dapr CLI using a Dev Container feature

You can install the Dapr CLI inside any Dev Container using Dev Container features.

To do that, edit your devcontainer.json and add two objects in the "features" section:

"features": {
  // Install the Dapr CLI
  "ghcr.io/dapr/cli/dapr-cli:0": {},
  // Enable Docker (via Docker-in-Docker)
  "ghcr.io/devcontainers/features/docker-in-docker:2": {},
  // Alternatively, use Docker-outside-of-Docker (uses Docker in the host)
  //"ghcr.io/devcontainers/features/docker-outside-of-docker:1": {},
}

After saving the JSON file and (re-)building the container that hosts your development environment, you will have the Dapr CLI (and Docker) available, and can install Dapr by running this command in the container:

dapr init

Example: create a Java Dev Container for Dapr

This is an example of creating a Dev Container for creating Java apps that use Dapr, based on the official Java 17 Dev Container image.

Place this in a file called .devcontainer/devcontainer.json in your project:

// For format details, see https://aka.ms/devcontainer.json. For config options, see the
// README at: https://github.com/devcontainers/templates/tree/main/src/java
{
  "name": "Java",
  // Or use a Dockerfile or Docker Compose file. More info: https://containers.dev/guide/dockerfile
  "image": "mcr.microsoft.com/devcontainers/java:0-17",
  "features": {
    "ghcr.io/devcontainers/features/java:1": {
      "version": "none",
      "installMaven": "false",
      "installGradle": "false"
    },
    // Install the Dapr CLI
    "ghcr.io/dapr/cli/dapr-cli:0": {},
    // Enable Docker (via Docker-in-Docker)
    "ghcr.io/devcontainers/features/docker-in-docker:2": {},
    // Alternatively, use Docker-outside-of-Docker (uses Docker in the host)
    //"ghcr.io/devcontainers/features/docker-outside-of-docker:1": {},
  }
  // Use 'forwardPorts' to make a list of ports inside the container available locally.
  // "forwardPorts": [],
  // Use 'postCreateCommand' to run commands after the container is created.
  // "postCreateCommand": "java -version",
  // Configure tool-specific properties.
  // "customizations": {},
  // Uncomment to connect as root instead. More info: https://aka.ms/dev-containers-non-root.
  // "remoteUser": "root"
}

Then, using the VS Code command palette (CTRL + SHIFT + P or CMD + SHIFT + P on Mac), select Dev Containers: Rebuild and Reopen in Container.

Use a pre-built Dev Container (C# and JavaScript/TypeScript)

- Open your application workspace in VS Code

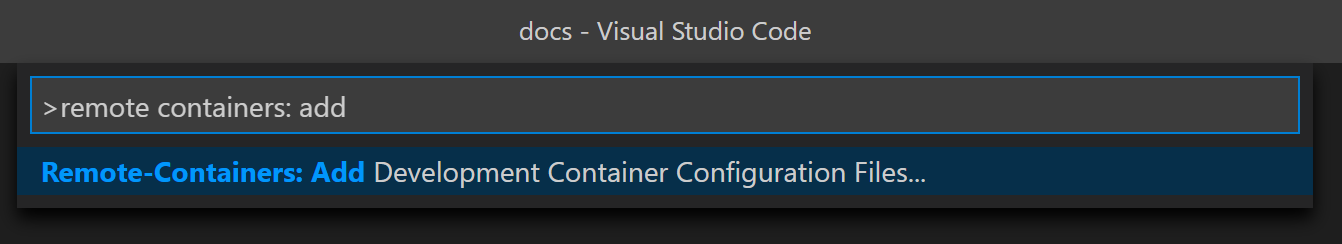

- In the command command palette (

CTRL + SHIFT + PorCMD + SHIFT + Pon Mac) type and selectDev Containers: Add Development Container Configuration Files...

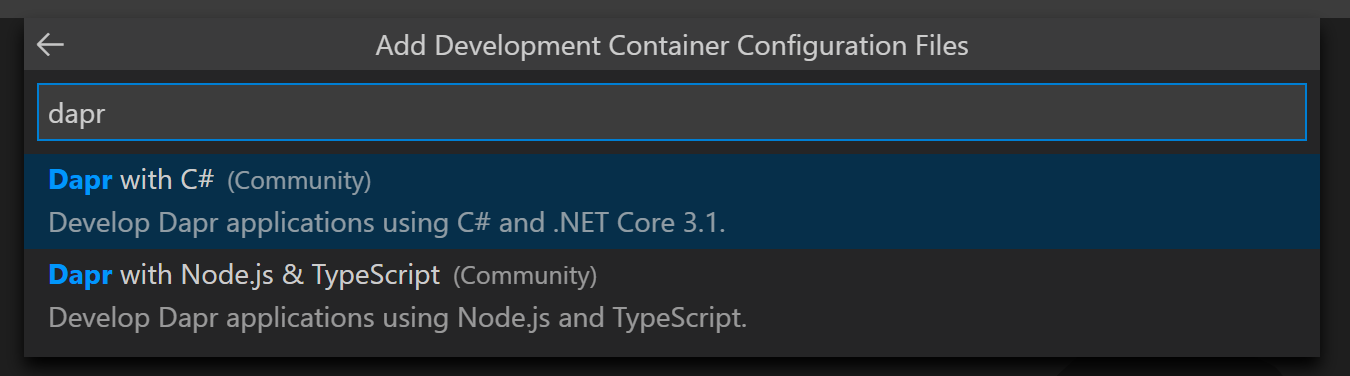

- Type

daprto filter the list to available Dapr remote containers and choose the language container that matches your application. Note you may need to selectShow All Definitions...

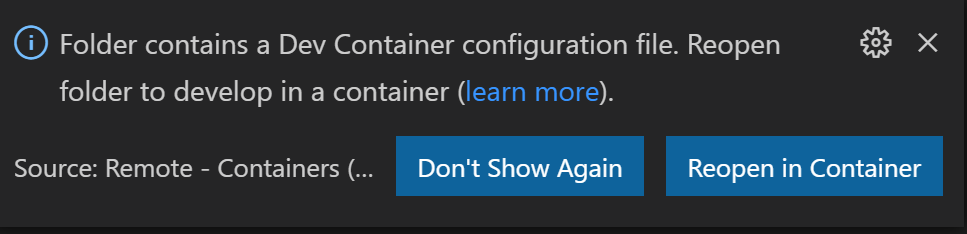

- Follow the prompts to reopen your workspace in the container.

Example

Watch this video on how to use the Dapr Dev Containers with your application.

1.2 - IntelliJ

When developing Dapr applications, you typically use the Dapr CLI to start your ‘Daprized’ service similar to this:

dapr run --app-id nodeapp --app-port 3000 --dapr-http-port 3500 -- node app.js

This uses the default component YAML files (created on dapr init) so that your service can interact with the local Redis container. This is great when you are just getting started, but what if you want to attach a debugger to your service and step through the code? This is where you can use the Dapr CLI without invoking an app.

One approach to attaching the debugger to your service is to first run dapr run -- from the command line and then launch your code and attach the debugger. While this is a perfectly acceptable solution, it does require a few extra steps (like switching between terminal and IDE) and some instruction to developers who might want to clone your repo and hit the “play” button to begin debugging.

This document explains how to use Dapr directly from IntelliJ. As a prerequisite, make sure you have initialized Dapr's dev environment via dapr init.

Let’s get started!

Add Dapr as an ‘External Tool’

First, quit IntelliJ before modifying the configurations file directly.

IntelliJ configuration file location

For versions 2020.1 and above, the configuration files for tools should be located in:

- Windows: %USERPROFILE%\AppData\Roaming\JetBrains\IntelliJIdea2020.1\tools\

- Linux: $HOME/.config/JetBrains/IntelliJIdea2020.1/tools/

- macOS: ~/Library/Application\ Support/JetBrains/IntelliJIdea2020.1/tools/

The configuration file location is different for version 2019.3 or prior. See "Change in IntelliJ configuration directory location" under Related links for more details.

Change the version of IntelliJ in the path if needed.

Create or edit the file External\ Tools.xml in the tools directory listed above for your OS (that is, <CONFIG PATH>/tools/External\ Tools.xml, where <CONFIG PATH> is the OS-dependent IntelliJ configuration directory).

Add a new <tool></tool> entry:

<toolSet name="External Tools">
  ...
  <!-- 1. Each tool has its own app-id, so create one per application to be debugged -->
  <tool name="dapr for DemoService in examples" description="Dapr sidecar" showInMainMenu="false" showInEditor="false" showInProject="false" showInSearchPopup="false" disabled="false" useConsole="true" showConsoleOnStdOut="true" showConsoleOnStdErr="true" synchronizeAfterRun="true">
    <exec>
      <!-- 2. For Linux or MacOS use: /usr/local/bin/dapr -->
      <option name="COMMAND" value="C:\dapr\dapr.exe" />
      <!-- 3. Choose app, http and grpc ports that do not conflict with other daprd command entries (placement address should not change). -->
      <option name="PARAMETERS" value="run --app-id demoservice --app-port 3000 --dapr-http-port 3005 --dapr-grpc-port 52000" />
      <!-- 4. Use the folder where the `components` folder is located -->
      <option name="WORKING_DIRECTORY" value="C:/Code/dapr/java-sdk/examples" />
    </exec>
  </tool>
  ...
</toolSet>

Optionally, you may also create a new entry for a sidecar tool that can be reused across many projects:

<toolSet name="External Tools">
  ...
  <!-- 1. Reusable entry for apps with app port. -->
  <tool name="dapr with app-port" description="Dapr sidecar" showInMainMenu="false" showInEditor="false" showInProject="false" showInSearchPopup="false" disabled="false" useConsole="true" showConsoleOnStdOut="true" showConsoleOnStdErr="true" synchronizeAfterRun="true">
    <exec>
      <!-- 2. For Linux or MacOS use: /usr/local/bin/dapr -->
      <option name="COMMAND" value="c:\dapr\dapr.exe" />
      <!-- 3. Prompts user 4 times (in order): app id, app port, Dapr's http port, Dapr's grpc port. -->
      <option name="PARAMETERS" value="run --app-id $Prompt$ --app-port $Prompt$ --dapr-http-port $Prompt$ --dapr-grpc-port $Prompt$" />
      <!-- 4. Use the folder where the `components` folder is located -->
      <option name="WORKING_DIRECTORY" value="$ProjectFileDir$" />
    </exec>
  </tool>
  <!-- 1. Reusable entry for apps without app port. -->
  <tool name="dapr without app-port" description="Dapr sidecar" showInMainMenu="false" showInEditor="false" showInProject="false" showInSearchPopup="false" disabled="false" useConsole="true" showConsoleOnStdOut="true" showConsoleOnStdErr="true" synchronizeAfterRun="true">
    <exec>
      <!-- 2. For Linux or MacOS use: /usr/local/bin/dapr -->
      <option name="COMMAND" value="c:\dapr\dapr.exe" />
      <!-- 3. Prompts user 3 times (in order): app id, Dapr's http port, Dapr's grpc port. -->
      <option name="PARAMETERS" value="run --app-id $Prompt$ --dapr-http-port $Prompt$ --dapr-grpc-port $Prompt$" />
      <!-- 4. Use the folder where the `components` folder is located -->
      <option name="WORKING_DIRECTORY" value="$ProjectFileDir$" />
    </exec>
  </tool>
  ...
</toolSet>

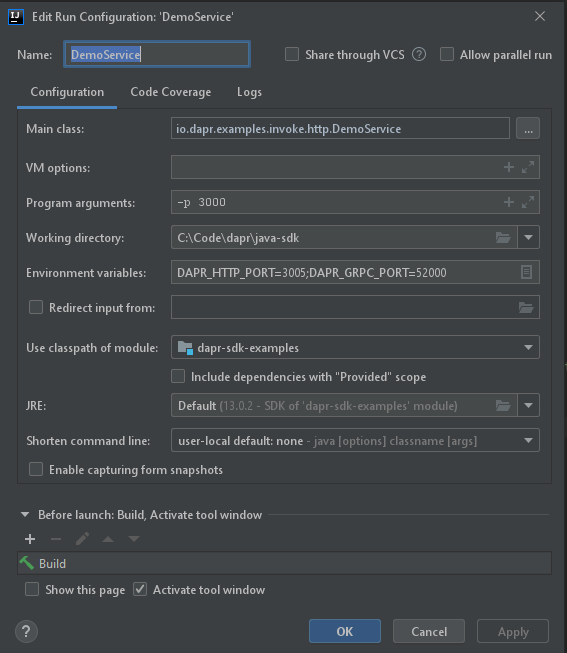

Create or edit run configuration

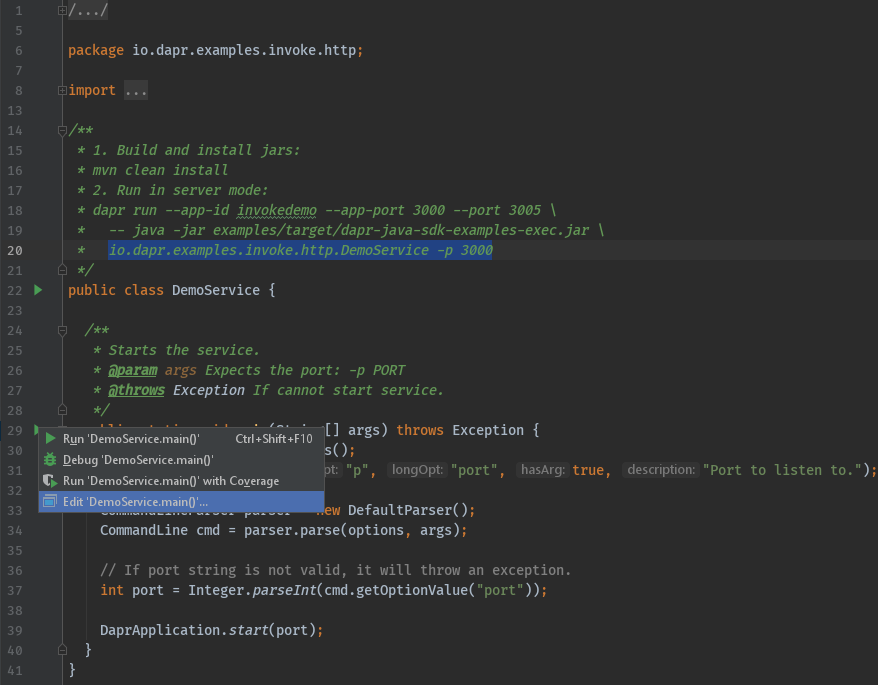

Now, create or edit the run configuration for the application to be debugged. It can be found in the menu next to the main() function.

Now, add the program arguments and environment variables. These need to match the ports defined in the entry in ‘External Tool’ above.

- Command line arguments for this example: -p 3000

- Environment variables for this example: DAPR_HTTP_PORT=3005;DAPR_GRPC_PORT=52000

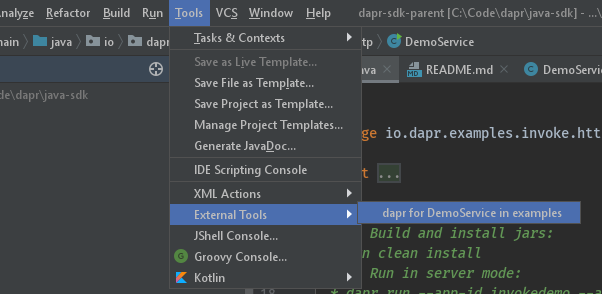

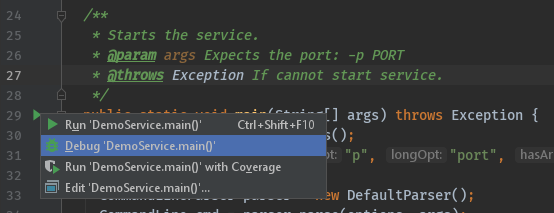
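On the application side, Dapr SDKs typically read these variables to find the sidecar. The sketch below assumes fallbacks of 3500 (HTTP) and 50001 (gRPC) when the variables are unset. `parse_env_string` is a hypothetical helper that turns IntelliJ's `KEY=VALUE;KEY=VALUE` field into a dictionary, which can be handy when scripting the same setup.

```python
import os
from typing import Dict, Mapping, Tuple


def parse_env_string(spec: str) -> Dict[str, str]:
    """Parse an IntelliJ-style 'KEY=VALUE;KEY=VALUE' string, skipping malformed items.

    Hypothetical helper for illustration.
    """
    result: Dict[str, str] = {}
    for item in spec.split(";"):
        if "=" in item:
            key, value = item.split("=", 1)
            result[key.strip()] = value.strip()
    return result


def resolve_dapr_ports(env: Mapping[str, str] = os.environ) -> Tuple[int, int]:
    """Return (http_port, grpc_port), assuming fallbacks of 3500 and 50001."""
    http_port = int(env.get("DAPR_HTTP_PORT", "3500"))
    grpc_port = int(env.get("DAPR_GRPC_PORT", "50001"))
    return http_port, grpc_port


if __name__ == "__main__":
    print(resolve_dapr_ports(parse_env_string("DAPR_HTTP_PORT=3005;DAPR_GRPC_PORT=52000")))
```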

Start debugging

Once the one-time config above is done, there are two steps required to debug a Java application with Dapr in IntelliJ:

- Start dapr via Tools -> External Tool in IntelliJ.

- Start your application in debug mode.

Wrapping up

After debugging, make sure you stop both dapr and your app in IntelliJ.

Note: Since you launched the service(s) using the dapr run CLI command, the dapr list command will show runs from IntelliJ in the list of apps that are currently running with Dapr.

Happy debugging!

Related links

- Change in IntelliJ configuration directory location

2 - Multi-App Run

2.1 - Multi-App Run overview

Note

Multi-App Run for Kubernetes is currently a preview feature.

Let's say you want to run several applications locally to test them together, similar to a production scenario. Multi-App Run allows you to start and stop a set of applications simultaneously, either:

- Locally/self-hosted with processes, or

- By building container images and deploying to a Kubernetes cluster

  - You can use a local Kubernetes cluster (KiND) or one deployed to a cloud provider (AKS, EKS, and GKE).

The Multi-App Run template file describes how to start multiple applications as if you had run many separate CLI run commands. By default, this template file is called dapr.yaml.

Multi-App Run template file (self-hosted)

When you execute dapr run -f ., the CLI reads the Multi-App Run template file (named dapr.yaml) in the current directory and runs all the applications it defines.

You can give the template file a name other than the default. For example: dapr run -f ./<your-preferred-file-name>.yaml.

The following example includes some of the template properties you can customize for your applications. In the example, you can simultaneously launch 2 applications with app IDs of processor and emit-metrics.

version: 1
apps:
  - appID: processor
    appDirPath: ../apps/processor/
    appPort: 9081
    daprHTTPPort: 3510
    command: ["go","run", "app.go"]
  - appID: emit-metrics
    appDirPath: ../apps/emit-metrics/
    daprHTTPPort: 3511
    env:
      DAPR_HOST_ADD: localhost
    command: ["go","run", "app.go"]

For a more in-depth example and explanation of the template properties, see Multi-app template.
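Conceptually, each entry under `apps` corresponds to one `dapr run` invocation started from that app's `appDirPath`. The sketch below illustrates the correspondence for a small, hypothetical subset of fields: `appID`, `appPort`, `daprHTTPPort`, `daprGRPCPort`, and `command`. The CLI does this work itself. The sketch ignores other fields such as `env`, and it assumes the template is already loaded into a dictionary, since parsing YAML requires a third-party library.

```python
from typing import List, Tuple

# Hypothetical subset of template keys mapped to `dapr run` flags.
FLAG_MAP = {
    "appID": "--app-id",
    "appPort": "--app-port",
    "daprHTTPPort": "--dapr-http-port",
    "daprGRPCPort": "--dapr-grpc-port",
}


def to_run_commands(template: dict) -> List[Tuple[str, List[str]]]:
    """Translate each app into (working directory, argv) for a separate `dapr run`."""
    commands = []
    for app in template.get("apps", []):
        argv = ["dapr", "run"]
        for key, flag in FLAG_MAP.items():
            if key in app:
                argv += [flag, str(app[key])]
        # Everything after `--` is the application's own command.
        argv += ["--", *app.get("command", [])]
        commands.append((app["appDirPath"], argv))
    return commands


if __name__ == "__main__":
    template = {
        "version": 1,
        "apps": [
            {"appID": "processor", "appDirPath": "../apps/processor/", "appPort": 9081,
             "daprHTTPPort": 3510, "command": ["go", "run", "app.go"]},
        ],
    }
    for cwd, argv in to_run_commands(template):
        print(f"(cd {cwd} && {' '.join(argv)})")
```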

Locations for resources and configuration files

You have options on where to place your applications’ resources and configuration files when using Multi-App Run.

Point to one file location (with convention)

You can set all of your applications' resources and configurations at the ~/.dapr root. This is helpful when all applications share the same resources path, like when testing on a local machine.

Separate file locations for each application (with convention)

When using Multi-App Run, each application directory can have a .dapr folder, which contains a config.yaml file and a resources directory. Otherwise, if the .dapr directory is not present within the app directory, the default ~/.dapr/resources/ and ~/.dapr/config.yaml locations are used.

If you decide to add a .dapr directory in each application directory, with a /resources directory and config.yaml file, you can specify different resources paths for each application. This approach remains within convention by using the default ~/.dapr.
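The lookup described above can be sketched as follows. The sketch rests on one assumption: it only checks whether the app's `.dapr` directory exists, whereas the CLI may check the resources directory and the config file individually. `resolve_dapr_paths` is a hypothetical helper.

```python
from pathlib import Path
from typing import Optional, Tuple


def resolve_dapr_paths(app_dir: Path, home: Optional[Path] = None) -> Tuple[Path, Path]:
    """Return (resources_dir, config_file) following the .dapr convention.

    Hypothetical helper: use <app_dir>/.dapr when it exists, otherwise ~/.dapr.
    """
    home = home if home is not None else Path.home()
    local = app_dir / ".dapr"
    base = local if local.is_dir() else home / ".dapr"
    return base / "resources", base / "config.yaml"


if __name__ == "__main__":
    print(resolve_dapr_paths(Path.cwd()))
```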

Point to separate locations (custom)

You can also name each app directory's .dapr directory something other than .dapr, such as webapp or backend. This helps if you'd like to be explicit about resource or application directory paths.

Logs

The run template provides two log destination fields for each application and its associated daprd process:

- appLogDestination: This field configures the log destination for the application. The possible values are console, file and fileAndConsole. The default value is fileAndConsole, where application logs are written to both the console and a file.

- daprdLogDestination: This field configures the log destination for the daprd process. The possible values are console, file and fileAndConsole. The default value is file, where the daprd logs are written to a file.

Log file format

Logs for application and daprd are captured in separate files. These log files are created automatically under .dapr/logs directory under each application directory (appDirPath in the template). These log file names follow the pattern seen below:

- <appID>_app_<timestamp>.log (file name format for the app log)

- <appID>_daprd_<timestamp>.log (file name format for the daprd log)

Even if you’ve decided to rename your resources folder to something other than .dapr, the log files are written only to the .dapr/logs folder (created in the application directory).
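The naming pattern can be expressed as a small helper. The timestamp format isn't specified here, so the sketch treats the timestamp as an opaque string supplied by the caller. `log_file_paths` and `find_logs` are hypothetical helpers, not part of the Dapr CLI.

```python
from pathlib import Path
from typing import List, Tuple


def log_file_paths(app_dir_path: str, app_id: str, timestamp: str) -> Tuple[Path, Path]:
    """Return (app_log, daprd_log) paths under <appDirPath>/.dapr/logs."""
    logs = Path(app_dir_path) / ".dapr" / "logs"
    return logs / f"{app_id}_app_{timestamp}.log", logs / f"{app_id}_daprd_{timestamp}.log"


def find_logs(app_dir_path: str, app_id: str, kind: str = "app") -> List[Path]:
    """List existing log files of one kind ("app" or "daprd"), sorted by file name."""
    logs = Path(app_dir_path) / ".dapr" / "logs"
    return sorted(logs.glob(f"{app_id}_{kind}_*.log"))


if __name__ == "__main__":
    print(log_file_paths("../apps/processor", "processor", "<timestamp>"))
```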

Watch the demo

Multi-App Run template file (Kubernetes)

When you execute dapr run -k -f . or dapr run -k -f dapr.yaml, the applications defined in the dapr.yaml Multi-App Run template file start in the Kubernetes default namespace.

Note: Currently, the Multi-App Run template can only start applications in the default Kubernetes namespace.

The necessary default service and deployment definitions for Kubernetes are generated within the .dapr/deploy folder for each app in the dapr.yaml template.

If the createService field is set to true in the dapr.yaml template for an app, then the service.yaml file is generated in the .dapr/deploy folder of the app.

Otherwise, only the deployment.yaml file is generated for each app that has the containerImage field set.

The files service.yaml and deployment.yaml are used to deploy the applications in the default namespace in Kubernetes. This feature is specifically targeted at running multiple apps in a dev/test environment in Kubernetes.

You can name the template file with any preferred name other than the default. For example:

dapr run -k -f ./<your-preferred-file-name>.yaml

The following example includes some of the template properties you can customize for your applications. In the example, you can simultaneously launch 2 applications with app IDs of nodeapp and pythonapp.

version: 1
common:
apps:
  - appID: nodeapp
    appDirPath: ./nodeapp/
    appPort: 3000
    containerImage: ghcr.io/dapr/samples/hello-k8s-node:latest
    containerImagePullPolicy: Always
    createService: true
    env:
      APP_PORT: 3000
  - appID: pythonapp
    appDirPath: ./pythonapp/
    containerImage: ghcr.io/dapr/samples/hello-k8s-python:latest

Note:

- If the containerImage field is not specified, dapr run -k -f produces an error.

- The containerImagePullPolicy value of Always indicates that a new container image is always downloaded for this app.

- The createService field defines a basic service in Kubernetes (ClusterIP or LoadBalancer) that targets the --app-port specified in the template. If createService isn't specified, the application is not accessible from outside the cluster.
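Taken together, these rules determine what lands in each app's `.dapr/deploy` folder. The following hypothetical sketch predicts those manifests and raises an error when `containerImage` is missing. When `appID` is absent, it derives the app ID from the last segment of `appDirPath`; that derivation is an assumption for illustration.

```python
from pathlib import Path
from typing import Dict, List


def planned_deploy_files(template: dict) -> Dict[str, List[Path]]:
    """Predict which manifests `dapr run -k -f` writes for each app (hypothetical helper).

    Raises ValueError when an app lacks containerImage, since the CLI reports an
    error in that case.
    """
    plan: Dict[str, List[Path]] = {}
    for app in template.get("apps", []):
        # Assumption: fall back to the last path segment when appID is missing.
        app_id = app.get("appID") or Path(app["appDirPath"]).name
        if "containerImage" not in app:
            raise ValueError(f"app '{app_id}' has no containerImage")
        deploy = Path(app["appDirPath"]) / ".dapr" / "deploy"
        files = [deploy / "deployment.yaml"]
        if app.get("createService") is True:
            files.append(deploy / "service.yaml")
        plan[app_id] = files
    return plan


if __name__ == "__main__":
    template = {"version": 1, "apps": [
        {"appID": "nodeapp", "appDirPath": "./nodeapp/",
         "containerImage": "ghcr.io/dapr/samples/hello-k8s-node:latest", "createService": True},
        {"appID": "pythonapp", "appDirPath": "./pythonapp/",
         "containerImage": "ghcr.io/dapr/samples/hello-k8s-python:latest"},
    ]}
    for app_id, files in planned_deploy_files(template).items():
        print(app_id, [f.as_posix() for f in files])
```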

For a more in-depth example and explanation of the template properties, see Multi-app template.

Logs

The run template provides two log destination fields for each application and its associated daprd process:

- appLogDestination: This field configures the log destination for the application. The possible values are console, file and fileAndConsole. The default value is fileAndConsole, where application logs are written to both the console and a file.

- daprdLogDestination: This field configures the log destination for the daprd process. The possible values are console, file and fileAndConsole. The default value is file, where the daprd logs are written to a file.

Log file format

Logs for application and daprd are captured in separate files. These log files are created automatically under .dapr/logs directory under each application directory (appDirPath in the template). These log file names follow the pattern seen below:

- <appID>_app_<timestamp>.log (file name format for the app log)

- <appID>_daprd_<timestamp>.log (file name format for the daprd log)

Even if you’ve decided to rename your resources folder to something other than .dapr, the log files are written only to the .dapr/logs folder (created in the application directory).

Watch the demo

Watch this video for an overview on Multi-App Run in Kubernetes:

Next steps

2.2 - How to: Use the Multi-App Run template file

Note

Multi-App Run for Kubernetes is currently a preview feature.

The Multi-App Run template file is a YAML file that you can use to run multiple applications at once. In this guide, you'll learn how to:

- Use the multi-app template

- View started applications

- Stop the multi-app template

- Structure the multi-app template file

Use the multi-app template

You can use the multi-app template file in one of the following two ways:

Execute by providing a directory path

When you provide a directory path, the CLI will try to locate the Multi-App Run template file, named dapr.yaml by default in the directory. If the file is not found, the CLI will return an error.

Execute the following CLI command to read the Multi-App Run template file, named dapr.yaml by default:

# the template file needs to be called `dapr.yaml` by default if a directory path is given

dapr run -f <dir_path>

dapr run -f <dir_path> -k

Execute by providing a file path

If the Multi-App Run template file is named something other than dapr.yaml, then you can provide the relative or absolute file path to the command:

dapr run -f ./path/to/<your-preferred-file-name>.yaml

dapr run -f ./path/to/<your-preferred-file-name>.yaml -k
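The two ways of passing `-f` can be summarized in a short sketch. A directory resolves to its `dapr.yaml`, any other value is treated as the template file itself, and a missing file is an error. `resolve_template` is a hypothetical helper, not the CLI's own code.

```python
from pathlib import Path

DEFAULT_TEMPLATE_NAME = "dapr.yaml"


def resolve_template(path_arg: str) -> Path:
    """Resolve the value passed to `dapr run -f` / `dapr stop -f` to a template file."""
    path = Path(path_arg)
    candidate = path / DEFAULT_TEMPLATE_NAME if path.is_dir() else path
    if not candidate.is_file():
        raise FileNotFoundError(f"Multi-App Run template not found: {candidate}")
    return candidate.resolve()


if __name__ == "__main__":
    try:
        print(resolve_template("."))
    except FileNotFoundError as err:
        print(err)
```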

View the started applications

Once the multi-app template is running, you can view the started applications with the following command:

dapr list

dapr list -k

Stop the multi-app template

Stop the multi-app run template anytime with either of the following commands:

# the template file needs to be called `dapr.yaml` by default if a directory path is given

dapr stop -f <dir_path>

or:

dapr stop -f ./path/to/<your-preferred-file-name>.yaml

# the template file needs to be called `dapr.yaml` by default if a directory path is given

dapr stop -f <dir_path> -k

or:

dapr stop -f ./path/to/<your-preferred-file-name>.yaml -k

Template file structure

The Multi-App Run template file can include the following properties. Below is an example template showing two applications that are configured with some of the properties.

version: 1
common: # optional section for variables shared across apps
  resourcesPath: ./app/components # any dapr resources to be shared across apps
  env:  # any environment variable shared across apps
    DEBUG: true
apps:
  - appID: webapp # optional
    appDirPath: .dapr/webapp/ # REQUIRED
    resourcesPath: .dapr/resources # deprecated
    resourcesPaths: .dapr/resources # comma separated resources paths. (optional) can be left to default value by convention.
    appChannelAddress: 127.0.0.1 # network address where the app listens on. (optional) can be left to default value by convention.
    configFilePath: .dapr/config.yaml # (optional) can be default by convention too, ignore if file is not found.
    appProtocol: http
    appPort: 8080
    appHealthCheckPath: "/healthz"
    command: ["python3", "app.py"]
    appLogDestination: file # (optional), can be file, console or fileAndConsole. default is fileAndConsole.
    daprdLogDestination: file # (optional), can be file, console or fileAndConsole. default is file.
  - appID: backend # optional
    appDirPath: .dapr/backend/ # REQUIRED
    appProtocol: grpc
    appPort: 3000
    unixDomainSocket: "/tmp/test-socket"
    env:
      DEBUG: false
    command: ["./backend"]

The following rules apply for all the paths present in the template file:

- If the path is absolute, it is used as is.

- All relative paths under the common section should be provided relative to the template file path.

- appDirPath under the apps section should be provided relative to the template file path.

- All other relative paths under the apps section should be provided relative to the appDirPath.
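Applied to a parsed template, these rules might look like the sketch below. It handles only a few path fields (`resourcesPath` in `common`, plus `appDirPath`, `resourcesPath`, and `configFilePath` per app) and assumes POSIX-style paths. It illustrates the rules; it is not the CLI's implementation.

```python
from pathlib import PurePosixPath
from typing import Dict


def _under(root: PurePosixPath, value: str) -> PurePosixPath:
    """Keep absolute paths as-is; join relative paths onto root."""
    path = PurePosixPath(value)
    return path if path.is_absolute() else root / path


def resolve_template_paths(template_file: str, template: dict) -> Dict[str, dict]:
    """Resolve template paths per the rules above (hypothetical helper)."""
    base = PurePosixPath(template_file).parent
    common = template.get("common") or {}  # `common:` may be present but empty
    resolved: Dict[str, dict] = {"common": {}, "apps": {}}
    if "resourcesPath" in common:
        # common paths are relative to the template file
        resolved["common"]["resourcesPath"] = _under(base, common["resourcesPath"])
    for app in template.get("apps", []):
        # appDirPath is relative to the template file ...
        app_dir = _under(base, app["appDirPath"])
        entry = {"appDirPath": app_dir}
        # ... and other app paths are relative to appDirPath
        for key in ("resourcesPath", "configFilePath"):
            if key in app:
                entry[key] = _under(app_dir, app[key])
        resolved["apps"][app.get("appID", app["appDirPath"])] = entry
    return resolved


if __name__ == "__main__":
    template = {"common": {"resourcesPath": "./app/components"},
                "apps": [{"appID": "webapp", "appDirPath": ".dapr/webapp/",
                          "configFilePath": ".dapr/config.yaml"}]}
    print(resolve_template_paths("/work/dapr.yaml", template))
```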

version: 1
common: # optional section for variables shared across apps
  env:  # any environment variable shared across apps
    DEBUG: true
apps:
  - appID: webapp # optional
    appDirPath: .dapr/webapp/ # REQUIRED
    appChannelAddress: 127.0.0.1 # network address where the app listens on. (optional) can be left to default value by convention.
    appProtocol: http
    appPort: 8080
    appHealthCheckPath: "/healthz"
    appLogDestination: file # (optional), can be file, console or fileAndConsole. default is fileAndConsole.
    daprdLogDestination: file # (optional), can be file, console or fileAndConsole. default is file.
    containerImage: ghcr.io/dapr/samples/hello-k8s-node:latest # (optional) URI of the container image to be used when deploying to Kubernetes dev/test environment.
    containerImagePullPolicy: IfNotPresent # (optional), the container image is downloaded if one is not present locally, otherwise the local one is used.
    createService: true # (optional) Create a Kubernetes service for the application when deploying to dev/test environment.
  - appID: backend # optional
    appDirPath: .dapr/backend/ # REQUIRED
    appProtocol: grpc
    appPort: 3000
    unixDomainSocket: "/tmp/test-socket"
    env:
      DEBUG: false

The following rules apply for all the paths present in the template file:

- If the path is absolute, it is used as is.
- appDirPath under the apps section should be provided relative to the template file path.
- All relative paths under the apps section should be provided relative to the appDirPath.

Template properties

The properties for the Multi-App Run template align with the dapr run CLI flags, listed in the CLI reference documentation.

| Properties | Required | Details | Example |
|---|---|---|---|
| appDirPath | Y | Path to your application code | ./webapp/, ./backend/ |
| appID | N | Application’s app ID. If not provided, it is derived from appDirPath | webapp, backend |
| resourcesPath | N | Deprecated. Path to your Dapr resources. Can be left to the default value by convention | ./app/components, ./webapp/components |
| resourcesPaths | N | Comma-separated paths to your Dapr resources. Can be left to the default value by convention | ./app/components, ./webapp/components |
| appChannelAddress | N | The network address the application listens on. Can be left to the default value by convention. | 127.0.0.1 |
| configFilePath | N | Path to your application’s configuration file | ./webapp/config.yaml |
| appProtocol | N | The protocol Dapr uses to talk to the application | http, grpc |
| appPort | N | The port your application is listening on | 8080, 3000 |
| daprHTTPPort | N | Dapr HTTP port | |
| daprGRPCPort | N | Dapr gRPC port | |
| daprInternalGRPCPort | N | gRPC port for the Dapr Internal API to listen on; used when parsing the value from a local DNS component | |
| metricsPort | N | The port that Dapr sends its metrics information to | |
| unixDomainSocket | N | Path to a Unix domain socket directory mount. If specified, communication with the Dapr sidecar uses Unix domain sockets for lower latency and greater throughput compared to TCP ports. Not available on Windows. | /tmp/test-socket |
| profilePort | N | The port for the profile server to listen on | |
| enableProfiling | N | Enable profiling via an HTTP endpoint | |
| apiListenAddresses | N | Dapr API listen addresses | |
| logLevel | N | The log verbosity | |
| appMaxConcurrency | N | The concurrency level of the application; default is unlimited | |
| placementHostAddress | N | Comma-separated list of addresses for Dapr placement servers | 127.0.0.1:50057,127.0.0.1:50058 |
| schedulerHostAddress | N | Dapr Scheduler Service host address | localhost:50006 |
| appSSL | N | Enable HTTPS when Dapr invokes the application | |
| maxBodySize | N | Max size of the request body. Set the value using size units (for example, 16Mi for 16MB). The default is 4Mi | 16Mi |
| readBufferSize | N | Max size of the HTTP read buffer. This also limits the maximum size of HTTP headers. Set the value using size units; for example, 32Ki supports headers up to 32KB. The default is 4Ki (4KB) | 32Ki |
| enableAppHealthCheck | N | Enable the app health check on the application | true, false |
| appHealthCheckPath | N | Path of the health check endpoint | /healthz |
| appHealthProbeInterval | N | Interval to probe for the health of the app in seconds | |
| appHealthProbeTimeout | N | Timeout for app health probes in milliseconds | |
| appHealthThreshold | N | Number of consecutive failures for the app to be considered unhealthy | |
| enableApiLogging | N | Enable the logging of all API calls from the application to Dapr | |
| runtimePath | N | Dapr runtime install path | |
| env | N | Map of environment variables; variables set per application override variables shared across applications | DEBUG, DAPR_HOST_ADD |
| appLogDestination | N | Log destination for outputting app logs; its value can be file, console or fileAndConsole. Default is fileAndConsole | file, console, fileAndConsole |
| daprdLogDestination | N | Log destination for outputting daprd logs; its value can be file, console or fileAndConsole. Default is file | file, console, fileAndConsole |
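The env override rule can be illustrated with a small Go sketch. It is not the CLI's code; mergeEnv is a hypothetical helper, and it assumes environment values have already been converted to strings (YAML values such as `DEBUG: true` are booleans until then).

```go
package main

// mergeEnv returns the environment for one app: variables from the
// common section are applied first, then the app's own env entries,
// so a per-app value overrides a shared value with the same name.
func mergeEnv(common, app map[string]string) map[string]string {
	merged := make(map[string]string, len(common)+len(app))
	for k, v := range common {
		merged[k] = v
	}
	for k, v := range app {
		merged[k] = v // per-app value wins over the shared one
	}
	return merged
}
```

With `DEBUG: true` in common and `DEBUG: false` under the backend app, the backend ends up with DEBUG set to false while other shared variables pass through unchanged.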


The properties for the Multi-App Run template align with the dapr run -k CLI flags, listed in the CLI reference documentation.

| Properties | Required | Details | Example |
|---|---|---|---|
| appDirPath | Y | Path to your application code | ./webapp/, ./backend/ |
| appID | N | Application’s app ID. If not provided, it is derived from appDirPath | webapp, backend |
| appChannelAddress | N | The network address the application listens on. Can be left to the default value by convention. | 127.0.0.1, localhost |
| appProtocol | N | The protocol Dapr uses to talk to the application | http, grpc |
| appPort | N | The port your application is listening on | 8080, 3000 |
| daprHTTPPort | N | Dapr HTTP port | |
| daprGRPCPort | N | Dapr gRPC port | |
| daprInternalGRPCPort | N | gRPC port for the Dapr Internal API to listen on; used when parsing the value from a local DNS component | |
| metricsPort | N | The port that Dapr sends its metrics information to | |
| unixDomainSocket | N | Path to a Unix domain socket directory mount. If specified, communication with the Dapr sidecar uses Unix domain sockets for lower latency and greater throughput compared to TCP ports. Not available on Windows. | /tmp/test-socket |
| profilePort | N | The port for the profile server to listen on | |
| enableProfiling | N | Enable profiling via an HTTP endpoint | |
| apiListenAddresses | N | Dapr API listen addresses | |
| logLevel | N | The log verbosity | |
| appMaxConcurrency | N | The concurrency level of the application; default is unlimited | |
| placementHostAddress | N | Comma-separated list of addresses for Dapr placement servers | 127.0.0.1:50057,127.0.0.1:50058 |
| schedulerHostAddress | N | Dapr Scheduler Service host address | 127.0.0.1:50006 |
| appSSL | N | Enable HTTPS when Dapr invokes the application | |
| maxBodySize | N | Max size of the request body. Set the value using size units (for example, 16Mi for 16MB). The default is 4Mi | 16Mi |
| readBufferSize | N | Max size of the HTTP read buffer. This also limits the maximum size of HTTP headers. Set the value using size units; for example, 32Ki supports headers up to 32KB. The default is 4Ki (4KB) | 32Ki |
| enableAppHealthCheck | N | Enable the app health check on the application | true, false |
| appHealthCheckPath | N | Path of the health check endpoint | /healthz |
| appHealthProbeInterval | N | Interval to probe for the health of the app in seconds | |
| appHealthProbeTimeout | N | Timeout for app health probes in milliseconds | |
| appHealthThreshold | N | Number of consecutive failures for the app to be considered unhealthy | |
| enableApiLogging | N | Enable the logging of all API calls from the application to Dapr | |
| env | N | Map of environment variables; variables set per application override variables shared across applications | DEBUG, DAPR_HOST_ADD |
| appLogDestination | N | Log destination for outputting app logs; its value can be file, console or fileAndConsole. Default is fileAndConsole | file, console, fileAndConsole |
| daprdLogDestination | N | Log destination for outputting daprd logs; its value can be file, console or fileAndConsole. Default is file | file, console, fileAndConsole |
| containerImage | N | URI of the container image to be used when deploying to a Kubernetes dev/test environment | ghcr.io/dapr/samples/hello-k8s-python:latest |
| containerImagePullPolicy | N | The container image pull policy (defaults to Always) | Always, IfNotPresent, Never |
| createService | N | Create a Kubernetes service for the application when deploying to a dev/test environment | true, false |
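The size units accepted by maxBodySize and readBufferSize are binary suffixes: Ki is 1024 bytes and Mi is 1024 * 1024 bytes. The Go sketch below converts such values to bytes. parseSize is a hypothetical helper that handles only Ki, Mi, Gi and plain integers; it is not the parser Dapr itself uses.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseSize converts a quantity such as "16Mi" or "4Ki" to bytes.
// Only the binary suffixes Ki, Mi and Gi are handled in this sketch.
func parseSize(s string) (int64, error) {
	units := []struct {
		suffix string
		factor int64
	}{
		{"Gi", 1 << 30},
		{"Mi", 1 << 20},
		{"Ki", 1 << 10},
	}
	for _, u := range units {
		if strings.HasSuffix(s, u.suffix) {
			n, err := strconv.ParseInt(strings.TrimSuffix(s, u.suffix), 10, 64)
			if err != nil {
				return 0, fmt.Errorf("invalid size %q: %w", s, err)
			}
			return n * u.factor, nil
		}
	}
	// No recognized suffix: treat the value as a plain number of bytes.
	return strconv.ParseInt(s, 10, 64)
}
```

Under these rules, the default maxBodySize of 4Mi is 4194304 bytes and the default readBufferSize of 4Ki is 4096 bytes.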


3 - How to: Use the gRPC interface in your Dapr application

Dapr implements both an HTTP and a gRPC API for local calls. gRPC is useful for low-latency, high-performance scenarios and has language integration through the proto clients.

Find a list of auto-generated clients in the Dapr SDK documentation.

The Dapr runtime implements a proto service that apps can communicate with via gRPC.

In addition to calling Dapr via gRPC, Dapr supports service-to-service calls with gRPC by acting as a proxy. Learn more in the gRPC service invocation how-to guide.

This guide demonstrates configuring and invoking Dapr with gRPC using a Go SDK application.

Configure Dapr to communicate with an app via gRPC

When running in self-hosted mode, use the --app-protocol flag to tell Dapr to use gRPC to talk to the app.

dapr run --app-protocol grpc --app-port 5005 node app.js

This tells Dapr to communicate with your app via gRPC over port 5005.

On Kubernetes, set the following annotations in your deployment YAML:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp
  namespace: default
  labels:
    app: myapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "myapp"
        dapr.io/app-protocol: "grpc"
        dapr.io/app-port: "5005"
...

Invoke Dapr with gRPC

The following steps show how to create a Dapr client and call the SaveState operation on it.

- Import the package:

package main

import (
	"context"
	"log"

	dapr "github.com/dapr/go-sdk/client"
)

- Create the client:

// inside func main(), just for this demo
ctx := context.Background()
data := []byte("ping")

// create the client
client, err := dapr.NewClient()
if err != nil {
	log.Panic(err)
}
defer client.Close()

- Invoke the SaveState method:

// save state with the key key1 (nil: no extra metadata)
err = client.SaveState(ctx, "statestore", "key1", data, nil)
if err != nil {
	log.Panic(err)
}
log.Println("data saved")

Now you can explore all the different methods on the Dapr client.
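dapr.NewClient() connects to the local sidecar without an explicit address. As an assumption based on the Go SDK's convention, the port comes from the DAPR_GRPC_PORT environment variable, which the Dapr CLI sets for the app, with 50001 (the default daprd gRPC port) as the fallback; newer SDK versions may consult additional variables first, so check the SDK source for the exact lookup order. The hypothetical helper below sketches that lookup with an injectable environment function so it can be tested.

```go
package main

import "net"

// sidecarGRPCAddress builds the address a client could dial to reach the
// Dapr sidecar's gRPC API. ASSUMPTION: the port is read from the
// DAPR_GRPC_PORT environment variable and falls back to 50001.
func sidecarGRPCAddress(lookupEnv func(string) (string, bool)) string {
	port, ok := lookupEnv("DAPR_GRPC_PORT")
	if !ok || port == "" {
		port = "50001"
	}
	return net.JoinHostPort("127.0.0.1", port)
}
```

In an application, pass os.LookupEnv, which has the matching signature: `addr := sidecarGRPCAddress(os.LookupEnv)`.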

Create a gRPC app with Dapr

The following steps show how to create an app that exposes a server with which Dapr can communicate.

- Import the package:

package main

import (
	"context"
	"fmt"
	"log"
	"net"

	"github.com/golang/protobuf/ptypes/any"
	"github.com/golang/protobuf/ptypes/empty"

	commonv1pb "github.com/dapr/dapr/pkg/proto/common/v1"
	pb "github.com/dapr/dapr/pkg/proto/runtime/v1"
	"google.golang.org/grpc"
)

- Implement the interface:

// server is our user app
type server struct {
	pb.UnimplementedAppCallbackServer
}

// EchoMethod is a simple demo method to invoke
func (s *server) EchoMethod() string {
	return "pong"
}

// This method gets invoked when a remote service has called the app through Dapr.
// The payload carries a Method to identify the method, a set of metadata properties and an optional payload.
func (s *server) OnInvoke(ctx context.Context, in *commonv1pb.InvokeRequest) (*commonv1pb.InvokeResponse, error) {
	var response string

	switch in.Method {
	case "EchoMethod":
		response = s.EchoMethod()
	}

	return &commonv1pb.InvokeResponse{
		ContentType: "text/plain; charset=UTF-8",
		Data:        &any.Any{Value: []byte(response)},
	}, nil
}

// Dapr calls this method to get the list of topics the app wants to subscribe to.
// In this example, the app subscribes to a topic named TopicA.
func (s *server) ListTopicSubscriptions(ctx context.Context, in *empty.Empty) (*pb.ListTopicSubscriptionsResponse, error) {
	return &pb.ListTopicSubscriptionsResponse{
		Subscriptions: []*pb.TopicSubscription{
			{Topic: "TopicA"},
		},
	}, nil
}

// Dapr calls this method to get the list of bindings the app is invoked by.
// In this example, the app is invoked by a binding named storage.
func (s *server) ListInputBindings(ctx context.Context, in *empty.Empty) (*pb.ListInputBindingsResponse, error) {
	return &pb.ListInputBindingsResponse{
		Bindings: []string{"storage"},
	}, nil
}

// This method gets invoked every time a new event is fired from a registered binding.
// The message carries the binding name, a payload and optional metadata.
func (s *server) OnBindingEvent(ctx context.Context, in *pb.BindingEventRequest) (*pb.BindingEventResponse, error) {
	fmt.Println("Invoked from binding")
	return &pb.BindingEventResponse{}, nil
}

// This method is fired whenever a message has been published to a subscribed topic.
// Dapr sends published messages in a CloudEvents 1.0 envelope.
func (s *server) OnTopicEvent(ctx context.Context, in *pb.TopicEventRequest) (*pb.TopicEventResponse, error) {
	fmt.Println("Topic message arrived")
	return &pb.TopicEventResponse{}, nil
}

- Create the server:

func main() {
	// create listener
	lis, err := net.Listen("tcp", ":50001")
	if err != nil {
		log.Fatalf("failed to listen: %v", err)
	}

	// create grpc server
	s := grpc.NewServer()
	pb.RegisterAppCallbackServer(s, &server{})

	fmt.Println("Server starting...")

	// and start...
	if err := s.Serve(lis); err != nil {
		log.Fatalf("failed to serve: %v", err)
	}
}

This creates a gRPC server for your app on port 50001.
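OnInvoke selects the handler with a switch on in.Method. As an alternative design (not part of the Dapr SDK or runtime), a map from method names to handlers keeps OnInvoke unchanged as methods are added and lets unknown methods return an error instead of an empty response. The names methodRouter and handlerFunc are hypothetical.

```go
package main

import "fmt"

// handlerFunc is a hypothetical signature for one invocable method:
// it receives the raw request payload and returns the response payload.
type handlerFunc func(data []byte) ([]byte, error)

// methodRouter maps InvokeRequest.Method names to handlers, replacing
// the switch statement used in OnInvoke.
type methodRouter map[string]handlerFunc

// dispatch looks up the handler for method and runs it; unknown methods
// produce an error instead of an empty response.
func (r methodRouter) dispatch(method string, data []byte) ([]byte, error) {
	h, ok := r[method]
	if !ok {
		return nil, fmt.Errorf("unknown method %q", method)
	}
	return h(data)
}
```

Inside OnInvoke, a server holding such a router in a (hypothetical) router field could call `s.router.dispatch(in.Method, in.GetData().GetValue())` and wrap the result in the InvokeResponse as before.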

Run the application

To run locally, use the Dapr CLI:

dapr run --app-id goapp --app-port 50001 --app-protocol grpc go run main.go

On Kubernetes, set the required dapr.io/app-protocol: "grpc" and dapr.io/app-port: "50001" annotations in your pod spec template, as mentioned above.

Other languages

You can use Dapr with any language supported by Protobuf, and not just with the currently available generated SDKs.

Using the protoc tool, you can generate the Dapr clients for other languages like Ruby, C++, Rust, and others.


4 - Serialization in Dapr's SDKs

Dapr SDKs provide serialization for two use cases. First, for API objects sent through request and response payloads. Second, for objects to be persisted. For both of these cases, a default serialization method is provided in each language SDK.

| Language SDK | Default Serializer |
|---|---|
| .NET | DataContracts for remoted actors, System.Text.Json otherwise. See the .NET SDK documentation for more about .NET serialization |
| Java | DefaultObjectSerializer for JSON serialization |
| JavaScript | JSON |

Service invocation

// .NET
using var client = (new DaprClientBuilder()).Build();
await client.InvokeMethodAsync("myappid", "saySomething", "My Message");

// Java
DaprClient client = (new DaprClientBuilder()).build();
client.invokeMethod("myappid", "saySomething", "My Message", HttpExtension.POST).block();

In the example above, the app myappid receives a POST request for the saySomething method with the request payload "My Message", quoted because the serializer serializes the input String to JSON.

POST /saySomething HTTP/1.1
Host: localhost
Content-Type: text/plain
Content-Length: 12

"My Message"
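The quoting and the Content-Length of 12 follow directly from JSON serialization: the ten characters of My Message plus two quotes. The Go sketch below reproduces this with encoding/json; jsonBody is a hypothetical helper, not part of any Dapr SDK.

```go
package main

import (
	"encoding/json"
	"strconv"
)

// jsonBody serializes v with encoding/json and returns the body together
// with the Content-Length header value an HTTP client would send for it.
func jsonBody(v any) (body []byte, contentLength string, err error) {
	body, err = json.Marshal(v)
	if err != nil {
		return nil, "", err
	}
	return body, strconv.Itoa(len(body)), nil
}
```

Calling `jsonBody("My Message")` yields the body `"My Message"` (quotes included) and a Content-Length of 12.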

State management

// .NET
using var client = (new DaprClientBuilder()).Build();
await client.SaveStateAsync("MyStateStore", "MyKey", "My Message");

// Java
DaprClient client = (new DaprClientBuilder()).build();
client.saveState("MyStateStore", "MyKey", "My Message").block();

In this example, My Message is saved. It is not quoted because Dapr’s API internally parses the JSON request object before saving it.

[
  {
    "key": "MyKey",
    "value": "My Message"
  }
]
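The request body shown above can be produced and parsed with Go's encoding/json. This is a sketch of the wire format only: saveStateBody and firstValue are hypothetical helpers, and a real client sends the body to the sidecar's state API rather than parsing it locally. The string is embedded once as a JSON value, so no extra layer of quotes is added.

```go
package main

import (
	"encoding/json"
	"errors"
)

// stateItem mirrors one entry of the JSON array in the save-state request body.
type stateItem struct {
	Key   string `json:"key"`
	Value any    `json:"value"`
}

// saveStateBody builds the request body for saving a single key.
func saveStateBody(key string, value any) ([]byte, error) {
	return json.Marshal([]stateItem{{Key: key, Value: value}})
}

// firstValue parses a save-state body, as the sidecar is described as
// doing, and decodes the first item's value into a Go string.
func firstValue(body []byte) (string, error) {
	var items []struct {
		Key   string `json:"key"`
		Value string `json:"value"`
	}
	if err := json.Unmarshal(body, &items); err != nil {
		return "", err
	}
	if len(items) == 0 {
		return "", errors.New("empty state request")
	}
	return items[0].Value, nil
}
```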

PubSub

// .NET
using var client = (new DaprClientBuilder()).Build();
await client.PublishEventAsync("MyPubSubName", "TopicName", "My Message");

The event is published, and the content is serialized to byte[] and sent to the Dapr sidecar. The subscriber receives it as a CloudEvent. CloudEvent defines data as a String. The Dapr SDK also provides a built-in deserializer for CloudEvent objects.

public IActionResult HandleMessage(string message)
{
    // ASP.NET Core automatically deserializes the UTF-8 encoded bytes to a string
    return Ok();
}

or

app.MapPost("/TopicName", [Topic("MyPubSubName", "TopicName")] (string message) => {
    return Results.Ok();
});

// Java
DaprClient client = (new DaprClientBuilder()).build();
client.publishEvent("MyPubSubName", "TopicName", "My Message").block();


@PostMapping(path = "/TopicName")
public void handleMessage(@RequestBody(required = false) byte[] body) {
    // Dapr's event is compliant to CloudEvent.
    CloudEvent event = CloudEvent.deserialize(body);
}
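A subscriber that does not use an SDK deserializer can unwrap the CloudEvent itself. The Go sketch below assumes a JSON envelope whose data field holds a JSON string; cloudEvent declares only a subset of the CloudEvents 1.0 attributes, eventDataAsString is a hypothetical helper, and real envelopes sent by Dapr may carry additional attributes.

```go
package main

import "encoding/json"

// cloudEvent holds a subset of the CloudEvents 1.0 attributes; unknown
// attributes in the envelope are ignored by encoding/json.
type cloudEvent struct {
	ID              string          `json:"id"`
	Source          string          `json:"source"`
	Type            string          `json:"type"`
	SpecVersion     string          `json:"specversion"`
	DataContentType string          `json:"datacontenttype"`
	Data            json.RawMessage `json:"data"`
}

// eventDataAsString unwraps the envelope and decodes data as a JSON string.
func eventDataAsString(envelope []byte) (string, error) {
	var ev cloudEvent
	if err := json.Unmarshal(envelope, &ev); err != nil {
		return "", err
	}
	var s string
	if err := json.Unmarshal(ev.Data, &s); err != nil {
		return "", err
	}
	return s, nil
}
```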

Bindings

For output bindings, the object is serialized to byte[], whereas the input binding receives the raw byte[] as-is and deserializes it to the expected object type.

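- Output binding (.NET):

using var client = (new DaprClientBuilder()).Build();
// "create" is an example operation; supported operations depend on the binding component
await client.InvokeBindingAsync("sample", "create", "My Message");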

- Input binding (.NET, controllers):

[ApiController]
public class SampleController : ControllerBase
{
    [HttpPost("propagate")]
    public ActionResult<string> GetValue([FromBody] int itemId)
    {
        Console.WriteLine($"Received message: {itemId}");
        return $"itemID:{itemId}";
    }
}

- Input binding (.NET, minimal API):

app.MapPost("value", ([FromBody] int itemId) =>
{
    Console.WriteLine($"Received message: {itemId}");
    return $"itemID:{itemId}";
});

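- Output binding (Java):

DaprClient client = (new DaprClientBuilder()).build();
// "create" is an example operation; supported operations depend on the binding component
client.invokeBinding("sample", "create", "My Message").block();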

- Input binding (Java):

@PostMapping(path = "/sample")
public void handleInputBinding(@RequestBody(required = false) byte[] body) {
    String message = (new DefaultObjectSerializer()).deserialize(body, String.class);
    System.out.println(message);
}

It should print:

My Message
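The output/input asymmetry can be sketched in Go with encoding/json standing in for the SDK serializer (an assumption; each SDK uses its own default serializer): the sender turns an object into bytes, and the receiver decodes the raw bytes into whatever type it expects. Both function names are hypothetical.

```go
package main

import "encoding/json"

// toBindingPayload serializes an object to the byte slice an output
// binding would send.
func toBindingPayload(v any) ([]byte, error) {
	return json.Marshal(v)
}

// fromBindingPayload deserializes the raw bytes an input binding receives
// into the expected type T.
func fromBindingPayload[T any](raw []byte) (T, error) {
	var v T
	err := json.Unmarshal(raw, &v)
	return v, err
}
```

Decoding the same bytes as a different type, for example an int when a string was sent, fails with an error, which is why the receiver must know the expected object type.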

Actor Method invocation

Object serialization and deserialization for Actor method invocation are the same as for service method invocation; the only difference is that the application does not need to deserialize the request or serialize the response, since the SDK does this transparently.

For Actor methods, the SDK only supports methods with zero or one parameter.

The .NET SDK supports two different serialization types based on whether you're using a strongly-typed (DataContracts) or weakly-typed (DataContracts or System.Text.Json) actor client. [This document](https://v1-16.docs.dapr.io/developing-applications/sdks/dotnet/dotnet-actors/dotnet-actors-serialization/) provides more information about the differences between each and additional considerations to keep in mind.

- Invoking an Actor’s method using the weakly-typed client and System.Text.Json:

var proxy = this.ProxyFactory.Create(ActorId.CreateRandom(), "DemoActor");
await proxy.InvokeMethodAsync("SayAsync", "My message");

- Implementing an Actor’s method:

public Task SayAsync(string message)
{
    Console.WriteLine(message);
    return Task.CompletedTask;
}

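- Invoking an Actor’s method (Java):

public static void main(String[] args) {
    ActorProxyBuilder builder = new ActorProxyBuilder("DemoActor");
    // Build a proxy for a new actor instance before invoking its methods
    ActorProxy actor = builder.build(ActorId.createRandom());
    String result = actor.invokeActorMethod("say", "My Message", String.class).block();
}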

- Implementing an Actor’s method (Java):

public String say(String something) {
    System.out.println(something);
    return "OK";
}

It should print:

My Message

Actor’s state management

Actors can also have state. In this case, the state manager serializes and deserializes the objects using the state serializer and handles this transparently for the application.

// .NET
public async Task<string> SayAsync(string message)
{
    // Reads state from a key
    var previousMessage = await this.StateManager.GetStateAsync<string>("lastmessage");

    // Sets the new state for the key after serializing it
    await this.StateManager.SetStateAsync("lastmessage", message);

    return previousMessage;
}

// Java
public String actorMethod(String message) {
    // Reads a state from key and deserializes it to String.
    String previousMessage = super.getActorStateManager().get("lastmessage", String.class).block();

    // Sets the new state for the key after serializing it.
    super.getActorStateManager().set("lastmessage", message).block();

    return previousMessage;
}
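To illustrate what the state manager does transparently, here is a hypothetical in-memory stand-in in Go that serializes values to JSON bytes on Set and deserializes them on Get. It is not the Dapr actor runtime's state manager, which also persists changes to the configured state store; stateManager and say are illustrative names only.

```go
package main

import "encoding/json"

// stateManager is a hypothetical in-memory stand-in for an actor state
// manager: values are serialized to JSON on Set and deserialized on Get.
type stateManager struct {
	store map[string][]byte
}

func newStateManager() *stateManager {
	return &stateManager{store: make(map[string][]byte)}
}

// Set serializes value and stores the bytes under key.
func (m *stateManager) Set(key string, value any) error {
	b, err := json.Marshal(value)
	if err != nil {
		return err
	}
	m.store[key] = b
	return nil
}

// Get deserializes the bytes under key into out; found is false when the
// key has never been set.
func (m *stateManager) Get(key string, out any) (found bool, err error) {
	b, ok := m.store[key]
	if !ok {
		return false, nil
	}
	return true, json.Unmarshal(b, out)
}

// say mirrors the actor methods above: it returns the previous message
// and stores the new one.
func say(m *stateManager, message string) (string, error) {
	var previous string
	if _, err := m.Get("lastmessage", &previous); err != nil {
		return "", err
	}
	if err := m.Set("lastmessage", message); err != nil {
		return "", err
	}
	return previous, nil
}
```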

Default serializer

The default serializer for Dapr is a JSON serializer with the following expectations:

- Use of basic JSON data types for cross-language and cross-platform compatibility: string, number, array, boolean, null and another JSON object. Every complex property type in the application’s serializable objects (DateTime, for example) should be represented as one of JSON’s basic types.

- Data persisted with the default serializer should be saved as JSON objects too, without extra quotes or encoding. The example below shows how a string and a JSON object would look in a Redis store.

redis-cli MGET "ActorStateIT_StatefulActorService||StatefulActorTest||1581130928192||message"
"This is a message to be saved and retrieved."

redis-cli MGET "ActorStateIT_StatefulActorService||StatefulActorTest||1581130928192||mydata"
{"value":"My data value."}

- Custom serializers must serialize objects to byte[].
- Custom serializers must deserialize byte[] to objects.
- When a user provides a custom serializer, data should be transferred or persisted as byte[]. When persisting, also encode it as a Base64 string. This is done natively by most JSON libraries.

redis-cli MGET "ActorStateIT_StatefulActorService||StatefulActorTest||1581130928192||message"
"VGhpcyBpcyBhIG1lc3NhZ2UgdG8gYmUgc2F2ZWQgYW5kIHJldHJpZXZlZC4="

redis-cli MGET "ActorStateIT_StatefulActorService||StatefulActorTest||1581130928192||mydata"
"eyJ2YWx1ZSI6Ik15IGRhdGEgdmFsdWUuIn0="
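Go's encoding/json is one of the libraries that Base64-encodes byte slices natively: marshaling a []byte produces a quoted, standard Base64 string. The sketch below uses it to reproduce the stored values shown above, treating the raw bytes as the output of some custom serializer; persistCustom, decodePersisted and base64Of are hypothetical helpers.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
)

// persistCustom takes bytes produced by a custom serializer and returns
// the JSON document that would be persisted: encoding/json writes a
// []byte as a quoted Base64 string.
func persistCustom(serialized []byte) ([]byte, error) {
	return json.Marshal(serialized)
}

// decodePersisted reverses persistCustom, returning the original bytes.
func decodePersisted(doc []byte) ([]byte, error) {
	var raw []byte
	err := json.Unmarshal(doc, &raw)
	return raw, err
}

// base64Of performs the same encoding explicitly with encoding/base64,
// without the surrounding JSON quotes.
func base64Of(serialized []byte) string {
	return base64.StdEncoding.EncodeToString(serialized)
}
```

Persisting the bytes of "This is a message to be saved and retrieved." this way yields exactly the first value in the Redis output above, and the bytes of {"value":"My data value."} yield the second.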