More about Dapr Workflow

Learn more about how to use Dapr Workflow:

- Try the Workflow quickstart.

- Explore workflow via any of the supporting Dapr SDKs.

- Review the Workflow API reference documentation.


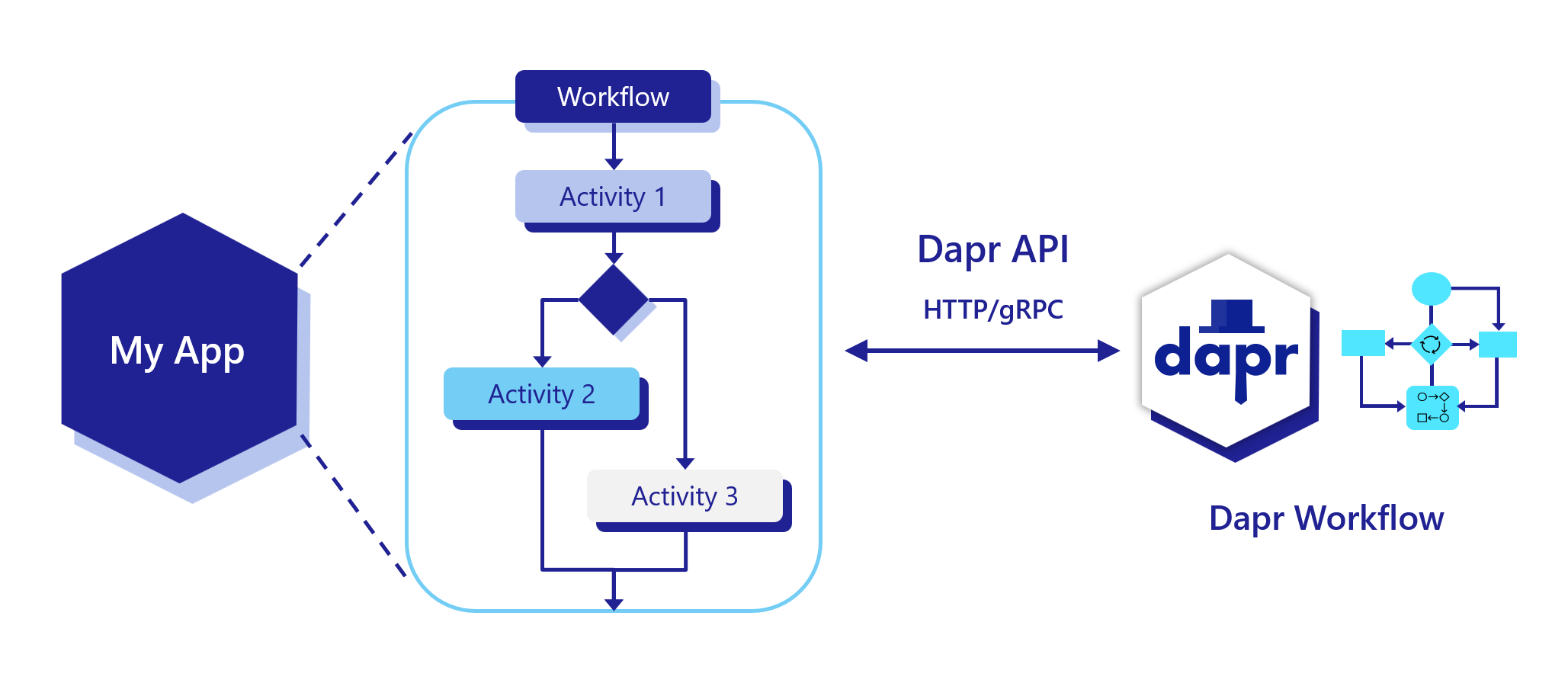

Dapr Workflow makes it easy for developers to write business logic and integrations in a reliable way. Because Dapr workflows are stateful, they support long-running and fault-tolerant applications, which makes them ideal for orchestrating microservices. Dapr Workflow works seamlessly with other Dapr building blocks, such as service invocation, pub/sub, state management, and bindings.

The durable, resilient Dapr Workflow capability:

Some example scenarios that Dapr Workflow can perform are:

With Dapr Workflow, you can write activities and then orchestrate those activities in a workflow. Workflow activities are:

Learn more about workflow activities.

In addition to activities, you can write workflows to schedule other workflows as child workflows. A child workflow has its own instance ID, history, and status that are independent of the parent workflow that started it, except that terminating the parent workflow terminates all of the child workflows it created. Child workflows also support automatic retry policies.

Learn more about child workflows.

As with Dapr actors, you can schedule reminder-like durable delays for any time range.

Learn more about workflow timers and reminders.

When you create an application with workflow code and run it with Dapr, you can call specific workflows that reside in the application. Each individual workflow can be:

Learn more about how to manage a workflow using HTTP calls.

Dapr Workflow simplifies complex, stateful coordination requirements in microservice architectures. The following sections describe several application patterns that can benefit from Dapr Workflow.

Learn more about different types of workflow patterns.

The Dapr Workflow authoring SDKs are language-specific SDKs that contain types and functions to implement workflow logic. The workflow logic lives in your application and is orchestrated by the Dapr Workflow engine running in the Dapr sidecar via a gRPC stream.

You can use the following SDKs to author a workflow.

| Language stack | Package |
|---|---|
| Python | dapr-ext-workflow |
| JavaScript | DaprWorkflowClient |
| .NET | Dapr.Workflow |
| Java | io.dapr.workflows |
| Go | workflow |

Want to put workflows to the test? Walk through the following quickstart and tutorials to see workflows in action:

| Quickstart/tutorial | Description |
|---|---|
| Workflow quickstart | Run a workflow application with four workflow activities to see Dapr Workflow in action. |
| Workflow Python SDK example | Learn how to create a Dapr Workflow and invoke it using the Python dapr-ext-workflow package. |
| Workflow JavaScript SDK example | Learn how to create a Dapr Workflow and invoke it using the JavaScript SDK. |
| Workflow .NET SDK example | Learn how to create a Dapr Workflow and invoke it using ASP.NET Core web APIs. |
| Workflow Java SDK example | Learn how to create a Dapr Workflow and invoke it using the Java io.dapr.workflows package. |
| Workflow Go SDK example | Learn how to create a Dapr Workflow and invoke it using the Go workflow package. |

Want to skip the quickstarts? Not a problem. You can try out the workflow building block directly in your application. After Dapr is installed, you can begin using workflows, starting with how to author a workflow.

Watch this video for an overview of Dapr Workflow:

Now that you’ve learned about the workflow building block at a high level, let’s deep dive into the features and concepts included with the Dapr Workflow engine and SDKs. Dapr Workflow exposes several core features and concepts which are common across all supported languages.

Dapr Workflows are functions you write that define a series of tasks to be executed in a particular order. The Dapr Workflow engine takes care of scheduling and execution of the tasks, including managing failures and retries. If the app hosting your workflows is scaled out across multiple machines, the workflow engine load balances the execution of workflows and their tasks across multiple machines.

There are several different kinds of tasks that a workflow can schedule, including

Each workflow you define has a type name, and individual executions of a workflow require a unique instance ID. Workflow instance IDs can be generated by your app code, which is useful when workflows correspond to business entities like documents or jobs, or can be auto-generated UUIDs. A workflow’s instance ID is useful for debugging and also for managing workflows using the Workflow APIs.

Only one workflow instance with a given ID can exist at any given time. However, if a workflow instance completes or fails, its ID can be reused by a new workflow instance. Note that the new workflow instance effectively replaces the old one in the configured state store.
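To make the ID rules above concrete, here is a minimal in-memory model of them. It is a hypothetical sketch, not part of any Dapr SDK: `InstanceRegistry`, `start`, `finish`, and the status strings are illustrative names, and the real engine enforces these rules in the Dapr sidecar and its configured state store.

```python
import uuid
from typing import Dict, Optional

# Hypothetical status names for instances that are no longer running.
TERMINAL_STATES = {"COMPLETED", "FAILED", "TERMINATED"}


class InstanceRegistry:
    """Toy model of instance-ID rules: one live instance per ID, reuse after it ends."""

    def __init__(self) -> None:
        self._status: Dict[str, str] = {}

    def start(self, instance_id: Optional[str] = None) -> str:
        # Use a business ID when given (e.g. "order-1001"), else auto-generate a UUID.
        instance_id = instance_id or str(uuid.uuid4())
        current = self._status.get(instance_id)
        if current is not None and current not in TERMINAL_STATES:
            raise ValueError(f"instance {instance_id!r} is still {current}")
        # A finished instance with the same ID is overwritten, not kept alongside.
        self._status[instance_id] = "RUNNING"
        return instance_id

    def finish(self, instance_id: str, final_state: str = "COMPLETED") -> None:
        if final_state not in TERMINAL_STATES:
            raise ValueError(f"{final_state} is not a terminal state")
        self._status[instance_id] = final_state

    def status(self, instance_id: str) -> Optional[str]:
        return self._status.get(instance_id)
```

Starting `"order-1001"` twice while it is running raises an error; after `finish("order-1001", "FAILED")`, the same ID can be started again and its old status is replaced.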

Dapr Workflows maintain their execution state by using a technique known as event sourcing. Instead of storing the current state of a workflow as a snapshot, the workflow engine manages an append-only log of history events that describe the various steps that a workflow has taken. When using the workflow SDK, these history events are stored automatically whenever the workflow “awaits” the result of a scheduled task.

When a workflow “awaits” a scheduled task, it unloads itself from memory until the task completes. Once the task completes, the workflow engine schedules the workflow function to run again. This second workflow function execution is known as a replay.

When a workflow function is replayed, it runs again from the beginning. However, when it encounters a task that already completed, instead of scheduling that task again, the workflow engine:

This “replay” behavior continues until the workflow function completes or fails with an error.

Using this replay technique, a workflow is able to resume execution from any “await” point as if it had never been unloaded from memory. Even the values of local variables from previous runs can be restored without the workflow engine knowing anything about what data they stored. This ability to restore state makes Dapr Workflows durable and fault tolerant.
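The replay mechanism can be illustrated with a toy, single-process model that uses Python generators as workflow functions. This is a sketch under stated assumptions, not how the Dapr engine is implemented: tasks are `(name, input)` tuples, the history is a plain list, and `ACTIVITIES`, `replay`, and `run_to_completion` are hypothetical names.

```python
from typing import Any, Callable, Dict, Generator, List, Tuple

# A scheduled task is modeled as (activity_name, activity_input).
TaskCall = Tuple[str, Any]
# Each history entry records a task call and the result it produced.
History = List[Tuple[TaskCall, Any]]
Workflow = Callable[[Any], Generator[TaskCall, Any, Any]]

# Hypothetical activities; in Dapr these would be registered activity functions.
ACTIVITIES: Dict[str, Callable[[Any], Any]] = {
    "add_one": lambda x: x + 1,
    "double": lambda x: x * 2,
}


def order_workflow(x: int):
    # Each `yield` is an "await" point where a real engine could unload the workflow.
    a = yield ("add_one", x)
    b = yield ("double", a)
    return b


def replay(workflow: Workflow, wf_input: Any, history: History):
    """Run the workflow from the beginning, feeding recorded results back in.

    Returns ("completed", output) or ("pending", next_task_to_schedule).
    """
    gen = workflow(wf_input)
    try:
        task = next(gen)
        for recorded_task, recorded_result in history:
            # Completed tasks are not re-executed; the stored result is returned instead.
            if recorded_task != task:
                raise RuntimeError(f"history mismatch: expected {recorded_task}, got {task}")
            task = gen.send(recorded_result)
        return ("pending", task)
    except StopIteration as stop:
        return ("completed", stop.value)


def run_to_completion(workflow: Workflow, wf_input: Any):
    history: History = []  # append-only event log
    executions = 0
    while True:
        executions += 1
        status, value = replay(workflow, wf_input, history)
        if status == "completed":
            return value, history, executions
        name, arg = value
        # Execute the newly scheduled activity once and append its result to the log.
        history.append((value, ACTIVITIES[name](arg)))
```

Running `run_to_completion(order_workflow, 3)` returns 8 after three executions of the workflow function: two that stop at a task that has not completed yet, and a final one in which both results come from history.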

As discussed in the workflow replay section, workflows maintain an append-only, event-sourced history log of all their operations. To avoid runaway resource usage, workflows must limit the number of operations they schedule. For example, ensure your workflow doesn’t:

You can use the following two techniques to write workflows that may need to schedule extreme numbers of tasks:

Use the continue-as-new API:

Each workflow SDK exposes a continue-as-new API that workflows can invoke to restart themselves with a new input and history. The continue-as-new API is especially well suited to implementing “eternal workflows”, such as monitoring agents, which would otherwise be implemented using a while (true)-like construct. Using continue-as-new is a great way to keep the workflow history size small.

The continue-as-new API truncates the existing history, replacing it with a new history.
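The effect of continue-as-new on history size can be sketched with a toy loop in which each "generation" of a monitoring workflow records a couple of events and then asks to restart with a new input. All names here (`ContinueAsNew`, `monitor_generation`, `run_with_continue_as_new`) are hypothetical; each Dapr SDK exposes its own continue-as-new call.

```python
from dataclasses import dataclass
from typing import Any, List, Tuple


@dataclass
class ContinueAsNew:
    """Signal returned by a workflow run that wants to restart with a new input."""
    new_input: Any


def monitor_generation(tick: int, history: List[str]):
    # One generation of a hypothetical monitoring workflow: check, then sleep.
    history.append(f"check_status(tick={tick})")
    history.append("timer_fired")
    if tick >= 5:  # stop condition only so this demo terminates
        return f"stopped after tick {tick}"
    return ContinueAsNew(new_input=tick + 1)


def run_with_continue_as_new(initial: int) -> Tuple[Any, int, int]:
    wf_input = initial
    generations = 0
    largest_history = 0
    while True:
        history: List[str] = []  # each generation starts with a fresh, empty history
        result = monitor_generation(wf_input, history)
        generations += 1
        largest_history = max(largest_history, len(history))
        if isinstance(result, ContinueAsNew):
            wf_input = result.new_input  # the previous history is discarded here
            continue
        return result, generations, largest_history
```

Six generations run, but no single history ever holds more than two events, whereas a `while (true)` loop inside one instance would keep appending to the same log.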

Use child workflows: Each workflow SDK exposes an API for creating child workflows. A child workflow behaves like any other workflow, except that it’s scheduled by a parent workflow. Child workflows have:

If a workflow needs to schedule thousands of tasks or more, it’s recommended that those tasks be distributed across child workflows so that no single workflow’s history size grows too large.
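A rough way to reason about this recommendation is to split the work into batches, one per child workflow, and compare history sizes. The sketch assumes about two history events per task (one when it is scheduled and one when it completes); the actual event count per task depends on the engine, so treat the numbers as illustrative. `partition` and `history_estimate` are hypothetical helpers.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

# Assumption for this estimate: about two history events per task.
EVENTS_PER_TASK = 2


def partition(items: Sequence[T], batch_size: int) -> List[List[T]]:
    """Split work items into batches, one batch per child workflow."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [list(items[i:i + batch_size]) for i in range(0, len(items), batch_size)]


def history_estimate(total_tasks: int, batch_size: int) -> Tuple[int, int, int]:
    """Rough history sizes: (flat workflow, parent with children, largest child)."""
    children = -(-total_tasks // batch_size)  # ceiling division
    flat_parent = total_tasks * EVENTS_PER_TASK
    parent_with_children = children * EVENTS_PER_TASK
    largest_child = min(batch_size, total_tasks) * EVENTS_PER_TASK
    return flat_parent, parent_with_children, largest_child
```

For 10,000 tasks in batches of 500, `history_estimate` gives roughly 20,000 events in a single flat workflow versus about 40 in the parent and 1,000 in each of the 20 child workflows.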

Because workflows are long-running and durable, updating workflow code must be done with extreme care. As discussed in the workflow determinism limitation section, workflow code must be deterministic. Updates to workflow code must preserve this determinism if there are any non-completed workflow instances in the system. Otherwise, updates to workflow code can result in runtime failures the next time those workflows execute.

Workflow activities are the basic unit of work in a workflow and are the tasks that get orchestrated in the business process. For example, you might create a workflow to process an order. The tasks may involve checking the inventory, charging the customer, and creating a shipment. Each task would be a separate activity. These activities may be executed serially, in parallel, or some combination of both.

Unlike workflows, activities aren’t restricted in the type of work you can do in them. Activities are frequently used to make network calls or run CPU-intensive operations. An activity can also return data back to the workflow.

The Dapr Workflow engine guarantees that each called activity is executed at least once as part of a workflow’s execution. Because activities only guarantee at-least-once execution, it’s recommended that activity logic be implemented as idempotent whenever possible.
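One common way to make an activity idempotent is to key its side effect on a stable identifier, so a repeated execution finds the earlier result instead of redoing the work. The sketch below uses a dict in place of a durable store; `charge_customer` and the ledger are hypothetical.

```python
from typing import Dict


def charge_customer(order_id: str, amount_cents: int, ledger: Dict[str, int]) -> int:
    """Hypothetical idempotent activity: charges each order at most once.

    `ledger` stands in for a durable store keyed by an idempotency key
    (here the order ID). If the activity runs again, for example after a
    crash before its result was recorded, the existing charge is returned.
    """
    if order_id in ledger:
        return ledger[order_id]
    # In a real system the charge and the ledger write must be atomic,
    # or the payment provider must deduplicate on the same key.
    ledger[order_id] = amount_cents
    return amount_cents
```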

In addition to activities, workflows can schedule other workflows as child workflows. A child workflow has its own instance ID, history, and status that is independent of the parent workflow that started it.

Child workflows have many benefits:

The return value of a child workflow is its output. If a child workflow fails with an exception, then that exception is surfaced to the parent workflow, just like it is when an activity task fails with an exception. Child workflows also support automatic retry policies.

Terminating a parent workflow terminates all of the child workflows created by that workflow instance. See the terminate workflow API for more information.

Dapr Workflows allow you to schedule reminder-like durable delays for any time range, including minutes, days, or even years. These durable timers can be scheduled by workflows to implement simple delays or to set up ad-hoc timeouts on other async tasks. More specifically, a durable timer can be set to trigger on a particular date or after a specified duration. There is no limit on the maximum duration of durable timers, which are backed internally by actor reminders. For example, a workflow that tracks a 30-day free subscription to a service could be implemented using a durable timer that fires 30 days after the workflow is created. Workflows can be safely unloaded from memory while waiting for a durable timer to fire.
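Whether a timer is specified as a date or as a duration, it resolves to an absolute fire time, and that time should be derived from the workflow's deterministic clock (the context's current UTC time) rather than the system clock. The helper below is a hypothetical illustration of that calculation only; it does not create a durable timer.

```python
from datetime import datetime, timedelta, timezone
from typing import Union


def timer_fire_at(workflow_now: datetime, when: Union[datetime, timedelta]) -> datetime:
    """Normalize a timer request to an absolute UTC fire time.

    `workflow_now` must come from the workflow's deterministic clock, never
    from datetime.now(), so that every replay computes the same deadline.
    """
    if isinstance(when, timedelta):
        return workflow_now + when  # "after a specified duration"
    if when.tzinfo is None:
        raise ValueError("use timezone-aware datetimes for absolute fire times")
    return when.astimezone(timezone.utc)  # "on a particular date"
```

For the 30-day subscription example, a workflow created at 2024-01-01T00:00Z gets a fire time of 2024-01-31T00:00Z.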

Workflows support durable retry policies for activities and child workflows. Workflow retry policies are separate and distinct from Dapr resiliency policies in the following ways.

Retries are internally implemented using durable timers. This means that workflows can be safely unloaded from memory while waiting for a retry to fire, conserving system resources. This also means that delays between retries can be arbitrarily long, including minutes, hours, or even days.

It’s possible to use both workflow retry policies and Dapr resiliency policies together. For example, if a workflow activity uses a Dapr client to invoke a service, the Dapr client uses the configured resiliency policy. See Quickstart: Service-to-service resiliency for an example. However, if the activity itself fails for any reason, including exhausting the retries on the resiliency policy, then the workflow’s retry policy kicks in.

Because workflow retry policies are configured in code, the exact developer experience may vary depending on the version of the workflow SDK. In general, workflow retry policies can be configured with the following parameters.

| Parameter | Description |
|---|---|
| Maximum number of attempts | The maximum number of times to execute the activity or child workflow. |
| First retry interval | The amount of time to wait before the first retry. |
| Backoff coefficient | The coefficient used to determine the rate of increase of back-off. For example, a coefficient of 2 doubles the wait before each subsequent retry. |
| Maximum retry interval | The maximum amount of time to wait before each subsequent retry. |
| Retry timeout | The overall timeout for retries, regardless of any configured max number of attempts. |
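The parameters in the table combine into a schedule of wait times. The sketch below computes that schedule under simple assumptions: retry n waits the first retry interval multiplied by the backoff coefficient raised to n, capped at the maximum retry interval, and the retry timeout is checked against the sum of the delays only (the time spent running each attempt is ignored). `retry_delays` is a hypothetical helper; individual SDKs may count attempts or measure the timeout differently.

```python
from datetime import timedelta
from typing import List, Optional


def retry_delays(
    max_attempts: int,
    first_retry_interval: timedelta,
    backoff_coefficient: float = 1.0,
    max_retry_interval: Optional[timedelta] = None,
    retry_timeout: Optional[timedelta] = None,
) -> List[timedelta]:
    """Delays before each retry, ignoring how long the attempts themselves take."""
    delays: List[timedelta] = []
    elapsed = timedelta(0)
    # The first attempt is not a retry, so there are at most max_attempts - 1 delays.
    for retry_number in range(max_attempts - 1):
        delay = first_retry_interval * (backoff_coefficient ** retry_number)
        if max_retry_interval is not None:
            delay = min(delay, max_retry_interval)
        if retry_timeout is not None and elapsed + delay > retry_timeout:
            break  # the overall retry timeout wins over the attempt count
        delays.append(delay)
        elapsed += delay
    return delays
```

With the values used in the .NET task-chaining example later in this document (1 minute, coefficient 2.0, 1-hour cap, 10 attempts), the delays are 1, 2, 4, 8, 16, and 32 minutes, followed by three 60-minute waits.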

Sometimes workflows will need to wait for events that are raised by external systems. For example, an approval workflow may require a human to explicitly approve an order request within an order processing workflow if the total cost exceeds some threshold. Another example is a trivia game orchestration workflow that pauses while waiting for all participants to submit their answers to trivia questions. These mid-execution inputs are referred to as external events.

External events have a name and a payload and are delivered to a single workflow instance. Workflows can create “wait for external event” tasks that subscribe to external events and await those tasks to block execution until the event is received. The workflow can then read the payload of these events and make decisions about which next steps to take. External events can be processed serially or in parallel. External events can be raised by other workflows or by workflow code.

Workflows can also wait for multiple external event signals of the same name, in which case they are dispatched to the corresponding workflow tasks in a first-in, first-out (FIFO) manner. If a workflow receives an external event signal but has not yet created a “wait for external event” task, the event will be saved into the workflow’s history and consumed immediately after the workflow requests the event.
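The buffering and FIFO rules for external events can be modeled with two queues per event name: one for events that arrived before anyone waited, and one for waits that are still pending. `ExternalEventInbox` is a hypothetical, in-memory illustration for a single workflow instance, not the Dapr API; a real "wait for external event" task is durable and survives the workflow being unloaded.

```python
from collections import defaultdict, deque
from typing import Any, Deque, Dict, List


class ExternalEventInbox:
    """Toy model of external-event delivery to one workflow instance."""

    def __init__(self) -> None:
        # Events raised before a matching wait exists.
        self._buffered: Dict[str, Deque[Any]] = defaultdict(deque)
        # Waits created before a matching event was raised.
        self._waiting: Dict[str, Deque[List[Any]]] = defaultdict(deque)

    def raise_event(self, name: str, payload: Any) -> None:
        if self._waiting[name]:
            # Deliver to the oldest waiting task first (FIFO).
            self._waiting[name].popleft().append(payload)
        else:
            # No waiter yet: save the event so a later wait consumes it immediately.
            self._buffered[name].append(payload)

    def wait_for(self, name: str) -> List[Any]:
        """Return a slot that holds the payload once the event arrives."""
        slot: List[Any] = []
        if self._buffered[name]:
            slot.append(self._buffered[name].popleft())
        else:
            self._waiting[name].append(slot)
        return slot
```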

Learn more about external system interaction.

Workflow state can be purged from a state store, purging all its history and removing all metadata related to a specific workflow instance. The purge capability is used for workflows that have run to a COMPLETED, FAILED, or TERMINATED state.
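The purge rule amounts to a status check before an instance's records are deleted. This toy version uses a dict as the state store; `purge_instance` and the record layout are hypothetical, and the real purge operation is exposed through the Workflow API.

```python
from typing import Dict

# Only instances that have reached one of these states may be purged.
PURGEABLE_STATES = {"COMPLETED", "FAILED", "TERMINATED"}


def purge_instance(store: Dict[str, dict], instance_id: str) -> bool:
    """Remove an instance's history and metadata from a toy state store.

    Returns False if the instance does not exist; raises if it is still active.
    """
    record = store.get(instance_id)
    if record is None:
        return False
    if record["status"] not in PURGEABLE_STATES:
        raise RuntimeError(f"cannot purge {instance_id}: status is {record['status']}")
    del store[instance_id]  # history and metadata are removed together
    return True
```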

Learn more in the workflow API reference guide.

To take advantage of the workflow replay technique, your workflow code needs to be deterministic. For your workflow code to be deterministic, you may need to work around some limitations.

APIs that generate random numbers, random UUIDs, or the current date are non-deterministic. To work around this limitation, you can:

For example, instead of this:

```csharp
// DON'T DO THIS!
DateTime currentTime = DateTime.UtcNow;
Guid newIdentifier = Guid.NewGuid();
string randomString = GetRandomString();
```

```java
// DON'T DO THIS!
Instant currentTime = Instant.now();
UUID newIdentifier = UUID.randomUUID();
String randomString = getRandomString();
```

```javascript
// DON'T DO THIS!
const currentTime = new Date();
const newIdentifier = uuidv4();
const randomString = getRandomString();
```

```go
// DON'T DO THIS!
currentTime := time.Now()
```

Do this:

```csharp
// Do this!!
DateTime currentTime = context.CurrentUtcDateTime;
Guid newIdentifier = context.NewGuid();
// Use "nameof" to prevent specifying an activity name that does not exist in your application
string randomString = await context.CallActivityAsync<string>(nameof(GetRandomString));
```

```java
// Do this!!
Instant currentTime = context.getCurrentInstant();
UUID newIdentifier = context.newUuid();
String randomString = context.callActivity(GetRandomString.class.getName(), String.class).await();
```

```javascript
// Do this!!
const currentTime = context.getCurrentUtcDateTime();
const randomString = yield context.callActivity(getRandomString);
```

```go
// Do this!!
currentTime := ctx.CurrentUTCDateTime()
```
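Why do the context-provided helpers stay deterministic? If the "current" time comes from the workflow history and new IDs are derived from inputs that replay identically, every replay produces the same values. The class below is a hypothetical illustration that uses name-based (version 5) UUIDs; the actual SDKs implement `context.NewGuid()`, `CurrentUtcDateTime`, and their equivalents with their own algorithms.

```python
import uuid
from datetime import datetime, timezone


class ReplaySafeContext:
    """Hypothetical context showing why deterministic helpers replay safely."""

    def __init__(self, instance_id: str, orchestration_time: datetime) -> None:
        self.instance_id = instance_id
        # The "current" time is read from the workflow history, not the system clock.
        self.current_utc_datetime = orchestration_time
        self._uuid_counter = 0

    def new_uuid(self) -> uuid.UUID:
        # Name-based (version 5) UUIDs are a pure function of their inputs,
        # so each replay regenerates the same sequence of IDs.
        name = f"{self.instance_id}/{self.current_utc_datetime.isoformat()}/{self._uuid_counter}"
        self._uuid_counter += 1
        return uuid.uuid5(uuid.NAMESPACE_URL, name)
```

Two contexts built from the same instance ID and recorded time (for example, the original run and a later replay) hand out identical UUID sequences.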

External data includes any data that isn’t stored in the workflow state. Workflows must not interact with global variables, environment variables, or the file system, and must not make network calls.

Instead, workflows should interact with external state indirectly using workflow inputs, activity tasks, and through external event handling.

For example, instead of this:

```csharp
// DON'T DO THIS!
string configuration = Environment.GetEnvironmentVariable("MY_CONFIGURATION")!;
string data = await new HttpClient().GetStringAsync("https://example.com/api/data");
```

```java
// DON'T DO THIS!
String configuration = System.getenv("MY_CONFIGURATION");
HttpRequest request = HttpRequest.newBuilder().uri(new URI("https://postman-echo.com/post")).GET().build();
HttpResponse<String> response = HttpClient.newBuilder().build().send(request, HttpResponse.BodyHandlers.ofString());
```

```javascript
// DON'T DO THIS!
// Accessing an Environment Variable (Node.js)
const configuration = process.env.MY_CONFIGURATION;

fetch('https://postman-echo.com/get')
  .then(response => response.text())
  .then(data => {
    console.log(data);
  })
  .catch(error => {
    console.error('Error:', error);
  });
```

```go
// DON'T DO THIS!
resp, err := http.Get("http://example.com/api/data")
```

Do this:

```csharp
// Do this!!
string configuration = workflowInput.Configuration; // imaginary workflow input argument
string data = await context.CallActivityAsync<string>(nameof(MakeHttpCall), "https://example.com/api/data");
```

```java
// Do this!!
String configuration = ctx.getInput(InputType.class).getConfiguration(); // imaginary workflow input argument
String data = ctx.callActivity(MakeHttpCall.class.getName(), "https://example.com/api/data", String.class).await();
```

```javascript
// Do this!!
const configuration = workflowInput.getConfiguration(); // imaginary workflow input argument
const data = yield ctx.callActivity(makeHttpCall, "https://example.com/api/data");
```

```go
// Do this!!
var output string
err := ctx.CallActivity(MakeHttpCallActivity, workflow.ActivityInput("https://example.com/api/data")).Await(&output)
```

The implementation of each language SDK requires that all workflow function operations operate on the same thread (goroutine, etc.) that the function was scheduled on. Workflow functions must never:

Failure to follow this rule could result in undefined behavior. Any background processing should instead be delegated to activity tasks, which can be scheduled to run serially or concurrently.

For example, instead of this:

```csharp
// DON'T DO THIS!
Task t = Task.Run(() => context.CallActivityAsync("DoSomething"));
await context.CreateTimer(TimeSpan.FromSeconds(5)).ConfigureAwait(false);
```

```java
// DON'T DO THIS!
new Thread(() -> {
  ctx.callActivity(DoSomethingActivity.class.getName()).await();
}).start();
ctx.createTimer(Duration.ofSeconds(5)).await();
```

Don’t declare JavaScript workflows as async. The Node.js runtime doesn’t guarantee that asynchronous functions are deterministic.

```go
// DON'T DO THIS!
go func() {
	err := ctx.CallActivity(DoSomething).Await(nil)
}()
err := ctx.CreateTimer(time.Second).Await(nil)
```

Do this:

```csharp
// Do this!!
Task t = context.CallActivityAsync(nameof(DoSomething));
await context.CreateTimer(TimeSpan.FromSeconds(5)).ConfigureAwait(true);
```

```java
// Do this!!
ctx.callActivity(DoSomethingActivity.class.getName()).await();
ctx.createTimer(Duration.ofSeconds(5)).await();
```

Since the Node.js runtime doesn’t guarantee that asynchronous functions are deterministic, always declare JavaScript workflows as synchronous generator functions.

```go
// Do this!
task := ctx.CallActivity(DoSomething)
if err := task.Await(nil); err != nil {
	return nil, err
}
```

Make sure updates you make to the workflow code maintain its determinism. A couple of examples of code updates that can break workflow determinism:

Changing workflow function signatures: Changing the name, input, or output of a workflow or activity function is considered a breaking change and must be avoided.

Changing the number or order of workflow tasks: Changing the number or order of workflow tasks causes a workflow instance’s history to no longer match the code and may result in runtime errors or other unexpected behavior.
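A simplified way to see why reordering or renaming tasks breaks in-flight instances is to compare the task names already recorded in an instance's history with the order in which the updated code would schedule them on replay. `first_incompatibility` is a hypothetical sketch that catches only mismatches within the recorded prefix; it is not a substitute for the engine's own replay validation.

```python
from typing import List, Optional


def first_incompatibility(recorded: List[str], new_code: List[str]) -> Optional[str]:
    """Compare task names already in an instance's history with the order
    the updated workflow code would schedule them on replay."""
    for step, recorded_name in enumerate(recorded):
        if step >= len(new_code):
            return f"step {step}: history has {recorded_name!r}, new code schedules nothing"
        if new_code[step] != recorded_name:
            return f"step {step}: history has {recorded_name!r}, new code schedules {new_code[step]!r}"
    return None  # no mismatch within the recorded prefix
```

Inserting a `"validate"` task between `"reserve"` and `"charge"` is reported at step 1 for any instance that has already recorded the charge.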

To work around these constraints:

Dapr Workflows simplify complex, stateful coordination requirements in microservice architectures. The following sections describe several application patterns that can benefit from Dapr Workflows.

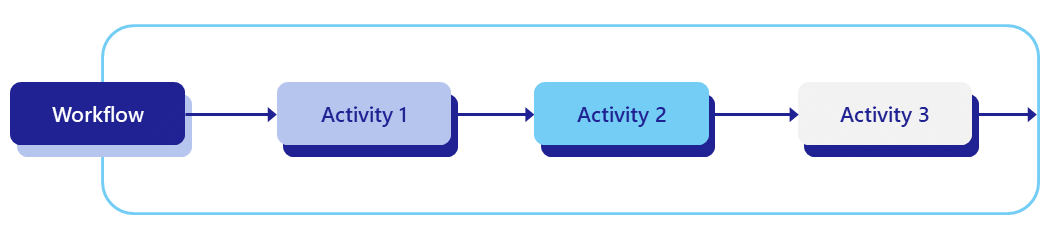

In the task chaining pattern, multiple steps in a workflow are run in succession, and the output of one step may be passed as the input to the next step. Task chaining workflows typically involve creating a sequence of operations that need to be performed on some data, such as filtering, transforming, and reducing.

In some cases, the steps of the workflow may need to be orchestrated across multiple microservices. For increased reliability and scalability, you’re also likely to use queues to trigger the various steps.

While the pattern is simple, there are many complexities hidden in the implementation. For example:

Dapr Workflow solves these complexities by allowing you to implement the task chaining pattern concisely as a simple function in the programming language of your choice, as shown in the following example.

```python
import dapr.ext.workflow as wf


def task_chain_workflow(ctx: wf.DaprWorkflowContext, wf_input: int):
    try:
        result1 = yield ctx.call_activity(step1, input=wf_input)
        result2 = yield ctx.call_activity(step2, input=result1)
        result3 = yield ctx.call_activity(step3, input=result2)
    except Exception as e:
        yield ctx.call_activity(error_handler, input=str(e))
        raise
    return [result1, result2, result3]


def step1(ctx, activity_input):
    print(f'Step 1: Received input: {activity_input}.')
    # Do some work
    return activity_input + 1


def step2(ctx, activity_input):
    print(f'Step 2: Received input: {activity_input}.')
    # Do some work
    return activity_input * 2


def step3(ctx, activity_input):
    print(f'Step 3: Received input: {activity_input}.')
    # Do some work: square the input (`^` would be bitwise XOR in Python)
    return activity_input ** 2


def error_handler(ctx, error):
    print(f'Executing error handler: {error}.')
    # Apply some compensating work
```

Note: Workflow retry policies will be available in a future version of the Python SDK.

```typescript
import { DaprWorkflowClient, WorkflowActivityContext, WorkflowContext, WorkflowRuntime, TWorkflow } from "@dapr/dapr";

async function start() {
  // Update the gRPC client and worker to use a local address and port
  const daprHost = "localhost";
  const daprPort = "50001";
  const workflowClient = new DaprWorkflowClient({
    daprHost,
    daprPort,
  });
  const workflowRuntime = new WorkflowRuntime({
    daprHost,
    daprPort,
  });

  const hello = async (_: WorkflowActivityContext, name: string) => {
    return `Hello ${name}!`;
  };

  const sequence: TWorkflow = async function* (ctx: WorkflowContext): any {
    const cities: string[] = [];

    const result1 = yield ctx.callActivity(hello, "Tokyo");
    cities.push(result1);
    const result2 = yield ctx.callActivity(hello, "Seattle");
    cities.push(result2);
    const result3 = yield ctx.callActivity(hello, "London");
    cities.push(result3);

    return cities;
  };

  workflowRuntime.registerWorkflow(sequence).registerActivity(hello);

  // Wrap the worker startup in a try-catch block to handle any errors during startup
  try {
    await workflowRuntime.start();
    console.log("Workflow runtime started successfully");
  } catch (error) {
    console.error("Error starting workflow runtime:", error);
  }

  // Schedule a new orchestration
  try {
    const id = await workflowClient.scheduleNewWorkflow(sequence);
    console.log(`Orchestration scheduled with ID: ${id}`);

    // Wait for orchestration completion
    const state = await workflowClient.waitForWorkflowCompletion(id, undefined, 30);

    console.log(`Orchestration completed! Result: ${state?.serializedOutput}`);
  } catch (error) {
    console.error("Error scheduling or waiting for orchestration:", error);
  }

  await workflowRuntime.stop();
  await workflowClient.stop();

  // stop the dapr side car
  process.exit(0);
}

start().catch((e) => {
  console.error(e);
  process.exit(1);
});
```

```csharp
// Exponential backoff retry policy that survives long outages
var retryOptions = new WorkflowTaskOptions
{
    RetryPolicy = new WorkflowRetryPolicy(
        firstRetryInterval: TimeSpan.FromMinutes(1),
        backoffCoefficient: 2.0,
        maxRetryInterval: TimeSpan.FromHours(1),
        maxNumberOfAttempts: 10),
};

try
{
    var result1 = await context.CallActivityAsync<string>("Step1", wfInput, retryOptions);
    var result2 = await context.CallActivityAsync<byte[]>("Step2", result1, retryOptions);
    var result3 = await context.CallActivityAsync<long[]>("Step3", result2, retryOptions);
    return string.Join(", ", result3);
}
catch (TaskFailedException) // Task failures are surfaced as TaskFailedException
{
    // Retries expired - apply custom compensation logic
    await context.CallActivityAsync<long[]>("MyCompensation", options: retryOptions);
    throw;
}
```

Note: In the example above, "Step1", "Step2", "Step3", and "MyCompensation" represent workflow activities, which are functions in your code that actually implement the steps of the workflow. For brevity, these activity implementations are left out of this example.

```java
public class ChainWorkflow extends Workflow {
  @Override
  public WorkflowStub create() {
    return ctx -> {
      StringBuilder sb = new StringBuilder();
      String wfInput = ctx.getInput(String.class);
      String result1 = ctx.callActivity("Step1", wfInput, String.class).await();
      String result2 = ctx.callActivity("Step2", result1, String.class).await();
      String result3 = ctx.callActivity("Step3", result2, String.class).await();
      String result = sb.append(result1).append(',').append(result2).append(',').append(result3).toString();
      ctx.complete(result);
    };
  }
}

class Step1 implements WorkflowActivity {
  @Override
  public Object run(WorkflowActivityContext ctx) {
    Logger logger = LoggerFactory.getLogger(Step1.class);
    logger.info("Starting Activity: " + ctx.getName());
    // Do some work
    return null;
  }
}

class Step2 implements WorkflowActivity {
  @Override
  public Object run(WorkflowActivityContext ctx) {
    Logger logger = LoggerFactory.getLogger(Step2.class);
    logger.info("Starting Activity: " + ctx.getName());
    // Do some work
    return null;
  }
}

class Step3 implements WorkflowActivity {
  @Override
  public Object run(WorkflowActivityContext ctx) {
    Logger logger = LoggerFactory.getLogger(Step3.class);
    logger.info("Starting Activity: " + ctx.getName());
    // Do some work
    return null;
  }
}
```

```go
func TaskChainWorkflow(ctx *workflow.WorkflowContext) (any, error) {
	var input int
	if err := ctx.GetInput(&input); err != nil {
		return nil, err
	}
	var result1 int
	if err := ctx.CallActivity(Step1, workflow.ActivityInput(input)).Await(&result1); err != nil {
		return nil, err
	}
	// Pass each step's output as the input to the next step.
	var result2 int
	if err := ctx.CallActivity(Step2, workflow.ActivityInput(result1)).Await(&result2); err != nil {
		return nil, err
	}
	var result3 int
	if err := ctx.CallActivity(Step3, workflow.ActivityInput(result2)).Await(&result3); err != nil {
		return nil, err
	}
	return []int{result1, result2, result3}, nil
}

func Step1(ctx workflow.ActivityContext) (any, error) {
	var input int
	if err := ctx.GetInput(&input); err != nil {
		return nil, err
	}
	fmt.Printf("Step 1: Received input: %d\n", input)
	return input + 1, nil
}

func Step2(ctx workflow.ActivityContext) (any, error) {
	var input int
	if err := ctx.GetInput(&input); err != nil {
		return nil, err
	}
	fmt.Printf("Step 2: Received input: %d\n", input)
	return input * 2, nil
}

func Step3(ctx workflow.ActivityContext) (any, error) {
	var input int
	if err := ctx.GetInput(&input); err != nil {
		return nil, err
	}
	fmt.Printf("Step 3: Received input: %d\n", input)
	return int(math.Pow(float64(input), 2)), nil
}
```

As you can see, the workflow is expressed as a simple series of statements in the programming language of your choice. This allows any engineer in the organization to quickly understand the end-to-end flow without necessarily needing to understand the end-to-end system architecture.

Behind the scenes, the Dapr Workflow runtime:

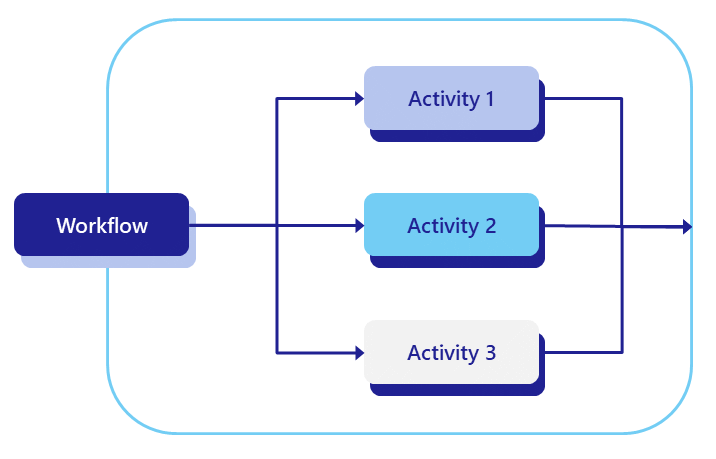

In the fan-out/fan-in design pattern, you execute multiple tasks simultaneously across potentially multiple workers, wait for them to finish, and perform some aggregation on the result.

In addition to the challenges mentioned in the previous pattern, there are several important questions to consider when implementing the fan-out/fan-in pattern manually:

Dapr Workflow provides a way to express the fan-out/fan-in pattern as a simple function, as shown in the following example:

```python
import time
from typing import List

import dapr.ext.workflow as wf


def batch_processing_workflow(ctx: wf.DaprWorkflowContext, wf_input: int):
    # get a batch of N work items to process in parallel
    work_batch = yield ctx.call_activity(get_work_batch, input=wf_input)

    # schedule N parallel tasks to process the work items and wait for all to complete
    parallel_tasks = [ctx.call_activity(process_work_item, input=work_item) for work_item in work_batch]
    outputs = yield wf.when_all(parallel_tasks)

    # aggregate the results and send them to another activity
    total = sum(outputs)
    yield ctx.call_activity(process_results, input=total)


def get_work_batch(ctx, batch_size: int) -> List[int]:
    return [i + 1 for i in range(batch_size)]


def process_work_item(ctx, work_item: int) -> int:
    print(f'Processing work item: {work_item}.')
    time.sleep(5)
    result = work_item * 2
    print(f'Work item {work_item} processed. Result: {result}.')
    return result


def process_results(ctx, final_result: int):
    print(f'Final result: {final_result}.')
```

```typescript
import {
  Task,
  DaprWorkflowClient,
  WorkflowActivityContext,
  WorkflowContext,
  WorkflowRuntime,
  TWorkflow,
} from "@dapr/dapr";

// Wrap the entire code in an async function so that `await` can be used
async function start() {
  // Update the gRPC client and worker to use a local address and port
  const daprHost = "localhost";
  const daprPort = "50001";
  const workflowClient = new DaprWorkflowClient({
    daprHost,
    daprPort,
  });
  const workflowRuntime = new WorkflowRuntime({
    daprHost,
    daprPort,
  });

  function getRandomInt(min: number, max: number): number {
    return Math.floor(Math.random() * (max - min + 1)) + min;
  }

  async function getWorkItemsActivity(_: WorkflowActivityContext): Promise<string[]> {
    const count: number = getRandomInt(2, 10);
    console.log(`generating ${count} work items...`);

    const workItems: string[] = Array.from({ length: count }, (_, i) => `work item ${i}`);
    return workItems;
  }

  function sleep(ms: number): Promise<void> {
    return new Promise((resolve) => setTimeout(resolve, ms));
  }

  async function processWorkItemActivity(context: WorkflowActivityContext, item: string): Promise<number> {
    console.log(`processing work item: ${item}`);

    // Simulate some work that takes a variable amount of time
    const sleepTime = Math.random() * 5000;
    await sleep(sleepTime);

    // Return a result for the given work item, which is also a random number in this case
    // For more information about random numbers in workflow please check
    // https://learn.microsoft.com/azure/azure-functions/durable/durable-functions-code-constraints?tabpane=csharp#random-numbers
    return Math.floor(Math.random() * 11);
  }

  const workflow: TWorkflow = async function* (ctx: WorkflowContext): any {
    const tasks: Task<any>[] = [];
    const workItems = yield ctx.callActivity(getWorkItemsActivity);
    for (const workItem of workItems) {
      tasks.push(ctx.callActivity(processWorkItemActivity, workItem));
    }
    const results: number[] = yield ctx.whenAll(tasks);
    const sum: number = results.reduce((accumulator, currentValue) => accumulator + currentValue, 0);
    return sum;
  };

  workflowRuntime.registerWorkflow(workflow);
  workflowRuntime.registerActivity(getWorkItemsActivity);
  workflowRuntime.registerActivity(processWorkItemActivity);

  // Wrap the worker startup in a try-catch block to handle any errors during startup
  try {
    await workflowRuntime.start();
    console.log("Worker started successfully");
  } catch (error) {
    console.error("Error starting worker:", error);
  }

  // Schedule a new orchestration
  try {
    const id = await workflowClient.scheduleNewWorkflow(workflow);
    console.log(`Orchestration scheduled with ID: ${id}`);

    // Wait for orchestration completion
    const state = await workflowClient.waitForWorkflowCompletion(id, undefined, 30);

    console.log(`Orchestration completed! Result: ${state?.serializedOutput}`);
  } catch (error) {
    console.error("Error scheduling or waiting for orchestration:", error);
  }

  // stop worker and client
  await workflowRuntime.stop();
  await workflowClient.stop();

  // stop the dapr side car
  process.exit(0);
}

start().catch((e) => {
  console.error(e);
  process.exit(1);
});
```

// Get a list of N work items to process in parallel.

object[] workBatch = await context.CallActivityAsync<object[]>("GetWorkBatch", null);

// Schedule the parallel tasks, but don't wait for them to complete yet.

var parallelTasks = new List<Task<int>>(workBatch.Length);

for (int i = 0; i < workBatch.Length; i++)

{

Task<int> task = context.CallActivityAsync<int>("ProcessWorkItem", workBatch[i]);

parallelTasks.Add(task);

}

// Everything is scheduled. Wait here until all parallel tasks have completed.

await Task.WhenAll(parallelTasks);

// Aggregate all N outputs and publish the result.

int sum = parallelTasks.Sum(t => t.Result);

await context.CallActivityAsync("PostResults", sum);

public class FaninoutWorkflow extends Workflow {

@Override

public WorkflowStub create() {

return ctx -> {

// Get a list of N work items to process in parallel.

Object[] workBatch = ctx.callActivity("GetWorkBatch", Object[].class).await();

// Schedule the parallel tasks, but don't wait for them to complete yet.

List<Task<Integer>> tasks = Arrays.stream(workBatch)

.map(workItem -> ctx.callActivity("ProcessWorkItem", workItem, int.class))

.collect(Collectors.toList());

// Everything is scheduled. Wait here until all parallel tasks have completed.

List<Integer> results = ctx.allOf(tasks).await();

// Aggregate all N outputs and publish the result.

int sum = results.stream().mapToInt(Integer::intValue).sum();

ctx.complete(sum);

};

}

}

func BatchProcessingWorkflow(ctx *workflow.WorkflowContext) (any, error) {

var input int

if err := ctx.GetInput(&input); err != nil {

return 0, err

}

var workBatch []int

if err := ctx.CallActivity(GetWorkBatch, workflow.ActivityInput(input)).Await(&workBatch); err != nil {

return 0, err

}

parallelTasks := workflow.NewTaskSlice(len(workBatch))

for i, workItem := range workBatch {

parallelTasks[i] = ctx.CallActivity(ProcessWorkItem, workflow.ActivityInput(workItem))

}

var outputs int

for _, task := range parallelTasks {

var output int

err := task.Await(&output)

if err == nil {

outputs += output

} else {

return 0, err

}

}

if err := ctx.CallActivity(ProcessResults, workflow.ActivityInput(outputs)).Await(nil); err != nil {

return 0, err

}

return 0, nil

}

func GetWorkBatch(ctx workflow.ActivityContext) (any, error) {

var batchSize int

if err := ctx.GetInput(&batchSize); err != nil {

return 0, err

}

batch := make([]int, batchSize)

for i := 0; i < batchSize; i++ {

batch[i] = i

}

return batch, nil

}

func ProcessWorkItem(ctx workflow.ActivityContext) (any, error) {

var workItem int

if err := ctx.GetInput(&workItem); err != nil {

return 0, err

}

fmt.Printf("Processing work item: %d\n", workItem)

time.Sleep(time.Second * 5)

result := workItem * 2

fmt.Printf("Work item %d processed. Result: %d\n", workItem, result)

return result, nil

}

func ProcessResults(ctx workflow.ActivityContext) (any, error) {

var finalResult int

if err := ctx.GetInput(&finalResult); err != nil {

return 0, err

}

fmt.Printf("Final result: %d\n", finalResult)

return finalResult, nil

}

The key takeaways from this example are:

Furthermore, the execution of the workflow is durable. If a workflow starts 100 parallel task executions and only 40 complete before the process crashes, the workflow restarts itself automatically and only schedules the remaining 60 tasks.
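To make the durability claim concrete, here is a toy, in-memory model. It is not Dapr code and not how the engine is implemented. Completed task results are recorded in a `history` dictionary that survives a simulated crash, and on restart any item that already has a recorded result is skipped, so only the remaining work is scheduled. The function name, the `crash_after` parameter and the `item * 2` "activity" are hypothetical, and the model runs tasks one after another rather than in parallel.

```python
from typing import Dict, Iterable, Optional, Tuple


def run_fan_out(
    work_items: Iterable[int],
    history: Dict[int, int],
    crash_after: Optional[int] = None,
) -> Tuple[Optional[int], int]:
    """Toy fan-out/fan-in over a durable history of completed task results.

    `history` plays the role of the engine's persisted state: it survives
    between calls, the way workflow history survives a process crash.
    Returns (sum of results, or None if the run crashed; tasks scheduled in this run).
    """
    items = list(work_items)
    scheduled = 0
    for item in items:
        if item in history:
            # Completed before the crash: the result is replayed from history,
            # so the task is not scheduled again.
            continue
        if crash_after is not None and scheduled == crash_after:
            return None, scheduled  # simulated process crash
        scheduled += 1
        history[item] = item * 2  # hypothetical activity result, recorded durably
    # Fan-in: aggregate every result once all tasks have completed.
    return sum(history[item] for item in items), scheduled
```

Calling `run_fan_out(range(100), history, crash_after=40)` with an empty `history` stops after 40 tasks; calling it again with the same `history` schedules only the remaining 60 and returns the full sum.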

It’s possible to go further and limit the degree of concurrency using simple, language-specific constructs. The sample code below illustrates how to restrict the degree of fan-out to just 5 concurrent activity executions:

//Revisiting the earlier example...

// Get a list of N work items to process in parallel.

object[] workBatch = await context.CallActivityAsync<object[]>("GetWorkBatch", null);

const int MaxParallelism = 5;

var results = new List<int>();

var inFlightTasks = new HashSet<Task<int>>();

foreach(var workItem in workBatch)

{

if (inFlightTasks.Count >= MaxParallelism)

{

var finishedTask = await Task.WhenAny(inFlightTasks);

results.Add(finishedTask.Result);

inFlightTasks.Remove(finishedTask);

}

inFlightTasks.Add(context.CallActivityAsync<int>("ProcessWorkItem", workItem));

}

results.AddRange(await Task.WhenAll(inFlightTasks));

var sum = results.Sum(t => t);

await context.CallActivityAsync("PostResults", sum);

With the release of 1.16, it’s even easier to process workflow activities in parallel while putting an upper cap on concurrency by using the following extension methods on the WorkflowContext:

//Revisiting the earlier example...

// Get a list of work items to process

var workBatch = await context.CallActivityAsync<object[]>("GetWorkBatch", null);

// Process deterministically in parallel with an upper cap of 5 activities at a time

var results = await context.ProcessInParallelAsync(workBatch, workItem => context.CallActivityAsync<int>("ProcessWorkItem", workItem), maxConcurrency: 5);

var sum = results.Sum(t => t);

await context.CallActivityAsync("PostResults", sum);

Limiting the degree of concurrency in this way can be useful for limiting contention against shared resources. For example, if the activities need to call into external resources that have their own concurrency limits, such as databases or external APIs, it can be useful to ensure that no more than a specified number of activities call that resource concurrently.
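The windowing technique from the C# sample (keep at most N tasks in flight, and wait for any one of them to finish before starting the next) can be sketched with plain Python `asyncio`. This is ordinary application code, not workflow code: inside a Dapr workflow you would use the SDK's own task primitives (such as `when_any` in the Python SDK), because workflow code must be deterministic. `process_work_item` and its `item * 2` result are hypothetical stand-ins for the `ProcessWorkItem` activity, and the `stats` counter exists only to show that the cap holds.

```python
import asyncio
import random
from typing import Dict, Iterable, List, Set, Tuple

MAX_PARALLELISM = 5


async def process_work_item(item: int, stats: Dict[str, int]) -> int:
    """Hypothetical stand-in for the ProcessWorkItem activity."""
    stats["running"] += 1
    stats["peak"] = max(stats["peak"], stats["running"])
    await asyncio.sleep(random.uniform(0, 0.01))  # simulate variable work time
    stats["running"] -= 1
    return item * 2


async def bounded_fan_out(
    work_batch: Iterable[int], max_parallelism: int = MAX_PARALLELISM
) -> Tuple[int, int]:
    """Process work_batch with at most max_parallelism tasks in flight.

    Returns (sum of results, peak number of concurrently running tasks).
    """
    stats = {"running": 0, "peak": 0}
    results: List[int] = []
    in_flight: Set[asyncio.Task] = set()
    for item in work_batch:
        if len(in_flight) >= max_parallelism:
            # Window is full: wait for at least one task to finish and collect it.
            done, in_flight = await asyncio.wait(
                in_flight, return_when=asyncio.FIRST_COMPLETED
            )
            results.extend(task.result() for task in done)
        in_flight.add(asyncio.create_task(process_work_item(item, stats)))
    if in_flight:
        # Drain the tasks that are still running.
        done, _ = await asyncio.wait(in_flight)
        results.extend(task.result() for task in done)
    return sum(results), stats["peak"]
```

For example, `asyncio.run(bounded_fan_out(range(20)))` always returns a total of 380, and the reported peak never exceeds 5. Unlike the C# loop, `asyncio.wait` may hand back several finished tasks at once, which only frees the window faster.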

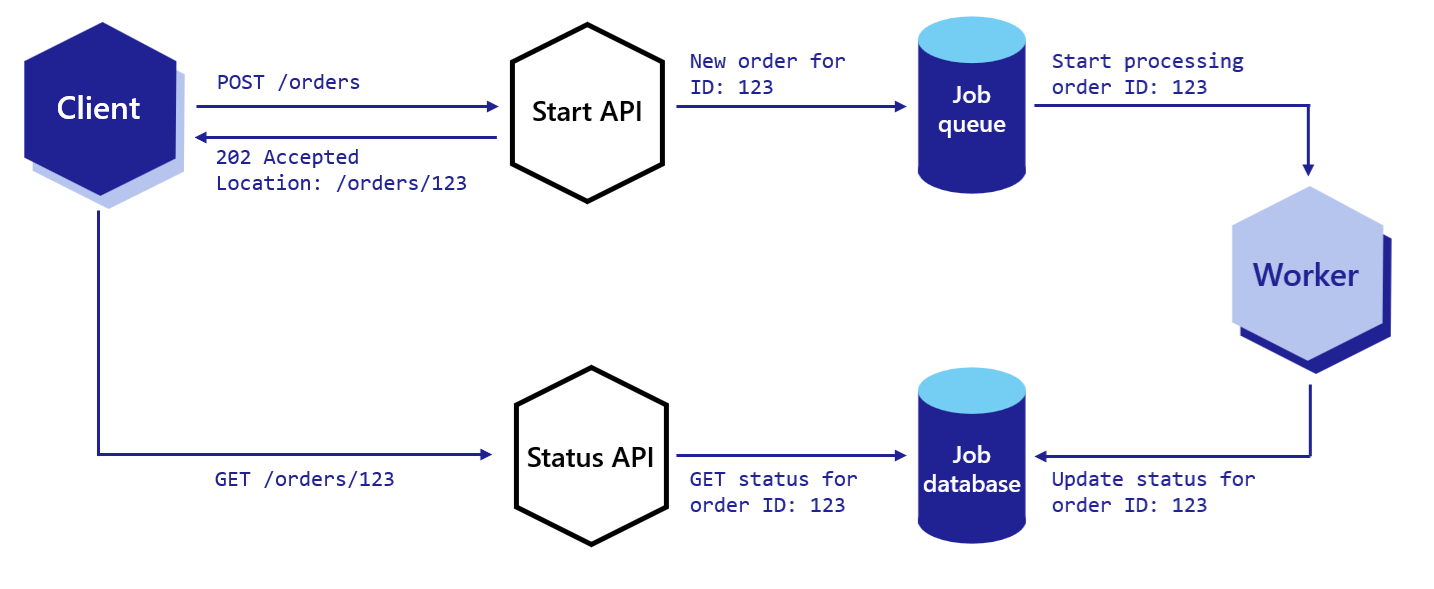

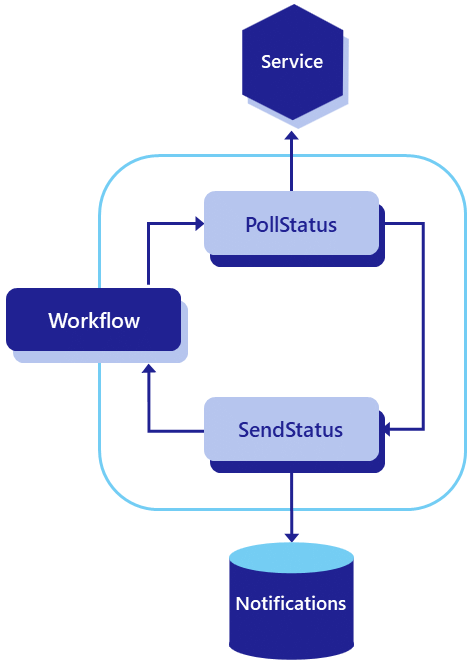

Asynchronous HTTP APIs are typically implemented using the Asynchronous Request-Reply pattern. Implementing this pattern traditionally involves the following:

The end-to-end flow is illustrated in the following diagram.

The challenge with implementing the asynchronous request-reply pattern is that it involves the use of multiple APIs and state stores. It also involves implementing the protocol correctly so that the client knows how to automatically poll for status and knows when the operation is complete.

The Dapr workflow HTTP API supports the asynchronous request-reply pattern out of the box, without requiring you to write any code or do any state management.

The following curl commands illustrate how the workflow APIs support this pattern.

curl -X POST "http://localhost:3500/v1.0/workflows/dapr/OrderProcessingWorkflow/start?instanceID=12345678" -d '{"Name":"Paperclips","Quantity":1,"TotalCost":9.95}'

The previous command will result in the following response JSON:

{"instanceID":"12345678"}

The HTTP client can then construct the status query URL using the workflow instance ID and poll it repeatedly until it sees the “COMPLETED”, “FAILED”, or “TERMINATED” status in the payload.

curl http://localhost:3500/v1.0/workflows/dapr/12345678

The following is an example of what an in-progress workflow status might look like.

{

"instanceID": "12345678",

"workflowName": "OrderProcessingWorkflow",

"createdAt": "2023-05-03T23:22:11.143069826Z",

"lastUpdatedAt": "2023-05-03T23:22:22.460025267Z",

"runtimeStatus": "RUNNING",

"properties": {

"dapr.workflow.custom_status": "",

"dapr.workflow.input": "{\"Name\":\"Paperclips\",\"Quantity\":1,\"TotalCost\":9.95}"

}

}

As you can see from the previous example, the workflow’s runtime status is RUNNING, which lets the client know that it should continue polling.

If the workflow has completed, the status might look as follows.

{

"instanceID": "12345678",

"workflowName": "OrderProcessingWorkflow",

"createdAt": "2023-05-03T23:30:11.381146313Z",

"lastUpdatedAt": "2023-05-03T23:30:52.923870615Z",

"runtimeStatus": "COMPLETED",

"properties": {

"dapr.workflow.custom_status": "",

"dapr.workflow.input": "{\"Name\":\"Paperclips\",\"Quantity\":1,\"TotalCost\":9.95}",

"dapr.workflow.output": "{\"Processed\":true}"

}

}

As you can see from the previous example, the runtime status of the workflow is now COMPLETED, which means the client can stop polling for updates.
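A client for the polling side of this protocol needs only an HTTP GET and a loop. The following Python sketch uses only the standard library. It assumes the sidecar listens on `localhost:3500` with the `dapr` workflow component, as in the curl examples, and treats `COMPLETED`, `FAILED` and `TERMINATED` as the statuses at which polling stops. The `fetch` parameter is injectable so the loop can be exercised without a running sidecar; the function names and the interval and timeout defaults are illustrative choices, not part of any Dapr SDK.

```python
import json
import time
import urllib.request
from typing import Callable, Dict

# Assumption: the sidecar's HTTP port and workflow component match the curl examples.
DAPR_HTTP_ENDPOINT = "http://localhost:3500"
# Assumption: statuses after which polling can stop.
TERMINAL_STATUSES = {"COMPLETED", "FAILED", "TERMINATED"}


def fetch_status(instance_id: str) -> Dict:
    """GET the workflow status payload from the sidecar."""
    url = f"{DAPR_HTTP_ENDPOINT}/v1.0/workflows/dapr/{instance_id}"
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def wait_for_workflow(
    instance_id: str,
    fetch: Callable[[str], Dict] = fetch_status,
    interval_s: float = 1.0,
    timeout_s: float = 60.0,
) -> Dict:
    """Poll until the workflow reaches a terminal status or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        state = fetch(instance_id)
        status = state.get("runtimeStatus")
        if status in TERMINAL_STATUSES:
            return state
        if time.monotonic() >= deadline:
            raise TimeoutError(f"workflow {instance_id} is still {status}")
        time.sleep(interval_s)  # e.g. RUNNING: wait, then poll again
```

Against a live sidecar, `wait_for_workflow("12345678")` would return a payload like the completed status shown earlier. A production client would likely add backoff between polls instead of a fixed interval.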

The monitor pattern is a recurring process that typically:

The following diagram provides a rough illustration of this pattern.

Depending on the business needs, there may be a single monitor or there may be multiple monitors, one for each business entity (for example, a stock). Furthermore, the amount of time to sleep may need to change, depending on the circumstances. These requirements make using cron-based scheduling systems impractical.

Dapr Workflow supports this pattern natively by allowing you to implement eternal workflows. Rather than writing infinite while-loops (which is an anti-pattern), Dapr Workflow exposes a continue-as-new API that workflow authors can use to restart a workflow function from the beginning with a new input.

from dataclasses import dataclass

from datetime import timedelta

import random

import dapr.ext.workflow as wf

@dataclass

class JobStatus:

job_id: str

is_healthy: bool

def status_monitor_workflow(ctx: wf.DaprWorkflowContext, job: JobStatus):

# poll a status endpoint associated with this job

status = yield ctx.call_activity(check_status, input=job)

if not ctx.is_replaying:

print(f"Job '{job.job_id}' is {status}.")

if status == "healthy":

job.is_healthy = True

next_sleep_interval = 60 # check less frequently when healthy

else:

if job.is_healthy:

job.is_healthy = False

yield ctx.call_activity(send_alert, input=f"Job '{job.job_id}' is unhealthy!")

next_sleep_interval = 5 # check more frequently when unhealthy

yield ctx.create_timer(fire_at=ctx.current_utc_datetime + timedelta(minutes=next_sleep_interval))

# restart from the beginning with a new JobStatus input

ctx.continue_as_new(job)

def check_status(ctx, _) -> str:

return random.choice(["healthy", "unhealthy"])

def send_alert(ctx, message: str):

print(f'*** Alert: {message}')

const statusMonitorWorkflow: TWorkflow = async function* (ctx: WorkflowContext): any {

let duration;

const status = yield ctx.callActivity(checkStatusActivity);

if (status === "healthy") {

// Check less frequently when in a healthy state

// set duration to 1 hour

duration = 60 * 60;

} else {

yield ctx.callActivity(alertActivity, "job unhealthy");

// Check more frequently when in an unhealthy state

// set duration to 5 minutes

duration = 5 * 60;

}

// Put the workflow to sleep until the determined time

yield ctx.createTimer(duration);

// Restart from the beginning with the updated state

ctx.continueAsNew();

};

public override async Task<object> RunAsync(WorkflowContext context, MyEntityState myEntityState)

{

TimeSpan nextSleepInterval;

var status = await context.CallActivityAsync<string>("GetStatus");

if (status == "healthy")

{

myEntityState.IsHealthy = true;

// Check less frequently when in a healthy state

nextSleepInterval = TimeSpan.FromMinutes(60);

}

else

{

if (myEntityState.IsHealthy)

{

myEntityState.IsHealthy = false;

await context.CallActivityAsync("SendAlert", myEntityState);

}

// Check more frequently when in an unhealthy state

nextSleepInterval = TimeSpan.FromMinutes(5);

}

// Put the workflow to sleep until the determined time

await context.CreateTimer(nextSleepInterval);

// Restart from the beginning with the updated state

context.ContinueAsNew(myEntityState);

return null;

}

This example assumes you have a predefined `MyEntityState` class with a boolean `IsHealthy` property.

public class MonitorWorkflow extends Workflow {

@Override

public WorkflowStub create() {

return ctx -> {

Duration nextSleepInterval;

var status = ctx.callActivity(DemoWorkflowStatusActivity.class.getName(), DemoStatusActivityOutput.class).await();

var isHealthy = status.getIsHealthy();

if (isHealthy) {

// Check less frequently when in a healthy state

nextSleepInterval = Duration.ofMinutes(60);

} else {

ctx.callActivity(DemoWorkflowAlertActivity.class.getName()).await();

// Check more frequently when in an unhealthy state

nextSleepInterval = Duration.ofMinutes(5);

}

// Put the workflow to sleep until the determined time

ctx.createTimer(nextSleepInterval).await();

// Restart from the beginning (no state is carried over in this sample, so the new input is null)

ctx.continueAsNew(null);

};

}

}

type JobStatus struct {

JobID string `json:"job_id"`

IsHealthy bool `json:"is_healthy"`

}

func StatusMonitorWorkflow(ctx *workflow.WorkflowContext) (any, error) {

var sleepInterval time.Duration

var job JobStatus

if err := ctx.GetInput(&job); err != nil {

return "", err

}

var status string

if err := ctx.CallActivity(CheckStatus, workflow.ActivityInput(job)).Await(&status); err != nil {

return "", err

}

if status == "healthy" {

job.IsHealthy = true

sleepInterval = time.Minute * 60

} else {

if job.IsHealthy {

job.IsHealthy = false

err := ctx.CallActivity(SendAlert, workflow.ActivityInput(fmt.Sprintf("Job '%s' is unhealthy!", job.JobID))).Await(nil)

if err != nil {

return "", err

}

}

sleepInterval = time.Minute * 5

}

if err := ctx.CreateTimer(sleepInterval).Await(nil); err != nil {

return "", err

}

ctx.ContinueAsNew(job, false)

return "", nil

}

func CheckStatus(ctx workflow.ActivityContext) (any, error) {

statuses := []string{"healthy", "unhealthy"}

return statuses[rand.Intn(len(statuses))], nil

}

func SendAlert(ctx workflow.ActivityContext) (any, error) {

var message string

if err := ctx.GetInput(&message); err != nil {

return "", err

}

fmt.Printf("*** Alert: %s\n", message)

return "", nil

}

A workflow implementing the monitor pattern can loop forever or it can terminate itself gracefully by not calling continue-as-new.
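Why prefer continue-as-new over a literal `while True` loop? In a replay-based engine, each wake-up re-executes the workflow function against its recorded history, so a looping instance's history, and the cost of every replay, keeps growing. The toy cost model below is an illustration, not Dapr's actual accounting. It assumes each monitor iteration records two events (an activity result and a timer firing), wakes up once for each, and replays the full history on every wake-up, while continue-as-new starts each iteration with an empty history.

```python
def replayed_events(iterations: int, continue_as_new: bool) -> int:
    """Total history events replayed across all wake-ups in a toy model."""
    history_len = 0
    replayed = 0
    for _ in range(iterations):
        for _event in ("activity_completed", "timer_fired"):
            replayed += history_len  # each wake-up replays the whole history so far
            history_len += 1  # ...then records the new event
        if continue_as_new:
            history_len = 0  # the next generation starts with a fresh history
    return replayed
```

Under these assumptions, ten iterations replay 190 events as a single looping instance but only 10 with continue-as-new: the looping cost grows quadratically with the number of iterations, while continue-as-new keeps it linear.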

In some cases, a workflow may need to pause and wait for an external system to perform some action. For example, a workflow may need to pause and wait for a payment to be received. In this case, a payment system might publish an event to a pub/sub topic on receipt of a payment, and a listener on that topic can raise an event to the workflow using the raise event workflow API.

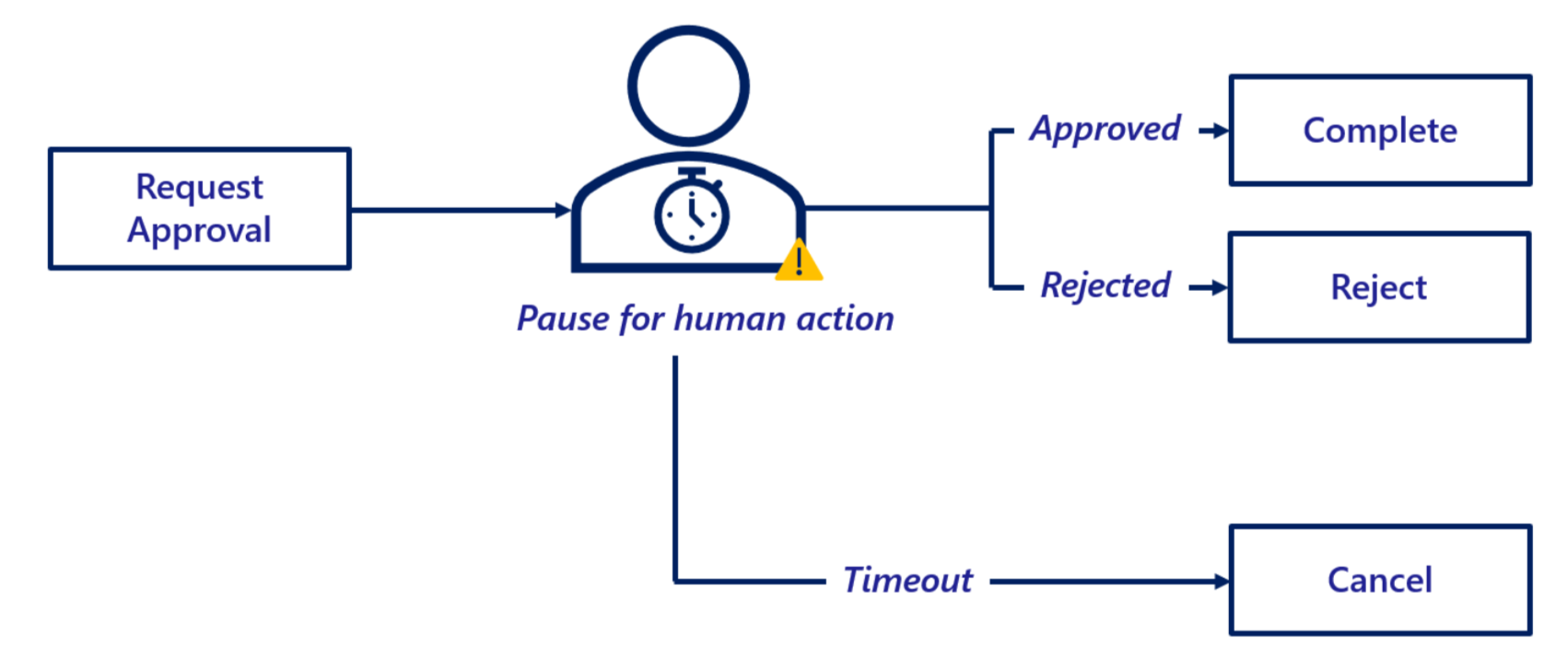

Another very common scenario is when a workflow needs to pause and wait for a human, for example when approving a purchase order. Dapr Workflow supports this event pattern via the external events feature.

Here’s an example workflow for a purchase order involving a human:

The following diagram illustrates this flow.

The following example code shows how this pattern can be implemented using Dapr Workflow.

from dataclasses import dataclass

from datetime import timedelta

import dapr.ext.workflow as wf

@dataclass

class Order:

cost: float

product: str

quantity: int

def __str__(self):

return f'{self.product} ({self.quantity})'

@dataclass

class Approval:

approver: str

@staticmethod

def from_dict(dict):

return Approval(**dict)

def purchase_order_workflow(ctx: wf.DaprWorkflowContext, order: Order):

# Orders under $1000 are auto-approved

if order.cost < 1000:

return "Auto-approved"

# Orders of $1000 or more require manager approval

yield ctx.call_activity(send_approval_request, input=order)

# Approvals must be received within 24 hours or they will be canceled.

approval_event = ctx.wait_for_external_event("approval_received")

timeout_event = ctx.create_timer(timedelta(hours=24))

winner = yield wf.when_any([approval_event, timeout_event])

if winner == timeout_event:

return "Cancelled"

# The order was approved

yield ctx.call_activity(place_order, input=order)

approval_details = Approval.from_dict(approval_event.get_result())

return f"Approved by '{approval_details.approver}'"

def send_approval_request(_, order: Order) -> None:

print(f'*** Sending approval request for order: {order}')

def place_order(_, order: Order) -> None:

print(f'*** Placing order: {order}')

import {

Task,

DaprWorkflowClient,

WorkflowActivityContext,

WorkflowContext,

WorkflowRuntime,

TWorkflow,

} from "@dapr/dapr";

import * as readlineSync from "readline-sync";

// Wrap the entire code in an immediately-invoked async function

async function start() {

class Order {

cost: number;

product: string;

quantity: number;

constructor(cost: number, product: string, quantity: number) {

this.cost = cost;

this.product = product;

this.quantity = quantity;

}

}

function sleep(ms: number): Promise<void> {

return new Promise((resolve) => setTimeout(resolve, ms));

}

// Update the gRPC client and worker to use a local address and port

const daprHost = "localhost";

const daprPort = "50001";

const workflowClient = new DaprWorkflowClient({

daprHost,

daprPort,

});

const workflowRuntime = new WorkflowRuntime({

daprHost,

daprPort,

});

// Activity function that sends an approval request to the manager

const sendApprovalRequest = async (_: WorkflowActivityContext, order: Order) => {

// Simulate some work that takes an amount of time

await sleep(3000);

console.log(`Sending approval request for order: ${order.product}`);

};

// Activity function that places an order

const placeOrder = async (_: WorkflowActivityContext, order: Order) => {

console.log(`Placing order: ${order.product}`);

};

// Orchestrator function that represents a purchase order workflow

const purchaseOrderWorkflow: TWorkflow = async function* (ctx: WorkflowContext, order: Order): any {

// Orders under $1000 are auto-approved

if (order.cost < 1000) {

return "Auto-approved";

}

// Orders of $1000 or more require manager approval

yield ctx.callActivity(sendApprovalRequest, order);

// Approvals must be received within 24 hours or they will be canceled.

const tasks: Task<any>[] = [];

const approvalEvent = ctx.waitForExternalEvent("approval_received");

const timeoutEvent = ctx.createTimer(24 * 60 * 60);

tasks.push(approvalEvent);

tasks.push(timeoutEvent);

const winner = yield ctx.whenAny(tasks);

if (winner == timeoutEvent) {

return "Cancelled";

}

yield ctx.callActivity(placeOrder, order);

const approvalDetails = approvalEvent.getResult();

return `Approved by ${approvalDetails.approver}`;

};

workflowRuntime

.registerWorkflow(purchaseOrderWorkflow)

.registerActivity(sendApprovalRequest)

.registerActivity(placeOrder);

// Wrap the worker startup in a try-catch block to handle any errors during startup

try {

await workflowRuntime.start();

console.log("Worker started successfully");

} catch (error) {

console.error("Error starting worker:", error);

}

// Schedule a new orchestration

try {

const cost = readlineSync.questionInt("Cost of your order:");

const approver = readlineSync.question("Approver of your order:");

const timeout = readlineSync.questionInt("Timeout for your order in seconds:");

const order = new Order(cost, "MyProduct", 1);

const id = await workflowClient.scheduleNewWorkflow(purchaseOrderWorkflow, order);

console.log(`Orchestration scheduled with ID: ${id}`);

// prompt for approval asynchronously

promptForApproval(approver, workflowClient, id);

// Wait for orchestration completion

const state = await workflowClient.waitForWorkflowCompletion(id, undefined, timeout + 2);

console.log(`Orchestration completed! Result: ${state?.serializedOutput}`);

} catch (error) {

console.error("Error scheduling or waiting for orchestration:", error);

}

// stop worker and client

await workflowRuntime.stop();

await workflowClient.stop();

// stop the dapr side car

process.exit(0);

}

async function promptForApproval(approver: string, workflowClient: DaprWorkflowClient, id: string) {

if (readlineSync.keyInYN("Press [Y] to approve the order... Y/yes, N/no")) {

const approvalEvent = { approver: approver };

await workflowClient.raiseEvent(id, "approval_received", approvalEvent);

} else {

return "Order rejected";

}

}

start().catch((e) => {

console.error(e);

process.exit(1);

});

public override async Task<OrderResult> RunAsync(WorkflowContext context, OrderPayload order)

{

// ...(other steps)...

// Require orders over a certain threshold to be approved

if (order.TotalCost > OrderApprovalThreshold)

{

try

{

// Request human approval for this order

await context.CallActivityAsync(nameof(RequestApprovalActivity), order);

// Pause and wait for a human to approve the order

ApprovalResult approvalResult = await context.WaitForExternalEventAsync<ApprovalResult>(

eventName: "ManagerApproval",

timeout: TimeSpan.FromDays(3));

if (approvalResult == ApprovalResult.Rejected)

{

// The order was rejected, end the workflow here

return new OrderResult(Processed: false);

}

}

catch (TaskCanceledException)

{

// An approval timeout results in automatic order cancellation

return new OrderResult(Processed: false);

}

}

// ...(other steps)...

// End the workflow with a success result

return new OrderResult(Processed: true);

}

Note: In the example above, `RequestApprovalActivity` is the name of a workflow activity to invoke and `ApprovalResult` is an enumeration defined by the workflow app. For brevity, these definitions were left out of the example code.

public class ExternalSystemInteractionWorkflow extends Workflow {

@Override

public WorkflowStub create() {

return ctx -> {

// ...other steps...

Integer orderCost = ctx.getInput(int.class);

// Require orders over a certain threshold to be approved

if (orderCost > ORDER_APPROVAL_THRESHOLD) {

try {

// Request human approval for this order

ctx.callActivity("RequestApprovalActivity", orderCost, Void.class).await();

// Pause and wait for a human to approve the order

boolean approved = ctx.waitForExternalEvent("ManagerApproval", Duration.ofDays(3), boolean.class).await();

if (!approved) {

// The order was rejected, end the workflow here

ctx.complete("Process reject");

return;

}

} catch (TaskCanceledException e) {

// An approval timeout results in automatic order cancellation

ctx.complete("Process cancel");

return;

}

}

// ...other steps...

// End the workflow with a success result

ctx.complete("Process approved");

};

}

}

type Order struct {

Cost float64 `json:"cost"`

Product string `json:"product"`

Quantity int `json:"quantity"`

}

type Approval struct {

Approver string `json:"approver"`

}

func PurchaseOrderWorkflow(ctx *workflow.WorkflowContext) (any, error) {

var order Order

if err := ctx.GetInput(&order); err != nil {

return "", err

}

// Orders under $1000 are auto-approved

if order.Cost < 1000 {

return "Auto-approved", nil

}

// Orders of $1000 or more require manager approval

if err := ctx.CallActivity(SendApprovalRequest, workflow.ActivityInput(order)).Await(nil); err != nil {

return "", err

}

// Approvals must be received within 24 hours or they will be cancelled

var approval Approval

if err := ctx.WaitForExternalEvent("approval_received", time.Hour*24).Await(&approval); err != nil {

// Assume a timeout occurred; in any case, treat it as an error.

return "error/cancelled", err

}

// The order was approved

if err := ctx.CallActivity(PlaceOrder, workflow.ActivityInput(order)).Await(nil); err != nil {

return "", err

}

return fmt.Sprintf("Approved by %s", approval.Approver), nil

}

func SendApprovalRequest(ctx workflow.ActivityContext) (any, error) {

var order Order

if err := ctx.GetInput(&order); err != nil {

return "", err

}

fmt.Printf("*** Sending approval request for order: %v\n", order)

return "", nil

}

func PlaceOrder(ctx workflow.ActivityContext) (any, error) {

var order Order

if err := ctx.GetInput(&order); err != nil {

return "", err

}

fmt.Printf("*** Placing order: %v\n", order)

return "", nil

}

The code that delivers the event to resume the workflow execution is external to the workflow. Workflow events can be delivered to a waiting workflow instance using the raise event workflow management API, as shown in the following example:

from dapr.clients import DaprClient

from dataclasses import asdict

with DaprClient() as d:

d.raise_workflow_event(

instance_id=instance_id,

workflow_component="dapr",

event_name="approval_received",

event_data=asdict(Approval("Jane Doe")))

import { DaprWorkflowClient } from "@dapr/dapr";

const workflowClient = new DaprWorkflowClient({ daprHost: "localhost", daprPort: "50001" });

// Raise the "approval_received" event on the waiting workflow instance

await workflowClient.raiseEvent(instanceId, "approval_received", { approver: "Jane Doe" });

// Raise the workflow event to the waiting workflow

await daprClient.RaiseWorkflowEventAsync(

instanceId: orderId,

workflowComponent: "dapr",

eventName: "ManagerApproval",

eventData: ApprovalResult.Approved);

System.out.println("**SendExternalMessage: RestartEvent**");

client.raiseEvent(restartingInstanceId, "RestartEvent", "RestartEventPayload");

func raiseEvent() {

daprClient, err := client.NewClient()

if err != nil {

log.Fatalf("failed to initialize the client")

}

err = daprClient.RaiseEventWorkflow(context.Background(), &client.RaiseEventWorkflowRequest{

InstanceID: "instance_id",

WorkflowComponent: "dapr",

EventName: "approval_received",

EventData: Approval{

Approver: "Jane Doe",

},

})

if err != nil {

log.Fatalf("failed to raise event on workflow")

}

log.Println("raised an event on specified workflow")

}

External events don’t have to be directly triggered by humans. They can also be triggered by other systems. For example, a workflow may need to pause and wait for a payment to be received. In this case, a payment system might publish an event to a pub/sub topic on receipt of a payment, and a listener on that topic can raise an event to the workflow using the raise event workflow API.
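As an illustration of such a listener, the following Python sketch turns a payment message into a raise-event call against the sidecar's workflow HTTP API. It assumes the raise-event endpoint `POST /v1.0/workflows/<component>/<instanceID>/raiseEvent/<eventName>` from the Workflow API reference (check the path against your Dapr version), the default `dapr` workflow component, and a hypothetical message shape with `orderId` and `amount` fields, where `orderId` doubles as the workflow instance ID. The event name `payment_received` is also hypothetical. The request is built separately from being sent so it can be inspected without a running sidecar.

```python
import json
import urllib.request
from typing import Any, Dict

DAPR_HTTP_ENDPOINT = "http://localhost:3500"  # assumption: default sidecar HTTP port


def build_raise_event_request(
    instance_id: str, event_name: str, payload: Any, component: str = "dapr"
) -> urllib.request.Request:
    """Build (but do not send) a raise-event request for a waiting workflow."""
    url = (
        f"{DAPR_HTTP_ENDPOINT}/v1.0/workflows/{component}/"
        f"{instance_id}/raiseEvent/{event_name}"
    )
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def on_payment_received(message: Dict[str, Any]) -> urllib.request.Request:
    """Hypothetical pub/sub handler: map a payment message to a workflow event."""
    return build_raise_event_request(
        instance_id=message["orderId"],
        event_name="payment_received",
        payload={"amount": message["amount"]},
    )
```

To actually deliver the event, pass the returned request to `urllib.request.urlopen`. The workflow side would wait on the same event name, for example with `ctx.wait_for_external_event("payment_received")` in the Python SDK.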

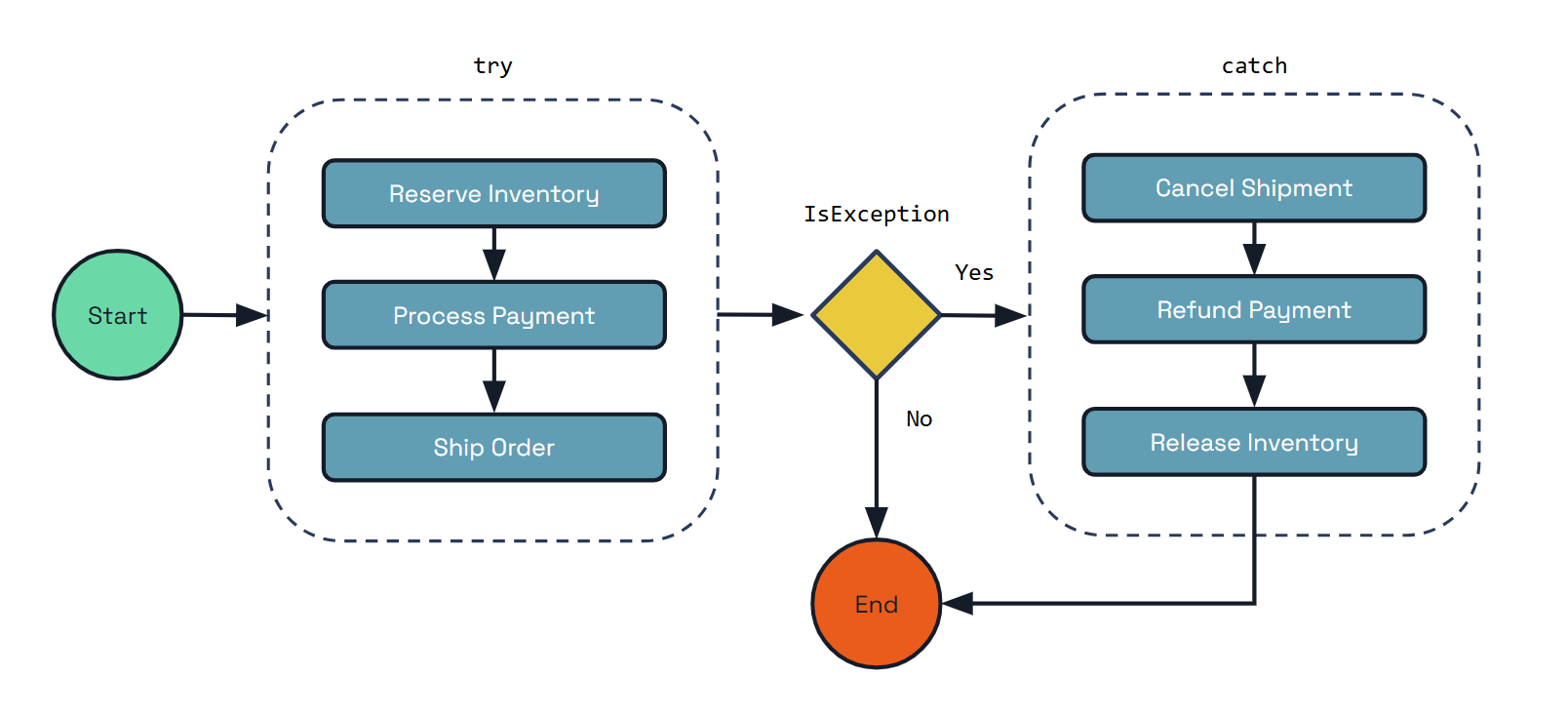

The compensation pattern (also known as the saga pattern) provides a mechanism for rolling back or undoing operations that have already been executed when a workflow fails partway through. This pattern is particularly important for long-running workflows that span multiple microservices where traditional database transactions are not feasible.

In distributed microservice architectures, you often need to coordinate operations across multiple services. When these operations cannot be wrapped in a single transaction, the compensation pattern provides a way to maintain consistency by defining compensating actions for each step in the workflow.

The compensation pattern addresses several critical challenges:

Common use cases for the compensation pattern include:

Dapr Workflow provides support for the compensation pattern, allowing you to register compensation activities for each step and execute them in reverse order when needed.

Here’s an example workflow for an e-commerce process:

The following diagram illustrates this flow.

public class PaymentProcessingWorkflow implements Workflow {

@Override

public WorkflowStub create() {

return ctx -> {

ctx.getLogger().info("Starting Workflow: " + ctx.getName());

var orderId = ctx.getInput(String.class);

List<String> compensations = new ArrayList<>();

try {

// Step 1: Reserve inventory

String reservationId = ctx.callActivity(ReserveInventoryActivity.class.getName(), orderId, String.class).await();

ctx.getLogger().info("Inventory reserved: {}", reservationId);

compensations.add("ReleaseInventory");

// Step 2: Process payment

String paymentId = ctx.callActivity(ProcessPaymentActivity.class.getName(), orderId, String.class).await();

ctx.getLogger().info("Payment processed: {}", paymentId);

compensations.add("RefundPayment");

// Step 3: Ship order

String shipmentId = ctx.callActivity(ShipOrderActivity.class.getName(), orderId, String.class).await();

ctx.getLogger().info("Order shipped: {}", shipmentId);

compensations.add("CancelShipment");

} catch (TaskFailedException e) {

ctx.getLogger().error("Activity failed: {}", e.getMessage());

// Execute compensations in reverse order

Collections.reverse(compensations);

for (String compensation : compensations) {

try {

switch (compensation) {

case "CancelShipment":

String shipmentCancelResult = ctx.callActivity(

CancelShipmentActivity.class.getName(),

orderId,

String.class).await();

ctx.getLogger().info("Shipment cancellation completed: {}", shipmentCancelResult);

break;

case "RefundPayment":

String refundResult = ctx.callActivity(

RefundPaymentActivity.class.getName(),

orderId,

String.class).await();

ctx.getLogger().info("Payment refund completed: {}", refundResult);

break;

case "ReleaseInventory":

String releaseResult = ctx.callActivity(

ReleaseInventoryActivity.class.getName(),

orderId,

String.class).await();

ctx.getLogger().info("Inventory release completed: {}", releaseResult);

break;

}

} catch (TaskFailedException ex) {

ctx.getLogger().error("Compensation activity failed: {}", ex.getMessage());

}

}

ctx.complete("Order processing failed, compensation applied");

// Stop here so the confirmation step does not run after compensation

return;

}

// Step 4: Send confirmation

ctx.callActivity(SendConfirmationActivity.class.getName(), orderId, Void.class).await();

ctx.getLogger().info("Confirmation sent for order: {}", orderId);

ctx.complete("Order processed successfully: " + orderId);

};

}

}

// Example activities

class ReserveInventoryActivity implements WorkflowActivity {

@Override

public Object run(WorkflowActivityContext ctx) {

String orderId = ctx.getInput(String.class);

// Logic to reserve inventory

String reservationId = "reservation_" + orderId;

System.out.println("Reserved inventory for order: " + orderId);

return reservationId;

}

}

class ReleaseInventoryActivity implements WorkflowActivity {

@Override

public Object run(WorkflowActivityContext ctx) {

String reservationId = ctx.getInput(String.class);

// Logic to release inventory reservation

System.out.println("Released inventory reservation: " + reservationId);

return "Released: " + reservationId;

}

}

class ProcessPaymentActivity implements WorkflowActivity {

@Override

public Object run(WorkflowActivityContext ctx) {

String orderId = ctx.getInput(String.class);

// Logic to process payment

String paymentId = "payment_" + orderId;

System.out.println("Processed payment for order: " + orderId);

return paymentId;

}

}

class RefundPaymentActivity implements WorkflowActivity {

@Override

public Object run(WorkflowActivityContext ctx) {

String paymentId = ctx.getInput(String.class);

// Logic to refund payment

System.out.println("Refunded payment: " + paymentId);

return "Refunded: " + paymentId;

}

}

class ShipOrderActivity implements WorkflowActivity {

@Override

public Object run(WorkflowActivityContext ctx) {

String orderId = ctx.getInput(String.class);

// Logic to ship order

String shipmentId = "shipment_" + orderId;

System.out.println("Shipped order: " + orderId);

return shipmentId;

}

}

class CancelShipmentActivity implements WorkflowActivity {

@Override

public Object run(WorkflowActivityContext ctx) {

String shipmentId = ctx.getInput(String.class);

// Logic to cancel shipment

System.out.println("Canceled shipment: " + shipmentId);

return "Canceled: " + shipmentId;

}

}

class SendConfirmationActivity implements WorkflowActivity {

@Override

public Object run(WorkflowActivityContext ctx) {

String orderId = ctx.getInput(String.class);

// Logic to send confirmation

System.out.println("Sent confirmation for order: " + orderId);

return null;

}

}

The key benefits of using Dapr Workflow’s compensation pattern include:

The compensation pattern ensures that your distributed workflows can maintain consistency and recover gracefully from failures, making it an essential tool for building reliable microservice architectures.
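The same bookkeeping can be expressed in a few lines of plain Python, independent of any SDK. In this sketch (the `Saga` class, `process_order` and the step names are hypothetical), each undo action is registered only after its step succeeds, and on failure the registered undos run in reverse order. A failing undo is logged rather than allowed to stop the remaining ones, mirroring the inner `try`/`catch` in the Java example. In a real Dapr workflow, each action and each undo would be a workflow activity.

```python
from typing import Callable, List, Tuple


class Saga:
    """Minimal compensation (saga) helper: run steps, undo completed ones on failure."""

    def __init__(self) -> None:
        self._undo_stack: List[Tuple[str, Callable[[], None]]] = []
        self.log: List[str] = []

    def step(self, name: str, action: Callable[[], object], undo: Callable[[], None]) -> object:
        result = action()  # if this raises, no undo is registered for this step
        self._undo_stack.append((name, undo))
        self.log.append(f"done:{name}")
        return result

    def compensate(self) -> None:
        # Undo completed steps in reverse (last-in, first-out) order.
        while self._undo_stack:
            name, undo = self._undo_stack.pop()
            try:
                undo()
                self.log.append(f"undone:{name}")
            except Exception:
                # Record the failure and keep compensating the remaining steps.
                self.log.append(f"undo-failed:{name}")


def process_order(order_id: str, fail_shipping: bool) -> Tuple[str, List[str]]:
    saga = Saga()
    try:
        saga.step("reserve_inventory", lambda: f"reservation_{order_id}", lambda: None)
        saga.step("process_payment", lambda: f"payment_{order_id}", lambda: None)

        def ship() -> str:
            if fail_shipping:
                raise RuntimeError("shipping service unavailable")
            return f"shipment_{order_id}"

        saga.step("ship_order", ship, lambda: None)
    except Exception:
        saga.compensate()
        return "Order processing failed, compensation applied", saga.log
    return f"Order processed successfully: {order_id}", saga.log
```

With `fail_shipping=True`, the log shows the payment being undone before the inventory reservation, the reverse of the order in which the steps ran; the shipping step never registered an undo because it never succeeded.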

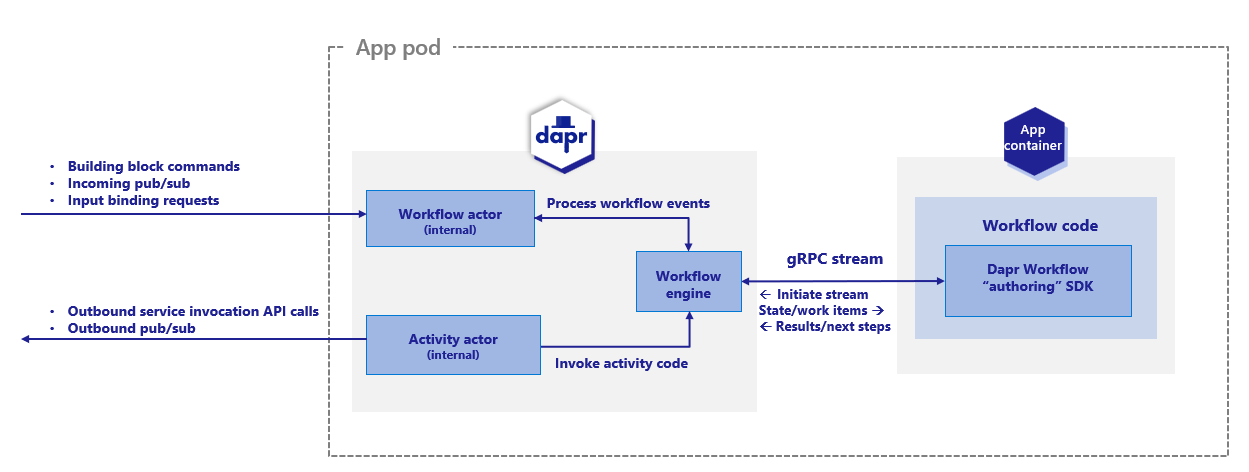

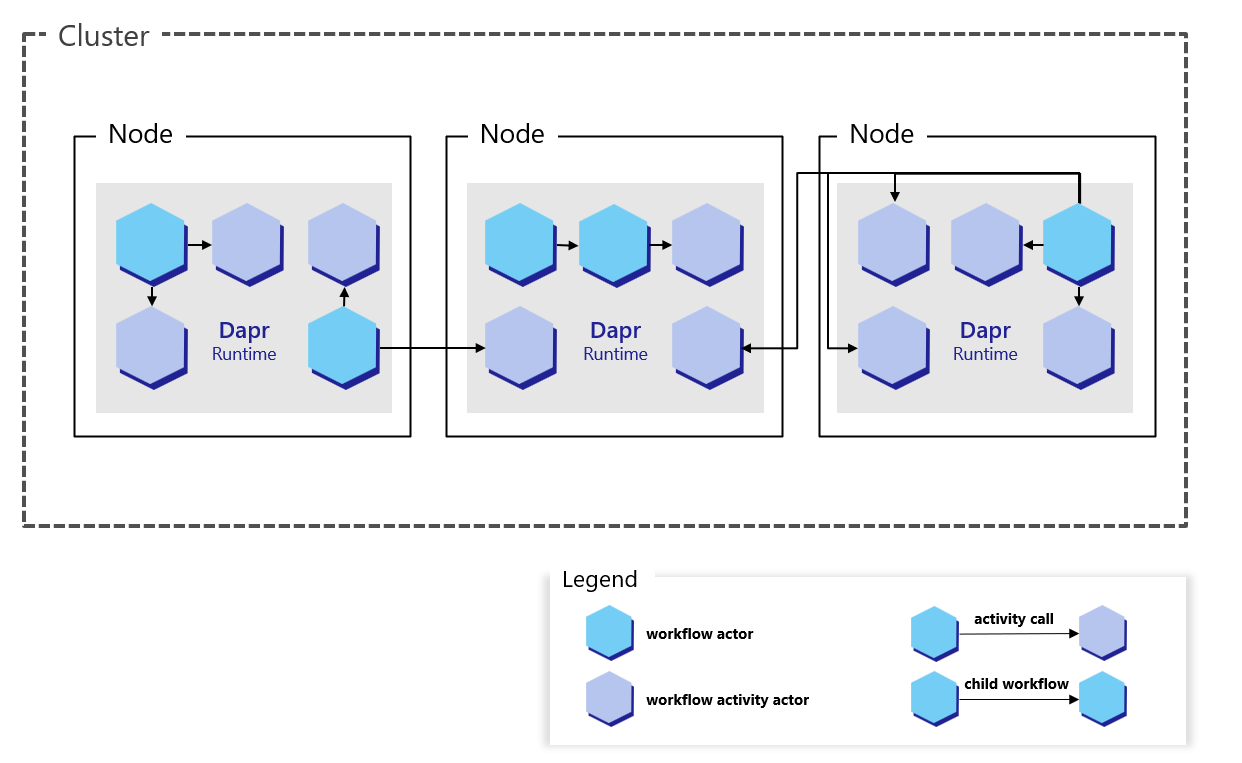

Dapr Workflows allow developers to define workflows using ordinary code in a variety of programming languages. The workflow engine runs inside of the Dapr sidecar and orchestrates workflow code deployed as part of your application. Dapr Workflows are built on top of Dapr Actors, which provide durability and scalability for workflow execution.

This article describes:

For more information on how to author Dapr Workflows in your application, see How to: Author a workflow.

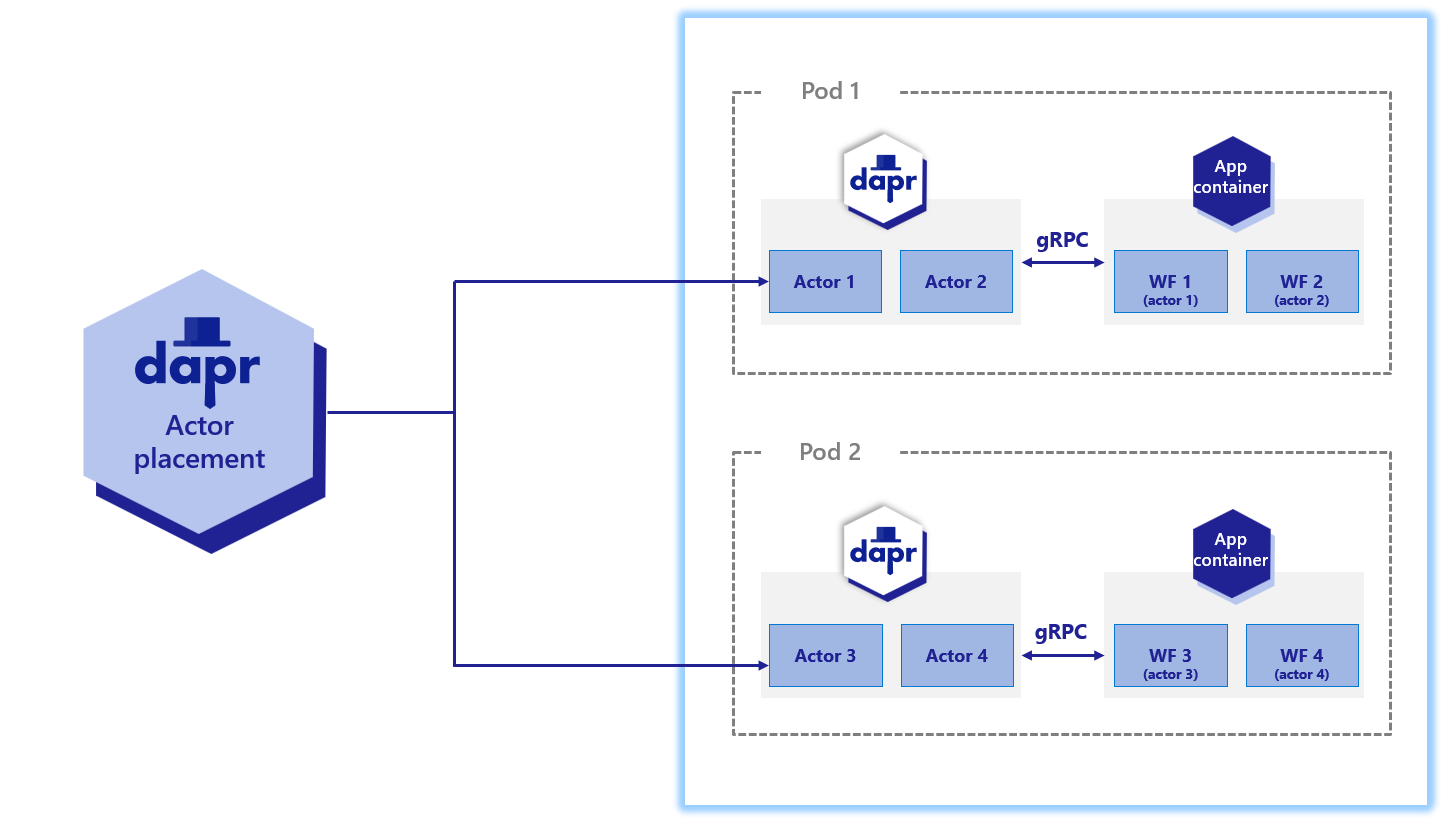

The Dapr Workflow engine is internally powered by Dapr’s actor runtime. The following diagram illustrates the Dapr Workflow architecture in Kubernetes mode:

To use the Dapr Workflow building block, you write workflow code in your application using the Dapr Workflow SDK, which internally connects to the sidecar using a gRPC stream. This registers the workflow and any workflow activities, or tasks that workflows can schedule.

The engine is embedded directly into the sidecar and implemented using the durabletask-go framework library. This framework lets you swap out storage providers, including a storage provider created for Dapr that uses internal actors behind the scenes. Since Dapr Workflows use actors, workflow state is stored in the configured actor state store.

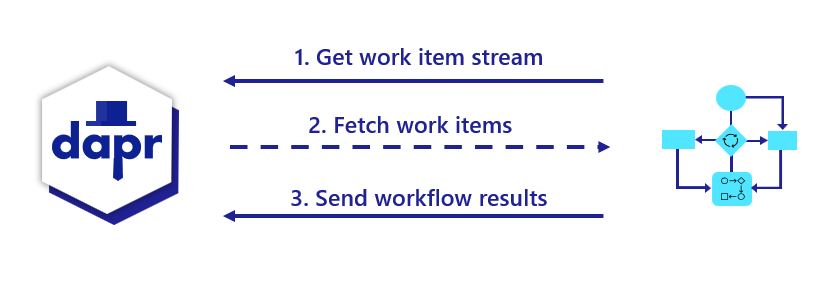

When a workflow application starts up, it uses a workflow authoring SDK to send a gRPC request to the Dapr sidecar and get back a stream of workflow work items, following the server streaming RPC pattern. These work items can be anything from “start a new X workflow” (where X is the type of a workflow) to “schedule activity Y with input Z to run on behalf of workflow X”.

The workflow app executes the appropriate workflow code and then sends a gRPC request back to the sidecar with the execution results.

All interactions happen over a single gRPC channel and are initiated by the application, which means the application doesn’t need to open any inbound ports. The details of these interactions are internally handled by the language-specific Dapr Workflow authoring SDK.
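The pull model can be illustrated without the Dapr SDK. The following is a simplified, in-process Java model and not the SDK's actual API: a BlockingQueue stands in for the gRPC server stream, and WorkItem, registry, processStream, and demo are hypothetical names. It shows two properties described above: the app initiates every read, and activity types are resolved by the app's own registry rather than by the engine.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.UnaryOperator;

// In-process model of the pull-based work item loop. NOT the Dapr SDK API:
// the BlockingQueue stands in for the gRPC server stream from the sidecar.
class WorkItemLoopSketch {
    // Hypothetical work item: "schedule activity <activityName> with input <input>".
    static final class WorkItem {
        final String activityName;
        final String input;

        WorkItem(String activityName, String input) {
            this.activityName = activityName;
            this.input = input;
        }
    }

    // Sentinel marking the end of the simulated stream.
    static final WorkItem END = new WorkItem("", "");

    // Activity types live in the app's own registry; the engine does not track them.
    static final Map<String, UnaryOperator<String>> registry = new HashMap<>();

    // The app pulls each item, runs the matching code, and collects the results
    // it would send back to the sidecar in a separate request.
    static List<String> processStream(BlockingQueue<WorkItem> stream) {
        List<String> results = new ArrayList<>();
        while (true) {
            WorkItem item;
            try {
                item = stream.take(); // blocks until the "sidecar" has work
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
            if (item == END) {
                break;
            }
            UnaryOperator<String> activity = registry.get(item.activityName);
            results.add(activity == null
                    ? "error: unregistered activity " + item.activityName
                    : activity.apply(item.input));
        }
        return results;
    }

    static List<String> demo() {
        registry.put("ShipOrder", orderId -> "shipment_" + orderId);
        registry.put("SendConfirmation", orderId -> "confirmed " + orderId);

        BlockingQueue<WorkItem> stream = new LinkedBlockingQueue<>();
        stream.add(new WorkItem("ShipOrder", "order-1"));
        stream.add(new WorkItem("SendConfirmation", "order-1"));
        stream.add(new WorkItem("Refund", "order-1")); // never registered
        stream.add(END);
        return processStream(stream);
    }

    public static void main(String[] args) {
        demo().forEach(System.out::println);
    }
}
```

In a real application the Dapr Workflow SDK owns this loop; application code registers its workflows and activities and lets the SDK handle the stream.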

If you’re familiar with Dapr actors, you may notice a few differences in how sidecar interactions work for workflows compared to application-defined actors.

| Actors | Workflows |
|---|---|
| Actors created by the application can interact with the sidecar using either HTTP or gRPC. | Workflows only use gRPC. Due to the workflow gRPC protocol’s complexity, an SDK is required when implementing workflows. |
| Actor operations are pushed to application code from the sidecar. This requires the application to listen on a particular app port. | For workflows, operations are pulled from the sidecar by the application using a streaming protocol. The application doesn’t need to listen on any ports to run workflows. |
| Actors explicitly register themselves with the sidecar. | Workflows do not register themselves with the sidecar. The embedded engine doesn’t keep track of workflow types. This responsibility is instead delegated to the workflow application and its SDK. |

The durabletask-go core used by the workflow engine writes distributed traces using OpenTelemetry SDKs.

These traces are captured automatically by the Dapr sidecar and exported to the configured tracing backend, such as Zipkin.

Each workflow instance managed by the engine is represented as one or more spans. There is a single parent span representing the full workflow execution and child spans for the various tasks, including spans for activity task execution and durable timers.

Workflow activity code currently does not have access to the trace context.

When the workflow client connects to the sidecar, two types of actors are registered to support the workflow engine:

- dapr.internal.{namespace}.{appID}.workflow
- dapr.internal.{namespace}.{appID}.activity

The {namespace} value is the Dapr namespace and defaults to default if no namespace is configured.

The {appID} value is the app’s ID.

For example, if you have a workflow app named “wfapp”, then the type of the workflow actor would be dapr.internal.default.wfapp.workflow and the type of the activity actor would be dapr.internal.default.wfapp.activity.
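To make the naming scheme concrete, here is a small Java helper that builds both actor type strings. The class and method names are hypothetical; Dapr computes these names inside the sidecar, and application code never needs to.

```java
// Hypothetical helper reproducing the workflow/activity actor type naming scheme.
class WorkflowActorTypes {
    static String actorType(String namespace, String appId, String kind) {
        // No namespace configured: fall back to "default".
        String ns = (namespace == null || namespace.isEmpty()) ? "default" : namespace;
        return "dapr.internal." + ns + "." + appId + "." + kind;
    }

    static String workflowActorType(String namespace, String appId) {
        return actorType(namespace, appId, "workflow");
    }

    static String activityActorType(String namespace, String appId) {
        return actorType(namespace, appId, "activity");
    }

    public static void main(String[] args) {
        System.out.println(workflowActorType(null, "wfapp"));   // dapr.internal.default.wfapp.workflow
        System.out.println(activityActorType(null, "wfapp"));   // dapr.internal.default.wfapp.activity
        System.out.println(workflowActorType("prod", "wfapp")); // dapr.internal.prod.wfapp.workflow
    }
}
```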

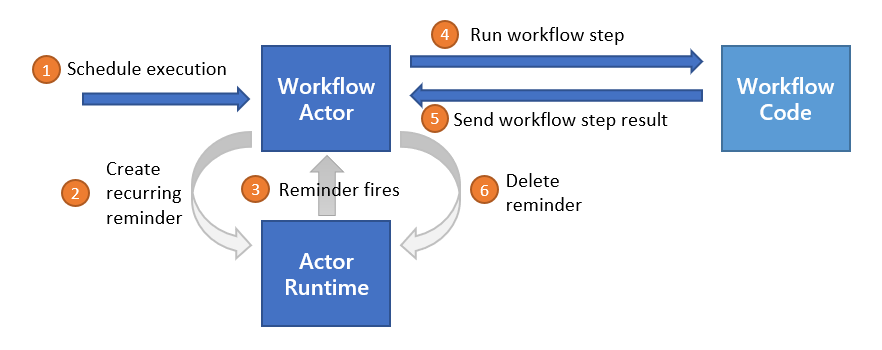

The following diagram demonstrates how workflow actors operate in a Kubernetes scenario:

Just like user-defined actors, workflow actors are distributed across the cluster by the hashing lookup table provided by the actor placement service. They also maintain their own state and make use of reminders. However, unlike actors that live in application code, these workflow actors are embedded into the Dapr sidecar. Application code is completely unaware that these actors exist.

There are 2 different types of actors used with workflows: workflow actors and activity actors. Workflow actors are responsible for managing the state and placement of all workflows running in the app. A new instance of the workflow actor is activated for every workflow instance that gets scheduled. The ID of the workflow actor is the ID of the workflow. This workflow actor stores the state of the workflow as it progresses, and determines the node on which the workflow code executes via the actor lookup table.

As workflows are based on actors, all workflow and activity work is randomly distributed across all replicas of the application implementing workflows. There is no locality or relationship between where a workflow is started and where each work item is executed.
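The placement idea can be illustrated with a consistent-hash ring. This is a simplified Java model and not Dapr's placement service implementation: PlacementRingSketch, the CRC32 hash, the "||" key separator, and the virtual-node count are assumptions chosen for illustration. Because the workflow actor ID is the workflow ID, every message for one instance maps to the same replica, yet that replica has no relationship to where the workflow was started.

```java
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.SortedMap;
import java.util.TreeMap;
import java.util.zip.CRC32;

// Simplified consistent-hash ring; NOT Dapr's placement implementation.
class PlacementRingSketch {
    private final TreeMap<Long, String> ring = new TreeMap<>();

    PlacementRingSketch(List<String> hosts, int virtualNodesPerHost) {
        // Each host gets several points on the ring to spread load more evenly.
        for (String host : hosts) {
            for (int i = 0; i < virtualNodesPerHost; i++) {
                ring.put(hash(host + "#" + i), host);
            }
        }
    }

    private static long hash(String key) {
        CRC32 crc = new CRC32();
        crc.update(key.getBytes(StandardCharsets.UTF_8));
        return crc.getValue();
    }

    // The first ring point at or after the key's hash owns the actor (wrapping around).
    String hostFor(String actorType, String actorId) {
        long h = hash(actorType + "||" + actorId);
        SortedMap<Long, String> tail = ring.tailMap(h);
        return tail.isEmpty() ? ring.firstEntry().getValue() : tail.get(tail.firstKey());
    }

    public static void main(String[] args) {
        PlacementRingSketch ring =
                new PlacementRingSketch(List.of("replica-a", "replica-b", "replica-c"), 100);
        String type = "dapr.internal.default.wfapp.workflow";
        for (String workflowId : List.of("876bf371", "order-42", "order-43")) {
            System.out.println(workflowId + " -> " + ring.hostFor(type, workflowId));
        }
    }
}
```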

Each workflow actor saves its state using the following keys in the configured actor state store:

| Key | Description |
|---|---|
| inbox-NNNNNN | A workflow’s inbox is effectively a FIFO queue of messages that drive a workflow’s execution. Example messages include workflow creation messages, activity task completion messages, etc. Each message is stored in its own key in the state store with the name inbox-NNNNNN where NNNNNN is a 6-digit number indicating the ordering of the messages. These state keys are removed once the corresponding messages are consumed by the workflow. |
| history-NNNNNN | A workflow’s history is an ordered list of events that represent a workflow’s execution history. Each key in the history holds the data for a single history event. Like an append-only log, workflow history events are only added and never removed (except when a workflow performs a “continue as new” operation, which purges all history and restarts a workflow with a new input). |
| customStatus | Contains a user-defined workflow status value. There is exactly one customStatus key for each workflow actor instance. |
| metadata | Contains meta information about the workflow as a JSON blob and includes details such as the length of the inbox, the length of the history, and a 64-bit integer representing the workflow generation (for cases where the instance ID gets reused). The length information is used to determine which keys need to be read or written to when loading or saving workflow state updates. |
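A small Java model of this key layout may help. It is hypothetical: the class and method names are invented, and it assumes inbox and history indexes start at 0, which the table does not state. It shows how the lengths kept in metadata tell the engine exactly which keys to read, and why appending to history only touches new keys.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical model of a workflow actor's state key layout.
// Assumption: inbox and history indexes start at 0.
class WorkflowStateKeysSketch {
    static String inboxKey(int index) {
        return String.format(Locale.ROOT, "inbox-%06d", index); // 6-digit, zero-padded
    }

    static String historyKey(int index) {
        return String.format(Locale.ROOT, "history-%06d", index);
    }

    // Keys to read when loading state, driven by the lengths stored in metadata.
    static List<String> keysToLoad(int inboxLength, int historyLength) {
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < inboxLength; i++) {
            keys.add(inboxKey(i));
        }
        for (int i = 0; i < historyLength; i++) {
            keys.add(historyKey(i));
        }
        keys.add("customStatus");
        keys.add("metadata");
        return keys;
    }

    // History is append-only, so a save writes only the keys past the old length.
    static List<String> newHistoryKeys(int oldHistoryLength, int appendedEvents) {
        List<String> keys = new ArrayList<>();
        for (int i = oldHistoryLength; i < oldHistoryLength + appendedEvents; i++) {
            keys.add(historyKey(i));
        }
        return keys;
    }

    public static void main(String[] args) {
        // [inbox-000000, history-000000, history-000001, customStatus, metadata]
        System.out.println(keysToLoad(1, 2));
        // [history-000002, history-000003]
        System.out.println(newHistoryKeys(2, 2));
    }
}
```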

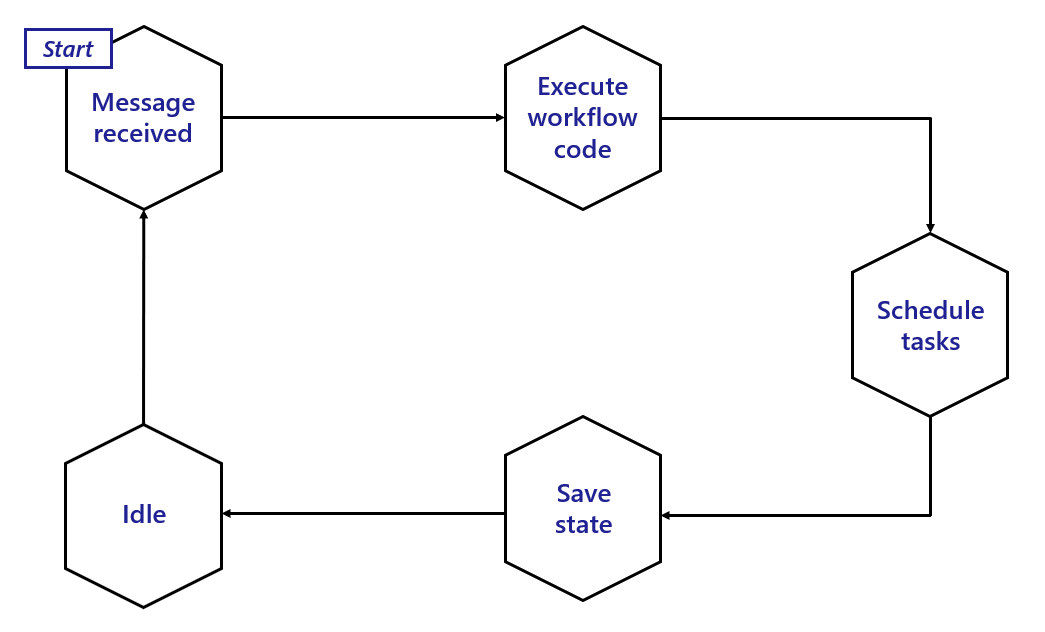

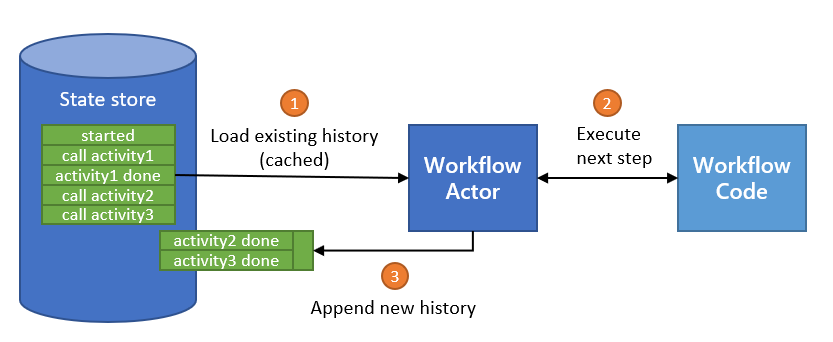

The following diagram illustrates the typical lifecycle of a workflow actor.

To summarize:

Activity actors are responsible for managing the state and placement of all workflow activity invocations.

A new instance of the activity actor is activated for every activity task that gets scheduled by a workflow.

The ID of the activity actor is the ID of the workflow combined with a sequence number (sequence numbers start at 0) and the “generation” (which is incremented each time the workflow restarts using continue as new).

For example, if a workflow has an ID of 876bf371, the third activity it schedules has the ID 876bf371::2::1, where 2 is the sequence number and 1 is the generation.

If the activity is scheduled again after a continue as new, the ID will be 876bf371::2::2.

No state is stored by activity actors, and instead all resulting data is sent back to the parent workflow actor.
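The ID format can be expressed as a tiny Java helper. The names are hypothetical and the sidecar builds these IDs internally; the helper only reproduces the 876bf371 example above, including the shift from a 1-based "third activity" to the 0-based sequence number 2.

```java
// Hypothetical helper reproducing the activity actor ID format.
class ActivityActorIdSketch {
    // The Nth activity a workflow schedules (1-based) gets sequence number N - 1.
    static int sequenceForOrdinal(int ordinal) {
        if (ordinal < 1) {
            throw new IllegalArgumentException("ordinal must be >= 1");
        }
        return ordinal - 1;
    }

    static String activityActorId(String workflowId, int sequenceNumber, int generation) {
        return workflowId + "::" + sequenceNumber + "::" + generation;
    }

    public static void main(String[] args) {
        int seq = sequenceForOrdinal(3); // third activity -> 2
        System.out.println(activityActorId("876bf371", seq, 1)); // 876bf371::2::1
        System.out.println(activityActorId("876bf371", seq, 2)); // after continue as new: 876bf371::2::2
    }
}
```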

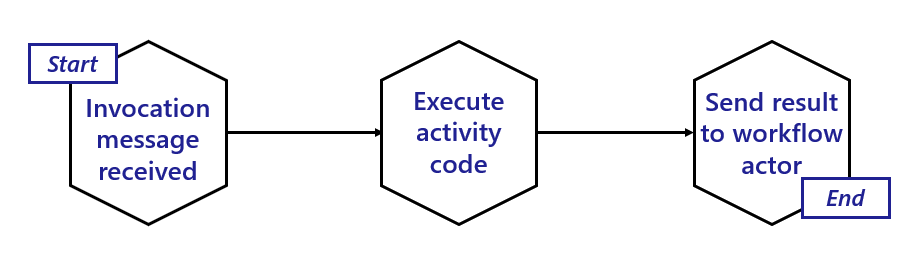

The following diagram illustrates the typical lifecycle of an activity actor.

Activity actors are short-lived:

Dapr Workflow ensures fault tolerance by using actor reminders to recover from transient system failures. Before invoking application workflow code, the workflow or activity actor creates a new reminder. These reminders are “one shot”, meaning that they expire after they trigger successfully. If the application code executes without interruption, the reminder is triggered and expires. However, if the node or the sidecar hosting the associated workflow or activity crashes, the reminder reactivates the corresponding actor and the execution is retried indefinitely.
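The retry behavior can be modeled in a few lines of Java. This is a simplified, single-process model and not how Dapr implements reminders: OneShotReminderSketch, its fields, and the RuntimeException used to simulate a crash are assumptions. The key point is ordering: the reminder exists before the code runs and is cleared only after success, so a crash leaves it in place to trigger another attempt.

```java
import java.util.function.Supplier;

// Simplified model of one-shot reminders driving retries; NOT Dapr's implementation.
class OneShotReminderSketch {
    private boolean reminderRegistered;
    private int attempts;

    <T> T execute(Supplier<T> code) {
        reminderRegistered = true; // created before invoking application code
        while (reminderRegistered) {
            attempts++;
            try {
                T result = code.get();
                reminderRegistered = false; // one shot: expires after a successful trigger
                return result;
            } catch (RuntimeException simulatedCrash) {
                // The reminder survives the crash and reactivates the actor: loop again.
            }
        }
        throw new IllegalStateException("unreachable");
    }

    int attempts() {
        return attempts;
    }

    public static void main(String[] args) {
        OneShotReminderSketch actor = new OneShotReminderSketch();
        int[] crashesLeft = {2};
        String result = actor.execute(() -> {
            if (crashesLeft[0]-- > 0) {
                throw new RuntimeException("sidecar crashed");
            }
            return "activity done";
        });
        System.out.println(result + " after " + actor.attempts() + " attempts"); // activity done after 3 attempts
    }
}
```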

Dapr Workflows use actors internally to drive the execution of workflows. Like any actors, these workflow actors store their state in the configured actor state store. Any state store that supports actors implicitly supports Dapr Workflow.

As discussed in the workflow actors section, workflows save their state incrementally by appending to a history log. The history log for a workflow is distributed across multiple state store keys so that each “checkpoint” only needs to append the newest entries.

The size of each checkpoint is determined by the number of concurrent actions scheduled by the workflow before it goes into an idle state. Sequential workflows will therefore make smaller batch updates to the state store, while fan-out/fan-in workflows will require larger batches. The size of the batch is also impacted by the size of inputs and outputs when workflows invoke activities or child workflows.

Different state store implementations may implicitly put restrictions on the types of workflows you can author. For example, the Azure Cosmos DB state store limits item sizes to 2 MB of UTF-8 encoded JSON (source). The input or output payload of an activity or child workflow is stored as a single record in the state store, so an item limit of 2 MB means that workflow and activity inputs and outputs can’t exceed 2 MB of JSON-serialized data.
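A pre-flight size check can catch oversized payloads before they reach the store. This Java sketch is hypothetical: it assumes the limit is measured as the UTF-8 byte length of the JSON and interprets 2 MB as 2 × 1024 × 1024 bytes. Check your state store's documentation for the exact figure, and leave headroom for any wrapper fields stored alongside the payload.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical pre-flight check for activity or child workflow payloads.
class PayloadSizeCheckSketch {
    // Assumption: "2 MB" interpreted as 2 * 1024 * 1024 bytes.
    static final long MAX_ITEM_BYTES = 2L * 1024 * 1024;

    // Size as stored: UTF-8 bytes, so non-ASCII characters can take several bytes.
    static long utf8Size(String json) {
        return json.getBytes(StandardCharsets.UTF_8).length;
    }

    static boolean fitsInOneItem(String json) {
        return utf8Size(json) <= MAX_ITEM_BYTES;
    }

    public static void main(String[] args) {
        String small = "{\"orderId\":\"order-1\"}";
        String large = "{\"blob\":\"" + "x".repeat(3 * 1024 * 1024) + "\"}";
        System.out.println(utf8Size("\"\u00e9\"")); // 4: two quotes plus a 2-byte character
        System.out.println(fitsInOneItem(small));   // true
        System.out.println(fitsInOneItem(large));   // false
    }
}
```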

Similarly, if a state store imposes restrictions on the size of a batch transaction, that may limit the number of parallel actions that can be scheduled by a workflow.
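The relationship between fan-out width and batch size can be shown with a deliberately rough Java model. Everything in it is an assumption made for illustration: that each action scheduled before the workflow goes idle adds one history key, that each checkpoint also rewrites the metadata key, and the 100-operation transaction limit. Real checkpoints include other writes, so treat the numbers as relative rather than exact.

```java
// Rough model of checkpoint batch size; NOT Dapr's exact write accounting.
// Assumptions: one history key per action scheduled before idle, plus one
// metadata write per checkpoint. Inbox deletions and other bookkeeping are ignored.
class CheckpointBatchSketch {
    static int writesPerCheckpoint(int actionsScheduledBeforeIdle) {
        return actionsScheduledBeforeIdle + 1;
    }

    static boolean fitsTransaction(int actionsScheduledBeforeIdle, int maxOpsPerTransaction) {
        return writesPerCheckpoint(actionsScheduledBeforeIdle) <= maxOpsPerTransaction;
    }

    public static void main(String[] args) {
        int hypotheticalMaxOps = 100; // hypothetical store limit, for illustration only
        System.out.println(writesPerCheckpoint(1));                   // sequential step: 2
        System.out.println(writesPerCheckpoint(500));                 // fan-out of 500: 501
        System.out.println(fitsTransaction(1, hypotheticalMaxOps));   // true
        System.out.println(fitsTransaction(500, hypotheticalMaxOps)); // false
    }
}
```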

Workflow state can be purged from a state store, including all its history. Each Dapr SDK exposes APIs for purging all metadata related to specific workflow instances.