Get a high-level overview of Dapr building blocks in the Concepts section.


Learn more about how to use Dapr Service Invocation:

Using service invocation, your application can reliably and securely communicate with other applications using the standard gRPC or HTTP protocols.

In many microservice-based applications, multiple services need the ability to communicate with one another. This inter-service communication requires that application developers handle problems like:

Dapr addresses these challenges by providing a service invocation API that acts like a reverse proxy with built-in service discovery, while leveraging built-in distributed tracing, metrics, error handling, encryption, and more.

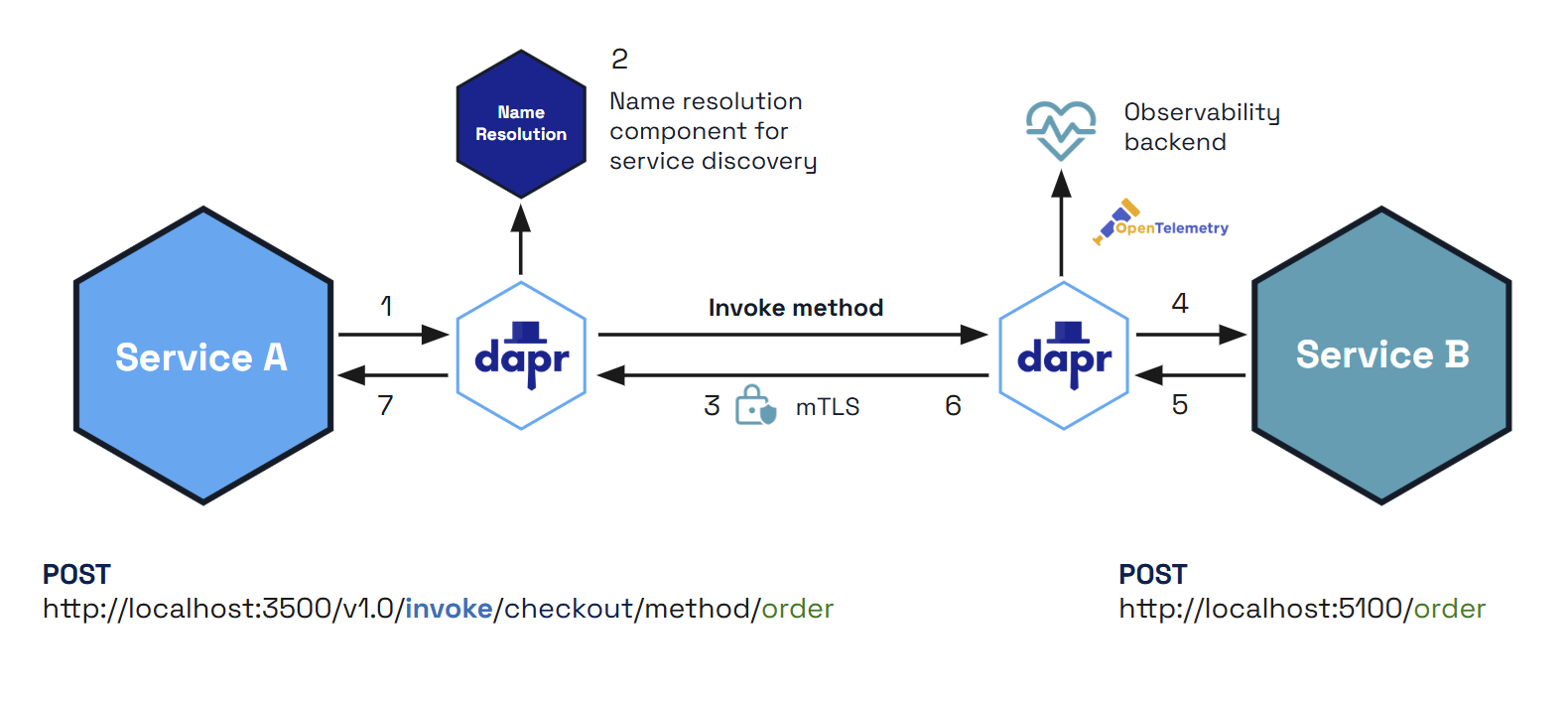

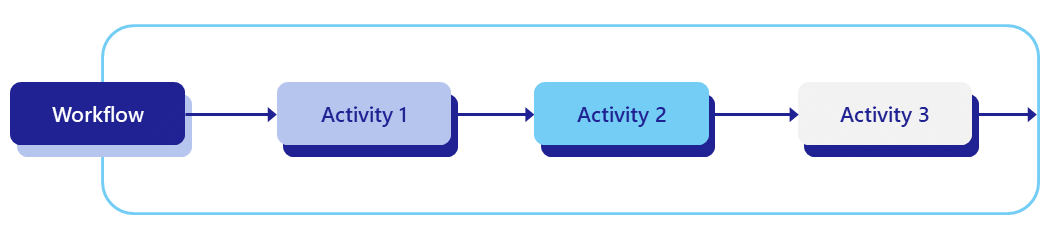

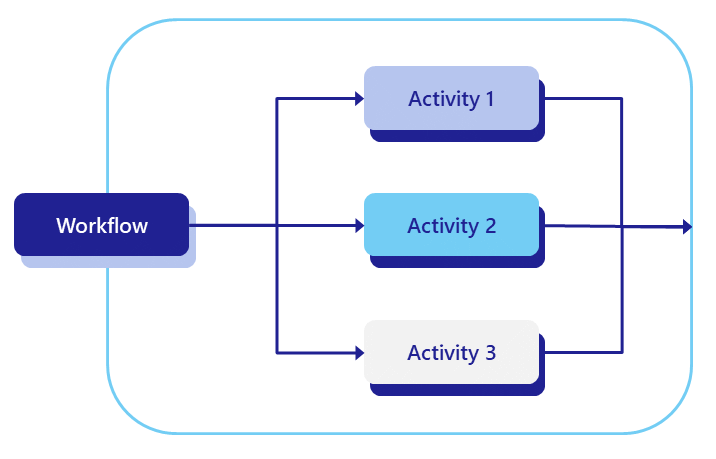

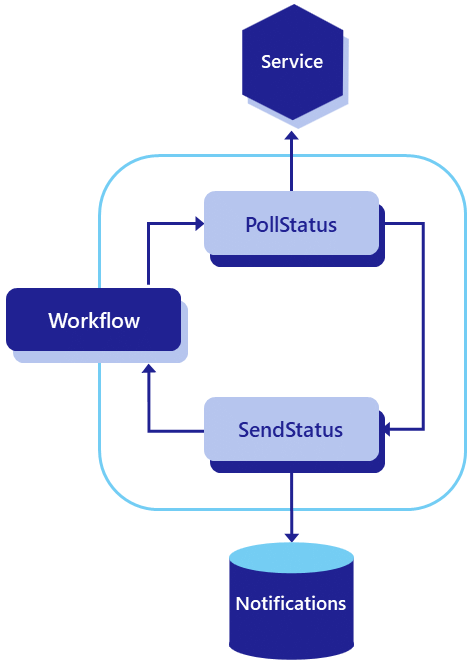

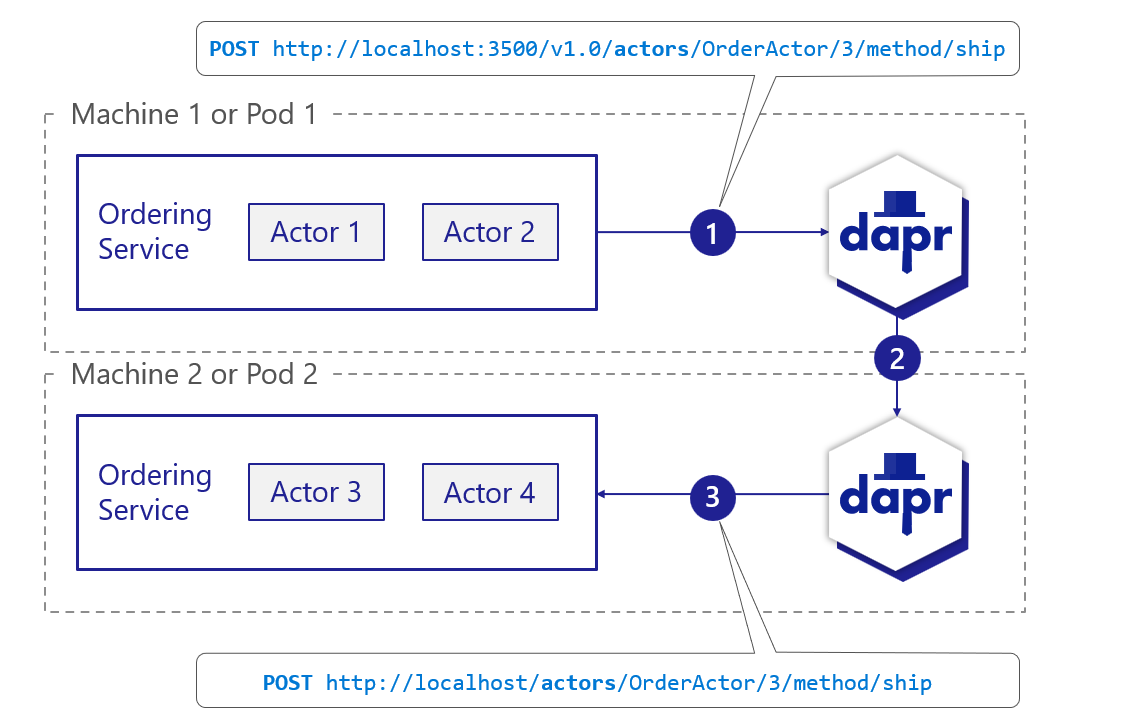

Dapr uses a sidecar architecture. To invoke an application using Dapr, you use the invoke API on the Dapr instance.

The following overview video and demo demonstrate how Dapr service invocation works.

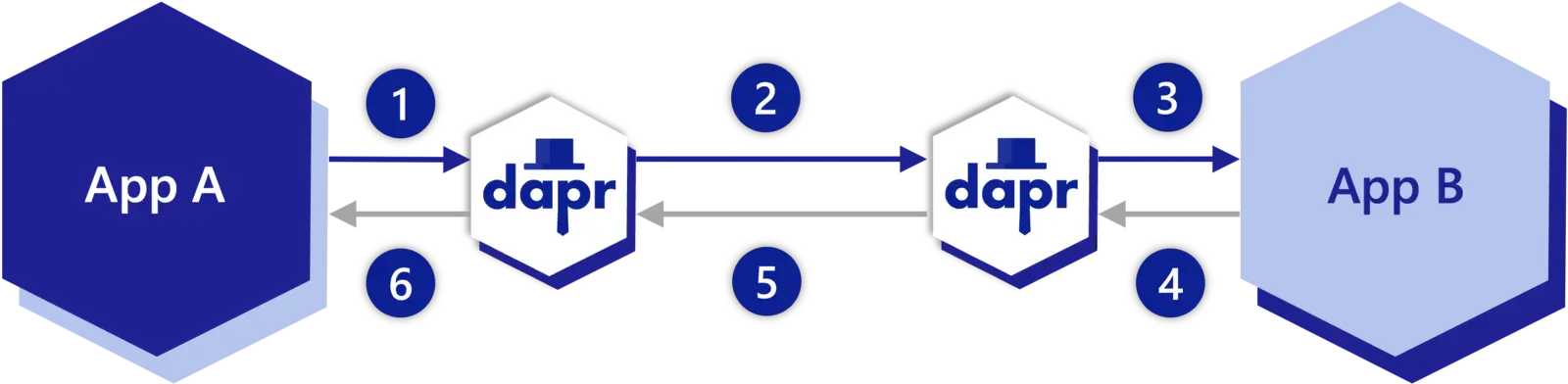

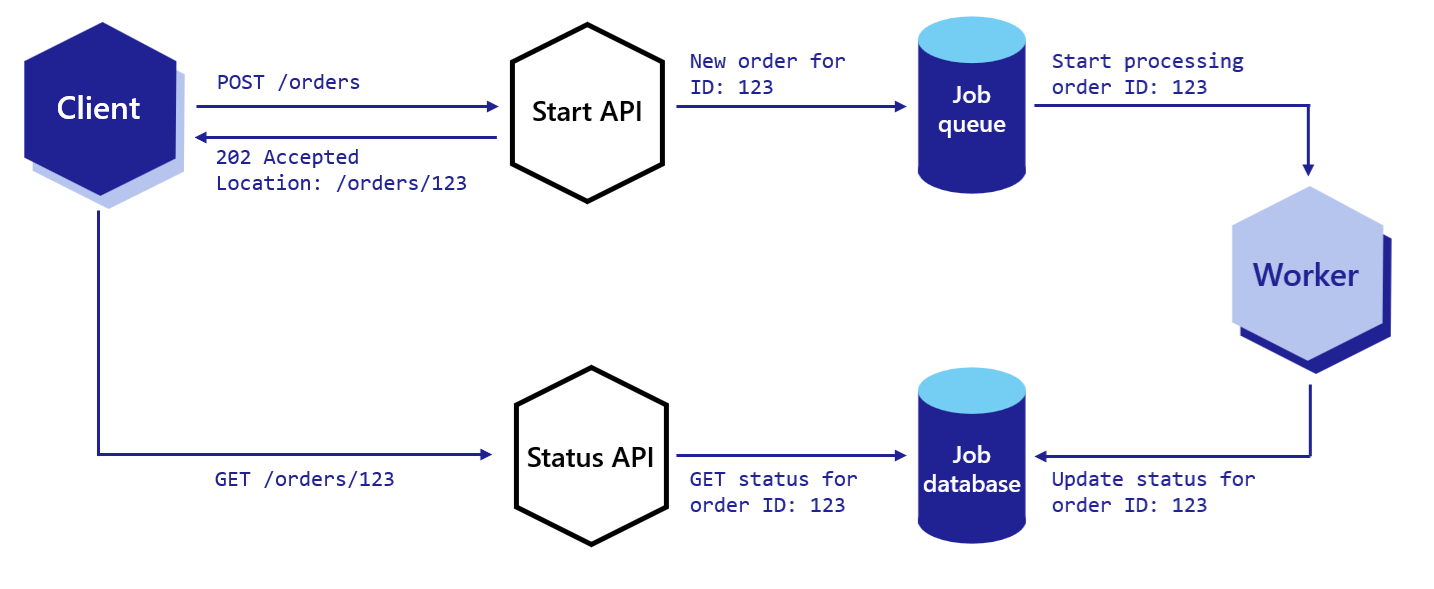

The diagram below is an overview of how Dapr’s service invocation works between two Dapr-ized applications.

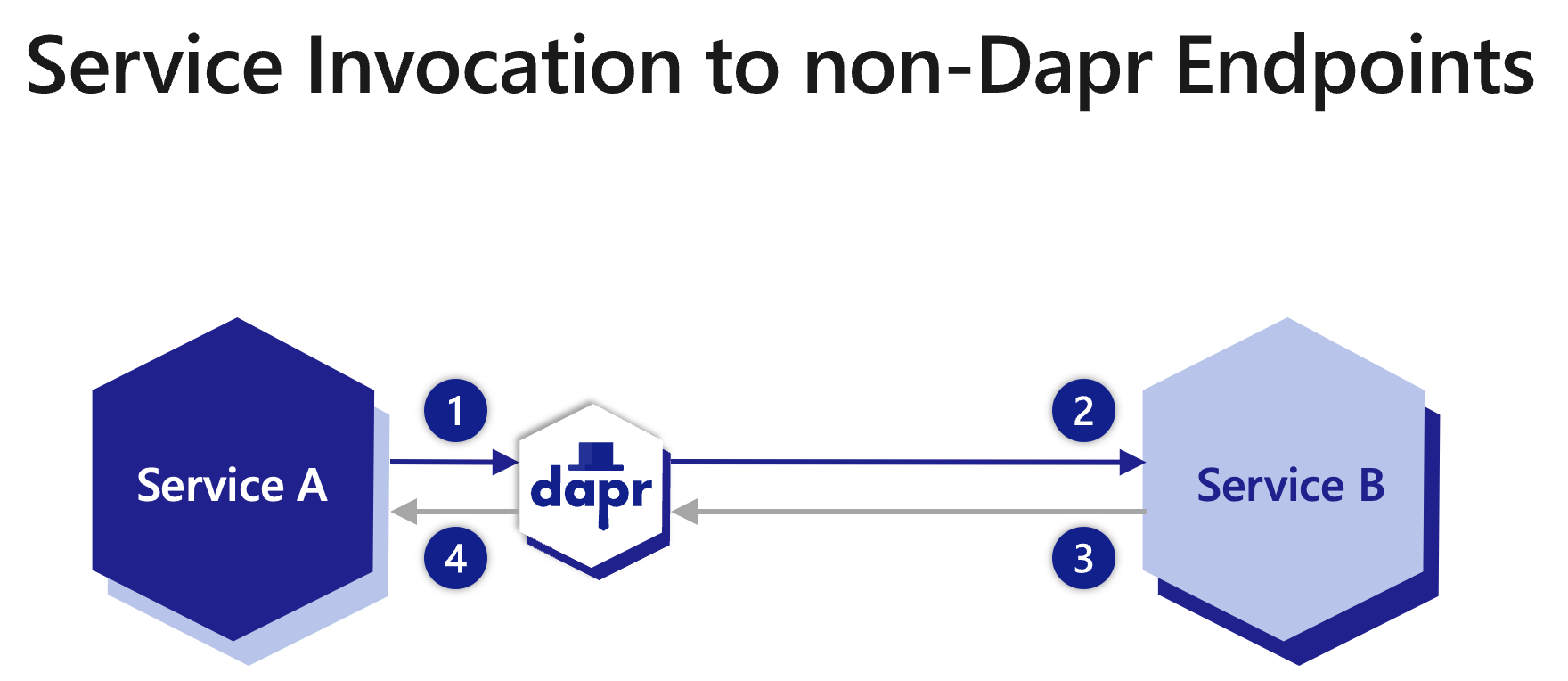

You can also call non-Dapr HTTP endpoints using the service invocation API. For example, you may only use Dapr in part of an overall application, may not have access to the code to migrate an existing application to use Dapr, or simply need to call an external HTTP service. Read “How-To: Invoke Non-Dapr Endpoints using HTTP” for more information.

Service invocation provides several features to make it easy for you to call methods between applications or to call external HTTP endpoints.

For HTTP, add the dapr-app-id header to your existing requests and you’re ready to go. For more information, see Invoke Services using HTTP.

With the Dapr Sentry service, all calls between Dapr applications can be secured with mutual TLS (mTLS) authentication on hosted platforms, including automatic certificate rollover.

For more information read the service-to-service security article.

In the event of call failures and transient errors, service invocation provides a resiliency feature that performs automatic retries with backoff time periods. To find out more, see the Resiliency article.

By default, all calls between applications are traced and metrics are gathered to provide insights and diagnostics for applications. This is especially important in production scenarios, providing call graphs and metrics on the calls between your services. For more information read about observability.

With access policies, applications can control:

For example, you can restrict sensitive applications with personnel information from being accessed by unauthorized applications. Combined with service-to-service secure communication, you can provide for soft multi-tenancy deployments.

For more information read the access control allow lists for service invocation article.

You can scope applications to namespaces for deployment and security and call between services deployed to different namespaces. For more information, read the Service invocation across namespaces article.

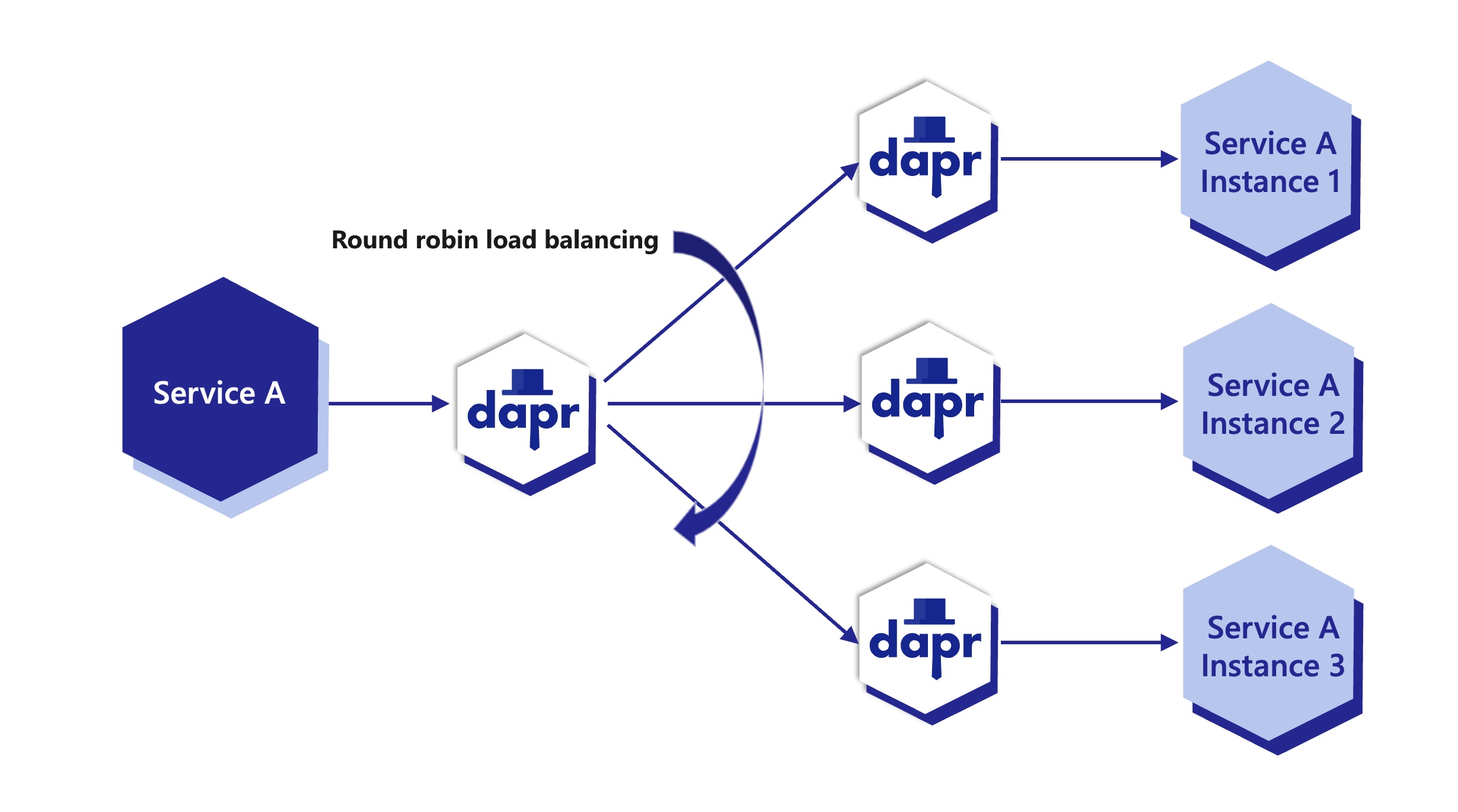

Dapr provides round robin load balancing of service invocation requests with the mDNS protocol, for example with a single machine or with multiple, networked, physical machines.

The diagram below shows an example of how this works. If you have 1 instance of an application with app ID FrontEnd and 3 instances of an application with app ID Cart, and you call from the FrontEnd app to the Cart app, Dapr round-robins between the 3 instances. These instances can be on the same machine or on different machines.

Note: App ID is unique per application, not per application instance. Regardless of how many instances of that application exist (due to scaling), all of them share the same app ID.
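To make the rotation concrete, here is a toy Python model of round robin selection. It is an illustration only, not Dapr's mDNS name resolution code, and the instance names cart-1 to cart-3 are hypothetical stand-ins for the three Cart instances that share one app ID.

```python
from itertools import cycle, islice
from typing import List


def round_robin(instances: List[str], n_calls: int) -> List[str]:
    """Return the instance picked for each of n_calls, rotating through instances in order."""
    return list(islice(cycle(instances), n_calls))


# Hypothetical instance names; all three share the app ID "Cart"
cart_instances = ["cart-1", "cart-2", "cart-3"]

for call, target in enumerate(round_robin(cart_instances, 6), start=1):
    print(f"FrontEnd call {call} -> {target}")
```

Because every instance shares the app ID, FrontEnd only ever names Cart; picking the instance is left to the sidecar.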

Dapr can run on a variety of hosting platforms. To enable swappable service discovery with service invocation, Dapr uses name resolution components. For example, the Kubernetes name resolution component uses the Kubernetes DNS service to resolve the location of other applications running in the cluster.

Self-hosted machines can use the mDNS name resolution component. As an alternative, you can use the SQLite name resolution component to run Dapr on single-node environments and for local development scenarios. Dapr sidecars that are part of the cluster store their information in a SQLite database on the local machine.

The Consul name resolution component is particularly suited to multi-machine deployments and can be used in any hosting environment, including Kubernetes, multiple VMs, or self-hosted.

You can handle data as a stream in HTTP service invocation. This can offer improvements in performance and memory utilization when using Dapr to invoke another service using HTTP with large request or response bodies.

The diagram below demonstrates the six steps of data flow.

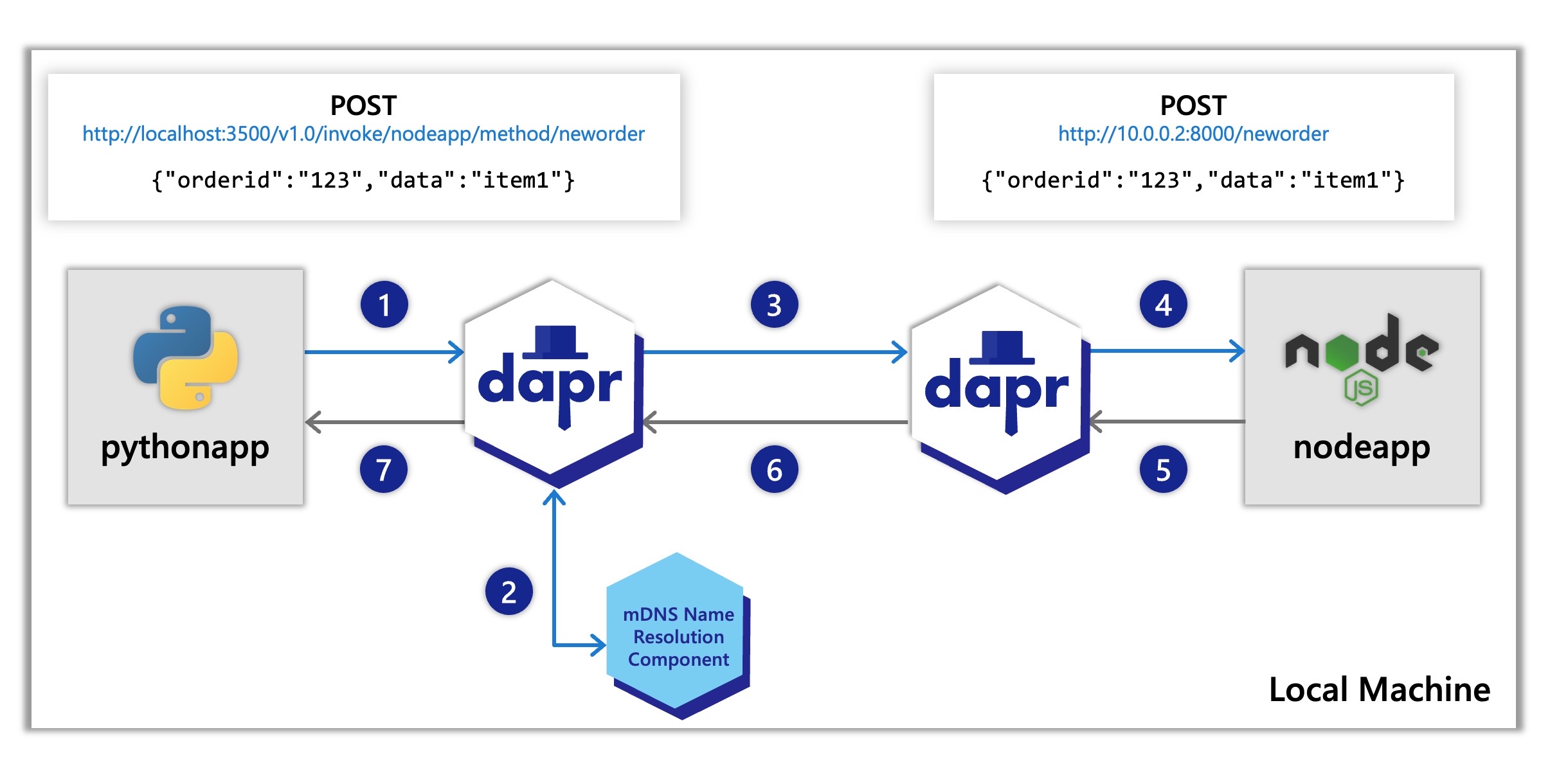

Following the above call sequence, suppose you have the applications as described in the Hello World tutorial, where a Python app invokes a Node.js app. In such a scenario, the Python app would be “Service A” and the Node.js app would be “Service B”.

The diagram below shows sequence 1-7 again on a local machine showing the API calls:

The Node.js app has the app ID nodeapp. The Python app invokes the Node.js app’s neworder method by POSTing to http://localhost:3500/v1.0/invoke/nodeapp/method/neworder, which first goes to the Python app’s local Dapr sidecar.

The Dapr docs contain multiple quickstarts that leverage the service invocation building block in different example architectures. For a straightforward understanding of the service invocation API and its features, we recommend starting with our quickstarts:

| Quickstart/tutorial | Description |
|---|---|
| Service invocation quickstart | This quickstart gets you interacting directly with the service invocation building block. |
| Hello world tutorial | This tutorial shows how to use both the service invocation and state management building blocks, all running locally on your machine. |
| Hello world Kubernetes tutorial | This tutorial walks through using Dapr in Kubernetes and covers both the service invocation and state management building blocks. |

Want to skip the quickstarts? Not a problem. You can try out the service invocation building block directly in your application to securely communicate with other services. After Dapr is installed, you can begin using the service invocation API in the following ways.

Invoke services using HTTP by adding the dapr-app-id header; read more in Invoke Services using HTTP. You can also call the service invocation API directly by pointing your URL at localhost:<dapr-http-port>; read more under HTTP proxying in the same Invoke Services using HTTP docs.

For quick testing, try using the Dapr CLI for service invocation:

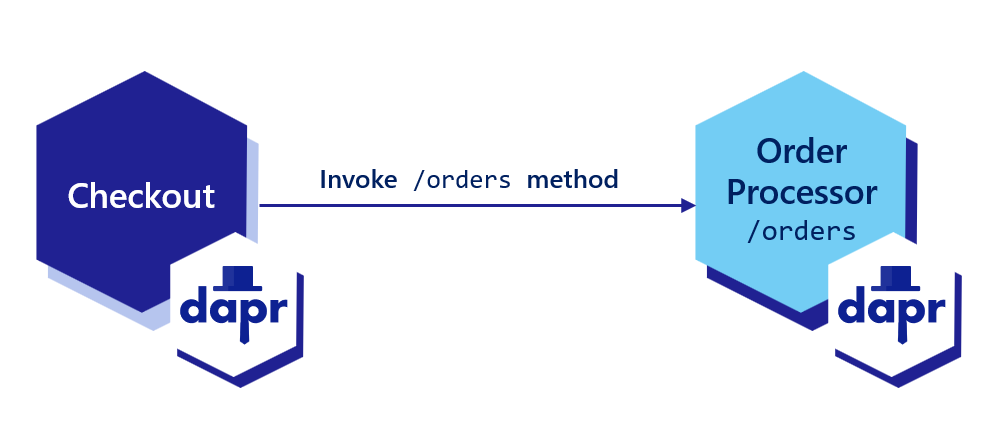

Use the dapr invoke command with the --app-id flag for the target app and the --method flag for the method of interest, for example dapr invoke --app-id <app-id> --method <method-name>. Read more in Dapr CLI.

This article demonstrates how to deploy services, each with a unique application ID, so that other services can discover them and call their endpoints using service invocation over HTTP.

Dapr allows you to assign a global, unique ID for your app. This ID encapsulates the state for your application, regardless of the number of instances it may have.

Python:

```bash
dapr run --app-id checkout --app-protocol http --dapr-http-port 3500 -- python3 checkout/app.py
dapr run --app-id order-processor --app-port 8001 --app-protocol http --dapr-http-port 3501 -- python3 order-processor/app.py
```

If your app uses TLS, you can tell Dapr to invoke your app over a TLS connection by setting --app-protocol https:

```bash
dapr run --app-id checkout --app-protocol https --dapr-http-port 3500 -- python3 checkout/app.py
dapr run --app-id order-processor --app-port 8001 --app-protocol https --dapr-http-port 3501 -- python3 order-processor/app.py
```

JavaScript:

```bash
dapr run --app-id checkout --app-protocol http --dapr-http-port 3500 -- npm start
dapr run --app-id order-processor --app-port 5001 --app-protocol http --dapr-http-port 3501 -- npm start
```

If your app uses TLS, you can tell Dapr to invoke your app over a TLS connection by setting --app-protocol https:

```bash
dapr run --app-id checkout --dapr-http-port 3500 --app-protocol https -- npm start
dapr run --app-id order-processor --app-port 5001 --dapr-http-port 3501 --app-protocol https -- npm start
```

.NET:

```bash
dapr run --app-id checkout --app-protocol http --dapr-http-port 3500 -- dotnet run
dapr run --app-id order-processor --app-port 7001 --app-protocol http --dapr-http-port 3501 -- dotnet run
```

If your app uses TLS, you can tell Dapr to invoke your app over a TLS connection by setting --app-protocol https:

```bash
dapr run --app-id checkout --dapr-http-port 3500 --app-protocol https -- dotnet run
dapr run --app-id order-processor --app-port 7001 --dapr-http-port 3501 --app-protocol https -- dotnet run
```

Java:

```bash
dapr run --app-id checkout --app-protocol http --dapr-http-port 3500 -- java -jar target/CheckoutService-0.0.1-SNAPSHOT.jar
dapr run --app-id order-processor --app-port 9001 --app-protocol http --dapr-http-port 3501 -- java -jar target/OrderProcessingService-0.0.1-SNAPSHOT.jar
```

If your app uses TLS, you can tell Dapr to invoke your app over a TLS connection by setting --app-protocol https:

```bash
dapr run --app-id checkout --dapr-http-port 3500 --app-protocol https -- java -jar target/CheckoutService-0.0.1-SNAPSHOT.jar
dapr run --app-id order-processor --app-port 9001 --dapr-http-port 3501 --app-protocol https -- java -jar target/OrderProcessingService-0.0.1-SNAPSHOT.jar
```

Go:

```bash
dapr run --app-id checkout --dapr-http-port 3500 -- go run .
dapr run --app-id order-processor --app-port 6006 --app-protocol http --dapr-http-port 3501 -- go run .
```

If your app uses TLS, you can tell Dapr to invoke your app over a TLS connection by setting --app-protocol https:

```bash
dapr run --app-id checkout --dapr-http-port 3500 --app-protocol https -- go run .
dapr run --app-id order-processor --app-port 6006 --dapr-http-port 3501 --app-protocol https -- go run .
```

In Kubernetes, set the dapr.io/app-id annotation on your pod:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: <language>-app
  namespace: default
  labels:
    app: <language>-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: <language>-app
  template:
    metadata:
      labels:
        app: <language>-app
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "order-processor"
        dapr.io/app-port: "6001"
...
```

If your app uses a TLS connection, you can tell Dapr to invoke your app over TLS with the dapr.io/app-protocol: "https" annotation (see the full list of Dapr annotations). Note that Dapr does not validate TLS certificates presented by the app.

To invoke an application using Dapr, you can use the invoke API on any Dapr instance. The sidecar programming model encourages each application to interact with its own instance of Dapr. The Dapr sidecars discover and communicate with one another.
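As a minimal sketch, the helper below assembles an invoke URL from the pieces used throughout this section: the sidecar's HTTP port, the target app ID, and the method path, as in http://localhost:3500/v1.0/invoke/nodeapp/method/neworder. The function name invoke_url is hypothetical, and the default port 3500 simply matches the examples in this article.

```python
from urllib.parse import quote


def invoke_url(app_id: str, method: str, dapr_http_port: int = 3500) -> str:
    """Build http://localhost:<port>/v1.0/invoke/<app-id>/method/<method> for the local sidecar."""
    # Keep "/" inside the method path (e.g. "checkout/100") but percent-escape
    # other characters that are not safe in a URL path.
    safe_method = quote(method.lstrip("/"), safe="/")
    return f"http://localhost:{dapr_http_port}/v1.0/invoke/{app_id}/method/{safe_method}"


if __name__ == "__main__":
    print(invoke_url("nodeapp", "neworder"))
    print(invoke_url("checkout", "checkout/100", dapr_http_port=3602))
```

The caller only ever talks to its own sidecar on localhost; the app ID in the path tells that sidecar where to forward the request.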

Below are code examples that call the service invocation API, either through a Dapr SDK or with a plain HTTP client and the dapr-app-id header.

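```python
# dependencies
import json
import logging
import os
import random
from time import sleep

import requests

# code
logging.basicConfig(level=logging.INFO)

# Requests go to the local Dapr sidecar (port 3500 unless DAPR_HTTP_PORT says otherwise)
base_url = 'http://localhost:' + os.getenv('DAPR_HTTP_PORT', '3500')
# The dapr-app-id header tells the sidecar which app to invoke
headers = {'dapr-app-id': 'order-processor', 'content-type': 'application/json'}

while True:
    sleep(random.randrange(50, 5000) / 1000)
    orderId = random.randint(1, 1000)
    order = {'orderId': orderId}

    # Invoke a service: POST /orders on order-processor through the sidecar
    result = requests.post(
        url='%s/orders' % (base_url),
        data=json.dumps(order),
        headers=headers
    )

    logging.info('Order requested: ' + str(orderId))
    logging.info('Result: ' + str(result))
```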

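```javascript
// dependencies
import axios from "axios";

// code
const daprHost = "127.0.0.1";
const daprHttpPort = process.env.DAPR_HTTP_PORT || "3500";

// The dapr-app-id header tells the local Dapr sidecar which app to invoke
const axiosConfig = {
  headers: { "dapr-app-id": "order-processor" },
};

async function main() {
  for (let i = 0; i < 10; i++) {
    await sleep(5000);
    const orderId = Math.floor(Math.random() * (1000 - 1) + 1);
    await start(orderId);
  }
}

async function start(orderId) {
  const order = { orderId: orderId };
  // Invoke a service: POST /orders on order-processor through the sidecar
  const result = await axios.post(`http://${daprHost}:${daprHttpPort}/orders`, order, axiosConfig);
  console.log("Order requested: " + orderId);
  console.log("Result: " + JSON.stringify(result.data));
}

function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

main().catch((e) => {
  console.error(e);
  process.exit(1);
});
```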

```csharp
// dependencies
using System;
using System.Net.Http;
using System.Net.Http.Json;
using System.Threading.Tasks;
using Dapr.Client;

// code
namespace EventService
{
    public record Order(string OrderId);

    class Program
    {
        static async Task Main(string[] args)
        {
            var random = new Random();
            // The SDK's invoke HttpClient sends requests through the local Dapr sidecar;
            // the host name in the URL ("order-processor") is the target app ID
            var httpClient = DaprClient.CreateInvokeHttpClient();

            while (true)
            {
                await Task.Delay(5000);
                var orderId = random.Next(1, 1000);

                // Using Dapr SDK to invoke a method
                var order = new Order(orderId.ToString());
                var response = await httpClient.PostAsJsonAsync("http://order-processor/orders", order);
                var result = await response.Content.ReadAsStringAsync();

                Console.WriteLine("Order requested: " + orderId);
                Console.WriteLine("Result: " + result);
            }
        }
    }
}
```

```java
// dependencies
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.HashMap;
import java.util.Map;
import java.util.Random;
import java.util.concurrent.TimeUnit;
import java.util.logging.Logger;

import org.json.JSONObject;
import org.springframework.boot.autoconfigure.SpringBootApplication;

// code
@SpringBootApplication
public class CheckoutServiceApplication {

    private static final Logger log = Logger.getLogger(CheckoutServiceApplication.class.getName());

    // Requests go to the local Dapr sidecar, which forwards them to the app named in dapr-app-id
    private static final String DAPR_URL = "http://localhost:"
            + System.getenv().getOrDefault("DAPR_HTTP_PORT", "3500") + "/orders";

    private static final HttpClient httpClient = HttpClient.newBuilder()
            .version(HttpClient.Version.HTTP_2)
            .connectTimeout(Duration.ofSeconds(10))
            .build();

    public static void main(String[] args) throws InterruptedException, IOException {
        Random random = new Random();
        while (true) {
            TimeUnit.MILLISECONDS.sleep(5000);
            int orderId = random.nextInt(1000 - 1) + 1;

            // Create a Map to represent the request body
            Map<String, Object> requestBody = new HashMap<>();
            requestBody.put("orderId", orderId);
            // Add other fields to the requestBody Map as needed

            HttpRequest request = HttpRequest.newBuilder()
                    .POST(HttpRequest.BodyPublishers.ofString(new JSONObject(requestBody).toString()))
                    .uri(URI.create(DAPR_URL))
                    .header("Content-Type", "application/json")
                    .header("dapr-app-id", "order-processor")
                    .build();

            HttpResponse<String> response = httpClient.send(request, HttpResponse.BodyHandlers.ofString());
            log.info("Order requested: " + orderId);
            log.info("Result: " + response.body());
        }
    }
}
```

```go
package main

import (
	"fmt"
	"io"
	"log"
	"math/rand"
	"net/http"
	"os"
	"time"
)

func main() {
	daprHttpPort := os.Getenv("DAPR_HTTP_PORT")
	if daprHttpPort == "" {
		daprHttpPort = "3500"
	}

	client := &http.Client{
		Timeout: 15 * time.Second,
	}

	for i := 0; i < 10; i++ {
		time.Sleep(5 * time.Second)
		orderId := rand.Intn(1000-1) + 1

		url := fmt.Sprintf("http://localhost:%s/checkout/%v", daprHttpPort, orderId)
		req, err := http.NewRequest(http.MethodGet, url, nil)
		if err != nil {
			panic(err)
		}

		// Adding target app id as part of the header
		req.Header.Add("dapr-app-id", "order-processor")

		// Invoking a service
		resp, err := client.Do(req)
		if err != nil {
			log.Fatal(err.Error())
		}

		b, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			panic(err)
		}

		fmt.Println(string(b))
	}
}
```

To invoke a GET endpoint:

```bash
curl http://localhost:3602/v1.0/invoke/checkout/method/checkout/100
```

To avoid changing URL paths as much as possible, Dapr lets you call the service invocation API by changing only the address and naming the target service: change the address in the URL to localhost:<dapr-http-port>, then add a dapr-app-id header to specify the ID of the target service, or alternatively pass the ID via HTTP Basic Auth: http://dapr-app-id:<service-id>@localhost:3602/path.

For example, the following command:

```bash
curl http://localhost:3602/v1.0/invoke/checkout/method/checkout/100 -X POST
```

is equivalent to:

```bash
curl -H 'dapr-app-id: checkout' 'http://localhost:3602/checkout/100' -X POST
```

or:

```bash
curl 'http://dapr-app-id:checkout@localhost:3602/checkout/100' -X POST
```

Using CLI:

```bash
dapr invoke --app-id checkout --method checkout/100
```
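The three equivalent forms can also be built in code. This minimal sketch uses only Python's standard-library urllib.request.Request to construct, but not send, each request; the function names are hypothetical. For the Basic Auth form it sets the Authorization header explicitly, which is what curl sends for the dapr-app-id:<service-id>@ user info in the URL.

```python
import base64
from urllib.request import Request

SIDECAR = "http://localhost:3602"  # Dapr HTTP port used in the curl examples above


def via_invoke_api(app_id: str, path: str) -> Request:
    # Explicit service invocation API path
    return Request(f"{SIDECAR}/v1.0/invoke/{app_id}/method/{path}", method="POST")


def via_header(app_id: str, path: str) -> Request:
    # Unchanged app path; the dapr-app-id header names the target app
    return Request(f"{SIDECAR}/{path}", headers={"dapr-app-id": app_id}, method="POST")


def via_basic_auth(app_id: str, path: str) -> Request:
    # User name "dapr-app-id", password = target app ID, Base64-encoded as HTTP Basic Auth
    token = base64.b64encode(f"dapr-app-id:{app_id}".encode()).decode()
    return Request(f"{SIDECAR}/{path}", headers={"Authorization": f"Basic {token}"}, method="POST")


for build in (via_invoke_api, via_header, via_basic_auth):
    req = build("checkout", "checkout/100")
    print(req.get_method(), req.full_url, dict(req.header_items()))
```

urllib normalizes header-name capitalization (for example, Dapr-app-id); HTTP header names are case-insensitive, so the sidecar sees the same header. Pass any of these requests to urllib.request.urlopen to actually send it.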

You can also append a query string or a fragment to the end of the URL and Dapr will pass it through unchanged. This means that if you need to pass some additional arguments in your service invocation that aren’t part of a payload or the path, you can do so by appending a ? to the end of the URL, followed by the key/value pairs separated by = signs and delimited by &. For example:

```bash
curl 'http://dapr-app-id:checkout@localhost:3602/checkout/100?basket=1234&key=abc' -X POST
```
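A short Python sketch of the query-string rule: the caller encodes key/value pairs with urllib.parse, Dapr forwards them unchanged, and the target app decodes them again. The base URL is the app path from the examples above (name the target app with the dapr-app-id header or Basic Auth as before), and with_query is a hypothetical helper.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def with_query(url: str, params: dict) -> str:
    """Append params as a ?key=value&... query string."""
    return f"{url}?{urlencode(params)}"


url = with_query("http://localhost:3602/checkout/100", {"basket": "1234", "key": "abc"})
print(url)  # http://localhost:3602/checkout/100?basket=1234&key=abc

# The target app receives the same query string and can parse it back
print(parse_qs(urlsplit(url).query))  # {'basket': ['1234'], 'key': ['abc']}
```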

When running on namespace-supported platforms, you include the namespace of the target app in the app ID. For example, following the <app>.<namespace> format, use checkout.production.

Using this example, invoking the service with a namespace would look like:

```bash
curl http://localhost:3602/v1.0/invoke/checkout.production/method/checkout/100 -X POST
```

See the Cross namespace API spec for more information on namespaces.

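Our example above showed you how to directly invoke a different service running locally or in Kubernetes. Dapr outputs metrics, tracing, and logging information, allowing you to visualize a call graph between services, log errors, and optionally log the payload body.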

For more information on tracing and logs, see the observability article.

This article describes how to use Dapr to connect services using gRPC.

By using Dapr’s gRPC proxying capability, you can use your existing proto-based gRPC services and have the traffic go through the Dapr sidecar. Doing so yields the following Dapr service invocation benefits to developers:

Dapr allows proxying all kinds of gRPC invocations, including unary and stream-based ones.

The following example is taken from the “hello world” grpc-go example. Although this example is in Go, the same concepts apply to all programming languages supported by gRPC.

```go
package main

import (
	"context"
	"log"
	"net"

	"google.golang.org/grpc"
	pb "google.golang.org/grpc/examples/helloworld/helloworld"
)

const (
	port = ":50051"
)

// server is used to implement helloworld.GreeterServer.
type server struct {
	pb.UnimplementedGreeterServer
}

// SayHello implements helloworld.GreeterServer
func (s *server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {
	log.Printf("Received: %v", in.GetName())
	return &pb.HelloReply{Message: "Hello " + in.GetName()}, nil
}

func main() {
	lis, err := net.Listen("tcp", port)
	if err != nil {
		log.Fatalf("failed to listen: %v", err)
	}
	s := grpc.NewServer()
	pb.RegisterGreeterServer(s, &server{})
	log.Printf("server listening at %v", lis.Addr())
	if err := s.Serve(lis); err != nil {
		log.Fatalf("failed to serve: %v", err)
	}
}
```

This Go app implements the Greeter proto service and exposes a SayHello method.

```bash
dapr run --app-id server --app-port 50051 -- go run main.go
```

Using the Dapr CLI, we’re assigning a unique id to the app, server, using the --app-id flag.

The following example shows you how to discover the Greeter service using Dapr from a gRPC client.

Notice that instead of invoking the target service directly at port 50051, the client is invoking its local Dapr sidecar over port 50007 which then provides all the capabilities of service invocation including service discovery, tracing, mTLS and retries.

```go
package main

import (
	"context"
	"log"
	"time"

	"google.golang.org/grpc"
	pb "google.golang.org/grpc/examples/helloworld/helloworld"
	"google.golang.org/grpc/metadata"
)

const (
	address = "localhost:50007"
)

func main() {
	// Set up a connection to the server.
	conn, err := grpc.Dial(address, grpc.WithInsecure(), grpc.WithBlock())
	if err != nil {
		log.Fatalf("did not connect: %v", err)
	}
	defer conn.Close()
	c := pb.NewGreeterClient(conn)

	ctx, cancel := context.WithTimeout(context.Background(), time.Second*2)
	defer cancel()

	ctx = metadata.AppendToOutgoingContext(ctx, "dapr-app-id", "server")
	r, err := c.SayHello(ctx, &pb.HelloRequest{Name: "Darth Tyrannus"})
	if err != nil {
		log.Fatalf("could not greet: %v", err)
	}

	log.Printf("Greeting: %s", r.GetMessage())
}
```

The following line tells Dapr to discover and invoke an app named server:

```go
ctx = metadata.AppendToOutgoingContext(ctx, "dapr-app-id", "server")
```

All languages supported by gRPC allow for adding metadata. Here are a few examples:

```java
Metadata headers = new Metadata();
Metadata.Key<String> appIdKey = Metadata.Key.of("dapr-app-id", Metadata.ASCII_STRING_MARSHALLER);
headers.put(appIdKey, "server");

GreeterGrpc.GreeterBlockingStub stub = GreeterGrpc.newBlockingStub(channel);
stub = stub.withInterceptors(MetadataUtils.newAttachHeadersInterceptor(headers));
stub.sayHello(HelloRequest.newBuilder().setName("Darth Malak").build());
```

```csharp
var metadata = new Metadata
{
    { "dapr-app-id", "server" }
};

var call = client.SayHello(new HelloRequest { Name = "Darth Nihilus" }, metadata);
```

```python
metadata = (('dapr-app-id', 'server'),)
response = stub.SayHello(helloworld_pb2.HelloRequest(name='Darth Revan'), metadata=metadata)
```

```javascript
const metadata = new grpc.Metadata();
metadata.add('dapr-app-id', 'server');

client.sayHello({ name: "Darth Malgus" }, metadata, (err, response) => {
  if (err) throw err;
  console.log(response.message);
});
```

```ruby
metadata = { 'dapr-app-id' => 'server' }
response = service.say_hello(Helloworld::HelloRequest.new(name: 'Darth Bane'), metadata: metadata)
```

```cpp
grpc::ClientContext context;
context.AddMetadata("dapr-app-id", "server");
```

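Run the client with the Dapr CLI, setting the sidecar's gRPC port to 50007 so that it matches the client's address:

```bash
dapr run --app-id client --dapr-grpc-port 50007 -- go run main.go
```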

If you’re running Dapr locally with Zipkin installed, open the browser at http://localhost:9411 and view the traces between the client and server.

Set the following Dapr annotations on your deployment:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grpc-app
  namespace: default
  labels:
    app: grpc-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grpc-app
  template:
    metadata:
      labels:
        app: grpc-app
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "server"
        dapr.io/app-protocol: "grpc"
        dapr.io/app-port: "50051"
...
```

The dapr.io/app-protocol: "grpc" annotation tells Dapr to invoke the app using gRPC.

If your app uses a TLS connection, you can tell Dapr to invoke your app over TLS with the dapr.io/app-protocol: "grpcs" annotation (see the full list of Dapr annotations). Note that Dapr does not validate TLS certificates presented by the app.

When running on namespace-supported platforms, you include the namespace of the target app in the app ID, for example myApp.production.

For example, invoking the gRPC server on a different namespace:

```go
ctx = metadata.AppendToOutgoingContext(ctx, "dapr-app-id", "server.production")
```

See the Cross namespace API spec for more information on namespaces.

The example above showed you how to directly invoke a different service running locally or in Kubernetes. Dapr outputs metrics, tracing and logging information allowing you to visualize a call graph between services, log errors and optionally log the payload body.

For more information on tracing and logs see the observability article.

When using Dapr to proxy streaming RPC calls using gRPC, you must set an additional metadata option dapr-stream with value true.

For example:

```go
ctx = metadata.AppendToOutgoingContext(ctx, "dapr-app-id", "server")
ctx = metadata.AppendToOutgoingContext(ctx, "dapr-stream", "true")
```

```java
Metadata headers = new Metadata();
Metadata.Key<String> appIdKey = Metadata.Key.of("dapr-app-id", Metadata.ASCII_STRING_MARSHALLER);
Metadata.Key<String> streamKey = Metadata.Key.of("dapr-stream", Metadata.ASCII_STRING_MARSHALLER);
headers.put(appIdKey, "server");
headers.put(streamKey, "true");
```

```csharp
var metadata = new Metadata
{
    { "dapr-app-id", "server" },
    { "dapr-stream", "true" }
};
```

```python
metadata = (('dapr-app-id', 'server'), ('dapr-stream', 'true'),)
```

```javascript
const metadata = new grpc.Metadata();
metadata.add('dapr-app-id', 'server');
metadata.add('dapr-stream', 'true');
```

```ruby
metadata = { 'dapr-app-id' => 'server', 'dapr-stream' => 'true' }
```

```cpp
grpc::ClientContext context;
context.AddMetadata("dapr-app-id", "server");
context.AddMetadata("dapr-stream", "true");
```
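For Python clients, a small helper can keep both rules in one place: every proxied call carries dapr-app-id, and streaming calls also carry dapr-stream set to true. The helper name dapr_grpc_metadata is hypothetical; the tuple of key/value pairs it returns is the shape the Python examples above pass as the metadata argument.

```python
from typing import Tuple


def dapr_grpc_metadata(app_id: str, streaming: bool = False) -> Tuple[Tuple[str, str], ...]:
    """Build gRPC call metadata for a call proxied through the local Dapr sidecar."""
    metadata = [("dapr-app-id", app_id)]
    if streaming:
        # Dapr requires this extra key when proxying streaming RPCs
        metadata.append(("dapr-stream", "true"))
    return tuple(metadata)


print(dapr_grpc_metadata("server"))
print(dapr_grpc_metadata("server", streaming=True))
```

Usage with a generated stub would look like stub.SayHello(request, metadata=dapr_grpc_metadata("server")), passing streaming=True only for streaming methods.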

Currently, resiliency policies are not supported for service invocation via gRPC.

When proxying streaming gRPCs, due to their long-lived nature, resiliency policies are applied on the “initial handshake” only. As a consequence:

Watch this video on how to use Dapr’s gRPC proxying capability:

This article demonstrates how to call a non-Dapr endpoint using Dapr over HTTP.

Using Dapr’s service invocation API, you can communicate with endpoints that either use or do not use Dapr. Using Dapr to call endpoints that do not use Dapr not only provides a consistent API, but also the following Dapr service invocation benefits:

Sometimes you need to call a non-Dapr HTTP endpoint. For example:

By defining an HTTPEndpoint resource, you declaratively define a way to interact with a non-Dapr endpoint. You then use the service invocation URL to invoke non-Dapr endpoints. Alternatively, you can place a non-Dapr Fully Qualified Domain Name (FQDN) endpoint URL directly into the service invocation URL.

When using service invocation, the Dapr runtime follows a precedence order:

1. Is this a named HTTPEndpoint resource?
2. Is this an FQDN URL with an http:// or https:// prefix?
3. Is this an appID?

The diagram below is an overview of how Dapr’s service invocation works when invoking non-Dapr endpoints.

Dapr locates Service B, the non-Dapr endpoint, using the HTTPEndpoint or FQDN URL, then forwards the message to Service B.

A Dapr application can invoke a non-Dapr endpoint in one of two ways, by providing either of the following:

A named HTTPEndpoint resource, including defining an HTTPEndpoint resource type. See the HTTPEndpoint reference guide for an example.

localhost:3500/v1.0/invoke/<HTTPEndpoint-name>/method/<my-method>

For example, with an HTTPEndpoint resource called “palpatine” and a method called “order66”, this would be:

```bash
curl http://localhost:3500/v1.0/invoke/palpatine/method/order66
```

An FQDN URL to the non-Dapr endpoint.

localhost:3500/v1.0/invoke/<URL>/method/<my-method>

For example, with the FQDN URL https://darthsidious.starwars, this would be:

```bash
curl http://localhost:3500/v1.0/invoke/https://darthsidious.starwars/method/order66
```

App IDs are always used to call Dapr applications, together with the method name. Read the How-To: Invoke services using HTTP guide for more information. For example:

localhost:3500/v1.0/invoke/<appID>/method/<my-method>

```bash
curl http://localhost:3602/v1.0/invoke/orderprocessor/method/checkout
```
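The precedence order can be sketched as a toy classifier. This is an illustration of the lookup order described above, not Dapr's runtime code: the set of names stands in for whatever HTTPEndpoint resources the sidecar has loaded, and the sample targets come from the examples in this section.

```python
from typing import Set
from urllib.parse import urlsplit


def classify_target(target: str, http_endpoint_names: Set[str]) -> str:
    """Mimic the lookup order for <target> in /v1.0/invoke/<target>/method/..."""
    # 1. A named HTTPEndpoint resource takes precedence
    if target in http_endpoint_names:
        return "HTTPEndpoint"
    # 2. Otherwise, a URL with an http:// or https:// prefix is an FQDN URL
    if urlsplit(target).scheme in ("http", "https"):
        return "FQDN URL"
    # 3. Anything else is treated as a Dapr app ID
    return "appID"


endpoints = {"palpatine"}  # hypothetical set of loaded HTTPEndpoint resources
for target in ("palpatine", "https://darthsidious.starwars", "orderprocessor"):
    print(target, "->", classify_target(target, endpoints))
```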

Using the HTTPEndpoint resource allows you to use any combination of a root certificate, client certificate and private key according to the authentication requirements of the remote endpoint.

Using a root CA:

```yaml
apiVersion: dapr.io/v1alpha1
kind: HTTPEndpoint
metadata:
  name: "external-http-endpoint-tls"
spec:
  baseUrl: https://service-invocation-external:443
  headers:
  - name: "Accept-Language"
    value: "en-US"
  clientTLS:
    rootCA:
      secretKeyRef:
        name: dapr-tls-client
        key: ca.crt
```

Using a client certificate and private key:

```yaml
apiVersion: dapr.io/v1alpha1
kind: HTTPEndpoint
metadata:
  name: "external-http-endpoint-tls"
spec:
  baseUrl: https://service-invocation-external:443
  headers:
  - name: "Accept-Language"
    value: "en-US"
  clientTLS:
    certificate:
      secretKeyRef:
        name: dapr-tls-client
        key: tls.crt
    privateKey:
      secretKeyRef:
        name: dapr-tls-key
        key: tls.key
```

Watch this video on how to use service invocation to call non-Dapr endpoints.

In this article, you’ll learn how you can call between services deployed to different namespaces. By default, service invocation supports invoking services within the same namespace by simply referencing the app ID (nodeapp):

localhost:3500/v1.0/invoke/nodeapp/method/neworder

Service invocation also supports calls across namespaces. On all supported hosting platforms, Dapr app IDs conform to a valid FQDN format that includes the target namespace. You can specify both the app ID (nodeapp) and the namespace the app runs in (production).

Example 1

Call the neworder method on the nodeapp in the production namespace:

localhost:3500/v1.0/invoke/nodeapp.production/method/neworder

When calling an application in a namespace using service invocation, you qualify it with the namespace. This proves useful in cross-namespace calls in a Kubernetes cluster.
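A minimal sketch of building and reading namespace-qualified targets. Both helper names are hypothetical, and splitting at the last dot assumes the namespace itself contains no dots, which holds for Kubernetes namespace names because they must be DNS labels.

```python
from typing import Optional, Tuple


def qualify(app_id: str, namespace: Optional[str] = None) -> str:
    """Return "<app-id>.<namespace>", or the bare app ID for same-namespace calls."""
    return f"{app_id}.{namespace}" if namespace else app_id


def split_qualified(target: str) -> Tuple[str, Optional[str]]:
    """Split "<app-id>.<namespace>" at the last dot (assumes a dot-free namespace)."""
    app_id, dot, namespace = target.rpartition(".")
    return (app_id, namespace) if dot else (target, None)


print(f"localhost:3500/v1.0/invoke/{qualify('nodeapp', 'production')}/method/neworder")
```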

Example 2

Call the ping method on myapp scoped to the production namespace:

https://localhost:3500/v1.0/invoke/myapp.production/method/ping

Example 3

Call the same ping method as example 2 using a curl command from an external DNS address (in this case, api.demo.dapr.team) and supply the Dapr API token for authentication:

MacOS/Linux:

```bash
curl -i -d '{ "message": "hello" }' \
  -H "Content-type: application/json" \
  -H "dapr-api-token: ${API_TOKEN}" \
  https://api.demo.dapr.team/v1.0/invoke/myapp.production/method/ping
```
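The same Example 3 request can be sketched in Python with only the standard library. Like the curl command, it reads the token from the API_TOKEN environment variable; it builds the request without sending it, and ping_request is a hypothetical name.

```python
import json
import os
from urllib.request import Request


def ping_request(base_url: str, token: str) -> Request:
    """Build the POST to myapp's ping method in the production namespace."""
    body = json.dumps({"message": "hello"}).encode("utf-8")
    return Request(
        f"{base_url}/v1.0/invoke/myapp.production/method/ping",
        data=body,
        headers={"Content-Type": "application/json", "dapr-api-token": token},
        method="POST",
    )


req = ping_request("https://api.demo.dapr.team", os.environ.get("API_TOKEN", ""))
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```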

Learn more about how to use Dapr Pub/sub:

Publish and subscribe (pub/sub) enables microservices to communicate with each other using messages for event-driven architectures.

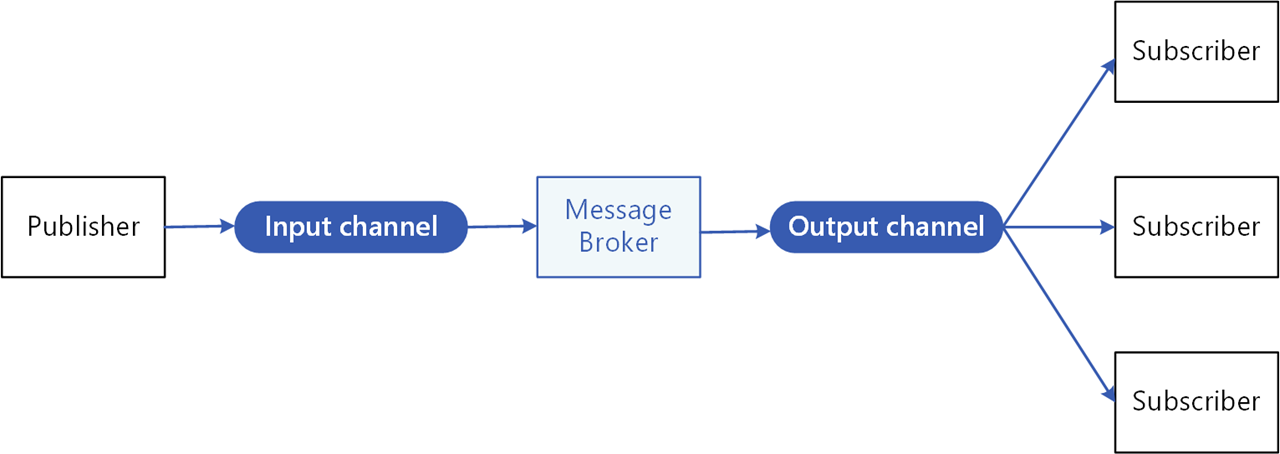

An intermediary message broker copies each message from a publisher’s input channel to an output channel for all subscribers interested in that message. This pattern is especially useful when you need to decouple microservices from one another.

The pub/sub API in Dapr:

The specific message broker used by your service is pluggable and configured as a Dapr pub/sub component at runtime. This removes the dependency from your service and makes your service more portable and flexible to changes.

When using pub/sub in Dapr:

The following overview video and demo demonstrate how Dapr pub/sub works.

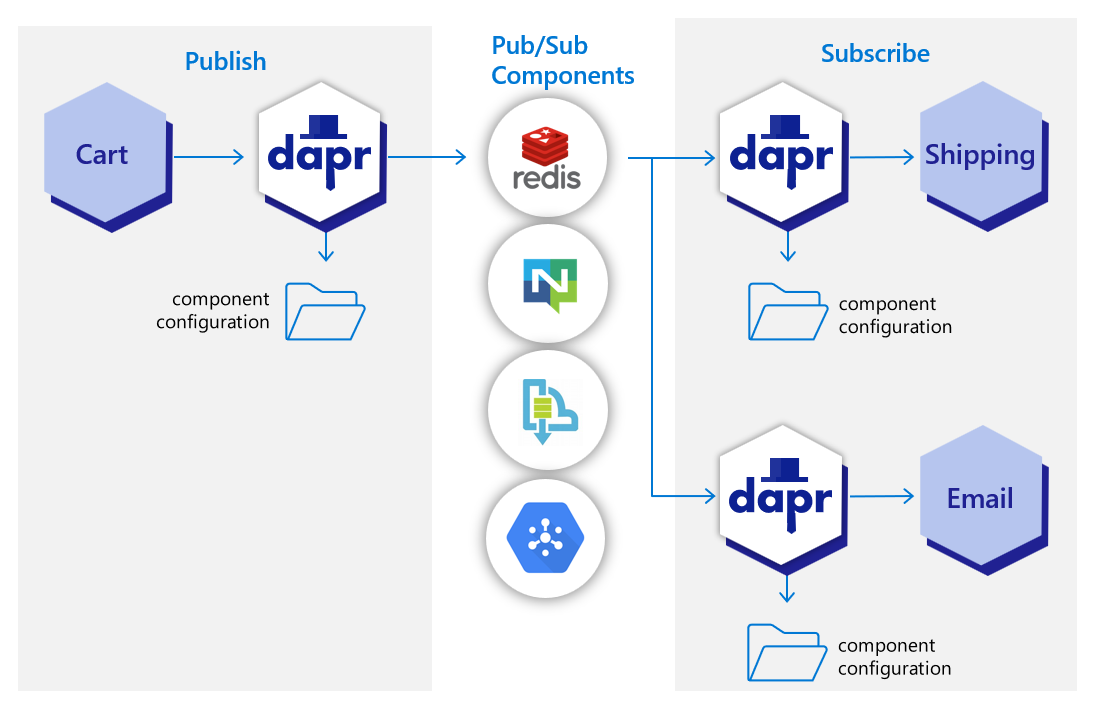

In the diagram below, a “shipping” service and an “email” service have both subscribed to topics published by a “cart” service. Each service loads pub/sub component configuration files that point to the same pub/sub message broker component; for example: Redis Streams, NATS Streaming, Azure Service Bus, or GCP pub/sub.

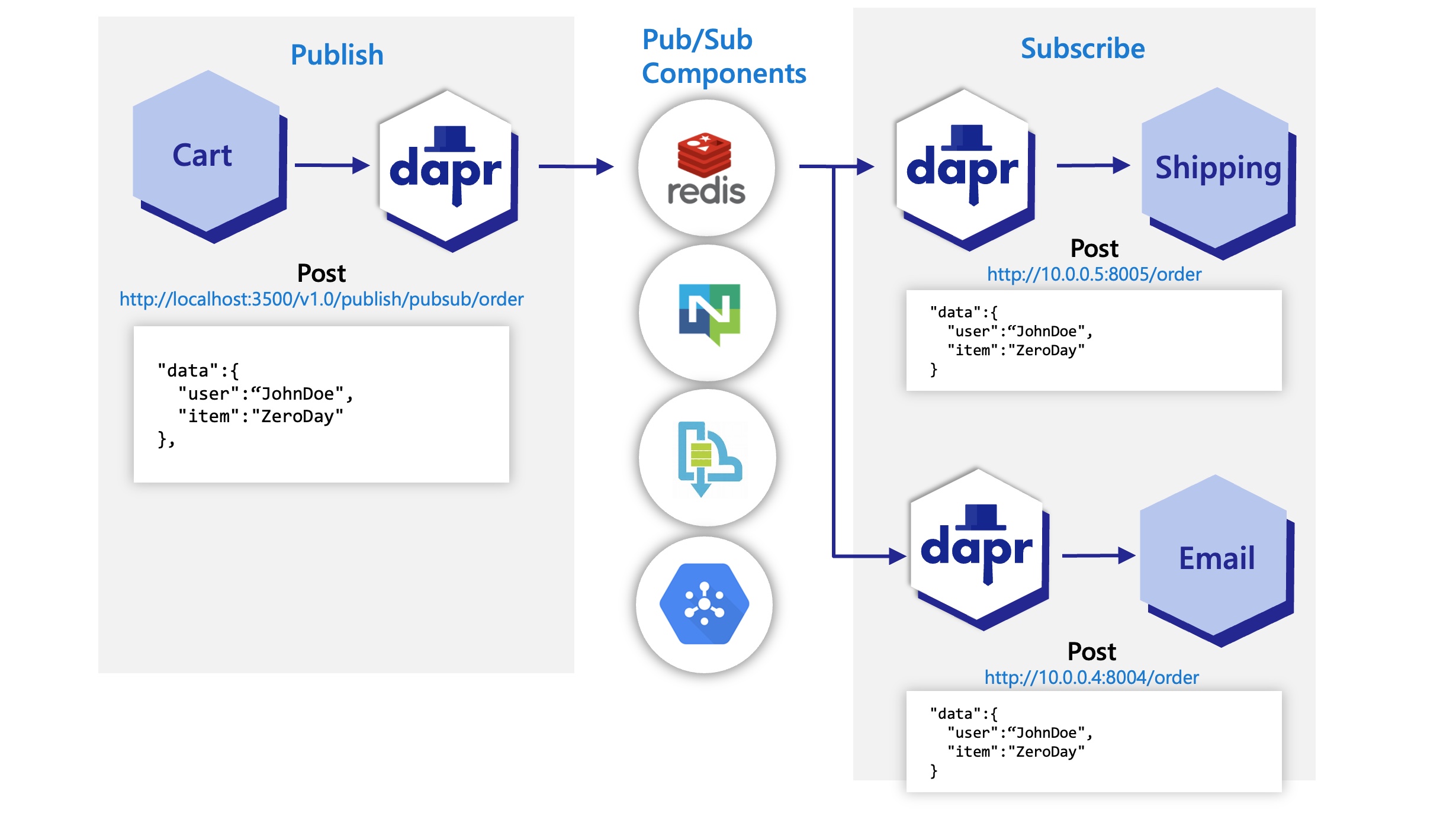

In the diagram below, the Dapr API posts an “order” topic from the publishing “cart” service to “order” endpoints on the “shipping” and “email” subscribing services.

View the complete list of pub/sub components that Dapr supports.

The pub/sub API building block brings several features to your application.

To enable message routing and provide additional context with each message between services, Dapr uses the CloudEvents 1.0 specification as its message format. Any message sent by an application to a topic using Dapr is automatically wrapped in a CloudEvents envelope, using the Content-Type header value for the datacontenttype attribute.
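To show the shape of that envelope, here is a minimal sketch of a CloudEvents 1.0 wrapper in Python. Dapr builds the real envelope for you, and its version carries extra attributes (for example, the topic and pub/sub name) that this sketch omits; the event type order.created is hypothetical.

```python
import json
import uuid


def wrap_in_cloudevent(data, source: str, event_type: str,
                       content_type: str = "application/json") -> dict:
    """Wrap a payload in a minimal CloudEvents 1.0 envelope (JSON format)."""
    return {
        # id, source, specversion and type are required by CloudEvents 1.0
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        # Mirrors how Dapr fills datacontenttype from the Content-Type header
        "datacontenttype": content_type,
        "data": data,
    }


event = wrap_in_cloudevent({"orderId": 100}, source="cart", event_type="order.created")
print(json.dumps(event, indent=2))
```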

For more information, read about messaging with CloudEvents, or sending raw messages without CloudEvents.

If one of your applications uses Dapr while another doesn’t, you can disable the CloudEvent wrapping for a publisher or subscriber. This allows partial adoption of Dapr pub/sub in applications that cannot adopt Dapr all at once.

For more information, read how to use pub/sub without CloudEvents.

When publishing a message, it’s important to specify the content type of the data being sent. Unless specified, Dapr will assume text/plain.

For HTTP calls, set it with the Content-Type header.

In principle, Dapr considers a message successfully delivered once the subscriber processes the message and responds with a non-error response. For more granular control, Dapr’s pub/sub API also provides explicit statuses, defined in the response payload, with which the subscriber indicates specific handling instructions to Dapr (for example, RETRY or DROP).
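A minimal sketch of a subscriber handler that chooses a status. It assumes an HTTP subscriber that replies with a JSON body of the form {"status": ...} using SUCCESS, RETRY, or DROP; the handler, the process function, the simulate_timeout flag, and the DownstreamTimeout exception are all hypothetical, and the web-framework wiring is omitted.

```python
from typing import Any, Dict


class DownstreamTimeout(Exception):
    """Hypothetical transient failure, such as a dependency that timed out."""


def process(order: Dict[str, Any]) -> None:
    # Stand-in for real work; "simulate_timeout" is a hypothetical test flag
    if order.get("simulate_timeout"):
        raise DownstreamTimeout("inventory service timed out")


def handle_order_event(cloud_event: Dict[str, Any]) -> Dict[str, str]:
    """Return the JSON body the subscriber sends back to Dapr."""
    order = cloud_event.get("data")
    if not isinstance(order, dict) or "orderId" not in order:
        # A malformed message will never succeed, so ask Dapr to drop it
        return {"status": "DROP"}
    try:
        process(order)
    except DownstreamTimeout:
        # Transient failure: ask Dapr to retry delivery
        return {"status": "RETRY"}
    return {"status": "SUCCESS"}
```

Distinguishing permanent failures (DROP) from transient ones (RETRY) keeps a bad message from being redelivered indefinitely.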

Dapr applications can subscribe to published topics via three subscription types that support the same features: declarative, streaming and programmatic.

| Subscription type | Description |
|---|---|
| Declarative | The subscription is defined in an external file. The declarative approach removes the Dapr dependency from your code and allows existing applications to subscribe to topics without having to change code. |
| Streaming | The subscription is defined in the user code. Streaming subscriptions are dynamic, meaning they allow for adding or removing subscriptions at runtime. They do not require a subscription endpoint in your application (which both programmatic and declarative subscriptions require), making them easy to configure in code. Streaming subscriptions also do not require an app to be configured with the sidecar to receive messages. Because messages are sent to a message handler in code, streaming subscriptions have no concept of routes or bulk subscriptions. |
| Programmatic | The subscription is defined in the user code. The programmatic approach implements the static subscription and requires an endpoint in your code. |

For more information, read about the subscriptions in Subscription Types.

To reload topic subscriptions that are defined programmatically or declaratively, the Dapr sidecar needs to be restarted.

The Dapr sidecar can be made to dynamically reload changed declarative topic subscriptions without restarting by enabling the HotReload feature gate.

Hot reloading of topic subscriptions is currently a preview feature.

In-flight messages are unaffected when reloading a subscription.

Dapr provides the content-based routing pattern. Pub/sub routing is an implementation of this pattern that allows developers to use expressions to route CloudEvents, based on their contents, to different URIs/paths and event handlers in your application. If no route matches, an optional default route is used. This is useful as your applications expand to support multiple event versions or special cases.

This feature is available to both the declarative and programmatic subscription approaches.

For more information on message routing, read the Dapr pub/sub API reference.

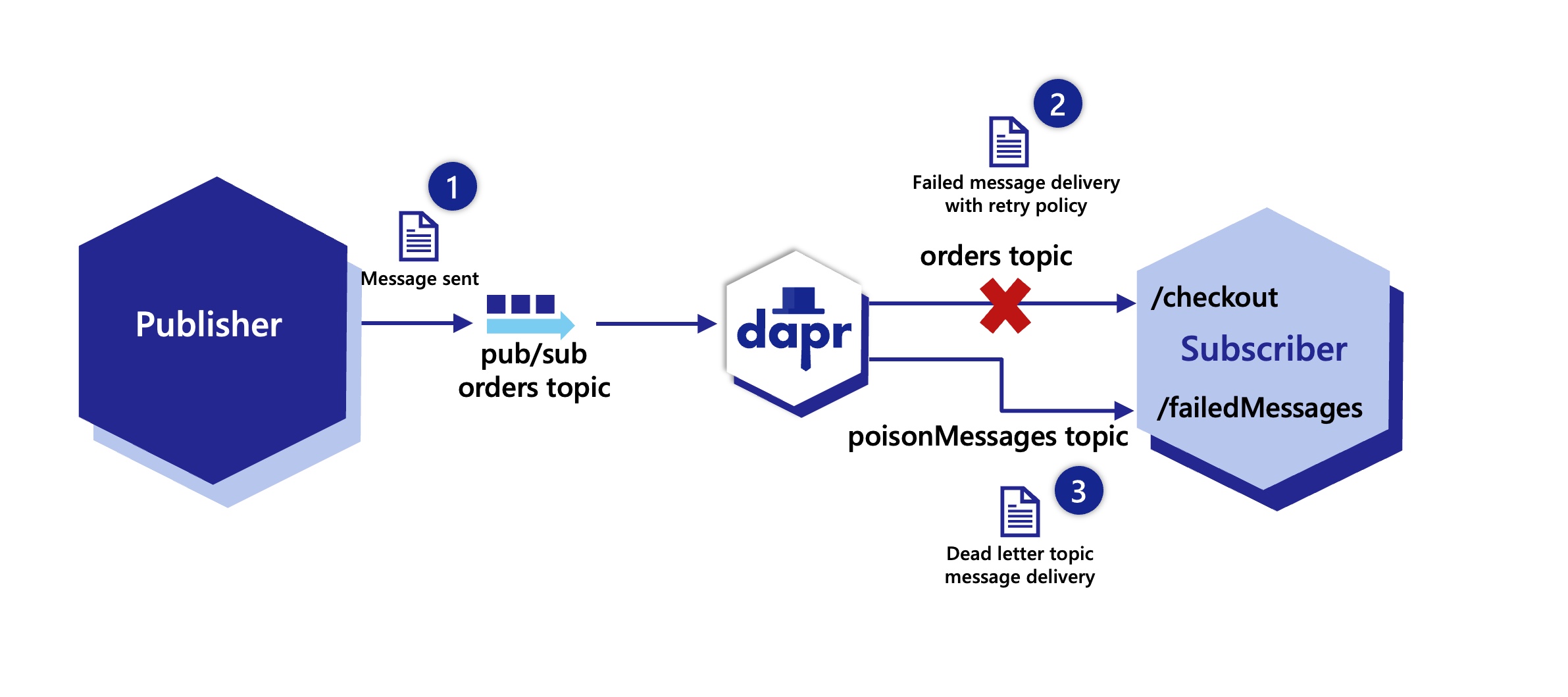

Sometimes, messages can’t be processed because of a variety of possible issues, such as erroneous conditions within the producer or consumer application or an unexpected state change that causes an issue with your application code. Dapr allows developers to set dead letter topics to deal with messages that cannot be delivered to an application. This feature is available on all pub/sub components and prevents consumer applications from endlessly retrying a failed message. For more information, read about dead letter topics.
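In a programmatic subscription, the dead letter topic can be declared alongside the route. The sketch below builds the body an app might return from its GET /dapr/subscribe endpoint. It assumes the deadLetterTopic key for the dead letter setting. The poisonMessages topic and the /failedMessages route are hypothetical names.

```python
import json


def subscriptions() -> list:
    """Body an app could return from GET /dapr/subscribe (sketch)."""
    return [
        {
            "pubsubname": "order-pub-sub",
            "topic": "orders",
            "route": "/checkout",
            # Messages that cannot be delivered are sent here instead of
            # being retried forever (hypothetical topic name).
            "deadLetterTopic": "poisonMessages",
        },
        {
            # A second subscription lets the app inspect dead-lettered messages.
            "pubsubname": "order-pub-sub",
            "topic": "poisonMessages",
            "route": "/failedMessages",
        },
    ]


print(json.dumps(subscriptions(), indent=2))
```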

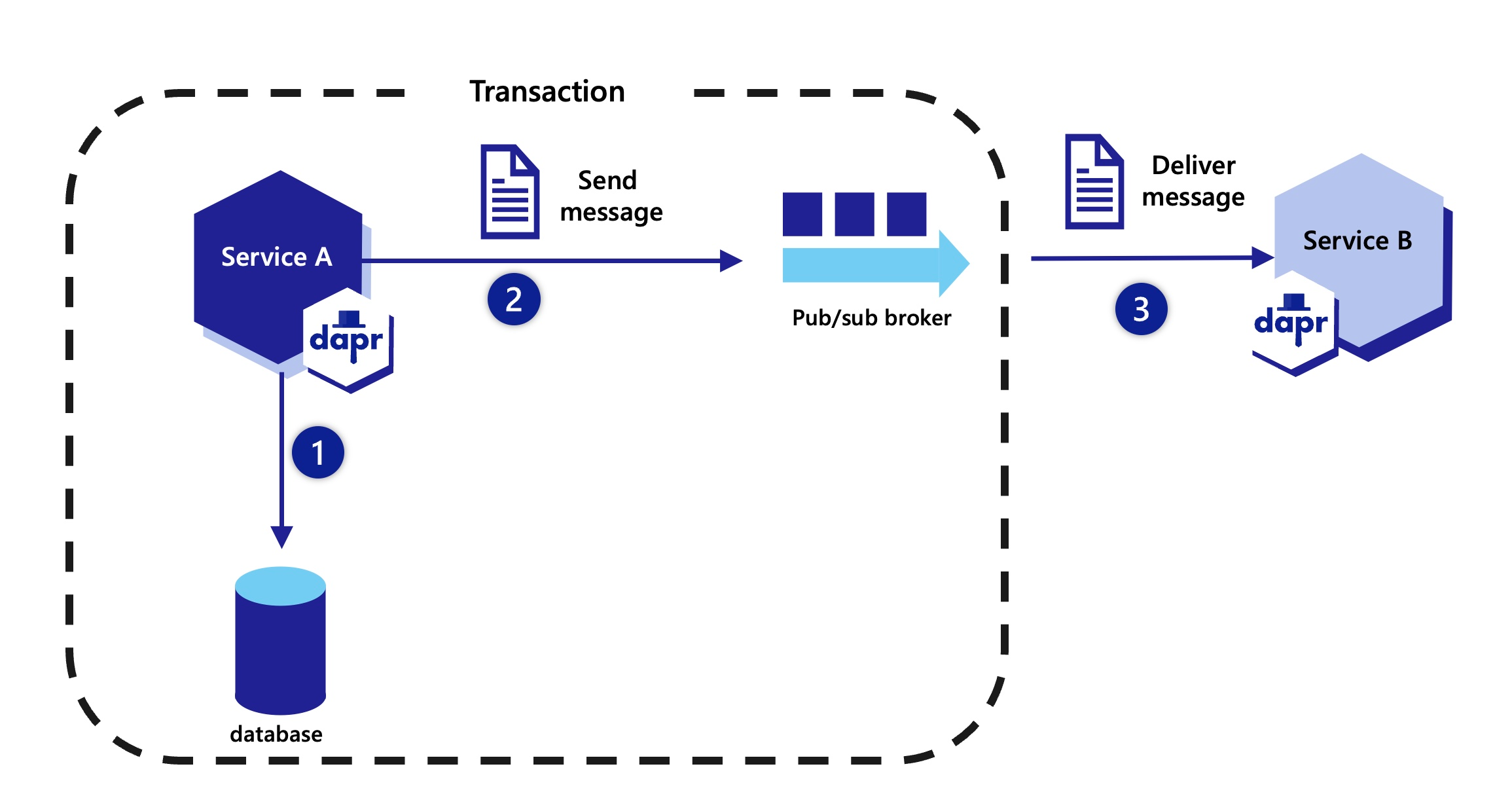

Dapr enables developers to use the outbox pattern for achieving a single transaction across a transactional state store and any message broker. For more information, read How to enable transactional outbox messaging.

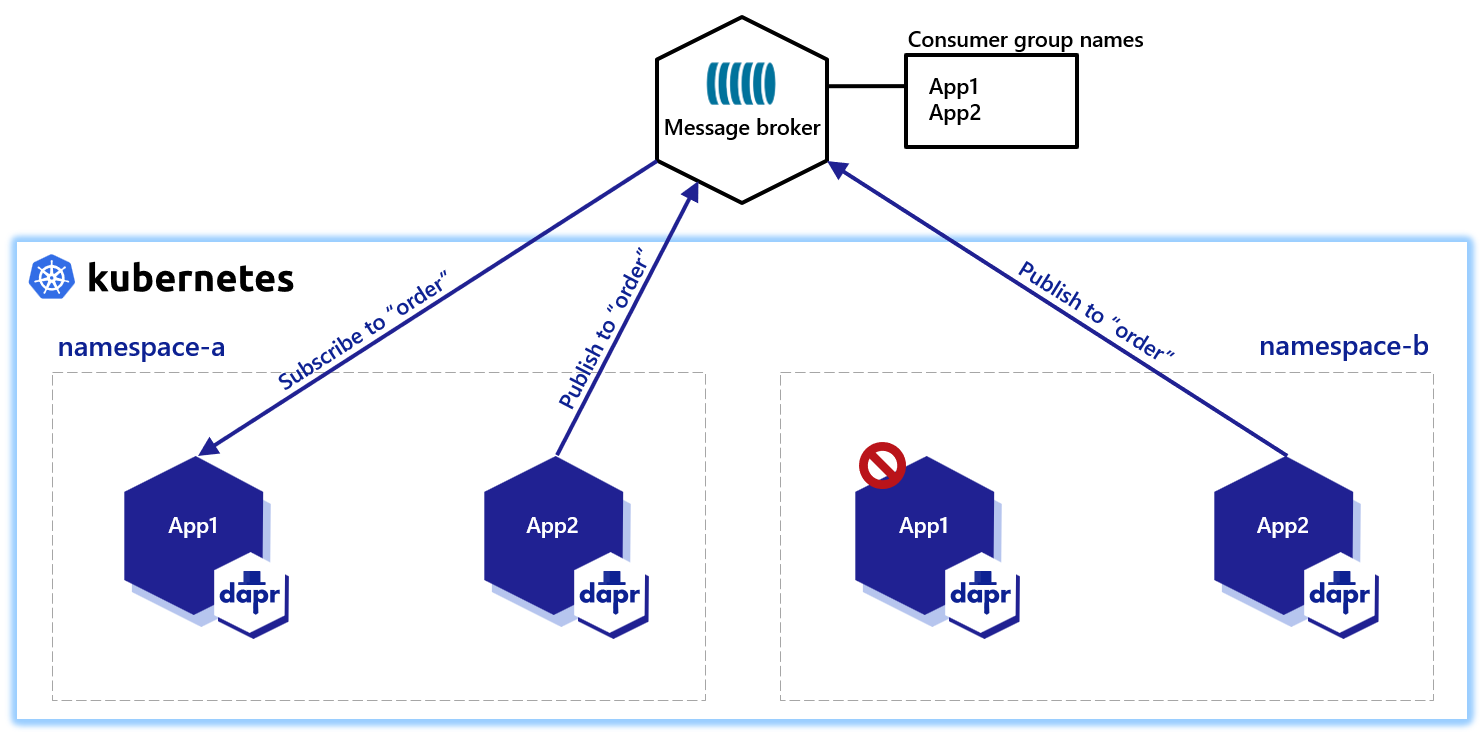

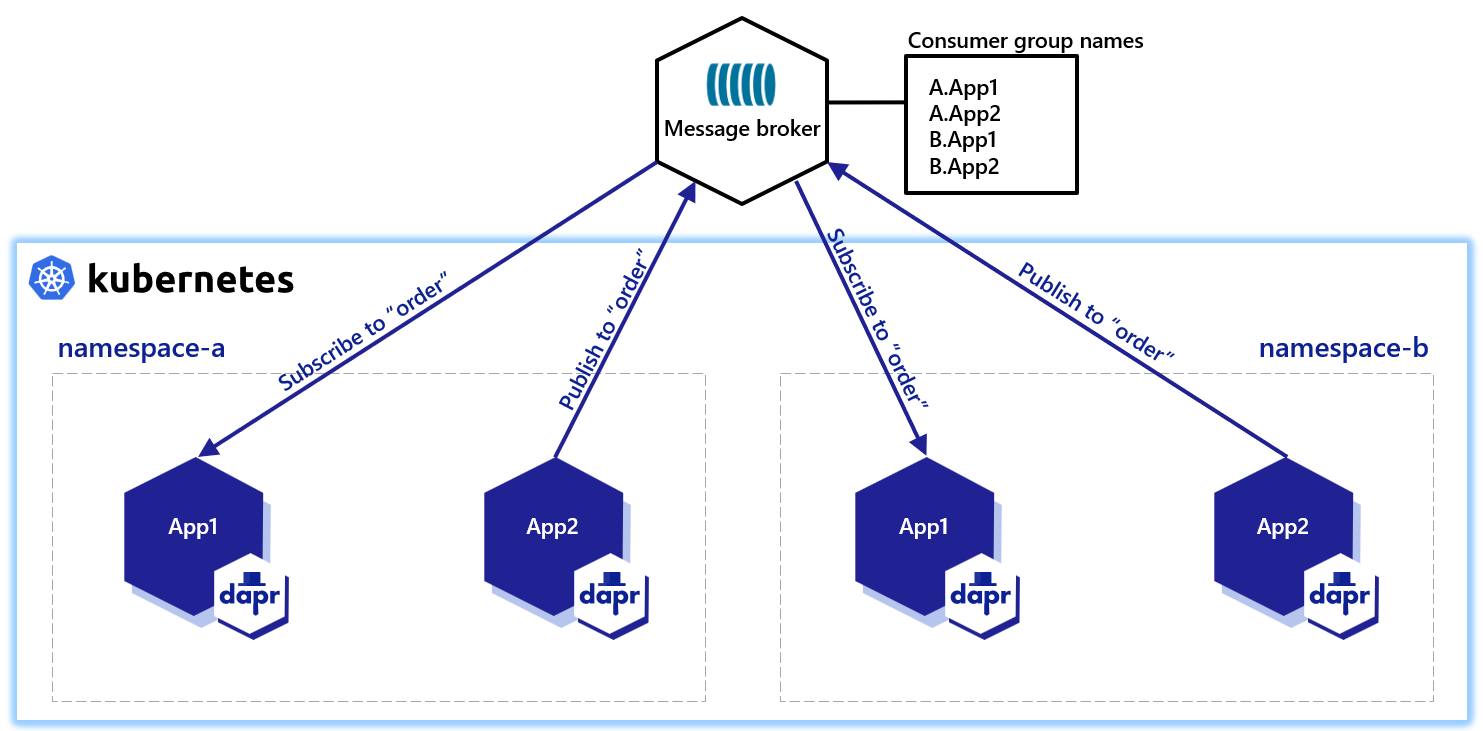

Dapr solves multi-tenancy at-scale with namespaces for consumer groups. Simply include the "{namespace}" value in your component metadata for consumer groups to allow multiple namespaces with applications of the same app-id to publish and subscribe to the same message broker.

Dapr guarantees at-least-once semantics for message delivery. When an application publishes a message to a topic using the pub/sub API, Dapr ensures the message is delivered at least once to every subscriber.

Even if the message fails to deliver, or your application crashes, Dapr attempts to redeliver the message until successful delivery.

All Dapr pub/sub components support the at-least-once guarantee.

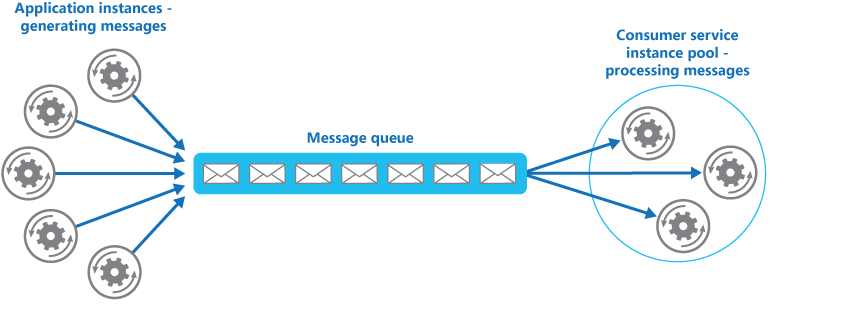

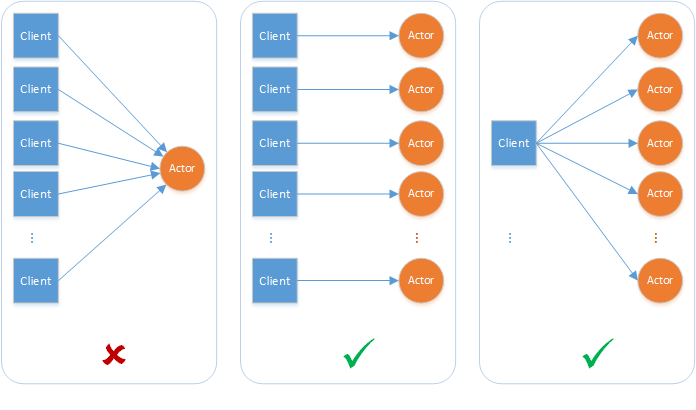

Dapr handles the burden of dealing with consumer groups and the competing consumers pattern. In the competing consumers pattern, multiple application instances using a single consumer group compete for the message. Dapr enforces the competing consumer pattern when replicas use the same app-id without explicit consumer group overrides.

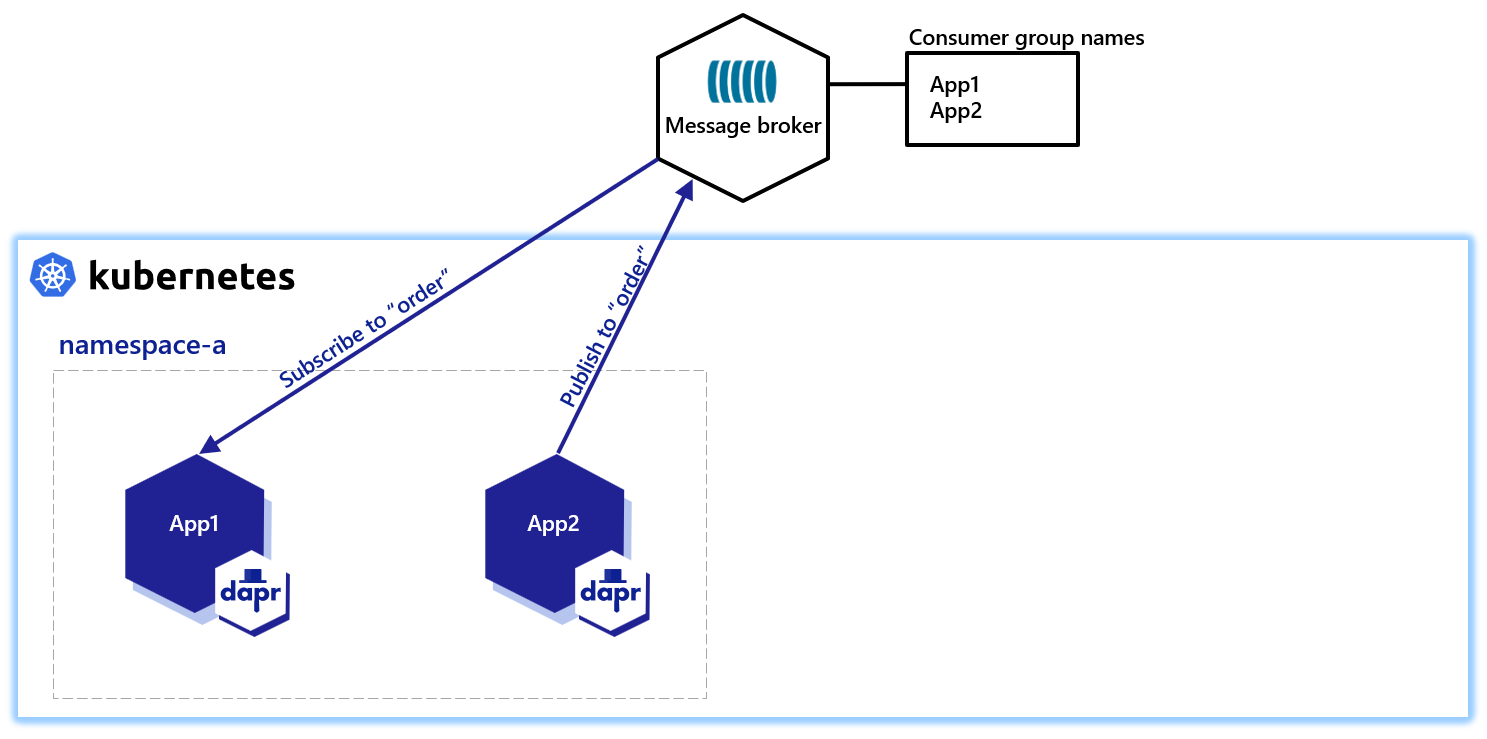

When multiple instances of the same application (with same app-id) subscribe to a topic, Dapr delivers each message to only one instance of that application. This concept is illustrated in the diagram below.

Similarly, if two different applications (with different app-id) subscribe to the same topic, Dapr delivers each message to only one instance of each application.
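The delivery rule above can be modelled in a few lines. This is a simulation, not Dapr code. Replicas of one app-id share a consumer group, so each message reaches exactly one of them, while every distinct app-id gets its own copy. Round-robin selection is an assumption made so the output is deterministic. In practice the broker decides which replica receives a message.

```python
import itertools
from collections import defaultdict


def deliver(messages, subscribers):
    """Model competing consumers: one delivery per message per app-id.

    `subscribers` maps an app-id to the names of its replicas.
    """
    rotations = {app: itertools.cycle(replicas) for app, replicas in subscribers.items()}
    received = defaultdict(list)
    for msg in messages:
        for rotation in rotations.values():
            # Exactly one replica of each app receives this message.
            received[next(rotation)].append(msg)
    return dict(received)


result = deliver(
    ["m1", "m2", "m3"],
    {"checkout": ["checkout-0", "checkout-1"], "shipping": ["shipping-0"]},
)
print(result)
```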

Not all Dapr pub/sub components support the competing consumer pattern. Currently, the following (non-exhaustive) pub/sub components support this:

By default, all topic messages associated with an instance of a pub/sub component are available to every application configured with that component. You can limit which application can publish or subscribe to topics with Dapr topic scoping. For more information, read: pub/sub topic scoping.

Dapr can set a time-to-live (TTL) on a per-message basis: if the message is not read from the pub/sub component within that time, it is discarded. This prevents a build-up of unread messages. A message that has been in the queue longer than the configured TTL is marked as dead. For more information, read pub/sub message TTL.
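A publisher calling the HTTP API can attach the TTL as the ttlInSeconds metadata query parameter. The Java SDK example later in this section sets a TTL through Metadata.TTL_IN_SECONDS. The sketch below only builds the request with the Python standard library. The helper name and the default sidecar HTTP port 3500 are assumptions, and sending the request requires a running sidecar.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_publish_request(pubsub, topic, payload, ttl_seconds, dapr_http_port=3500):
    """Build (but do not send) a publish request carrying a per-message TTL."""
    query = urlencode({"metadata.ttlInSeconds": str(ttl_seconds)})
    url = f"http://localhost:{dapr_http_port}/v1.0/publish/{pubsub}/{topic}?{query}"
    return Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_publish_request("order-pub-sub", "orders", {"orderId": "100"}, ttl_seconds=120)
print(req.full_url)
# urllib.request.urlopen(req) would send it to a running sidecar.
```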

Dapr supports sending and receiving multiple messages in a single request. When writing applications that need to send or receive a large number of messages, bulk operations achieve higher throughput by reducing the overall number of requests. For more information, read pub/sub bulk messages.
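A bulk publish request carries a list of entries, each with its own identifier. The sketch below prepares such a body. It assumes the alpha bulk publish endpoint (/v1.0-alpha1/publish/bulk/{pubsub}/{topic}) and the entryId, event and contentType entry fields. Check the pub/sub bulk messages reference before relying on them.

```python
import json
import uuid


def build_bulk_entries(orders):
    """Wrap each order in a bulk-publish entry with a unique entryId."""
    return [
        {
            "entryId": str(uuid.uuid4()),  # identifies the entry in any failure report
            "event": order,
            "contentType": "application/json",
        }
        for order in orders
    ]


entries = build_bulk_entries([{"orderId": i} for i in range(1, 4)])
body = json.dumps(entries)
# POST `body` to http://localhost:3500/v1.0-alpha1/publish/bulk/order-pub-sub/orders
```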

When running on Kubernetes, subscribers can have a sticky consumerID per instance when using StatefulSets in combination with the {podName} marker. See how to horizontally scale subscribers with StatefulSets.

Want to put the Dapr pub/sub API to the test? Walk through the following quickstart and tutorials to see pub/sub in action:

| Quickstart/tutorial | Description |
|---|---|
| Pub/sub quickstart | Send and receive messages using the publish and subscribe API. |
| Pub/sub tutorial | Demonstrates how to use Dapr to enable pub/sub applications. Uses Redis as a pub/sub component. |

Want to skip the quickstarts? Not a problem. You can try out the pub/sub building block directly in your application to publish messages and subscribe to a topic. After Dapr is installed, you can begin using the pub/sub API starting with the pub/sub how-to guide.

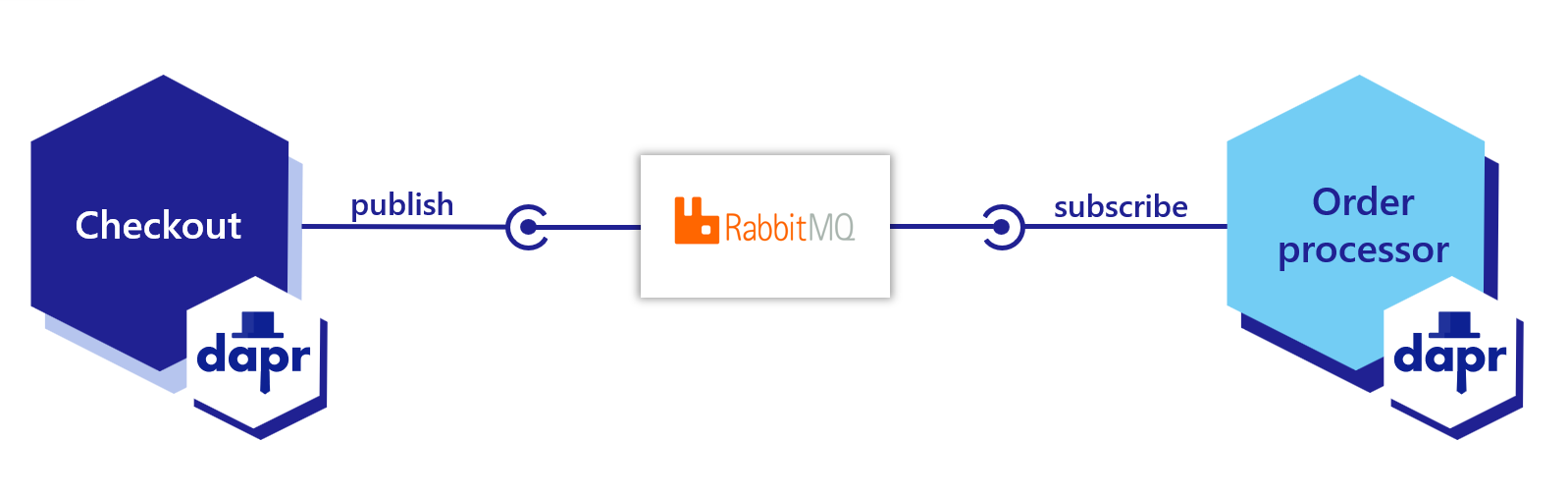

Now that you’ve learned what the Dapr pub/sub building block provides, learn how it can work in your service. The code example below loosely describes an application that processes orders with two services, each with a Dapr sidecar:

Dapr automatically wraps the user payload in a CloudEvents v1.0 compliant envelope, using the Content-Type header value for the datacontenttype attribute. Learn more about messages with CloudEvents.

The following example demonstrates how your applications publish and subscribe to a topic called orders.

The first step is to set up the pub/sub component:

When you run dapr init, Dapr creates a default Redis pubsub.yaml and runs a Redis container on your local machine. The file is located:

- On Windows, under %UserProfile%\.dapr\components\pubsub.yaml
- On Linux/macOS, under ~/.dapr/components/pubsub.yaml

With the pubsub.yaml component, you can easily swap out underlying components without application code changes. In this example, RabbitMQ is used.

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: order-pub-sub
spec:
  type: pubsub.rabbitmq
  version: v1
  metadata:
  - name: host
    value: "amqp://localhost:5672"
  - name: durable
    value: "false"
  - name: deletedWhenUnused
    value: "false"
  - name: autoAck
    value: "false"
  - name: reconnectWait
    value: "0"
  - name: concurrency
    value: parallel
scopes:
  - orderprocessing
  - checkout
```

You can override this file with another pubsub component by creating a components directory (in this example, myComponents) containing the file and using the flag --resources-path with the dapr run CLI command.

To deploy this into a Kubernetes cluster, fill in the metadata connection details of the pub/sub component in the YAML below, save as pubsub.yaml, and run kubectl apply -f pubsub.yaml.

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: order-pub-sub
spec:
  type: pubsub.rabbitmq
  version: v1
  metadata:
  - name: connectionString
    value: "amqp://localhost:5672"
  - name: protocol
    value: amqp
  - name: hostname
    value: localhost
  - name: username
    value: username
  - name: password
    value: password
  - name: durable
    value: "false"
  - name: deletedWhenUnused
    value: "false"
  - name: autoAck
    value: "false"
  - name: reconnectWait
    value: "0"
  - name: concurrency
    value: parallel
scopes:
  - orderprocessing
  - checkout
```

```bash
# .NET
dapr run --app-id myapp --resources-path ./myComponents -- dotnet run
# Java
dapr run --app-id myapp --resources-path ./myComponents -- mvn spring-boot:run
# Python
dapr run --app-id myapp --resources-path ./myComponents -- python3 app.py
# Go
dapr run --app-id myapp --resources-path ./myComponents -- go run app.go
# JavaScript
dapr run --app-id myapp --resources-path ./myComponents -- npm start
```

Dapr provides three methods by which you can subscribe to topics: declarative, streaming, and programmatic.

Learn more in the declarative, streaming, and programmatic subscriptions doc. This example demonstrates a declarative subscription.

Create a file named subscription.yaml and paste the following:

```yaml
apiVersion: dapr.io/v2alpha1
kind: Subscription
metadata:
  name: order-pub-sub
spec:
  topic: orders
  routes:
    default: /checkout
  pubsubname: order-pub-sub
scopes:
- orderprocessing
- checkout
```

The example above shows an event subscription to topic orders, for the pubsub component order-pub-sub.

- The routes field (here, its default route) tells Dapr to send all topic messages to the /checkout endpoint in the app.
- The scopes field enables this subscription for apps with IDs orderprocessing and checkout.

Place subscription.yaml in the same directory as your pubsub.yaml component. When Dapr starts up, it loads subscriptions along with the components.

To reload changed declarative subscriptions without restarting the sidecar, enable the HotReload feature gate.

To prevent reprocessing or loss of unprocessed messages, in-flight messages between Dapr and your application are unaffected during hot reload events.

Below are code examples that leverage Dapr SDKs to subscribe to the topic you defined in subscription.yaml.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using System;
using Microsoft.AspNetCore.Mvc;
using Dapr;
using Dapr.Client;

namespace CheckoutService.Controllers;

[ApiController]
public sealed class CheckoutServiceController : ControllerBase
{
    // Subscribe to a topic called "orders" from the "order-pub-sub" component
    [Topic("order-pub-sub", "orders")]
    [HttpPost("checkout")]
    public void GetCheckout([FromBody] int orderId)
    {
        Console.WriteLine("Subscriber received : " + orderId);
    }
}
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the subscriber application:

```bash
dapr run --app-id checkout --app-port 6002 --dapr-http-port 3602 --dapr-grpc-port 60002 --app-protocol https dotnet run
```

```java
// dependencies
import io.dapr.Topic;
import io.dapr.client.domain.CloudEvent;
import org.springframework.web.bind.annotation.*;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import reactor.core.publisher.Mono;

// code
@RestController
public class CheckoutServiceController {

    private static final Logger log = LoggerFactory.getLogger(CheckoutServiceController.class);

    // Subscribe to a topic
    @Topic(name = "orders", pubsubName = "order-pub-sub")
    @PostMapping(path = "/checkout")
    public Mono<Void> getCheckout(@RequestBody(required = false) CloudEvent<String> cloudEvent) {
        return Mono.fromRunnable(() -> {
            try {
                log.info("Subscriber received: " + cloudEvent.getData());
            } catch (Exception e) {
                throw new RuntimeException(e);
            }
        });
    }
}
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the subscriber application:

```bash
dapr run --app-id checkout --app-port 6002 --dapr-http-port 3602 --dapr-grpc-port 60002 mvn spring-boot:run
```

```python
# dependencies
from cloudevents.sdk.event import v1
from dapr.ext.grpc import App
import logging
import json

# code
app = App()
logging.basicConfig(level=logging.INFO)

# Subscribe to a topic
@app.subscribe(pubsub_name='order-pub-sub', topic='orders')
def mytopic(event: v1.Event) -> None:
    data = json.loads(event.Data())
    logging.info('Subscriber received: ' + str(data))

app.run(6002)
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the subscriber application:

```bash
dapr run --app-id checkout --app-port 6002 --dapr-http-port 3602 --app-protocol grpc -- python3 CheckoutService.py
```

```go
package main

// dependencies
import (
	"context"
	"log"
	"net/http"

	"github.com/dapr/go-sdk/service/common"
	daprd "github.com/dapr/go-sdk/service/http"
)

// code
var sub = &common.Subscription{
	PubsubName: "order-pub-sub",
	Topic:      "orders",
	Route:      "/checkout",
}

func main() {
	s := daprd.NewService(":6002")
	// Subscribe to a topic
	if err := s.AddTopicEventHandler(sub, eventHandler); err != nil {
		log.Fatalf("error adding topic subscription: %v", err)
	}
	if err := s.Start(); err != nil && err != http.ErrServerClosed {
		log.Fatalf("error listening: %v", err)
	}
}

func eventHandler(ctx context.Context, e *common.TopicEvent) (retry bool, err error) {
	log.Printf("Subscriber received: %s", e.Data)
	return false, nil
}
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the subscriber application:

```bash
dapr run --app-id checkout --app-port 6002 --dapr-http-port 3602 --dapr-grpc-port 60002 go run CheckoutService.go
```

```javascript
// dependencies
import { DaprServer, CommunicationProtocolEnum } from '@dapr/dapr';

// code
const daprHost = "127.0.0.1";
const serverHost = "127.0.0.1";
const serverPort = "6002";

start().catch((e) => {
  console.error(e);
  process.exit(1);
});

async function start() {
  const server = new DaprServer({
    serverHost,
    serverPort,
    communicationProtocol: CommunicationProtocolEnum.HTTP,
    clientOptions: {
      daprHost,
      daprPort: process.env.DAPR_HTTP_PORT,
    },
  });

  // Subscribe to a topic
  await server.pubsub.subscribe("order-pub-sub", "orders", async (orderId) => {
    console.log(`Subscriber received: ${JSON.stringify(orderId)}`);
  });

  await server.start();
}
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the subscriber application:

```bash
dapr run --app-id checkout --app-port 6002 --dapr-http-port 3602 --dapr-grpc-port 60002 npm start
```

Start an instance of Dapr with an app-id called orderprocessing:

```bash
dapr run --app-id orderprocessing --dapr-http-port 3601
```

Then publish a message to the orders topic:

```bash
dapr publish --publish-app-id orderprocessing --pubsub order-pub-sub --topic orders --data '{"orderId": "100"}'
```

```bash
curl -X POST http://localhost:3601/v1.0/publish/order-pub-sub/orders -H "Content-Type: application/json" -d '{"orderId": "100"}'
```

```powershell
Invoke-RestMethod -Method Post -ContentType 'application/json' -Body '{"orderId": "100"}' -Uri 'http://localhost:3601/v1.0/publish/order-pub-sub/orders'
```

Below are code examples that leverage Dapr SDKs to publish to a topic.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;
using Dapr.Client;
using System.Threading;

const string PUBSUB_NAME = "order-pub-sub";
const string TOPIC_NAME = "orders";

var builder = WebApplication.CreateBuilder(args);
builder.Services.AddDaprClient();
var app = builder.Build();

var random = new Random();
var client = app.Services.GetRequiredService<DaprClient>();

while (true)
{
    await Task.Delay(TimeSpan.FromSeconds(5));
    var orderId = random.Next(1, 1000);
    var source = new CancellationTokenSource();
    var cancellationToken = source.Token;
    // Using Dapr SDK to publish a topic
    await client.PublishEventAsync(PUBSUB_NAME, TOPIC_NAME, orderId, cancellationToken);
    Console.WriteLine("Published data: " + orderId);
}
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the publisher application:

```bash
dapr run --app-id orderprocessing --app-port 6001 --dapr-http-port 3601 --dapr-grpc-port 60001 --app-protocol https dotnet run
```

```java
// dependencies
import io.dapr.client.DaprClient;
import io.dapr.client.DaprClientBuilder;
import io.dapr.client.domain.Metadata;
import static java.util.Collections.singletonMap;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import java.util.Random;
import java.util.concurrent.TimeUnit;

// code
@SpringBootApplication
public class OrderProcessingServiceApplication {

    private static final Logger log = LoggerFactory.getLogger(OrderProcessingServiceApplication.class);

    public static void main(String[] args) throws Exception {
        String MESSAGE_TTL_IN_SECONDS = "1000";
        String TOPIC_NAME = "orders";
        String PUBSUB_NAME = "order-pub-sub";
        Random random = new Random();

        // Build one client and close it when the loop exits
        try (DaprClient client = new DaprClientBuilder().build()) {
            while (true) {
                TimeUnit.MILLISECONDS.sleep(5000);
                int orderId = random.nextInt(1000 - 1) + 1;
                // Using Dapr SDK to publish a topic
                client.publishEvent(
                        PUBSUB_NAME,
                        TOPIC_NAME,
                        orderId,
                        singletonMap(Metadata.TTL_IN_SECONDS, MESSAGE_TTL_IN_SECONDS)).block();
                log.info("Published data: " + orderId);
            }
        }
    }
}
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the publisher application:

```bash
dapr run --app-id orderprocessing --app-port 6001 --dapr-http-port 3601 --dapr-grpc-port 60001 mvn spring-boot:run
```

```python
# dependencies
import random
from time import sleep
import logging
import json
from dapr.clients import DaprClient

# code
logging.basicConfig(level=logging.INFO)

while True:
    sleep(random.randrange(50, 5000) / 1000)
    orderId = random.randint(1, 1000)
    PUBSUB_NAME = 'order-pub-sub'
    TOPIC_NAME = 'orders'
    with DaprClient() as client:
        # Using Dapr SDK to publish a topic
        result = client.publish_event(
            pubsub_name=PUBSUB_NAME,
            topic_name=TOPIC_NAME,
            data=json.dumps(orderId),
            data_content_type='application/json',
        )
    logging.info('Published data: ' + str(orderId))
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the publisher application:

```bash
dapr run --app-id orderprocessing --app-port 6001 --dapr-http-port 3601 --app-protocol grpc python3 OrderProcessingService.py
```

```go
package main

// dependencies
import (
	"context"
	"log"
	"math/rand"
	"strconv"
	"time"

	dapr "github.com/dapr/go-sdk/client"
)

// code
var (
	PUBSUB_NAME = "order-pub-sub"
	TOPIC_NAME  = "orders"
)

func main() {
	// Create one client and close it when main returns
	client, err := dapr.NewClient()
	if err != nil {
		panic(err)
	}
	defer client.Close()
	ctx := context.Background()

	for i := 0; i < 10; i++ {
		// time.Sleep takes a Duration; a bare 5000 would be 5000 nanoseconds
		time.Sleep(5 * time.Second)
		orderId := rand.Intn(1000-1) + 1
		// Using Dapr SDK to publish a topic
		if err := client.PublishEvent(ctx, PUBSUB_NAME, TOPIC_NAME, []byte(strconv.Itoa(orderId))); err != nil {
			panic(err)
		}
		log.Println("Published data: " + strconv.Itoa(orderId))
	}
}
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the publisher application:

```bash
dapr run --app-id orderprocessing --app-port 6001 --dapr-http-port 3601 --dapr-grpc-port 60001 go run OrderProcessingService.go
```

```javascript
// dependencies
import { DaprClient, CommunicationProtocolEnum } from '@dapr/dapr';

const daprHost = "127.0.0.1";

async function main() {
  for (let i = 0; i < 10; i++) {
    // Wait five seconds between publishes
    await sleep(5000);
    const orderId = Math.floor(Math.random() * (1000 - 1) + 1);
    await start(orderId);
  }
}

async function start(orderId) {
  const PUBSUB_NAME = "order-pub-sub";
  const TOPIC_NAME = "orders";
  const client = new DaprClient({
    daprHost,
    daprPort: process.env.DAPR_HTTP_PORT,
    communicationProtocol: CommunicationProtocolEnum.HTTP,
  });
  console.log("Published data: " + orderId);
  // Using Dapr SDK to publish a topic
  await client.pubsub.publish(PUBSUB_NAME, TOPIC_NAME, orderId);
}

function sleep(ms) {
  return new Promise(resolve => setTimeout(resolve, ms));
}

main().catch((e) => {
  console.error(e);
  process.exit(1);
});
```

Navigate to the directory containing the above code, then run the following command to launch both a Dapr sidecar and the publisher application:

```bash
dapr run --app-id orderprocessing --app-port 6001 --dapr-http-port 3601 --dapr-grpc-port 60001 npm start
```

To tell Dapr that a message was processed successfully, return a 200 OK response. If Dapr receives any status code other than 200, or if your app crashes, Dapr attempts to redeliver the message following at-least-once semantics.
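Outside the SDKs, a subscriber only has to answer the POST that Dapr sends to its route. The following standard-library sketch serves the /checkout route from the declarative subscription earlier in this section and acknowledges each message with 200 OK. The make_server helper is hypothetical. Port 6002 matches the dapr run commands above.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class CheckoutHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/checkout":
            self.send_response(404)
            self.send_header("Content-Length", "0")
            self.end_headers()
            return
        length = int(self.headers.get("Content-Length", 0))
        event = json.loads(self.rfile.read(length) or b"{}")
        print("Subscriber received:", event.get("data"))
        # 200 OK tells Dapr the message was processed; any other status
        # (or a crash) leads Dapr to redeliver it.
        self.send_response(200)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def make_server(port=6002):
    return ThreadingHTTPServer(("127.0.0.1", port), CheckoutHandler)


# make_server().serve_forever()  # run under `dapr run --app-port 6002 ...`
```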

Watch this demo video to learn more about pub/sub messaging with Dapr.

To enable message routing and provide additional context with each message, Dapr uses the CloudEvents 1.0 specification as its message format. Any message sent by an application to a topic using Dapr is automatically wrapped in a CloudEvents envelope, using the Content-Type header value for datacontenttype attribute.

Dapr uses CloudEvents to provide additional context to the event payload, enabling features like:

You can choose any of three methods for publishing a CloudEvent via pub/sub:

Sending a publish operation to Dapr automatically wraps it in a CloudEvent envelope containing the following fields:

- id
- source
- specversion
- type
- traceparent
- traceid
- tracestate
- topic
- pubsubname
- time
- datacontenttype (optional)

The following example demonstrates a CloudEvent generated by Dapr for a publish operation to the orders topic that includes:

- A traceid unique to the message
- The data and the fields for the CloudEvent, where the data content is serialized as JSON

```json
{
  "topic": "orders",
  "pubsubname": "order_pub_sub",
  "traceid": "00-113ad9c4e42b27583ae98ba698d54255-e3743e35ff56f219-01",
  "tracestate": "",
  "data": {
    "orderId": 1
  },
  "id": "5929aaac-a5e2-4ca1-859c-edfe73f11565",
  "specversion": "1.0",
  "datacontenttype": "application/json; charset=utf-8",
  "source": "checkout",
  "type": "com.dapr.event.sent",
  "time": "2020-09-23T06:23:21Z",
  "traceparent": "00-113ad9c4e42b27583ae98ba698d54255-e3743e35ff56f219-01"
}
```

As another example of a v1.0 CloudEvent, the following shows data as XML content in a CloudEvent message serialized as JSON:

```json
{
  "topic": "orders",
  "pubsubname": "order_pub_sub",
  "traceid": "00-113ad9c4e42b27583ae98ba698d54255-e3743e35ff56f219-01",
  "tracestate": "",
  "data" : "<note><to></to><from>user2</from><message>Order</message></note>",
  "id" : "id-1234-5678-9101",
  "specversion" : "1.0",
  "datacontenttype" : "text/xml",
  "subject" : "Test XML Message",
  "source" : "https://example.com/message",
  "type" : "xml.message",
  "time" : "2020-09-23T06:23:21Z"
}
```

Dapr automatically generates several CloudEvent properties. You can replace these generated CloudEvent properties by providing the following optional metadata key/value pairs:

- cloudevent.id: overrides id
- cloudevent.source: overrides source
- cloudevent.type: overrides type
- cloudevent.traceid: overrides traceid
- cloudevent.tracestate: overrides tracestate
- cloudevent.traceparent: overrides traceparent

The ability to replace CloudEvents properties using these metadata properties applies to all pub/sub components.
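The following sketch approximates the envelope that Dapr builds around a published payload, which makes the generated fields and the override keys concrete. It is an illustration, not Dapr's implementation. The trace fields are left out, and only the cloudevent.* keys listed above are honored as overrides. The wrap_in_cloudevent helper is hypothetical.

```python
import json
import uuid
from datetime import datetime, timezone

# Generated fields that the cloudevent.* metadata keys may replace.
OVERRIDABLE = {"id", "source", "type", "traceid", "tracestate", "traceparent"}


def wrap_in_cloudevent(data, *, app_id, pubsub_name, topic,
                       content_type="application/json", metadata=None):
    """Approximate the CloudEvents 1.0 envelope produced for a publish call."""
    event = {
        "id": str(uuid.uuid4()),
        "source": app_id,
        "specversion": "1.0",
        "type": "com.dapr.event.sent",
        "topic": topic,
        "pubsubname": pubsub_name,
        "time": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "datacontenttype": content_type,
        "data": data,
    }
    for key, value in (metadata or {}).items():
        field = key[len("cloudevent."):] if key.startswith("cloudevent.") else None
        if field in OVERRIDABLE:
            event[field] = value  # metadata wins over the generated value
    return event


event = wrap_in_cloudevent(
    {"orderId": 1}, app_id="checkout", pubsub_name="order_pub_sub", topic="orders",
    metadata={"cloudevent.source": "payment"},
)
print(json.dumps(event, indent=2))
```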

For example, to replace the source and id values from the CloudEvent example above in code:

```python
import json
from dapr.clients import DaprClient

# `i` is the order number from the surrounding publish loop (not shown)
with DaprClient() as client:
    order = {'orderId': i}
    # Publish an event/message using Dapr PubSub
    result = client.publish_event(
        pubsub_name='order_pub_sub',
        topic_name='orders',
        data=json.dumps(order),
        publish_metadata={'cloudevent.id': 'd99b228f-6c73-4e78-8c4d-3f80a043d317', 'cloudevent.source': 'payment'}
    )

    # or

    cloud_event = {
        'specversion': '1.0',
        'type': 'com.example.event',
        'source': 'payment',
        'id': 'd99b228f-6c73-4e78-8c4d-3f80a043d317',
        'data': {'orderId': i},
        'datacontenttype': 'application/json',
        # ...
    }

    # Set the data content type to 'application/cloudevents+json'
    result = client.publish_event(
        pubsub_name='order_pub_sub',
        topic_name='orders',
        data=json.dumps(cloud_event),
        data_content_type='application/cloudevents+json',
    )
```

```csharp
// `i` and the Order type come from the surrounding code (not shown)
var order = new Order(i);
using var client = new DaprClientBuilder().Build();

// Override cloudevent metadata
var metadata = new Dictionary<string, string>() {
    { "cloudevent.source", "payment" },
    { "cloudevent.id", "d99b228f-6c73-4e78-8c4d-3f80a043d317" }
};

// Publish an event/message using Dapr PubSub
await client.PublishEventAsync("order_pub_sub", "orders", order, metadata);
Console.WriteLine("Published data: " + order);

await Task.Delay(TimeSpan.FromSeconds(1));
```

The JSON payload then reflects the new source and id values:

```json
{
  "topic": "orders",
  "pubsubname": "order_pub_sub",
  "traceid": "00-113ad9c4e42b27583ae98ba698d54255-e3743e35ff56f219-01",
  "tracestate": "",
  "data": {
    "orderId": 1
  },
  "id": "d99b228f-6c73-4e78-8c4d-3f80a043d317",
  "specversion": "1.0",
  "datacontenttype": "application/json; charset=utf-8",
  "source": "payment",
  "type": "com.dapr.event.sent",
  "time": "2020-09-23T06:23:21Z",
  "traceparent": "00-113ad9c4e42b27583ae98ba698d54255-e3743e35ff56f219-01"
}
```

While you can replace traceid/traceparent and tracestate, doing this may interfere with tracing events and report inconsistent results in tracing tools. It’s recommended to use OpenTelemetry for distributed traces. Learn more about distributed tracing.

If you want to use your own CloudEvent, make sure to specify the datacontenttype as application/cloudevents+json.

If the CloudEvent that was authored by the app does not contain the minimum required fields in the CloudEvent specification, the message is rejected. Dapr adds the following fields to the CloudEvent if they are missing:

- time
- traceid
- traceparent
- tracestate
- topic
- pubsubname
- source
- type
- specversion

You can add additional fields to a custom CloudEvent that are not part of the official CloudEvent specification. Dapr will pass these fields as-is.
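The merge behavior described above can be sketched as follows: missing fields are added, while app-supplied and extra fields are kept. The default values, in particular for the trace fields, are placeholders assumed for illustration. The sidecar derives the real values from its own configuration and trace context. The fill_missing_fields helper and the myextension field are hypothetical.

```python
from datetime import datetime, timezone


def fill_missing_fields(event, *, app_id, pubsub_name, topic, traceparent=""):
    """Return a copy of an app-authored CloudEvent with absent fields added.

    Values already in `event`, including non-spec extension fields, win.
    """
    defaults = {
        "specversion": "1.0",
        "type": "com.dapr.event.sent",
        "source": app_id,
        "topic": topic,
        "pubsubname": pubsub_name,
        "time": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "traceparent": traceparent,  # placeholder trace context
        "traceid": traceparent,
        "tracestate": "",
    }
    return {**defaults, **event}


custom = {
    "id": "someCloudEventId",
    "source": "testcloudeventspubsub",
    "data": {"orderId": "100"},
    "myextension": "kept as-is",  # hypothetical extension field
}
completed = fill_missing_fields(custom, app_id="orderprocessing",
                                pubsub_name="order-pub-sub", topic="orders")
print(completed)
```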

Publish a CloudEvent to the orders topic using the Dapr CLI:

```bash
dapr publish --publish-app-id orderprocessing --pubsub order-pub-sub --topic orders --data '{"orderId": "100"}'
```

Publish a CloudEvent to the orders topic using curl:

```bash
curl -X POST http://localhost:3601/v1.0/publish/order-pub-sub/orders -H "Content-Type: application/cloudevents+json" -d '{"specversion" : "1.0", "type" : "com.dapr.cloudevent.sent", "source" : "testcloudeventspubsub", "subject" : "Cloud Events Test", "id" : "someCloudEventId", "time" : "2021-08-02T09:00:00Z", "datacontenttype" : "application/cloudevents+json", "data" : {"orderId": "100"}}'
```

Publish a CloudEvent to the orders topic using PowerShell:

```powershell
Invoke-RestMethod -Method Post -ContentType 'application/cloudevents+json' -Body '{"specversion" : "1.0", "type" : "com.dapr.cloudevent.sent", "source" : "testcloudeventspubsub", "subject" : "Cloud Events Test", "id" : "someCloudEventId", "time" : "2021-08-02T09:00:00Z", "datacontenttype" : "application/cloudevents+json", "data" : {"orderId": "100"}}' -Uri 'http://localhost:3601/v1.0/publish/order-pub-sub/orders'
```

When using cloud events created by Dapr, the envelope contains an id field which can be used by the app to perform message deduplication. Dapr does not handle deduplication automatically. Dapr supports using message brokers that natively enable message deduplication.
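Because delivery is at-least-once, a subscriber that must not act twice on the same message can track the id field itself. The following is a minimal in-memory sketch that assumes a single replica. With several replicas, the seen ids would need to live in shared storage such as a state store, which is left out here. The Deduplicator class and its capacity are hypothetical.

```python
from collections import OrderedDict


class Deduplicator:
    """Remember recently seen CloudEvent ids (bounded, in memory)."""

    def __init__(self, capacity=10_000):
        self.capacity = capacity
        self._seen = OrderedDict()

    def first_time(self, event_id):
        """Return True the first time an id is seen, False for repeats."""
        if event_id in self._seen:
            self._seen.move_to_end(event_id)
            return False
        self._seen[event_id] = None
        if len(self._seen) > self.capacity:
            self._seen.popitem(last=False)  # evict the oldest id
        return True


processed = []
dedup = Deduplicator(capacity=2)


def on_event(event):
    if dedup.first_time(event["id"]):
        processed.append(event["data"])
    # Report success either way so Dapr stops redelivering the duplicate.
    return {"status": "SUCCESS"}


for e in [{"id": "a", "data": 1}, {"id": "a", "data": 1}, {"id": "b", "data": 2}]:
    on_event(e)
print(processed)
```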

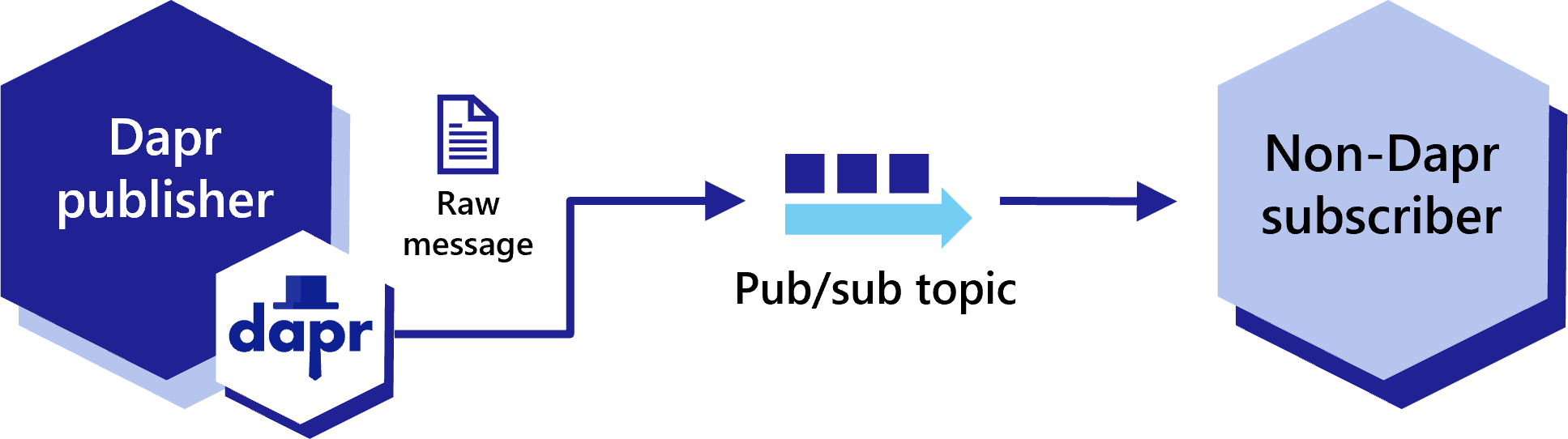

When adding Dapr to your application, some services may still need to communicate via pub/sub messages not encapsulated in CloudEvents, due to either compatibility reasons or some apps not using Dapr. These are referred to as “raw” pub/sub messages. Dapr enables apps to publish and subscribe to raw events not wrapped in a CloudEvent for compatibility and to send data that is not JSON serializable.

Dapr apps are able to publish raw events to pub/sub topics without CloudEvent encapsulation, for compatibility with non-Dapr apps.

To disable CloudEvent wrapping, set the rawPayload metadata to true as part of the publishing request. This allows subscribers to receive these messages without having to parse the CloudEvent schema.

```bash
curl -X "POST" http://localhost:3500/v1.0/publish/pubsub/TOPIC_A?metadata.rawPayload=true -H "Content-Type: application/json" -d '{"order-number": "345"}'
```

```csharp
using Dapr.Client;

var builder = WebApplication.CreateBuilder(args);
builder.Services.AddControllers().AddDapr();
var app = builder.Build();

app.MapPost("/publish", async (DaprClient daprClient) =>
{
    var message = new Message(
        Guid.NewGuid().ToString(),
        $"Hello at {DateTime.UtcNow}",
        DateTime.UtcNow
    );

    await daprClient.PublishEventAsync(
        "pubsub",    // pubsub name
        "messages",  // topic name
        message,     // message data
        new Dictionary<string, string>
        {
            { "rawPayload", "true" },
            { "content-type", "application/json" }
        }
    );

    return Results.Ok(message);
});

app.Run();

// Hypothetical message type for this example; the field names are illustrative
record Message(string Id, string Content, DateTime Timestamp);
```

```python
import json
from dapr.clients import DaprClient

with DaprClient() as d:
    req_data = {
        'order-number': '345'
    }
    # Create a typed message with content type and body
    resp = d.publish_event(
        pubsub_name='pubsub',
        topic_name='TOPIC_A',
        data=json.dumps(req_data),
        publish_metadata={'rawPayload': 'true'}
    )
    # Print the request
    print(req_data, flush=True)
```

```php
<?php

require_once __DIR__.'/vendor/autoload.php';

$app = \Dapr\App::create();
$app->run(function(\DI\FactoryInterface $factory) {
    $publisher = $factory->make(\Dapr\PubSub\Publish::class, ['pubsub' => 'pubsub']);
    $publisher->topic('TOPIC_A')->publish('data', ['rawPayload' => 'true']);
});
```

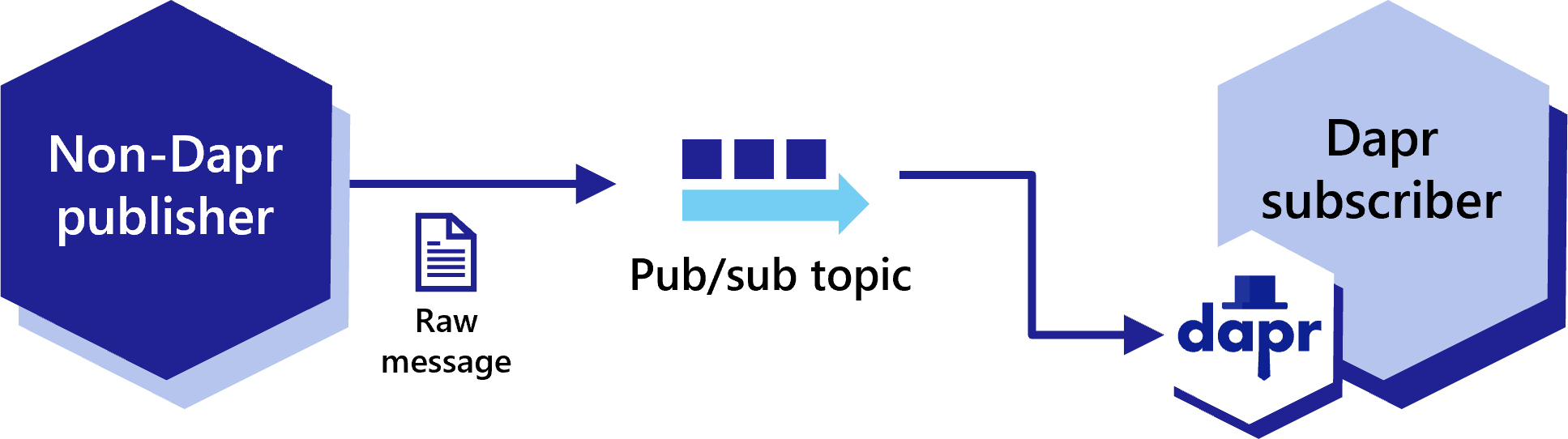

Dapr apps can subscribe to raw messages from pub/sub topics, even if they weren’t published as CloudEvents. However, the subscribing Dapr process still wraps these raw messages in a CloudEvent before delivering them to the subscribing application.

When subscribing programmatically, add the additional metadata entry for rawPayload so that the subscriber can receive messages that the publisher did not wrap in a CloudEvent. For .NET, this metadata entry is called isRawPayload.

When using raw payloads, the message is always base64 encoded with content type application/octet-stream.
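On the subscriber side, the original bytes then have to be recovered from the delivered envelope. The sketch below assumes the base64 text arrives in the data_base64 attribute, which is the CloudEvents JSON convention for binary data, and falls back to data otherwise. Verify the attribute name against the payloads your sidecar actually delivers. The extract_raw_payload helper is hypothetical.

```python
import base64
import json


def extract_raw_payload(cloud_event: dict) -> bytes:
    """Return the original bytes of a raw message wrapped in a CloudEvent."""
    encoded = cloud_event.get("data_base64", cloud_event.get("data", ""))
    return base64.b64decode(encoded)


# A delivery shaped like the one described above (values are illustrative).
incoming = {
    "datacontenttype": "application/octet-stream",
    "data_base64": base64.b64encode(b'{"order-number": "345"}').decode("ascii"),
}
payload = json.loads(extract_raw_payload(incoming))
print(payload)
```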

```csharp
using System.Text.Json;
using System.Text.Json.Serialization;

var builder = WebApplication.CreateBuilder(args);
var app = builder.Build();

app.MapGet("/dapr/subscribe", () =>
{
    var subscriptions = new[]
    {
        new
        {
            pubsubname = "pubsub",
            topic = "messages",
            route = "/messages",
            metadata = new Dictionary<string, string>
            {
                { "isRawPayload", "true" },
                { "content-type", "application/json" }
            }
        }
    };
    return Results.Ok(subscriptions);
});

app.MapPost("/messages", async (HttpContext context) =>
{
    using var reader = new StreamReader(context.Request.Body);
    var json = await reader.ReadToEndAsync();
    Console.WriteLine($"Raw message received: {json}");
    return Results.Ok();
});

app.Run();
```

```python
import flask
from flask import request, jsonify
from flask_cors import CORS
import json

app = flask.Flask(__name__)
CORS(app)

@app.route('/dapr/subscribe', methods=['GET'])
def subscribe():
    subscriptions = [{'pubsubname': 'pubsub',
                      'topic': 'deathStarStatus',
                      'route': 'dsstatus',
                      'metadata': {
                          'rawPayload': 'true',
                      } }]
    return jsonify(subscriptions)

@app.route('/dsstatus', methods=['POST'])
def ds_subscriber():
    print(request.json, flush=True)
    return json.dumps({'success':True}), 200, {'Content-Type':'application/json'}

app.run()
```

```php
<?php

require_once __DIR__.'/vendor/autoload.php';

$app = \Dapr\App::create(configure: fn(\DI\ContainerBuilder $builder) => $builder->addDefinitions(['dapr.subscriptions' => [
    new \Dapr\PubSub\Subscription(pubsubname: 'pubsub', topic: 'deathStarStatus', route: '/dsstatus', metadata: [ 'rawPayload' => 'true'] ),
]]));

$app->post('/dsstatus', function(
    #[\Dapr\Attributes\FromBody]
    \Dapr\PubSub\CloudEvent $cloudEvent,
    \Psr\Log\LoggerInterface $logger
    ) {
        $logger->alert('Received event: {event}', ['event' => $cloudEvent]);
        return ['status' => 'SUCCESS'];
    }
);

$app->start();
```

Similarly, you can subscribe to raw events declaratively by adding the rawPayload metadata entry to your subscription specification.

```yaml
apiVersion: dapr.io/v2alpha1
kind: Subscription
metadata:
  name: myevent-subscription
spec:
  topic: deathStarStatus
  routes:
    default: /dsstatus
  pubsubname: pubsub
  metadata:
    isRawPayload: "true"
scopes:
- app1
- app2
```

Pub/sub routing is an implementation of content-based routing, a messaging pattern that utilizes a DSL instead of imperative application code. With pub/sub routing, you use expressions to route CloudEvents (based on their contents) to different URIs/paths and event handlers in your application. If no route matches, then an optional default route is used. This proves useful as your applications expand to support multiple event versions or special cases.

While routing can be implemented with code, keeping routing rules external from the application can improve portability.

This feature is available to both the declarative and programmatic subscription approaches, but it does not apply to streaming subscriptions.
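The following plain-Python model shows how a subscription's routes could resolve to a single path for one event. It is not Dapr's implementation. Python predicates stand in for the CEL expressions. The model assumes rules are checked in the order they are listed, with the first match winning and the default path used when nothing matches. When there is no default, this sketch simply returns `None`. The `Rule` and `Routes` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional

# Hypothetical stand-in for a CEL expression: a predicate over the event dict.
Predicate = Callable[[Dict[str, Any]], bool]


@dataclass
class Rule:
    match: Predicate
    path: str


@dataclass
class Routes:
    rules: List[Rule] = field(default_factory=list)
    default: Optional[str] = None

    def select(self, event: Dict[str, Any]) -> Optional[str]:
        """Return the path of the first matching rule, else the default."""
        for rule in self.rules:
            if rule.match(event):
                return rule.path
        return self.default


# Mirrors the inventory subscription used in the examples in this section.
inventory_routes = Routes(
    rules=[
        Rule(lambda e: e.get("type") == "widget", "/widgets"),
        Rule(lambda e: e.get("type") == "gadget", "/gadgets"),
    ],
    default="/products",
)

print(inventory_routes.select({"type": "gadget"}))    # /gadgets
print(inventory_routes.select({"type": "sprocket"}))  # /products
```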

For declarative subscriptions, use dapr.io/v2alpha1 as the apiVersion. Here is an example of subscriptions.yaml using routing:

apiVersion: dapr.io/v2alpha1
kind: Subscription
metadata:
  name: myevent-subscription
spec:
  pubsubname: pubsub
  topic: inventory
  routes:
    rules:
      - match: event.type == "widget"
        path: /widgets
      - match: event.type == "gadget"
        path: /gadgets
    default: /products
scopes:
  - app1
  - app2

In the programmatic approach, the routes structure is returned instead of route. The JSON structure matches the declarative YAML:

import flask
from flask import request, jsonify
from flask_cors import CORS
import json
import sys

app = flask.Flask(__name__)
CORS(app)

@app.route('/dapr/subscribe', methods=['GET'])
def subscribe():
    subscriptions = [
        {
            'pubsubname': 'pubsub',
            'topic': 'inventory',
            'routes': {
                'rules': [
                    {
                        'match': 'event.type == "widget"',
                        'path': '/widgets'
                    },
                    {
                        'match': 'event.type == "gadget"',
                        'path': '/gadgets'
                    },
                ],
                'default': '/products'
            }
        }]
    return jsonify(subscriptions)

@app.route('/products', methods=['POST'])
def ds_subscriber():
    print(request.json, flush=True)
    return json.dumps({'success': True}), 200, {'Content-Type': 'application/json'}

app.run()

const express = require('express')
const bodyParser = require('body-parser')

const app = express()
app.use(bodyParser.json({ type: 'application/*+json' }));

const port = 3000

app.get('/dapr/subscribe', (req, res) => {
  res.json([
    {
      pubsubname: "pubsub",
      topic: "inventory",
      routes: {
        rules: [
          {
            match: 'event.type == "widget"',
            path: '/widgets'
          },
          {
            match: 'event.type == "gadget"',
            path: '/gadgets'
          },
        ],
        default: '/products'
      }
    }
  ]);
})

app.post('/products', (req, res) => {
  console.log(req.body);
  res.sendStatus(200);
});

app.listen(port, () => console.log(`consumer app listening on port ${port}!`))

[Topic("pubsub", "inventory", "event.type ==\"widget\"", 1)]
[HttpPost("widgets")]
public async Task<ActionResult<Stock>> HandleWidget(Widget widget, [FromServices] DaprClient daprClient)
{
    // Logic
    return stock;
}

[Topic("pubsub", "inventory", "event.type ==\"gadget\"", 2)]
[HttpPost("gadgets")]
public async Task<ActionResult<Stock>> HandleGadget(Gadget gadget, [FromServices] DaprClient daprClient)
{
    // Logic
    return stock;
}

[Topic("pubsub", "inventory")]
[HttpPost("products")]
public async Task<ActionResult<Stock>> HandleProduct(Product product, [FromServices] DaprClient daprClient)
{
    // Logic
    return stock;
}

package main

import (
	"encoding/json"
	"fmt"
	"log"
	"net/http"

	"github.com/gorilla/mux"
)

const appPort = 3000

type subscription struct {
	PubsubName string            `json:"pubsubname"`
	Topic      string            `json:"topic"`
	Metadata   map[string]string `json:"metadata,omitempty"`
	Routes     routes            `json:"routes"`
}

type routes struct {
	Rules   []rule `json:"rules,omitempty"`
	Default string `json:"default,omitempty"`
}

type rule struct {
	Match string `json:"match"`
	Path  string `json:"path"`
}

// This handles /dapr/subscribe
func configureSubscribeHandler(w http.ResponseWriter, _ *http.Request) {
	t := []subscription{
		{
			PubsubName: "pubsub",
			Topic:      "inventory",
			Routes: routes{
				Rules: []rule{
					{
						Match: `event.type == "widget"`,
						Path:  "/widgets",
					},
					{
						Match: `event.type == "gadget"`,
						Path:  "/gadgets",
					},
				},
				Default: "/products",
			},
		},
	}

	w.WriteHeader(http.StatusOK)
	json.NewEncoder(w).Encode(t)
}

func main() {
	router := mux.NewRouter().StrictSlash(true)
	router.HandleFunc("/dapr/subscribe", configureSubscribeHandler).Methods("GET")
	log.Fatal(http.ListenAndServe(fmt.Sprintf(":%d", appPort), router))
}

<?php

require_once __DIR__.'/vendor/autoload.php';

$app = \Dapr\App::create(configure: fn(\DI\ContainerBuilder $builder) => $builder->addDefinitions(['dapr.subscriptions' => [
    // The routes array mirrors the JSON/YAML structure. Passing it as a `routes`
    // argument assumes your dapr/php-sdk version's Subscription accepts one.
    new \Dapr\PubSub\Subscription(pubsubname: 'pubsub', topic: 'inventory', routes: [
        'rules' => [
            ['match' => 'event.type == "widget"', 'path' => '/widgets'],
            ['match' => 'event.type == "gadget"', 'path' => '/gadgets'],
        ],
        'default' => '/products',
    ]),
]]));

$app->post('/products', function(
    #[\Dapr\Attributes\FromBody]
    \Dapr\PubSub\CloudEvent $cloudEvent,
    \Psr\Log\LoggerInterface $logger
) {
    $logger->alert('Received event: {event}', ['event' => $cloudEvent]);
    return ['status' => 'SUCCESS'];
});

$app->start();

In these examples, the application is called on one of three paths, depending on event.type:

- /widgets, when event.type is "widget"
- /gadgets, when event.type is "gadget"
- /products, the default route used when no rule matches

The expressions are written in the Common Expression Language (CEL), where event represents the CloudEvent. Any of the attributes from the CloudEvents core specification can be referenced in the expression.

Match “important” messages:

has(event.data.important) && event.data.important == true

Match deposits greater than $10,000:

event.type == "deposit" && int(event.data.amount) > 10000

Note: if event.data.amount is not cast to an integer, the match is not performed. For more information, see the CEL documentation.

Match multiple versions of a message:

event.type == "mymessage.v1"

event.type == "mymessage.v2"
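You may want to sanity-check the first two sample expressions against test events before deploying them. One way is to mirror them as Python predicates. This is only an approximation of CEL semantics: `has()` is modeled as a dictionary key check, and a failed `int()` conversion is treated as "no match". The function names are hypothetical, and the real evaluation happens in the Dapr sidecar.

```python
from typing import Any, Dict


def is_important(event: Dict[str, Any]) -> bool:
    # CEL: has(event.data.important) && event.data.important == true
    data = event.get("data")
    return isinstance(data, dict) and data.get("important") is True


def is_large_deposit(event: Dict[str, Any]) -> bool:
    # CEL: event.type == "deposit" && int(event.data.amount) > 10000
    if event.get("type") != "deposit":
        return False
    data = event.get("data")
    if not isinstance(data, dict) or "amount" not in data:
        return False
    try:
        return int(data["amount"]) > 10000
    except (TypeError, ValueError):
        # Approximation: a value that cannot be converted never matches.
        return False


print(is_large_deposit({"type": "deposit", "data": {"amount": "15000"}}))  # True
print(is_large_deposit({"type": "deposit", "data": {"amount": "n/a"}}))    # False
```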

For reference, the following attributes are from the CloudEvents specification.

As defined by the term data, CloudEvents may include domain-specific information about the occurrence. When present, this information will be encapsulated within data.

Description: The event payload. It is encoded into a media format specified by the datacontenttype attribute (e.g. application/json), and adheres to the dataschema format when those respective attributes are present.

The following attributes are required in all CloudEvents:

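id

Type: String

Description: Identifies the event. Producers must ensure that source + id are unique for each distinct event. If a duplicate event is re-sent (e.g. due to a network error), it may have the same id. Consumers may assume that events with identical source and id are duplicates.

Constraints: Required. Must be a non-empty string.

source

Type: URI-reference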

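Description: Identifies the context in which an event happened. Often this includes information such as the type of the event source, the organization publishing the event, or the process that produced the event.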

The exact syntax and semantics behind the data encoded in the URI is defined by the event producer.

Producers must ensure that source + id are unique for each distinct event.

An application may:

- assign a unique source to each distinct producer, making it easier to produce unique IDs and preventing other producers from having the same source;
- use UUIDs, URNs, DNS authorities, or an application-specific scheme to create unique source identifiers.

A source may include more than one producer. In this case, the producers must collaborate to ensure that source + id are unique for each distinct event.

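Constraints:

- Required.
- Must be a non-empty URI-reference.
- An absolute URI is recommended.

Examples:

- Internet-wide unique URI with a DNS authority: https://github.com/cloudevents
- Application-specific identifiers: /cloudevents/spec/pull/123, /sensors/tn-1234567/alerts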
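Consumers may treat events with the same source and id as duplicates. A subscriber that must not process a redelivered message twice can therefore key an idempotency check on that pair. The sketch below keeps the seen keys in memory, which is an assumption made for brevity. A real service would typically need a shared, persistent store (for example, a Dapr state store) and an expiry policy. `DuplicateFilter` is a hypothetical name. Based on our reading of the runtime, envelopes generated for rawPayload subscriptions may receive a fresh id on each delivery, so this check only helps when the producer sets stable ids.

```python
from typing import Any, Dict, Set, Tuple


class DuplicateFilter:
    """In-memory de-duplication keyed on the CloudEvents (source, id) pair."""

    def __init__(self) -> None:
        self._seen: Set[Tuple[str, str]] = set()

    def is_new(self, event: Dict[str, Any]) -> bool:
        """Record the event's key and return True only the first time it is seen."""
        key = (event["source"], event["id"])
        if key in self._seen:
            return False
        self._seen.add(key)
        return True


dedup = DuplicateFilter()
first = {"source": "/orders", "id": "42", "type": "order.created"}
redelivered = dict(first)  # e.g. re-sent after a network error
other_source = {"source": "/billing", "id": "42", "type": "invoice.created"}

print(dedup.is_new(first))         # True
print(dedup.is_new(redelivered))   # False
print(dedup.is_new(other_source))  # True: same id, different source
```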

specversion

Type: String

Description: The version of the CloudEvents specification used by the event. This enables the interpretation of the context. Compliant event producers must use a value of 1.0 when referring to this version of the specification.

Currently, this attribute only includes the ‘major’ and ‘minor’ version numbers. This allows patch changes to the specification to be made without changing this property’s value in the serialization.

Note: for ‘release candidate’ releases, a suffix might be used for testing purposes.

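Constraints:

- Required.
- Must be a non-empty string.

type

Type: String

Description: Contains a value describing the type of event related to the originating occurrence. This attribute is often used for routing, observability, policy enforcement, and similar purposes. The format is producer-defined and might include information such as the version of the type. See Versioning of CloudEvents in the Primer for more information.

Constraints:

- Required.
- Must be a non-empty string.
- Should be prefixed with a reverse-DNS name.

The following attributes are optional to appear in CloudEvents. See the Notational Conventions section for more information on the definition of OPTIONAL.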

datacontenttype

Type: String per RFC 2046

Description: Content type of data value. This attribute enables data to carry any type of content, whereby format and encoding might differ from that of the chosen event format.

For example, an event rendered using the JSON envelope format might carry an XML payload in data. The consumer is informed by this attribute being set to "application/xml".

The rules for how data content is rendered for different datacontenttype values are defined in the event format specifications. For example, the JSON event format defines the relationship in section 3.1.

For some binary mode protocol bindings, this field is directly mapped to the respective protocol’s content-type metadata property. You can find normative rules for the binary mode and the content-type metadata mapping in the respective protocol.

In some event formats, you may omit the datacontenttype attribute. For example, if a JSON format event has no datacontenttype attribute, it’s implied that the data is a JSON value conforming to the "application/json" media type. In other words: a JSON-format event with no datacontenttype is exactly equivalent to one with datacontenttype="application/json".

When translating an event message with no datacontenttype attribute to a different format or protocol binding, the target datacontenttype should be set explicitly to the implied datacontenttype of the source.

Constraints:

- Optional.
- If present, must adhere to the format specified in RFC 2046.

For Media Type examples, see IANA Media Types.
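The defaulting rule for JSON-format events can be captured in a small helper. This is useful, for example, when re-encoding an event for another protocol binding where the content type must be set explicitly. The sketch covers only the JSON event format described above, and the function names are hypothetical.

```python
from typing import Any, Dict

JSON_MEDIA_TYPE = "application/json"


def effective_datacontenttype(event: Dict[str, Any]) -> str:
    """Content type to read a JSON-format event's data as.

    A JSON-format event without datacontenttype is equivalent to one
    with datacontenttype="application/json".
    """
    content_type = event.get("datacontenttype")
    return content_type if content_type is not None else JSON_MEDIA_TYPE


def with_explicit_datacontenttype(event: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy with the implied content type set explicitly, as
    recommended when translating to a different format or binding."""
    translated = dict(event)
    translated["datacontenttype"] = effective_datacontenttype(event)
    return translated


xml_event = {"specversion": "1.0", "datacontenttype": "application/xml", "data": "<a/>"}
json_event = {"specversion": "1.0", "data": {"a": 1}}
print(effective_datacontenttype(xml_event))                          # application/xml
print(with_explicit_datacontenttype(json_event)["datacontenttype"])  # application/json
```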

dataschema

Type: URI

Description: Identifies the schema that data adheres to. Incompatible changes to the schema should be reflected by a different URI. See Versioning of CloudEvents in the Primer for more information.

subject

Type: String

Description: This describes the event subject in the context of the event producer (identified by source). In publish-subscribe scenarios, a subscriber will typically subscribe to events emitted by a source. The source identifier alone might not be sufficient as a qualifier for any specific event if the source context has internal sub-structure.